

小牛编辑


2023-12-01

LRU Cache

Description

Design and implement a data structure for a Least Recently Used (LRU) cache. It should support the following operations: get and put (called set in older versions of this problem).

get(key) - Get the value (will always be positive) of the key if the key exists in the cache, otherwise return -1.

put(key, value) - Update the value if the key is already present; otherwise insert it. When the cache has reached its capacity, it should invalidate the least recently used item before inserting a new item.

Analysis

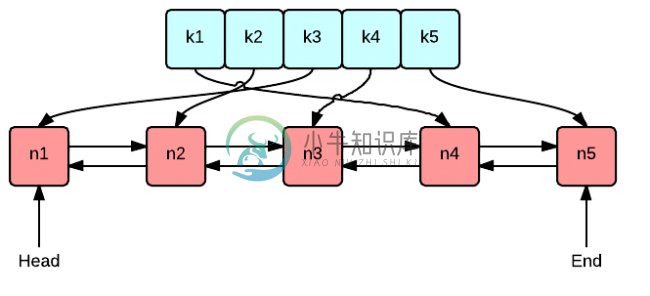

To make lookup, insertion and deletion all efficient, the key to this problem is to combine a doubly linked list with a HashMap, because:

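- The HashMap stores the address of each node, so a node can be found in O(1) expected time
- The doubly linked list can add and remove a node in O(1) time. A singly linked list cannot, because removing a node requires its predecessor, which a singly linked list can only find by traversing from the head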
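The O(1) versus O(n) difference in the second point can be illustrated with a small sketch. The class UnlinkDemo and its helpers are hypothetical and written only for this illustration. The doubly linked unlink touches only the node's two neighbours. The singly linked version has to walk from a dummy head to find the predecessor, and it reports how many links it followed.

```java
// Hypothetical demo, not part of the solution: compares removing a node
// from a doubly linked list with removing it from a singly linked list.
class UnlinkDemo {
    static final class DNode {          // doubly linked: knows both neighbours
        int val;
        DNode prev, next;
        DNode(int val) { this.val = val; }
    }

    static final class SNode {          // singly linked: knows only its successor
        int val;
        SNode next;
        SNode(int val) { this.val = val; }
    }

    // O(1): the node's own prev/next pointers are enough to splice it out.
    // Assumes dummy head/tail nodes, so prev and next are never null.
    static void unlink(DNode node) {
        node.prev.next = node.next;
        node.next.prev = node.prev;
        node.prev = node.next = null;
    }

    // O(n): the predecessor is unknown, so scan from the (dummy) head.
    // Returns how many links were followed before the predecessor was found.
    static int unlink(SNode head, SNode target) {
        int steps = 0;
        SNode cur = head;
        while (cur.next != null && cur.next != target) {
            cur = cur.next;
            steps++;
        }
        if (cur.next == target) cur.next = target.next;
        return steps;
    }

    // Links the given doubly linked nodes in order: a[0] <-> a[1] <-> ...
    static void link(DNode... a) {
        for (int i = 0; i + 1 < a.length; i++) {
            a[i].next = a[i + 1];
            a[i + 1].prev = a[i];
        }
    }
}
```

Removing the last of n real nodes costs n - 1 link hops in the singly linked case, regardless of having a reference to the node itself.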

Implementation details:

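- The closer a node is to the head of the list, the more recently it was accessed; the node at the tail is the least recently used

- When a node is accessed and it exists, move it to the head of the list and update its address in the hash table

- When inserting a node, if the cache size has reached capacity, delete the tail node and remove its entry from the hash table; then insert the new node at the head of the list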
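The three rules above can be checked against a deliberately naive model before looking at the real code. This is a sketch under our own assumptions: the class name NaiveLRU and the method keysByRecency() are hypothetical, and an ArrayDeque of keys stands in for the linked list. Moving a key to the head calls Deque.remove(Object), which scans the deque in O(n). That scan is exactly the cost the HashMap + doubly linked list design removes.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical naive model of the three rules: keys are ordered from most
// recently used (head) to least recently used (tail). get/put are O(n)
// because touch() scans the deque.
class NaiveLRU {
    private final int capacity;
    private final Deque<Integer> order = new ArrayDeque<>();     // head = most recent
    private final Map<Integer, Integer> values = new HashMap<>();

    NaiveLRU(int capacity) { this.capacity = capacity; }

    int get(int key) {
        Integer v = values.get(key);
        if (v == null) return -1;
        touch(key);                        // accessed key moves to the head
        return v;
    }

    void put(int key, int value) {
        if (capacity <= 0) return;         // nothing can be cached
        if (values.containsKey(key)) {
            values.put(key, value);
            touch(key);
            return;
        }
        if (values.size() >= capacity) {   // full: evict the tail (least recent)
            int lru = order.removeLast();
            values.remove(lru);
        }
        order.addFirst(key);               // new key goes to the head
        values.put(key, value);
    }

    // Moves key to the head; int is autoboxed, so this calls remove(Object).
    private void touch(int key) {
        order.remove(key);
        order.addFirst(key);
    }

    // Keys from most to least recently used, e.g. "[4, 3]".
    String keysByRecency() { return order.toString(); }
}
```

With capacity 2, the sequence put(1,1), put(2,2), get(1), put(3,3), put(4,4) evicts key 2 first (get(1) refreshed key 1) and then key 1, leaving the order [4, 3].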

Code

C++'s std::list is a doubly linked list, and its splice() method, which moves a single element in O(1) time, is very convenient here.

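Java also has a doubly linked list, LinkedList, but its internals are hidden: it offers no API for removing an element from the middle in O(1) time given only a stored reference. remove(Object) scans from the head in O(n), and a ListIterator saved in the map stops working (it is fail-fast) as soon as the list is modified through any other path. C++'s list, by contrast, has splice(), which is O(1), so the C++ solution can rely on splice(). In Java we therefore have to implement our own doubly linked list.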
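The point about LinkedList can be demonstrated directly. In this sketch (the class and method names are hypothetical) a ListIterator is saved, the way a HashMap value would have to store it, and then the list is changed through another path. The saved iterator's remove(), which would otherwise unlink in O(1), throws ConcurrentModificationException instead.

```java
import java.util.ConcurrentModificationException;
import java.util.LinkedList;
import java.util.ListIterator;

// Hypothetical demo: why java.util.LinkedList cannot replace a hand-written
// doubly linked list in the LRU cache.
class LinkedListLimitDemo {
    // Returns true if a stored iterator still works after the list was
    // structurally modified through another path (for LinkedList it does not).
    static boolean storedIteratorSurvives() {
        LinkedList<Integer> list = new LinkedList<>();
        list.add(1);
        list.add(2);
        ListIterator<Integer> saved = list.listIterator();
        saved.next();            // saved iterator now refers to element 1
        list.addFirst(0);        // e.g. another key is moved to the head
        try {
            saved.remove();      // O(1) unlink, but the iterator is now stale
            return true;
        } catch (ConcurrentModificationException e) {
            return false;        // fail-fast check rejects the stale iterator
        }
    }
}
```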

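Some people simply use LinkedHashMap, which makes the code shorter, but this is a shortcut, and an interviewer will almost certainly ask you to implement the structure yourself.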
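For comparison, the LinkedHashMap shortcut mentioned above could look roughly like this. It is a sketch, and the class name LRUCacheLinkedHashMap is ours. The parts that come from the JDK are the three-argument LinkedHashMap constructor, whose accessOrder = true flag makes get and put move an entry to the end of the iteration order, and the protected removeEldestEntry hook, which the map consults after each new insertion.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of the LinkedHashMap shortcut (class name is hypothetical).
class LRUCacheLinkedHashMap {
    private final Map<Integer, Integer> map;

    LRUCacheLinkedHashMap(int capacity) {
        // accessOrder = true: iteration order runs from least to most recently accessed
        map = new LinkedHashMap<Integer, Integer>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<Integer, Integer> eldest) {
                // Evict the least recently accessed entry once we exceed capacity
                return size() > capacity;
            }
        };
    }

    int get(int key) {
        return map.getOrDefault(key, -1);  // a hit also counts as an access
    }

    void put(int key, int value) {
        map.put(key, value);               // updates move the entry to the end too
    }
}
```

All of the list bookkeeping is hidden inside the JDK class, which is why this version is much shorter than the hand-written one.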

```java
// LRU Cache
// HashMap + Doubly Linked List
import java.util.HashMap;

public class LRUCache {
    private final int capacity;
    private final HashMap<Integer, Node> m;  // key -> node in the list
    private final DList list;                // head = most recent, tail = least recent

    public LRUCache(int capacity) {
        this.capacity = capacity;
        m = new HashMap<>();
        list = new DList();
    }

    // Time Complexity: O(1) expected
    public int get(int key) {
        Node node = m.get(key);
        if (node == null) return -1;
        update(node);                        // mark as most recently used
        return node.value;
    }

    // Time Complexity: O(1) expected
    public void put(int key, int value) {
        if (capacity <= 0) return;           // nothing can be cached
        Node node = m.get(key);
        if (node != null) {
            node.value = value;
            update(node);
        } else {
            if (m.size() >= capacity) {
                // Evict the least recently used node (just before the tail dummy)
                Node last = list.peekLast();
                m.remove(last.key);
                list.remove(last);
            }
            node = new Node(key, value);
            list.offerFirst(node);
            m.put(key, node);
        }
    }

    // Move node to the head of the list
    private void update(Node node) {
        list.remove(node);
        list.offerFirst(node);
    }

    // Node of doubly linked list
    static class Node {
        int key, value;
        Node prev, next;

        Node(int key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    // Doubly linked list
    static class DList {
        Node head, tail;
        int size;

        DList() {
            // head and tail are two dummy nodes
            head = new Node(0, 0);
            tail = new Node(0, 0);
            head.next = tail;
            tail.prev = head;
        }

        // Add a new node right after the head dummy
        void offerFirst(Node node) {
            head.next.prev = node;
            node.next = head.next;
            node.prev = head;
            head.next = node;
            size++;
        }

        // Remove a node in the middle in O(1); the dummies are never removed
        void remove(Node node) {
            if (node == null || node == head || node == tail) return;
            node.prev.next = node.next;
            node.next.prev = node.prev;
            size--;
        }

        // Remove and return the tail node, or null if the list is empty
        Node pollLast() {
            if (tail.prev == head) return null;
            Node last = tail.prev;
            remove(last);
            return last;
        }

        // Return the tail node without removing it, or null if the list is empty
        Node peekLast() {
            return tail.prev == head ? null : tail.prev;
        }
    }
}
```

```cpp
// LRU Cache
// Time complexity: O(1) on average per get/put (unordered_map lookup + list splice);
// space complexity: O(capacity)
#include <list>
#include <unordered_map>
using namespace std;

class LRUCache {
private:
    struct CacheNode {
        int key;
        int value;
        CacheNode(int k, int v) : key(k), value(v) {}
    };

public:
    LRUCache(int capacity) {
        this->capacity = capacity;
    }

    int get(int key) {
        auto it = cacheMap.find(key);
        if (it == cacheMap.end()) return -1;
        // Move the accessed node to the head of the list and update its address in the map
        // (splice relinks the node in O(1) without copying, so the iterator stays valid)
        cacheList.splice(cacheList.begin(), cacheList, it->second);
        it->second = cacheList.begin();
        return it->second->value;
    }

    void put(int key, int value) {
        if (capacity <= 0) return;  // nothing can be cached
        auto it = cacheMap.find(key);
        if (it == cacheMap.end()) {
            if (static_cast<int>(cacheList.size()) == capacity) {
                // Delete the tail node (the least recently used one)
                cacheMap.erase(cacheList.back().key);
                cacheList.pop_back();
            }
            // Insert the new node at the head of the list and add it to the map
            cacheList.push_front(CacheNode(key, value));
            cacheMap[key] = cacheList.begin();
        } else {
            // Update the value, move the node to the head, and update its address in the map
            it->second->value = value;
            cacheList.splice(cacheList.begin(), cacheList, it->second);
            it->second = cacheList.begin();
        }
    }

private:
    list<CacheNode> cacheList;  // doubly linked list
    unordered_map<int, list<CacheNode>::iterator> cacheMap;
    int capacity;
};
```