Getting Started

Installing TensorFlow

pip install tensorflow

Or run it in Docker:

# CPU only

docker run -it -p 8888:8888 tensorflow/tensorflow

# GPU version

nvidia-docker run -it -p 8888:8888 tensorflow/tensorflow:latest-gpu

A first look at TensorFlow

from __future__ import print_function, division

import tensorflow as tf

print('Loaded TF version', tf.__version__)

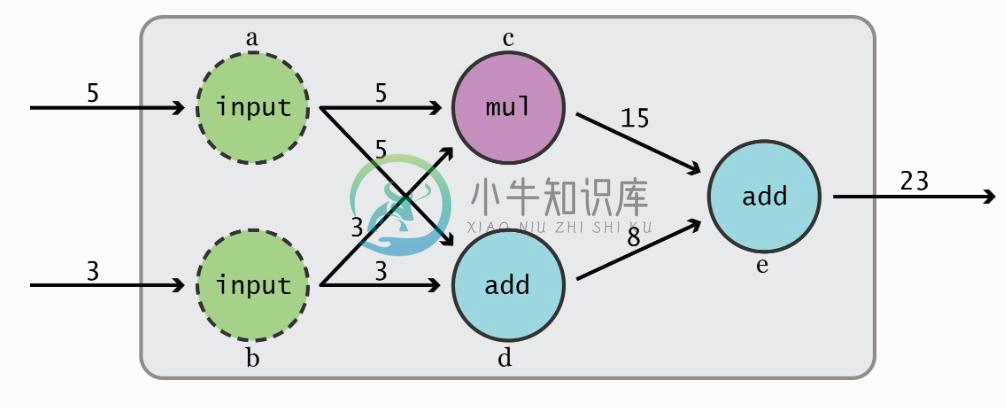

A simple example

import tensorflow as tf

a = tf.constant(5, name="input_a")
b = tf.constant(3, name="input_b")
c = tf.multiply(a, b, name="mul_c")
d = tf.add(a, b, name="add_d")
e = tf.add(c, d, name="add_e")

with tf.Session() as sess:
    print(sess.run(e))  # output => 23
    # Write the graph definition to disk so that TensorBoard can display it
    writer = tf.summary.FileWriter("./hello_graph", sess.graph)
    writer.close()

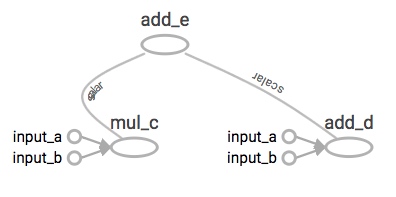

Next, start TensorBoard to view this graph (in a Jupyter notebook, run !tensorboard --logdir="hello_graph"):

tensorboard --logdir="hello_graph"

Open http://localhost:6006 and switch to the GRAPHS tab to see the generated graph:

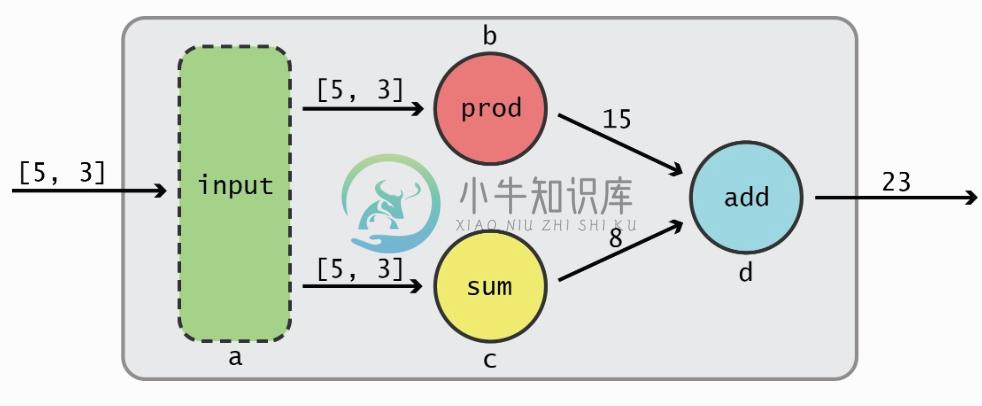

A simple Tensor example

Here is another example, this time with a vector as input:

import tensorflow as tf

a = tf.constant([5, 3], name="input_a")
b = tf.reduce_prod(a, name="prod_b")
c = tf.reduce_sum(a, name="sum_c")
d = tf.add(c, b, name="add_d")

with tf.Session() as sess:
    print(sess.run(d))  # => 23

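Basic types

All data in TensorFlow is represented as a Tensor, which can hold a single value, an array, or a multidimensional array. A Tensor has several important properties:

- Rank: the number of dimensions of the Tensor, e.g. scalar rank=0, vector rank=1, matrix rank=2

- Type: the data type, e.g. tf.float32, tf.uint8

- Shape: the shape of the Tensor, e.g. vector shape=[D0], matrix shape=[D0, D1]

Constants:

# Constant
a = tf.constant(2)
b = tf.constant(3)

with tf.Session() as sess:
    print(sess.run(a + b))  # => 5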

Variables can change during computation and are updated automatically by the optimizer during training. If you only want to update a variable manually, outside of TensorFlow's training, declare it as non-trainable, for example not_trainable = tf.Variable(0, trainable=False).

# Variable
# Variables maintain state across executions of the graph.
# The following example creates two variables and adds them.
v1 = tf.Variable(10)
v2 = tf.Variable(5)

with tf.Session() as sess:
    # Variables must be initialized first.
    tf.global_variables_initializer().run(session=sess)
    print(sess.run(v1 + v2))  # => 15

# Placeholder and feed

# Placeholder is used as Graph input when running session

# A feed temporarily replaces the output of an operation

# with a tensor value. You supply feed data as an argument

# to a run() call. The feed is only used for the run call

# to which it is passed. The most common use case involves

# designating specific operations to be "feed" operations

# by using tf.placeholder() to create them

a = tf.placeholder(tf.int16)

b = tf.placeholder(tf.int16)

# Define some operations
add = tf.add(a, b)
mul = tf.multiply(a, b)

with tf.Session() as sess:
    print(sess.run(add, feed_dict={a: 2, b: 3}))  # ==> 5
    print(sess.run(mul, feed_dict={a: 2, b: 3}))  # ==> 6

# Matrix

# Create a Constant op that produces a 1x2 matrix. The op is

# added as a node to the default graph.

#

# The value returned by the constructor represents the output

# of the Constant op.

matrix1 = tf.constant([[3., 3.]])

# Create another Constant that produces a 2x1 matrix.

matrix2 = tf.constant([[2.],[2.]])

# Create a Matmul op that takes 'matrix1' and 'matrix2' as inputs.

# The returned value, 'product', represents the result of the matrix

# multiplication.

product = tf.matmul(matrix1, matrix2)

with tf.Session() as sess:
    # eval() uses the session installed as default by the with block
    print(product.eval())  # => [[12.]]

Computation model

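TensorFlow represents a computation as a graph. The nodes of the graph are called ops (operations); each op takes zero or more Tensors as input and produces zero or more Tensors as output. To compute anything, the graph must be launched in a session, which dispatches the ops to devices such as CPUs and GPUs and returns the resulting Tensors after execution.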
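The split between building a graph and running it can be illustrated without TensorFlow at all. The following is a toy sketch in plain Python: `Node`, `constant`, `placeholder`, `add`, `multiply` and `run` are hypothetical names chosen for this illustration and are not TensorFlow APIs. It only mimics the idea that constructing nodes computes nothing, and that values appear once the graph is run, optionally with fed inputs.

```python
# Toy illustration only: every name here is hypothetical, not a TensorFlow API.

class Node:
    """A graph node: an operation plus the nodes whose outputs it consumes."""
    def __init__(self, op, inputs=(), value=None):
        self.op = op
        self.inputs = tuple(inputs)
        self.value = value

def constant(value):
    return Node("const", value=value)

def placeholder():
    return Node("placeholder")

def add(x, y):
    return Node("add", (x, y))

def multiply(x, y):
    return Node("mul", (x, y))

def run(node, feed_dict=None):
    """Evaluate `node` by recursively evaluating its inputs first."""
    feed_dict = feed_dict or {}
    if node in feed_dict:            # a fed value overrides the node's output
        return feed_dict[node]
    if node.op == "const":
        return node.value
    if node.op == "placeholder":
        raise ValueError("placeholder was not fed")
    args = [run(n, feed_dict) for n in node.inputs]
    if node.op == "add":
        return args[0] + args[1]
    if node.op == "mul":
        return args[0] * args[1]
    raise ValueError("unknown op: " + node.op)

# Building the graph computes nothing yet.
a = constant(5)
b = constant(3)
e = add(multiply(a, b), add(a, b))

# Only running the graph produces a value.
result = run(e)
print(result)  # 23

# A placeholder gets its value at run time through the feed dictionary.
x = placeholder()
doubled = multiply(x, constant(2))
fed = run(doubled, feed_dict={x: 21})
print(fed)  # 42
```

A real session additionally decides on which device each op runs and avoids recomputing shared subgraphs; the sketch leaves both out.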

Graph and Session

If no graph is specified, TensorFlow uses an automatically created default graph, which can be retrieved with tf.get_default_graph().

graph = tf.Graph()

with graph.as_default():
    value1 = tf.constant([1., 2.])
    value2 = tf.Variable([3., 4.])
    result = value1 * value2

To launch a graph, create a session and run the graph in that session:

# Use the custom graph
with tf.Session(graph=graph) as sess:
    tf.global_variables_initializer().run()
    print(sess.run(result))
    print(result.eval())

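Using the default graph:

sess = tf.Session()
# Run the graph in the session
print(sess.run(product))
# Close the session when done
sess.close()

A session must be closed after use to release its resources, either by calling sess.close() or by using a with block:

with tf.Session() as sess:
    print(sess.run(product))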

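A session detects the available devices automatically and uses the GPU when the machine has one. With multiple GPUs, however, TensorFlow only uses the first one by default; to use another device, assign ops to it explicitly with tf.device when building the graph:

with tf.device("/gpu:1"):
    # Device placement applies to ops created inside this block
    product = tf.matmul(matrix1, matrix2)

with tf.Session() as sess:
    print(sess.run(product))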

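In interactive environments such as IPython, tf.InteractiveSession can be used in place of tf.Session. It installs itself as the default session, so Tensor.eval() and Operation.run() can be called directly without passing a session.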

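Tensor

All data in TensorFlow is represented as a Tensor, which can hold a single value, an array, or a multidimensional array. A Tensor has several important properties:

- Rank: the number of dimensions of the Tensor, e.g.
  - scalar rank=0
  - vector rank=1
  - matrix rank=2
- Type: the data type, e.g.
  - tf.float32
  - tf.uint8
- Shape: the shape of the Tensor, e.g.
  - vector shape=[D0]
  - matrix shape=[D0, D1]

The relationship between rank and shape is shown in the table below: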

| Rank | Shape | Dimension number | Example |
|---|---|---|---|
| 0 | [] | 0-D | A 0-D tensor. A scalar. |
| 1 | [D0] | 1-D | A 1-D tensor with shape [5]. |
| 2 | [D0, D1] | 2-D | A 2-D tensor with shape [3, 4]. |
| 3 | [D0, D1, D2] | 3-D | A 3-D tensor with shape [1, 4, 3]. |
| n | [D0, D1, … Dn-1] | n-D | A tensor with shape [D0, D1, … Dn-1]. |
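To make the rank and shape relationship concrete, here is a small sketch in plain Python that derives both from a nested list. The helpers `shape_of` and `rank_of` are hypothetical names for this illustration, and the sketch assumes the nesting is regular (every sub-list at a given depth has the same length), which it does not verify.

```python
def shape_of(value):
    """Return the shape of a regularly nested list as a list of sizes."""
    shape = []
    while isinstance(value, list):
        shape.append(len(value))
        if not value:          # empty list: nothing further to descend into
            break
        value = value[0]       # assume all siblings have the same shape
    return shape

def rank_of(value):
    """The rank is simply the number of entries in the shape."""
    return len(shape_of(value))

scalar = 7
vector = [1, 2, 3, 4, 5]
matrix = [[0] * 4 for _ in range(3)]
cube = [[[0] * 3 for _ in range(4)]]

for name, value in [("scalar", scalar), ("vector", vector),
                    ("matrix", matrix), ("cube", cube)]:
    print(name, "rank =", rank_of(value), "shape =", shape_of(value))
```

The four values reproduce the first four rows of the table: shapes [], [5], [3, 4] and [1, 4, 3].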

Constant

A constant is a value that does not change during computation, for example:

a = tf.constant(2)
b = tf.constant(3)

with tf.Session() as sess:
    print(sess.run(a + b))  # Output => 5

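Variable

Variables can change during computation and are updated automatically by the optimizer during training, which makes them the usual choice for model parameters. An initial value must be given when a variable is defined.

If you only want to update a variable manually, outside of TensorFlow's training, declare it as non-trainable, for example not_trainable = tf.Variable(0, trainable=False).

v1 = tf.Variable(10)
v2 = tf.Variable(5)

with tf.Session() as sess:
    # Variables must be initialized first.
    tf.global_variables_initializer().run(session=sess)
    print(sess.run(v1 + v2))  # Output => 15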

Placeholder

Placeholders provide inputs to the computation graph and are commonly used to pass in training samples. Their values must be bound through the feed_dict argument of Session.run().

a = tf.placeholder(tf.int16)
b = tf.placeholder(tf.int16)

# Define some operations
add = tf.add(a, b)
mul = tf.multiply(a, b)

with tf.Session() as sess:
    print(sess.run(add, feed_dict={a: 2, b: 3}))  # ==> 5
    print(sess.run(mul, feed_dict={a: 2, b: 3}))  # ==> 6

Data types

TensorFlow has a rich set of data types, such as tf.int32 and tf.float64, which correspond to NumPy's types.

import tensorflow as tf
import numpy as np

a = np.array([2, 3], dtype=np.int32)
b = np.array([4, 5], dtype=np.int32)

# Use `tf.add()` to initialize an "add" Operation
c = tf.add(a, b)

with tf.Session() as sess:
    print(sess.run(c))  # ==> [6 8]

tf.convert_to_tensor(value, dtype=tf.float32) is a very useful conversion function, typically used when constructing new Operations. It accepts native Python types, NumPy data, and Tensors alike.

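Math operations

TensorFlow has many built-in math operations, including common numerical computations, matrix operations, and optimization algorithms.

import tensorflow as tf

# Use an interactive session for convenient display
sess = tf.InteractiveSession()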

x = tf.constant([[2, 5, 3, -5],

[0, 3,-2, 5],

[4, 3, 5, 3],

[6, 1, 4, 0]])

y = tf.constant([[4, -7, 4, -3, 4],

[6, 4,-7, 4, 7],

[2, 3, 2, 1, 4],

[1, 5, 5, 5, 2]])

floatx = tf.constant([[2., 5., 3., -5.],

[0., 3.,-2., 5.],

[4., 3., 5., 3.],

[6., 1., 4., 0.]])

print (tf.transpose(x).eval())

print (tf.matmul(x, y).eval())

print (tf.matrix_determinant(tf.to_float(x)).eval())

print (tf.matrix_inverse(tf.to_float(x)).eval())

print (tf.matrix_solve(tf.to_float(x), [[1],[1],[1],[1]]).eval())

Reduction

Reduction ops operate over the specified dimensions and return the result with those dimensions removed:

import tensorflow as tf

sess = tf.InteractiveSession()

x = tf.constant([[1, 2, 3],

[3, 2, 1],

[-1,-2,-3]])

boolean_tensor = tf.constant([[True, False, True],

[False, False, True],

[True, False, False]])

print (tf.reduce_prod(x).eval()) # => -216

print (tf.reduce_prod(x, reduction_indices=1).eval()) # => [6,6,-6]

print (tf.reduce_min(x, reduction_indices=1).eval()) # => [ 1 1 -3]

print (tf.reduce_max(x, reduction_indices=1).eval()) # => [ 3 3 -1]

print (tf.reduce_mean(x, reduction_indices=1).eval()) # => [ 2 2 -2]

# Computes the "logical and" of elements

print (tf.reduce_all(boolean_tensor, reduction_indices=1).eval()) # => [False False False]

# Computes the "logical or" of elements

print (tf.reduce_any(boolean_tensor, reduction_indices=1).eval()) # => [ True True True]

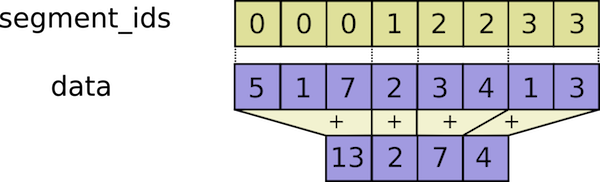
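What a reduction does along an axis can be checked by hand. The sketch below redoes a few of the reductions above in plain Python on the same matrix; `reduce_rows` and `reduce_all_elements` are hypothetical helper names for this illustration, not TensorFlow functions.

```python
from functools import reduce
import operator

x = [[1, 2, 3],
     [3, 2, 1],
     [-1, -2, -3]]

def reduce_rows(fn, matrix):
    """Collapse axis 1: fold a binary function across each row."""
    return [reduce(fn, row) for row in matrix]

def reduce_all_elements(fn, matrix):
    """Collapse every axis: fold a binary function across all elements."""
    return reduce(fn, [v for row in matrix for v in row])

total_prod = reduce_all_elements(operator.mul, x)
row_prod = reduce_rows(operator.mul, x)
row_min = reduce_rows(min, x)
row_max = reduce_rows(max, x)
# Collapsing axis 0 instead walks down the columns.
col_sum = [sum(col) for col in zip(*x)]

print(total_prod)  # -216
print(row_prod)    # [6, 6, -6]
print(row_min)     # [1, 1, -3]
print(row_max)     # [3, 3, -1]
print(col_sum)     # [3, 2, 1]
```

Reducing over axis 1 leaves one value per row, while reducing over axis 0 leaves one value per column; reducing over all axes leaves a scalar.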

Segmentation

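Segmentation ops split the input along its first dimension into groups according to the given segment_ids, apply the computation to each group, and return the reduced result: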

import tensorflow as tf

sess = tf.InteractiveSession()

seg_ids = tf.constant([0, 1, 1, 2, 2])  # Group indexes : 0|1,2|3,4

x = tf.constant([[2, 5, 3, -5],

[0, 3,-2, 5],

[4, 3, 5, 3],

[6, 1, 4, 0],

[6, 1, 4, 0]])

print (tf.segment_sum(x, seg_ids).eval())

print (tf.segment_prod(x, seg_ids).eval())

print (tf.segment_min(x, seg_ids).eval())

print (tf.segment_max(x, seg_ids).eval())

print (tf.segment_mean(x, seg_ids).eval())
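Since the listing above does not show its outputs, the following plain-Python sketch works through the grouping on the same data. `segment_reduce` is a hypothetical helper written for this illustration; it assumes the segment ids are sorted and have no gaps, and it is not how TensorFlow implements these ops.

```python
import operator

def segment_reduce(rows, segment_ids, fn):
    """Combine consecutive rows that share a segment id, column by column.

    Assumes segment_ids is sorted and contains no gaps.
    """
    result = []
    last_id = None
    for row, seg in zip(rows, segment_ids):
        if seg != last_id:
            # First row of a new segment starts a fresh accumulator
            result.append(list(row))
            last_id = seg
        else:
            result[-1] = [fn(acc, v) for acc, v in zip(result[-1], row)]
    return result

seg_ids = [0, 1, 1, 2, 2]
x = [[2, 5, 3, -5],
     [0, 3, -2, 5],
     [4, 3, 5, 3],
     [6, 1, 4, 0],
     [6, 1, 4, 0]]

seg_sum = segment_reduce(x, seg_ids, operator.add)
seg_max = segment_reduce(x, seg_ids, max)

print(seg_sum)  # [[2, 5, 3, -5], [4, 6, 3, 8], [12, 2, 8, 0]]
print(seg_max)  # [[2, 5, 3, -5], [4, 3, 5, 5], [6, 1, 4, 0]]
```

Five input rows collapse into three output rows, one per segment: row 0 alone, rows 1 and 2 together, and rows 3 and 4 together.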

Sequence

Sequence comparison and index extraction operations.

import tensorflow as tf

sess = tf.InteractiveSession()

x = tf.constant([[2, 5, 3, -5],

[0, 3,-2, 5],

[4, 3, 5, 3],

[6, 1, 4, 0]])

listx = tf.constant([1,2,5,3,4,5,6,7,8,3,2])

boolx = tf.constant([[True,False], [False,True]])

# Index of the minimum value in each column
print(tf.argmin(x, 0).eval())  # ==> [1 3 1 0]

# Index of the maximum value in each row
print(tf.argmax(x, 1).eval())  # ==> [1 3 2 0]

# Positions where the Tensor is True
# ==> [[0 0]
#      [1 1]]
print(tf.where(boolx).eval())

# Unique elements, in order of first occurrence
print(tf.unique(listx)[0].eval())  # ==> [1 2 5 3 4 6 7 8]

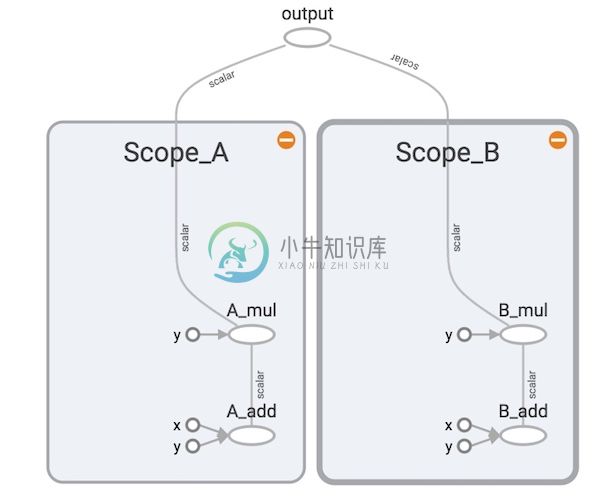
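The listing takes only element [0] of the tf.unique result; the second element is an index vector that maps every input position to its place in the unique list. The plain-Python sketch below mimics that pair of outputs and also rechecks the argmax result. `unique_with_index` and `argmax` are hypothetical helpers for this illustration.

```python
def unique_with_index(values):
    """Return (uniques, idx): uniques in order of first occurrence,
    and idx[i] giving the position of values[i] within uniques."""
    position = {}
    uniques = []
    idx = []
    for v in values:
        if v not in position:
            position[v] = len(uniques)
            uniques.append(v)
        idx.append(position[v])
    return uniques, idx

def argmax(row):
    """Index of the largest element; the first one wins on ties."""
    return max(range(len(row)), key=row.__getitem__)

listx = [1, 2, 5, 3, 4, 5, 6, 7, 8, 3, 2]
uniques, idx = unique_with_index(listx)
print(uniques)  # [1, 2, 5, 3, 4, 6, 7, 8]
print(idx)      # [0, 1, 2, 3, 4, 2, 5, 6, 7, 3, 1]

x = [[2, 5, 3, -5],
     [0, 3, -2, 5],
     [4, 3, 5, 3],
     [6, 1, 4, 0]]
row_argmax = [argmax(row) for row in x]
print(row_argmax)  # [1, 3, 2, 0]
```

Indexing `uniques` with `idx` reconstructs the original list, which is what makes the index vector useful.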

Name Scope

Name scopes group the ops of a complex computation into small named blocks, which makes a large graph easier to organize and easier to read in TensorBoard.

import tensorflow as tf

with tf.name_scope("Scope_A"):
    a = tf.add(1, 2, name="A_add")
    b = tf.multiply(a, 3, name="A_mul")

with tf.name_scope("Scope_B"):
    c = tf.add(4, 5, name="B_add")
    d = tf.multiply(c, 6, name="B_mul")

e = tf.add(b, d, name="output")

writer = tf.summary.FileWriter('./name_scope', graph=tf.get_default_graph())
writer.close()

A complete example

import tensorflow as tf

# Define a new Graph
graph = tf.Graph()

with graph.as_default():

    with tf.name_scope("variables"):
        # Variable to keep track of how many times the graph has been run
        global_step = tf.Variable(0, dtype=tf.int32, trainable=False, name="global_step")
        # Variable that keeps track of the sum of all output values over time
        total_output = tf.Variable(0.0, dtype=tf.float32, trainable=False, name="total_output")

    # Primary transformation Operations
    with tf.name_scope("transformation"):

        # Separate input layer
        with tf.name_scope("input"):
            # Create input placeholder; takes in a vector
            a = tf.placeholder(tf.float32, shape=[None], name="input_placeholder_a")

        # Separate middle layer
        with tf.name_scope("intermediate_layer"):
            b = tf.reduce_prod(a, name="product_b")
            c = tf.reduce_sum(a, name="sum_c")

        # Separate output layer
        with tf.name_scope("output"):
            output = tf.add(b, c, name="output")

    with tf.name_scope("update"):
        # Increments the total_output Variable by the latest output
        update_total = total_output.assign_add(output)
        # Increments the above `global_step` Variable, should be run whenever the graph is run
        increment_step = global_step.assign_add(1)

    # Summary Operations
    with tf.name_scope("summaries"):
        avg = tf.div(update_total, tf.cast(increment_step, tf.float32), name="average")
        # Creates summaries for output node
        tf.summary.scalar("Output", output)
        tf.summary.scalar("Sum_of_outputs_over_time", update_total)
        tf.summary.scalar("Average_of_outputs_over_time", avg)

    # Global Variables and Operations
    with tf.name_scope("global_ops"):
        # Initialization Op
        init = tf.global_variables_initializer()
        # Merge all summaries into one Operation
        merged_summaries = tf.summary.merge_all()

# Start a Session, using the explicitly created Graph
sess = tf.Session(graph=graph)

# Open a FileWriter to save summaries
writer = tf.summary.FileWriter('./improved_graph', graph)

# Initialize Variables
sess.run(init)

def run_graph(input_tensor):
    """
    Helper function; runs the graph with given input tensor and saves summaries
    """
    feed_dict = {a: input_tensor}
    _, step, summary = sess.run([output, increment_step, merged_summaries],
                                feed_dict=feed_dict)
    writer.add_summary(summary, global_step=step)

# Run graph with some inputs
run_graph([2, 8])
run_graph([3, 1, 3, 3])
run_graph([8])
run_graph([1, 2, 3])
run_graph([11, 4])
run_graph([4, 1])
run_graph([7, 3, 1])
run_graph([6, 3])
run_graph([0, 2])
run_graph([4, 5, 6])

# Flush summaries to disk
writer.flush()

# Close writer and session
writer.close()
sess.close()
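To see which numbers the three summaries should contain, the same arithmetic can be replayed in plain Python: each run outputs the product of the inputs plus their sum, and the running total and average are updated per step. The function `simulate` is a hypothetical helper for this illustration; it reproduces only the arithmetic, not the graph, the session, or the summary files.

```python
from functools import reduce
import operator

def simulate(inputs):
    """Replay the example: return (step, output, total, average) per run."""
    total = 0.0
    history = []
    for step, values in enumerate(inputs, start=1):
        output = reduce(operator.mul, values) + sum(values)  # product_b + sum_c
        total += output                                      # total_output
        history.append((step, output, total, total / step))  # average
    return history

inputs = [[2, 8], [3, 1, 3, 3], [8], [1, 2, 3], [11, 4],
          [4, 1], [7, 3, 1], [6, 3], [0, 2], [4, 5, 6]]

history = simulate(inputs)
for step, output, total, average in history:
    print(step, output, total, average)
```

The first run yields an output of 26 (16 + 10), and after the tenth run the total is 355 with an average of 35.5, which is what the TensorBoard scalar charts should show at their last step.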

TensorBoard graph:

TensorBoard events:

General framework

import tensorflow as tf

# initialize variables/model parameters

# define the training loop operations
def inference(X):
    # compute inference model over data X and return the result
    pass

def loss(X, Y):
    # compute loss over training data X and expected outputs Y
    pass

def inputs():
    # read/generate input training data X and expected outputs Y
    pass

def train(total_loss):
    # train / adjust model parameters according to computed total loss
    pass

def evaluate(sess, X, Y):
    # evaluate the resulting trained model
    pass

# Create a saver.
saver = tf.train.Saver()

# Launch the graph in a session, setup boilerplate
with tf.Session() as sess:
    X, Y = inputs()
    total_loss = loss(X, Y)
    train_op = train(total_loss)

    # Initialize once the whole graph is built, so that variables
    # created while defining the training op are covered as well
    tf.global_variables_initializer().run()

    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    # actual training loop
    training_steps = 1000
    for step in range(training_steps):
        sess.run([train_op])
        # for debugging and learning purposes, see how the loss gets decremented
        # through training steps
        if step % 10 == 0:
            print("loss:", sess.run([total_loss]))
        # save training checkpoints so that progress is not lost
        if step % 1000 == 0:
            saver.save(sess, 'my-model', global_step=step)

    evaluate(sess, X, Y)

    coord.request_stop()
    coord.join(threads)
    saver.save(sess, 'my-model', global_step=training_steps)

If training is interrupted, it can be resumed as follows:

import os

with tf.Session() as sess:
    # model setup....

    initial_step = 0

    # check whether a checkpoint has already been saved
    ckpt = tf.train.get_checkpoint_state(os.path.dirname(__file__))
    if ckpt and ckpt.model_checkpoint_path:
        # Restores from checkpoint
        saver.restore(sess, ckpt.model_checkpoint_path)
        initial_step = int(ckpt.model_checkpoint_path.rsplit('-', 1)[1])

    # actual training loop
    for step in range(initial_step, training_steps):
        # run each train step
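The resume logic relies on the checkpoint path ending in "-<step>", which is the suffix produced by passing global_step to saver.save above. The parsing step can be isolated and tested on its own, as in this plain-Python sketch. `step_from_checkpoint` is a hypothetical helper, and falling back to step 0 when no numeric suffix is found is an assumption of this sketch rather than something the original code does.

```python
def step_from_checkpoint(path):
    """Return the training step encoded after the last '-' in `path`.

    Falls back to 0 when the path has no numeric suffix (an assumption
    made for this sketch).
    """
    prefix, sep, suffix = path.rpartition("-")
    if not sep or not suffix.isdigit():
        return 0
    return int(suffix)

print(step_from_checkpoint("my-model-1000"))      # 1000
print(step_from_checkpoint("./ckpt/my-model-0"))  # 0
print(step_from_checkpoint("my-model"))           # 0 (no numeric suffix)
```

Splitting on the last "-" rather than the first matters here, because the model name itself ("my-model") already contains a dash.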