7. Practical Case Study - High Availability

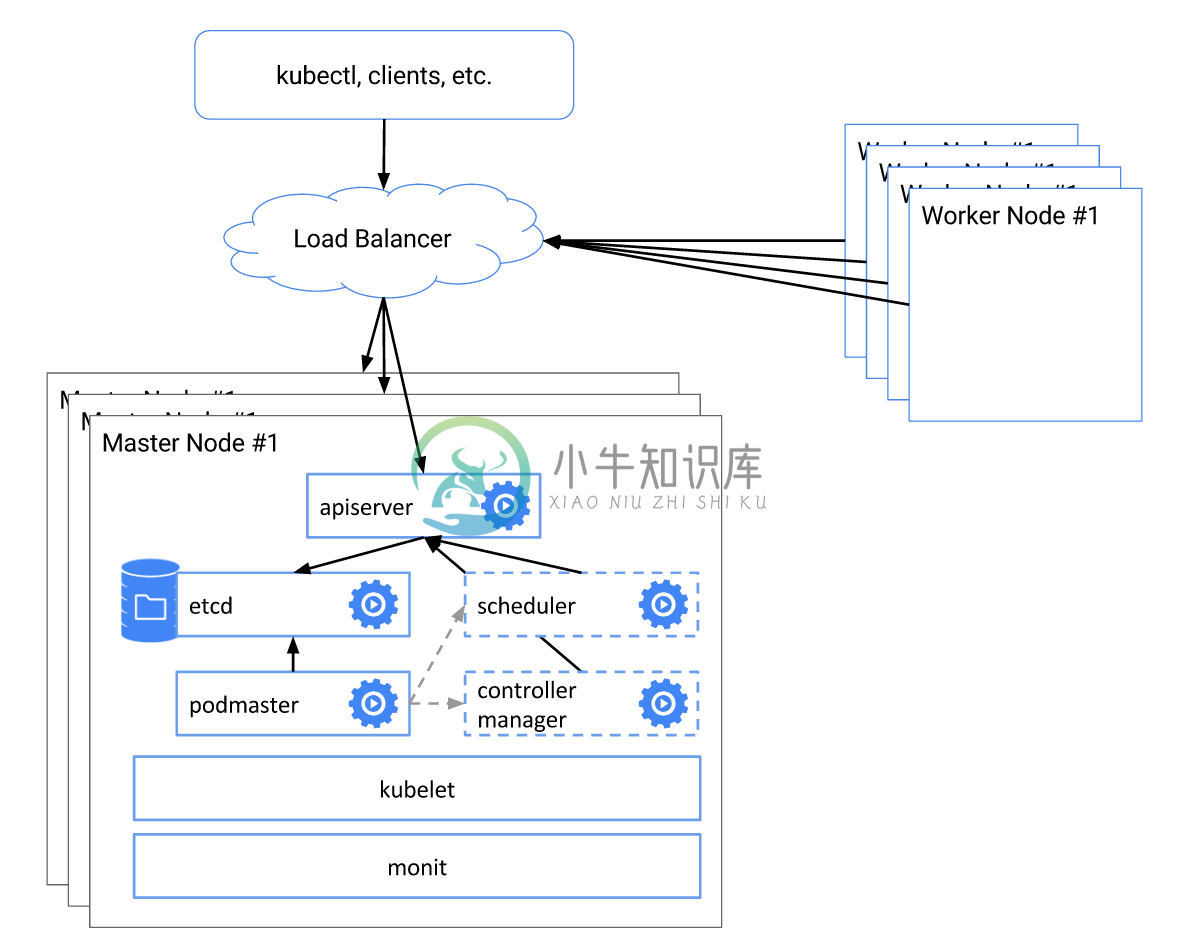

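Starting with Kubernetes 1.5, clusters deployed with kops or kube-up.sh are automatically set up as a highly available system, which includes:

- etcd running in cluster mode

- load balancing in front of the apiserver

- automatic leader election for the controller manager, scheduler and cluster autoscaler (exactly one active instance at any time)

The overall architecture is shown in the figure.

Note: the following steps assume that kubelet and Docker are already configured and running normally on every machine.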

Etcd cluster

Install cfssl

    # On all etcd nodes
    curl -o /usr/local/bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    curl -o /usr/local/bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    chmod +x /usr/local/bin/cfssl*

Generate the CA certs:

    # SSH etcd0
    mkdir -p /etc/kubernetes/pki/etcd
    cd /etc/kubernetes/pki/etcd
    cat >ca-config.json <<EOF
    {
        "signing": {
            "default": {"expiry": "43800h"},
            "profiles": {
                "server": {
                    "expiry": "43800h",
                    "usages": ["signing", "key encipherment", "server auth", "client auth"]
                },
                "client": {
                    "expiry": "43800h",
                    "usages": ["signing", "key encipherment", "client auth"]
                },
                "peer": {
                    "expiry": "43800h",
                    "usages": ["signing", "key encipherment", "server auth", "client auth"]
                }
            }
        }
    }
    EOF
    cat >ca-csr.json <<EOF
    {
        "CN": "etcd",
        "key": {"algo": "rsa", "size": 2048}
    }
    EOF
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

    # generate client certs
    cat >client.json <<EOF
    {
        "CN": "client",
        "key": {"algo": "ecdsa", "size": 256}
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client.json | cfssljson -bare client

Generate the etcd server/peer certs

    # Copy files to other etcd nodes
    mkdir -p /etc/kubernetes/pki/etcd
    cd /etc/kubernetes/pki/etcd
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/ca.pem .
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/ca-key.pem .
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/client.pem .
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/client-key.pem .
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/ca-config.json .

    # Run on all etcd nodes
    # PEER_NAME, PRIVATE_IP and PUBLIC_IP must already be set in the shell for this node
    cfssl print-defaults csr > config.json
    sed -i '0,/CN/{s/example.net/'"$PEER_NAME"'/}' config.json
    sed -i 's/www.example.net/'"$PRIVATE_IP"'/' config.json
    sed -i 's/example.net/'"$PUBLIC_IP"'/' config.json
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server config.json | cfssljson -bare server
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer config.json | cfssljson -bare peer
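
The sed commands above substitute $PEER_NAME, $PRIVATE_IP and $PUBLIC_IP into config.json, so an unset variable silently produces a certificate with empty names. A minimal sketch of a guard that could be run first is shown below; the helper name require_vars and the sample values are hypothetical and not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical helper: fail early if any variable used by the
# cert-generation steps is unset or empty.
require_vars() {
    missing=""
    for name in "$@"; do
        # Indirect lookup of the variable called $name (POSIX-compatible).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "unset variables:$missing" >&2
        return 1
    fi
}

# Example values only; replace them with this node's real name and addresses.
PEER_NAME=etcd0
PRIVATE_IP=10.0.0.10
PUBLIC_IP=203.0.113.10
require_vars PEER_NAME PRIVATE_IP PUBLIC_IP && echo "ok"
```

If the check fails, the function returns a non-zero status and lists the missing names on stderr, so it can gate the rest of a script with `require_vars ... || exit 1`.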

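Finally, run etcd. Write the following YAML into /etc/kubernetes/manifests/etcd.yaml on every etcd node, replacing:

- <podname> with the etcd node name (for example etcd0, etcd1 and etcd2)

- <etcd0-ip-address>, <etcd1-ip-address> and <etcd2-ip-address> with the private IP addresses of the etcd nodes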

    # ${PEER_NAME} and ${PRIVATE_IP} are expanded by the shell when the file is written,
    # so both must be set before running this command.
    cat >/etc/kubernetes/manifests/etcd.yaml <<EOF
    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        component: etcd
        tier: control-plane
      name: <podname>
      namespace: kube-system
    spec:
      containers:
      - command:
        # one list item per argument; flag and value are joined with "="
        - etcd
        - --name=${PEER_NAME}
        - --data-dir=/var/lib/etcd
        - --listen-client-urls=https://${PRIVATE_IP}:2379
        - --advertise-client-urls=https://${PRIVATE_IP}:2379
        - --listen-peer-urls=https://${PRIVATE_IP}:2380
        - --initial-advertise-peer-urls=https://${PRIVATE_IP}:2380
        - --cert-file=/certs/server.pem
        - --key-file=/certs/server-key.pem
        - --client-cert-auth
        - --trusted-ca-file=/certs/ca.pem
        - --peer-cert-file=/certs/peer.pem
        - --peer-key-file=/certs/peer-key.pem
        - --peer-client-cert-auth
        - --peer-trusted-ca-file=/certs/ca.pem
        - --initial-cluster=etcd0=https://<etcd0-ip-address>:2380,etcd1=https://<etcd1-ip-address>:2380,etcd2=https://<etcd2-ip-address>:2380
        - --initial-cluster-token=my-etcd-token
        - --initial-cluster-state=new
        image: gcr.io/google_containers/etcd-amd64:3.1.0
        # This probe uses plain HTTP although the client port above serves TLS only;
        # adjust it if the probe fails in your setup.
        livenessProbe:
          httpGet:
            path: /health
            port: 2379
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: etcd
        env:
        - name: PUBLIC_IP
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
        - name: PRIVATE_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: PEER_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        volumeMounts:
        - mountPath: /var/lib/etcd
          name: etcd
        - mountPath: /certs
          name: certs
      hostNetwork: true
      volumes:
      - hostPath:
          path: /var/lib/etcd
          type: DirectoryOrCreate
        name: etcd
      - hostPath:
          path: /etc/kubernetes/pki/etcd
        name: certs
    EOF
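
The --initial-cluster value must list every member exactly once under a unique name, and it is easy to mistype when edited by hand. As a rough sketch, the string could be generated from name=ip pairs instead; the function name build_initial_cluster and the sample addresses are hypothetical, and peer port 2380 is taken from the manifest above.

```shell
#!/bin/sh
# Hypothetical helper: build the --initial-cluster value from "name=ip" pairs
# and refuse duplicate member names.
build_initial_cluster() {
    result=""
    seen=" "
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        case "$seen" in
            *" $name "*)
                echo "duplicate member name: $name" >&2
                return 1 ;;
        esac
        seen="$seen$name "
        # Append with a comma separator once result is non-empty.
        result="${result:+$result,}$name=https://$ip:2380"
    done
    printf '%s\n' "$result"
}

# Example addresses only.
build_initial_cluster etcd0=10.0.0.10 etcd1=10.0.0.11 etcd2=10.0.0.12
```

The same string is needed on every node, so generating it once and pasting it into each manifest keeps the members consistent.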

Note: the static Pod approach requires kubelet to run on every etcd node. If you would rather not use kubelet, you can start etcd through systemd instead:

    export ETCD_VERSION=v3.1.10
    curl -sSL https://github.com/coreos/etcd/releases/download/${ETCD_VERSION}/etcd-${ETCD_VERSION}-linux-amd64.tar.gz | tar -xzv --strip-components=1 -C /usr/local/bin/
    rm -rf etcd-$ETCD_VERSION-linux-amd64*

    touch /etc/etcd.env
    echo "PEER_NAME=$PEER_NAME" >> /etc/etcd.env
    echo "PRIVATE_IP=$PRIVATE_IP" >> /etc/etcd.env

    cat >/etc/systemd/system/etcd.service <<EOF
    [Unit]
    Description=etcd
    Documentation=https://github.com/coreos/etcd
    Conflicts=etcd.service
    Conflicts=etcd2.service

    [Service]
    EnvironmentFile=/etc/etcd.env
    Type=notify
    Restart=always
    RestartSec=5s
    LimitNOFILE=40000
    TimeoutStartSec=0

    ExecStart=/usr/local/bin/etcd --name ${PEER_NAME} \
        --data-dir /var/lib/etcd \
        --listen-client-urls https://${PRIVATE_IP}:2379 \
        --advertise-client-urls https://${PRIVATE_IP}:2379 \
        --listen-peer-urls https://${PRIVATE_IP}:2380 \
        --initial-advertise-peer-urls https://${PRIVATE_IP}:2380 \
        --cert-file=/etc/kubernetes/pki/etcd/server.pem \
        --key-file=/etc/kubernetes/pki/etcd/server-key.pem \
        --client-cert-auth \
        --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.pem \
        --peer-cert-file=/etc/kubernetes/pki/etcd/peer.pem \
        --peer-key-file=/etc/kubernetes/pki/etcd/peer-key.pem \
        --peer-client-cert-auth \
        --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.pem \
        --initial-cluster etcd0=https://<etcd0-ip-address>:2380,etcd1=https://<etcd1-ip-address>:2380,etcd2=https://<etcd2-ip-address>:2380 \
        --initial-cluster-token my-etcd-token \
        --initial-cluster-state new

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl start etcd
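
Once the members are running, it may be worth confirming that each endpoint answers over TLS with the client certificate generated earlier. The sketch below wraps the etcdctl v3 `endpoint health` command; the function name etcd_health and the ETCDCTL override variable are hypothetical conveniences, and the certificate paths assume the /etc/kubernetes/pki/etcd layout used above.

```shell
#!/bin/sh
# Hypothetical helper: query endpoint health with the v3 API and the client cert.
# $1 is a comma-separated list of client URLs.
# ETCDCTL may be overridden (for example with "echo") for a dry run.
etcd_health() {
    ETCDCTL_API=3 "${ETCDCTL:-etcdctl}" \
        --endpoints="$1" \
        --cacert=/etc/kubernetes/pki/etcd/ca.pem \
        --cert=/etc/kubernetes/pki/etcd/client.pem \
        --key=/etc/kubernetes/pki/etcd/client-key.pem \
        endpoint health
}

# Usage on a node that has etcdctl and the certs (addresses are placeholders):
#   etcd_health https://<etcd0-ip-address>:2379,https://<etcd1-ip-address>:2379,https://<etcd2-ip-address>:2379
```

Setting ETCDCTL=echo before the call prints the arguments instead of contacting the cluster, which is a cheap way to review the command line first.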

kube-apiserver

Place kube-apiserver.yaml in /etc/kubernetes/manifests/ on every master node and put the related configuration files in /srv/kubernetes/. kubelet then creates and starts the apiserver automatically:

- basic_auth.csv - basic auth user and password

- ca.crt - Certificate Authority cert

- known_tokens.csv - tokens that entities (e.g. the kubelet) can use to talk to the apiserver

- kubecfg.crt - Client certificate, public key

- kubecfg.key - Client certificate, private key

- server.cert - Server certificate, public key

- server.key - Server certificate, private key

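After the apiservers are running, they still need a load balancer in front of them. You can use the elastic load balancing service of your cloud platform, or configure load balancing for the master nodes with haproxy, lvs or similar.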
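
For the haproxy option, a TCP pass-through configuration is usually enough because the apiservers terminate TLS themselves. The following sketch prints such a configuration for a list of master addresses; the function name, the frontend/backend names, the timeouts and the sample IPs are all illustrative assumptions, and port 6443 is assumed to be the apiserver secure port.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal TCP-mode haproxy configuration
# that balances across the given master IPs.
haproxy_apiserver_cfg() {
    cat <<EOF
defaults
    mode tcp
    timeout connect 5s
    timeout client 1m
    timeout server 1m

frontend kube-apiserver
    bind *:6443
    default_backend kube-masters

backend kube-masters
    balance roundrobin
EOF
    i=0
    for ip in "$@"; do
        # "check" enables a basic TCP health check for each master.
        echo "    server master$i $ip:6443 check"
        i=$((i + 1))
    done
}

# Example addresses only; redirect the output to a file to use it.
haproxy_apiserver_cfg 10.0.0.20 10.0.0.21 10.0.0.22
```

A single haproxy instance is itself a single point of failure, so in practice it would be paired with a floating IP or run on more than one host.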

If you deploy the cluster with kubeadm, the configuration above can be generated automatically:

    # on master0
    # copy etcd certs
    mkdir -p /etc/kubernetes/pki/etcd
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/ca.pem /etc/kubernetes/pki/etcd
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/client.pem /etc/kubernetes/pki/etcd
    scp root@<etcd0-ip-address>:/etc/kubernetes/pki/etcd/client-key.pem /etc/kubernetes/pki/etcd

    # deploy master0
    cat >config.yaml <<EOF
    apiVersion: kubeadm.k8s.io/v1alpha1
    kind: MasterConfiguration
    api:
      advertiseAddress: <private-ip>
    etcd:
      endpoints:
      - https://<etcd0-ip-address>:2379
      - https://<etcd1-ip-address>:2379
      - https://<etcd2-ip-address>:2379
      caFile: /etc/kubernetes/pki/etcd/ca.pem
      certFile: /etc/kubernetes/pki/etcd/client.pem
      keyFile: /etc/kubernetes/pki/etcd/client-key.pem
    networking:
      podSubnet: <podCIDR>
    apiServerCertSANs:
    - <load-balancer-ip>
    apiServerExtraArgs:
      apiserver-count: "3"
    EOF
    kubeadm init --config=config.yaml

    # on other master nodes
    scp root@<master0-ip-address>:/etc/kubernetes/pki/* /etc/kubernetes/pki
    # remove the copied apiserver cert so that kubeadm issues one for this node
    rm /etc/kubernetes/pki/apiserver.crt
    # then repeat all of the master0 steps above

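kube-controller-manager and kube-scheduler

kube-controller-manager and kube-scheduler must have only one active instance at any moment, which requires a leader election. Both therefore need to be started with --leader-elect=true, for example: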

    kube-scheduler --master=127.0.0.1:8080 --v=2 --leader-elect=true
    kube-controller-manager --master=127.0.0.1:8080 --cluster-cidr=10.245.0.0/16 --allocate-node-cidrs=true --service-account-private-key-file=/srv/kubernetes/server.key --v=2 --leader-elect=true

Then place kube-scheduler.yaml and kube-controller-manager.yaml in /etc/kubernetes/manifests/ on every master node.
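
To see which instance currently holds the lock, one option is to read the leader-election record. The sketch below assumes the Kubernetes versions discussed here, where the record is stored as JSON in the control-plane.alpha.kubernetes.io/leader annotation on an Endpoints object in kube-system; the function name leader_identity and the sample record are illustrative only.

```shell
#!/bin/sh
# Hypothetical helper: extract holderIdentity from a leader-election record
# (JSON) read on stdin.
leader_identity() {
    sed -n 's/.*"holderIdentity" *: *"\([^"]*\)".*/\1/p'
}

# Illustrative record, not captured from a real cluster.
sample='{"holderIdentity":"master1","leaseDurationSeconds":15,"leaderTransitions":2}'
printf '%s\n' "$sample" | leader_identity

# Against a live cluster (assumption: the record lives on the Endpoints object):
#   kubectl -n kube-system get endpoints kube-scheduler \
#     -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' \
#     | leader_identity
```

Stopping the reported leader and re-running the query after the lease expires is a simple way to confirm that another master takes over.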

kube-dns

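kube-dns can be deployed as a Deployment, and kubeadm creates it automatically by default. In large clusters, however, its resource limits need to be relaxed, for example: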

    dns_replicas: 6
    dns_cpu_limit: 100m
    dns_memory_limit: 512Mi
    dns_cpu_requests: 70m
    dns_memory_requests: 70Mi

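In addition, dnsmasq needs more resources as well, for example by raising its cache size to 10000 and its limit on concurrent forwarded queries with --dns-forward-max=1000.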

kube-proxy

By default kube-proxy uses iptables to load-balance Services, which introduces significant latency at large scale. Consider using IPVS as an alternative (note that IPVS is still beta in v1.9).
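
IPVS mode depends on kernel modules being loaded on each node. As a hedged sketch, the check below reports which of the commonly required modules are absent from lsmod-style input; the module list (ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh) is an assumption based on typical IPVS setups, the conntrack module is left out because its name varies by kernel, and the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the IPVS-related kernel modules that are missing
# from lsmod-style input on stdin (first line is the lsmod header).
missing_ipvs_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
        # -x matches the whole line, so ip_vs does not match ip_vs_rr.
        if ! printf '%s\n' "$loaded" | grep -qx "$mod"; then
            echo "$mod"
        fi
    done
}

# Sample input for illustration; prints ip_vs_wrr and ip_vs_sh.
missing_ipvs_modules <<'EOF'
Module                  Size  Used by
ip_vs_rr               16384  0
ip_vs                 151552  2 ip_vs_rr
EOF

# On a real node:  lsmod | missing_ipvs_modules
```

Any module reported as missing can typically be loaded with modprobe before switching the proxy mode.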

Also make sure that kube-proxy is configured to use the address of the kube-apiserver load balancer:

    kubectl get configmap -n kube-system kube-proxy -o yaml > kube-proxy-cm.yaml
    sed -i 's#server:.*#server: https://<masterLoadBalancerFQDN>:6443#g' kube-proxy-cm.yaml
    kubectl apply -f kube-proxy-cm.yaml --force
    # restart all kube-proxy pods to ensure that they load the new configmap
    kubectl delete pod -n kube-system -l k8s-app=kube-proxy

kubelet

kubelet must also be configured with the address of the kube-apiserver load balancer:

    sudo sed -i 's#server:.*#server: https://<masterLoadBalancerFQDN>:6443#g' /etc/kubernetes/kubelet.conf
    sudo systemctl restart kubelet
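
The sed expression used for both kube-proxy and kubelet replaces everything from "server:" to the end of the line and leaves the leading indentation untouched. A small sketch that applies the same substitution to stdin makes it possible to preview the result before editing files in place; the function name rewrite_server and the host name lb.example.internal are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: apply the same substitution as above to stdin,
# pointing every "server:" entry at https://<$1>:6443.
rewrite_server() {
    sed 's#server:.*#server: https://'"$1"':6443#g'
}

# Preview on a sample kubeconfig line (addresses are illustrative).
printf '    server: https://10.0.0.20:6443\n' | rewrite_server lb.example.internal
```

Running `rewrite_server <masterLoadBalancerFQDN> < /etc/kubernetes/kubelet.conf | diff /etc/kubernetes/kubelet.conf -` shows exactly which lines the in-place edit would change.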

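Data persistence

Beyond the configuration described above, persistent storage is also essential for a highly available Kubernetes cluster.

- For clusters deployed on a public cloud, consider the persistent storage offered by the cloud platform, such as AWS EBS or GCE Persistent Disk

- For clusters deployed on bare metal, consider network storage such as iSCSI, NFS, Gluster or Ceph; RAID is also an option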