ORM 实例

sqlacalchemy发行版包含各种代码示例,说明了一组选定的模式,有些是典型的,有些则不那么典型。所有这些都是可运行的,可以在 /examples 分发目录。所有的描述和源代码都可以在这里找到。

一些用户贡献的其他SQLAlChemy示例可以在wiki上找到,网址是 https://www.sqlalchemy.org/trac/wiki/UsageRecipes 。

作图食谱

邻接表

使用邻接列表模型映射的字典结构的字典示例。

例如。::

node = TreeNode('rootnode')

node.append('node1')

node.append('node3')

session.add(node)

session.commit()

dump_tree(node)文件列表:adjacency_list.py

联想

说明“关联对象”模式用法的示例,其中中间类调解多对多模式中关联的两个类之间的关系。

Listing of files:

proxied_association.py - Same example as basic_association, adding in usage of

sqlalchemy.ext.associationproxyto make explicit references toOrderItemoptional.basic_association.py - Illustrate a many-to-many relationship between an "Order" and a collection of "Item" objects, associating a purchase price with each via an association object called "OrderItem"

dict_of_sets_with_default.py - An advanced association proxy example which illustrates nesting of association proxies to produce multi-level Python collections, in this case a dictionary with string keys and sets of integers as values, which conceal the underlying mapped classes.

异步集成

示例说明SQLAlchemy的异步引擎功能。

Listing of files:

async_orm.py - Illustrates use of the sqlalchemy.ext.asyncio.AsyncSession object for asynchronous ORM use.

greenlet_orm.py - Illustrates use of the sqlalchemy.ext.asyncio.AsyncSession object for asynchronous ORM use, including the optional run_sync() method.

basic.py - Illustrates the asyncio engine / connection interface.

gather_orm_statements.py - Illustrates how to run many statements concurrently using

asyncio.gather()along many asyncio database connections, merging ORM results into a singleAsyncSession.

有向图

有向图结构的持久性示例。图形存储为一组边,每个边引用一个节点表中的“下”和“上”节点。基本的持久性和对上下邻居的查询说明如下:

n2 = Node(2) n5 = Node(5) n2.add_neighbor(n5) print(n2.higher_neighbors())

文件列表:directed_graph.py

作为词典的动态关系

说明如何将类似字典的外观放在“动态”关系之上,以便字典操作(假设使用简单的字符串键)可以在大型集合上操作,而无需一次加载整个集合。

文件列表:dynamic_dict.py

一般关联

说明将多种类型的父对象与特定子对象关联的各种方法。

这些示例都使用声明性扩展和声明性混合。每一个都在最后给出了相同的用例-两个类, Customer 和 Supplier ,都是 HasAddresses mixin,它确保父类具有 addresses 包含以下内容的集合 Address 物体。

这个 discriminator_on_association.py 和 generic_fk.py 脚本是2007年博客文章中提供的食谱的现代化版本 Polymorphic Associations with SQLAlchemy 。

Listing of files:

table_per_related.py - Illustrates a generic association which persists association objects within individual tables, each one generated to persist those objects on behalf of a particular parent class.

generic_fk.py - Illustrates a so-called "generic foreign key", in a similar fashion to that of popular frameworks such as Django, ROR, etc. This approach bypasses standard referential integrity practices, in that the "foreign key" column is not actually constrained to refer to any particular table; instead, in-application logic is used to determine which table is referenced.

table_per_association.py - Illustrates a mixin which provides a generic association via a individually generated association tables for each parent class. The associated objects themselves are persisted in a single table shared among all parents.

discriminator_on_association.py - Illustrates a mixin which provides a generic association using a single target table and a single association table, referred to by all parent tables. The association table contains a "discriminator" column which determines what type of parent object associates to each particular row in the association table.

大型收藏品

大型集合示例。

说明要与一起使用的选项 relationship() 当相关对象的列表非常大时,包括:

“动态”关系,查询访问的数据切片

如何结合使用删除层叠

passive_deletes=True大大提高了相关集合删除的性能。

文件列表:large_collection.py

物化路径

说明了使用SQLAlchemy ORM的分层数据的“物化路径”模式。

Listing of files:

materialized_paths.py - Illustrates the "materialized paths" pattern.

嵌套集合

说明了使用sqlacalchemy orm为层次数据实现“嵌套集”模式的基本方法。

Listing of files:

nested_sets.py - Celko's "Nested Sets" Tree Structure.

性能

用于各种SQLAlchemy用例的性能分析套件。

每个套件都关注具有特定性能概要和相关影响的特定用例:

大块镶块

单独插入,有或无交易

获取大量行

运行大量的短查询

所有套件都包括各种说明核心和ORM使用的使用模式,并且通常按照性能从最差到最大的顺序进行排序,与SQLAlchemy提供的功能量相反,从最大到最小(这两个东西通常完全对应)。

在包级别提供了一个命令行工具,允许运行单个套件::

$ python -m examples.performance --help

usage: python -m examples.performance [-h] [--test TEST] [--dburl DBURL]

[--num NUM] [--profile] [--dump]

[--echo]

{bulk_inserts,large_resultsets,single_inserts}

positional arguments:

{bulk_inserts,large_resultsets,single_inserts}

suite to run

optional arguments:

-h, --help show this help message and exit

--test TEST run specific test name

--dburl DBURL database URL, default sqlite:///profile.db

--num NUM Number of iterations/items/etc for tests;

default is module-specific

--profile run profiling and dump call counts

--dump dump full call profile (implies --profile)

--echo Echo SQL output示例运行如下:

$ python -m examples.performance bulk_inserts

或使用选项:

$ python -m examples.performance bulk_inserts \

--dburl mysql+mysqldb://scott:tiger@localhost/test \

--profile --num 1000参见

文件列表

Listing of files:

short_selects.py - This series of tests illustrates different ways to SELECT a single record by primary key

large_resultsets.py - In this series of tests, we are looking at time to load a large number of very small and simple rows.

bulk_inserts.py - This series of tests illustrates different ways to INSERT a large number of rows in bulk.

__main__.py - Allows the examples/performance package to be run as a script.

single_inserts.py - In this series of tests, we're looking at a method that inserts a row within a distinct transaction, and afterwards returns to essentially a "closed" state. This would be analogous to an API call that starts up a database connection, inserts the row, commits and closes.

bulk_updates.py - This series of tests illustrates different ways to UPDATE a large number of rows in bulk.

用时间运行所有测试

这是默认的运行形式:

$ python -m examples.performance single_inserts

Tests to run: test_orm_commit, test_bulk_save,

test_bulk_insert_dictionaries, test_core,

test_core_query_caching, test_dbapi_raw_w_connect,

test_dbapi_raw_w_pool

test_orm_commit : Individual INSERT/COMMIT pairs via the

ORM (10000 iterations); total time 13.690218 sec

test_bulk_save : Individual INSERT/COMMIT pairs using

the "bulk" API (10000 iterations); total time 11.290371 sec

test_bulk_insert_dictionaries : Individual INSERT/COMMIT pairs using

the "bulk" API with dictionaries (10000 iterations);

total time 10.814626 sec

test_core : Individual INSERT/COMMIT pairs using Core.

(10000 iterations); total time 9.665620 sec

test_core_query_caching : Individual INSERT/COMMIT pairs using Core

with query caching (10000 iterations); total time 9.209010 sec

test_dbapi_raw_w_connect : Individual INSERT/COMMIT pairs w/ DBAPI +

connection each time (10000 iterations); total time 9.551103 sec

test_dbapi_raw_w_pool : Individual INSERT/COMMIT pairs w/ DBAPI +

connection pool (10000 iterations); total time 8.001813 sec单个测试的转储配置文件

可以为所有测试或更常见的单个测试转储python概要文件输出:

$ python -m examples.performance single_inserts --test test_core --num 1000 --dump

Tests to run: test_core

test_core : Individual INSERT/COMMIT pairs using Core. (1000 iterations); total fn calls 186109

186109 function calls (186102 primitive calls) in 1.089 seconds

Ordered by: internal time, call count

ncalls tottime percall cumtime percall filename:lineno(function)

1000 0.634 0.001 0.634 0.001 {method 'commit' of 'sqlite3.Connection' objects}

1000 0.154 0.000 0.154 0.000 {method 'execute' of 'sqlite3.Cursor' objects}

1000 0.021 0.000 0.074 0.000 /Users/classic/dev/sqlalchemy/lib/sqlalchemy/sql/compiler.py:1950(_get_colparams)

1000 0.015 0.000 0.034 0.000 /Users/classic/dev/sqlalchemy/lib/sqlalchemy/engine/default.py:503(_init_compiled)

1 0.012 0.012 1.091 1.091 examples/performance/single_inserts.py:79(test_core)

...写自己的套房

探查器套件系统是可扩展的,可以应用于您自己的一组测试。这是一种很有价值的技术,可用于决定某些性能关键的例程集的正确方法。例如,如果我们想分析几种加载方式之间的差异,我们可以创建一个文件 test_loads.py ,内容如下:

from examples.performance import Profiler

from sqlalchemy import Integer, Column, create_engine, ForeignKey

from sqlalchemy.orm import relationship, joinedload, subqueryload, Session

from sqlalchemy.ext.declarative import declarative_base

Base = declarative_base()

engine = None

session = None

class Parent(Base):

__tablename__ = 'parent'

id = Column(Integer, primary_key=True)

children = relationship("Child")

class Child(Base):

__tablename__ = 'child'

id = Column(Integer, primary_key=True)

parent_id = Column(Integer, ForeignKey('parent.id'))

# Init with name of file, default number of items

Profiler.init("test_loads", 1000)

@Profiler.setup_once

def setup_once(dburl, echo, num):

"setup once. create an engine, insert fixture data"

global engine

engine = create_engine(dburl, echo=echo)

Base.metadata.drop_all(engine)

Base.metadata.create_all(engine)

sess = Session(engine)

sess.add_all([

Parent(children=[Child() for j in range(100)])

for i in range(num)

])

sess.commit()

@Profiler.setup

def setup(dburl, echo, num):

"setup per test. create a new Session."

global session

session = Session(engine)

# pre-connect so this part isn't profiled (if we choose)

session.connection()

@Profiler.profile

def test_lazyload(n):

"load everything, no eager loading."

for parent in session.query(Parent):

parent.children

@Profiler.profile

def test_joinedload(n):

"load everything, joined eager loading."

for parent in session.query(Parent).options(joinedload("children")):

parent.children

@Profiler.profile

def test_subqueryload(n):

"load everything, subquery eager loading."

for parent in session.query(Parent).options(subqueryload("children")):

parent.children

if __name__ == '__main__':

Profiler.main()我们可以直接运行新脚本:

$ python test_loads.py --dburl postgresql+psycopg2://scott:tiger@localhost/test Running setup once... Tests to run: test_lazyload, test_joinedload, test_subqueryload test_lazyload : load everything, no eager loading. (1000 iterations); total time 11.971159 sec test_joinedload : load everything, joined eager loading. (1000 iterations); total time 2.754592 sec test_subqueryload : load everything, subquery eager loading. (1000 iterations); total time 2.977696 sec

关系联接条件

各种各样的例子 relationship() 配置,利用 primaryjoin 组成特殊类型联接条件的参数。

Listing of files:

threeway.py - Illustrate a "three way join" - where a primary table joins to a remote table via an association table, but then the primary table also needs to refer to some columns in the remote table directly.

cast.py - Illustrate a

relationship()that joins two columns where those columns are not of the same type, and a CAST must be used on the SQL side in order to match them.

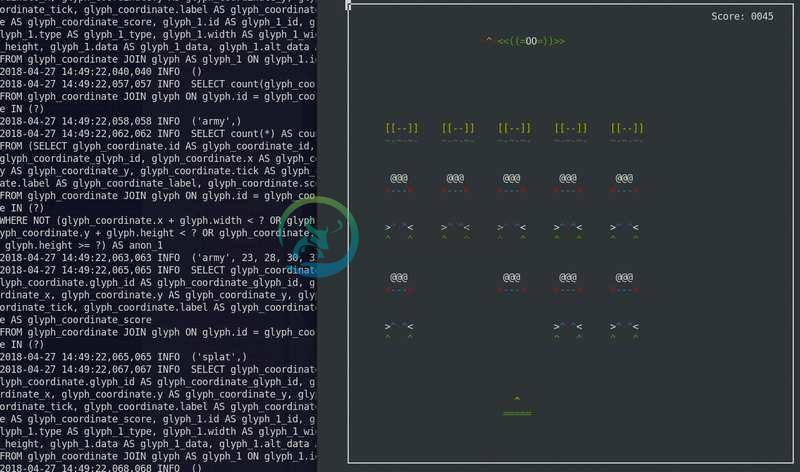

太空侵略者

使用sqlite作为状态机的空间入侵游戏。

最初开发于2012年。适合在Python3中工作。

使用ascii art在文本控制台中运行。

跑步::

python -m examples.space_invaders.space_invaders

运行时,请查看日志中的SQL输出:

tail -f space_invaders.log

享受!

文件列表:space_in侵略者.py

XML持久化

说明了在关系数据库中用elementtree表示的三种持久化和查询XML文档的策略。这些技术不直接将任何映射应用到elementtree对象,因此与本地的elementtree和lxml兼容,并且可以适应任何类型的DOM表示系统。还演示了沿着类似xpath的字符串进行查询。

例如。::

# parse an XML file and persist in the database

doc = ElementTree.parse("test.xml")

session.add(Document(file, doc))

session.commit()

# locate documents with a certain path/attribute structure

for document in find_document('/somefile/header/field2[@attr=foo]'):

# dump the XML

print(document)Listing of files:

pickle_type.py - illustrates a quick and dirty way to persist an XML document expressed using ElementTree and pickle.

adjacency_list.py - Illustrates an explicit way to persist an XML document expressed using ElementTree.

optimized_al.py - Uses the same strategy as

adjacency_list.py, but associates each DOM row with its owning document row, so that a full document of DOM nodes can be loaded using O(1) queries - the construction of the "hierarchy" is performed after the load in a non-recursive fashion and is more efficient.

版本控制对象

使用历史记录表进行版本控制

说明一个扩展,它为实体创建版本表,并存储每次更改的记录。给定的扩展生成一个匿名的“历史”类,它表示目标对象的历史版本。

相比于 使用临时行进行版本控制 将更新作为新行写入同一表的示例,而不使用单独的历史记录表。

使用通过单元测试模块进行说明。 test_versioning.py ,可以通过 pytest ::

# assume SQLAlchemy is installed where pytest is cd examples/versioned_history pytest test_versioning.py

使用声明性的示例用法片段:

from history_meta import Versioned, versioned_session

Base = declarative_base()

class SomeClass(Versioned, Base):

__tablename__ = 'sometable'

id = Column(Integer, primary_key=True)

name = Column(String(50))

def __eq__(self, other):

assert type(other) is SomeClass and other.id == self.id

Session = sessionmaker(bind=engine)

versioned_session(Session)

sess = Session()

sc = SomeClass(name='sc1')

sess.add(sc)

sess.commit()

sc.name = 'sc1modified'

sess.commit()

assert sc.version == 2

SomeClassHistory = SomeClass.__history_mapper__.class_

assert sess.query(SomeClassHistory).\

filter(SomeClassHistory.version == 1).\

all() \

== [SomeClassHistory(version=1, name='sc1')]这个 Versioned mixin设计用于声明性。要将扩展与经典映射器一起使用,请 _history_mapper 可以应用函数:

from history_meta import _history_mapper m = mapper(SomeClass, sometable) _history_mapper(m) SomeHistoryClass = SomeClass.__history_mapper__.class_

版本控制示例还与ORM乐观并发特性集成在 配置版本计数器 . 要启用此功能,请设置标志 Versioned.use_mapper_versioning 为真:

class SomeClass(Versioned, Base):

__tablename__ = 'sometable'

use_mapper_versioning = True

id = Column(Integer, primary_key=True)

name = Column(String(50))

def __eq__(self, other):

assert type(other) is SomeClass and other.id == self.id如果 SomeClass 如果数据库隔离级别允许两个UPDATE语句继续进行,那么其中一个将失败,因为它不再针对最后一个已知的版本标识符。

Listing of files:

history_meta.py - Versioned mixin class and other utilities.

test_versioning.py - Unit tests illustrating usage of the

history_meta.pymodule functions.

使用临时行进行版本控制

几个例子说明了截取更改的技术,这些更改首先被解释为行的更新,而不是将其转换为新行的插入,使前一行作为历史版本保持不变。

相比于 使用历史记录表进行版本控制 将历史记录行写入单独的历史记录表的示例。

Listing of files:

versioned_rows_w_versionid.py - Illustrates a method to intercept changes on objects, turning an UPDATE statement on a single row into an INSERT statement, so that a new row is inserted with the new data, keeping the old row intact.

versioned_rows.py - Illustrates a method to intercept changes on objects, turning an UPDATE statement on a single row into an INSERT statement, so that a new row is inserted with the new data, keeping the old row intact.

versioned_map.py - A variant of the versioned_rows example built around the concept of a "vertical table" structure, like those illustrated in 垂直属性映射 examples.

versioned_update_old_row.py - Illustrates the same UPDATE into INSERT technique of

versioned_rows.py, but also emits an UPDATE on the old row to affect a change in timestamp. Also includes aSessionEvents.do_orm_execute()hook to limit queries to only the most recent version.

垂直属性映射

说明“垂直表”映射。

“垂直表”是指将对象的各个属性存储为表中不同行的技术。“垂直表”技术用于持久化具有各种属性集的对象,而代价是简单的查询控制和简洁性。在内容/文档管理系统中,为了灵活地表示用户创建的结构,通常会出现这种情况。

给出了该方法的两种变体。在第二行中,每一行引用一个“数据类型”,其中包含有关存储在属性中的信息类型的信息,如整数、字符串或日期。

例子::

shrew = Animal(u'shrew')

shrew[u'cuteness'] = 5

shrew[u'weasel-like'] = False

shrew[u'poisonous'] = True

session.add(shrew)

session.flush()

q = (session.query(Animal).

filter(Animal.facts.any(

and_(AnimalFact.key == u'weasel-like',

AnimalFact.value == True))))

print('weasel-like animals', q.all())Listing of files:

dictlike-polymorphic.py - Mapping a polymorphic-valued vertical table as a dictionary.

dictlike.py - Mapping a vertical table as a dictionary.

继承映射配方

基本继承映射

如中所述,单表、联接表和具体表继承的工作示例 映射类继承层次结构 .

Listing of files:

concrete.py - Concrete-table (table-per-class) inheritance example.

single.py - Single-table (table-per-hierarchy) inheritance example.

joined.py - Joined-table (table-per-subclass) inheritance example.

特殊API

属性检测

示例说明对SQLAlchemy属性管理系统的修改。

Listing of files:

active_column_defaults.py - Illustrates use of the

AttributeEvents.init_scalar()event, in conjunction with Core column defaults to provide ORM objects that automatically produce the default value when an un-set attribute is accessed.custom_management.py - Illustrates customized class instrumentation, using the

sqlalchemy.ext.instrumentationextension package.listen_for_events.py - Illustrates how to attach events to all instrumented attributes and listen for change events.

水平切分

使用SQLAlchemy Sharding API的基本示例。切分是指在多个数据库之间水平缩放数据。

“切分”映射的基本组件包括:

倍数

Engine实例,每个实例都分配了一个“shard id”。这些Engine实例可能引用不同的数据库,或同一数据库中的不同模式/帐户,或者甚至可以仅通过选项来区分它们,这些选项将导致它们在使用时访问不同的模式或表。一个可以返回单个shard id的函数,给定一个要保存的实例;这称为“shard选择器”。

可以返回应用于特定实例标识符的碎片ID列表的函数,称为“ID选择器”。如果它返回所有碎片ID,将搜索所有碎片。

给定特定查询(“查询选择器”),可以返回要尝试的碎片ID列表的函数。如果返回所有碎片ID,将查询所有碎片并将结果连接在一起。

在这些例子中,不同种类的碎片用于同一个基本示例,该示例在每个大陆上容纳天气数据。我们提供了示例shard_chooser、id_chooser和query_chooser函数。查询选择器演示了对SQL表达式元素的检查,以便尝试确定请求的单个shard。

构造通用分片例程是解决在多个数据库之间组织实例问题的一种雄心勃勃的方法。对于更直白的替代方法,“不同实体”方法是一种以显式方式将对象分配给不同表(以及潜在的数据库节点)的简单方法-在wiki的 EntityName 。

Listing of files:

separate_tables.py - Illustrates sharding using a single SQLite database, that will however have multiple tables using a naming convention.

separate_databases.py - Illustrates sharding using distinct SQLite databases.

扩展ORM

ORM查询事件

说明ORM SELECT行为增强的配方 Session.execute() 具有 2.0 style 使用 select() 以及 1.x style Query 对象。

示例包括 with_loader_criteria() 选项以及 SessionEvents.do_orm_execute() 钩子。

从SQLAlchemy 1.4开始 Query 构造与 Select 构造,使这两个对象基本相同。

Listing of files:

filter_public.py - Illustrates a global criteria applied to entities of a particular type.

temporal_range.py - Illustrates a custom per-query criteria that will be applied to selected entities.

狗堆缓存

演示如何嵌入 dogpile.cache ORM查询的功能,允许完全的缓存控制以及从长期缓存中提取“延迟加载”属性的能力。

在本演示中,演示了以下技术:

使用

SessionEvents.do_orm_execute()事件挂钩规避的基本技巧

Session.execute()从自定义缓存源(而不是数据库)中提取。使用dogpile.cache的基本缓存,使用允许对固定配置集进行全局控制的“区域”。

使用定制

UserDefinedOption对象来配置语句对象中的选项。

参见

重新执行语句 -包括这里介绍的技术的一般示例。

例如。::

# query for Person objects, specifying cache

stmt = select(Person).options(FromCache("default"))

# specify that each Person's "addresses" collection comes from

# cache too

stmt = stmt.options(RelationshipCache(Person.addresses, "default"))

# execute and results

result = session.execute(stmt)

print(result.scalars.all())若要运行,必须同时安装sqlAlchemy和dogpoill.cache或将其安装在当前pythonpath上。演示将为数据文件创建本地目录,插入初始数据,然后运行。第二次运行演示将使用已经存在的缓存文件,并且只会针对两个表发出一条SQL语句-但是显示的结果将使用几十个Lazyloads,这些语句都是从缓存中提取的。

按照复杂性的顺序,演示脚本本身作为python模块运行,以便相对导入工作:

python -m examples.dogpile_caching.helloworld python -m examples.dogpile_caching.relationship_caching python -m examples.dogpile_caching.advanced python -m examples.dogpile_caching.local_session_caching

Listing of files:

environment.py - Establish data / cache file paths, and configurations, bootstrap fixture data if necessary.

caching_query.py - Represent functions and classes which allow the usage of Dogpile caching with SQLAlchemy. Introduces a query option called FromCache.

model.py - The datamodel, which represents Person that has multiple Address objects, each with PostalCode, City, Country.

fixture_data.py - Installs some sample data. Here we have a handful of postal codes for a few US/Canadian cities. Then, 100 Person records are installed, each with a randomly selected postal code.

helloworld.py - Illustrate how to load some data, and cache the results.

relationship_caching.py - Illustrates how to add cache options on relationship endpoints, so that lazyloads load from cache.

advanced.py - Illustrate usage of Query combined with the FromCache option, including front-end loading, cache invalidation and collection caching.

local_session_caching.py - This example creates a new dogpile.cache backend that will persist data in a dictionary which is local to the current session. remove() the session and the cache is gone.

PostGIS集成

一个简单的例子说明了帮助嵌入PostGIS功能的技术。

开发此示例的初衷是希望将其外推到一个全面的PostGIS集成层。我们很高兴地宣布,这已经取得了成果,因为 GeoAlchemy 。

示例说明:

允许创建/删除与addgeometrycolumn/dropgeometrycolumn一起工作的DDL扩展

几何图形类型以及一些子类型,将结果行值转换为支持地理信息系统的对象,并与DDL扩展集成。

一种支持地理信息系统的对象,它存储一个原始几何值,并为诸如astext()之类的函数提供一个工厂。

一种ORM比较器,它可以覆盖映射对象上的标准列方法,以生成GIS运算符。

一个属性事件侦听器,它截获字符串并转换为geomfromtext()。

一个独立的运算符示例。

该实现仅限于公共的、众所周知的和简单易用的扩展点。

例如。::

print(session.query(Road).filter(

Road.road_geom.intersects(r1.road_geom)).all())Listing of files:

table_per_related.py - Illustrates a generic association which persists association objects within individual tables, each one generated to persist those objects on behalf of a particular parent class.

generic_fk.py - Illustrates a so-called "generic foreign key", in a similar fashion to that of popular frameworks such as Django, ROR, etc. This approach bypasses standard referential integrity practices, in that the "foreign key" column is not actually constrained to refer to any particular table; instead, in-application logic is used to determine which table is referenced.

table_per_association.py - Illustrates a mixin which provides a generic association via a individually generated association tables for each parent class. The associated objects themselves are persisted in a single table shared among all parents.

discriminator_on_association.py - Illustrates a mixin which provides a generic association using a single target table and a single association table, referred to by all parent tables. The association table contains a "discriminator" column which determines what type of parent object associates to each particular row in the association table.