
A Guide to Production Level Deep Learning


⛴️

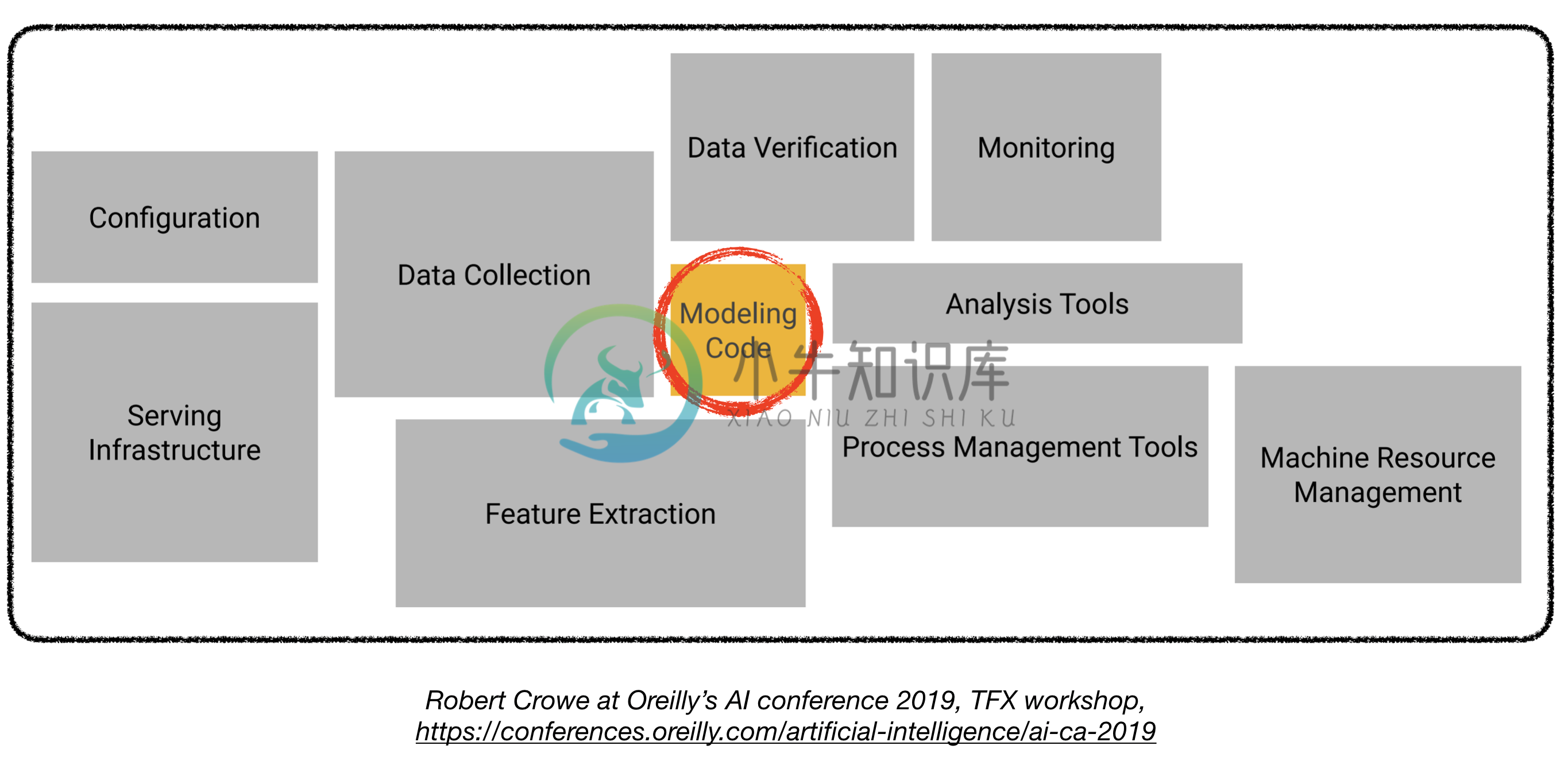

Deploying deep learning models in production can be challenging, as it goes far beyond training models with good performance. Several distinct components need to be designed and developed in order to deploy a production-level deep learning system (shown above):

This repo aims to serve as an engineering guideline for building production-level deep learning systems to be deployed in real-world applications.

The material presented here is borrowed from Full Stack Deep Learning Bootcamp (by Pieter Abbeel at UC Berkeley, Josh Tobin at OpenAI, and Sergey Karayev at Turnitin), TFX workshop by Robert Crowe, and Pipeline.ai's Advanced KubeFlow Meetup by Chris Fregly.

Machine Learning Projects

Potential reasons why ML projects fail include:

- Technically infeasible or poorly scoped

- Never make the leap to production

- Unclear success criteria (metrics)

- Poor team management

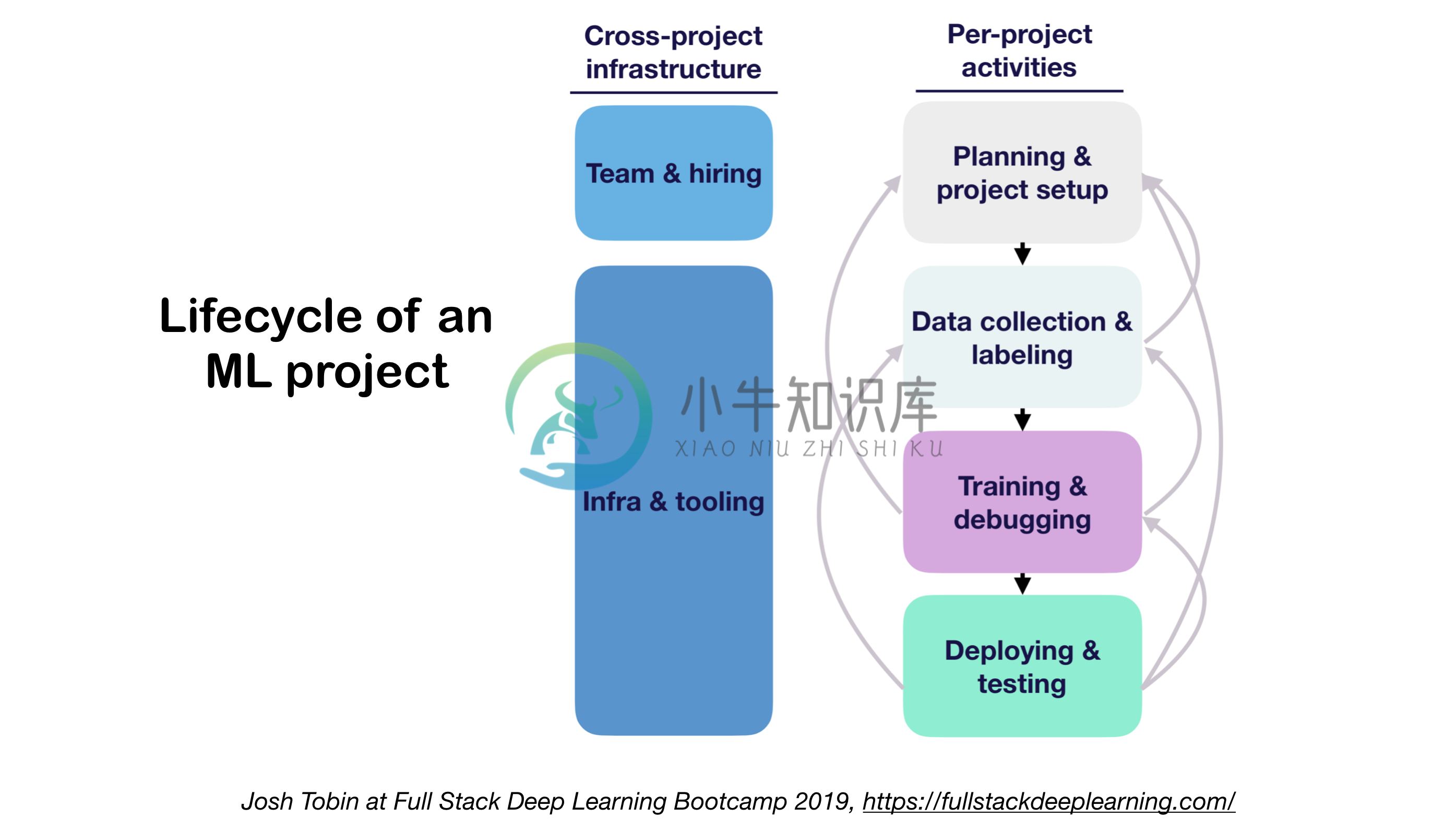

1. ML Projects lifecycle

- Importance of understanding the state of the art in your domain:

  - Helps to understand what is possible

  - Helps to know what to try next

2. Mental Model for ML Projects

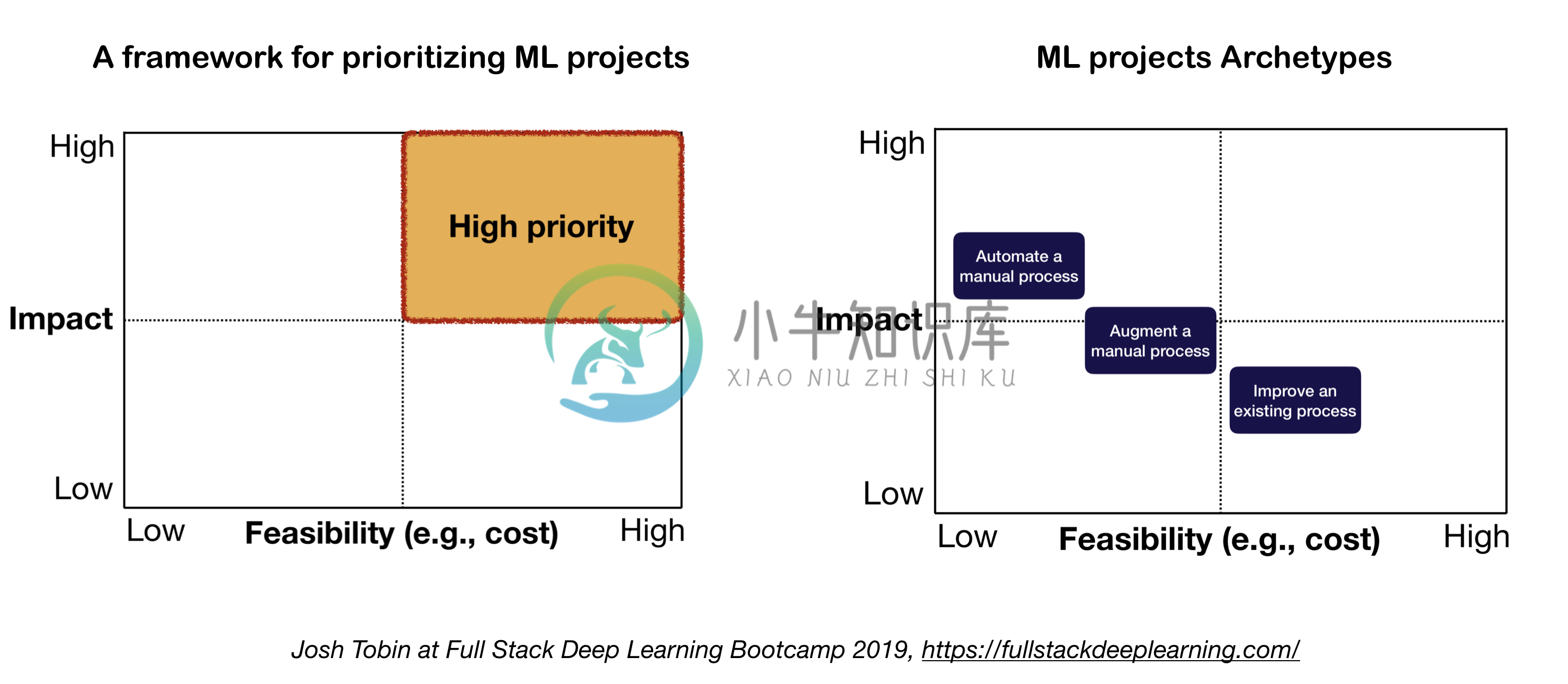

The two important factors to consider when defining and prioritizing ML projects:

- High Impact:

  - Complex parts of your pipeline

  - Where "cheap prediction" is valuable

  - Where automating a complicated manual process is valuable

- Low Cost:

  - Cost is driven by:

    - Data availability

    - Performance requirements: costs tend to scale super-linearly with the accuracy requirement

    - Problem difficulty:

      - Some of the hard problems include unsupervised learning, reinforcement learning, and certain categories of supervised learning

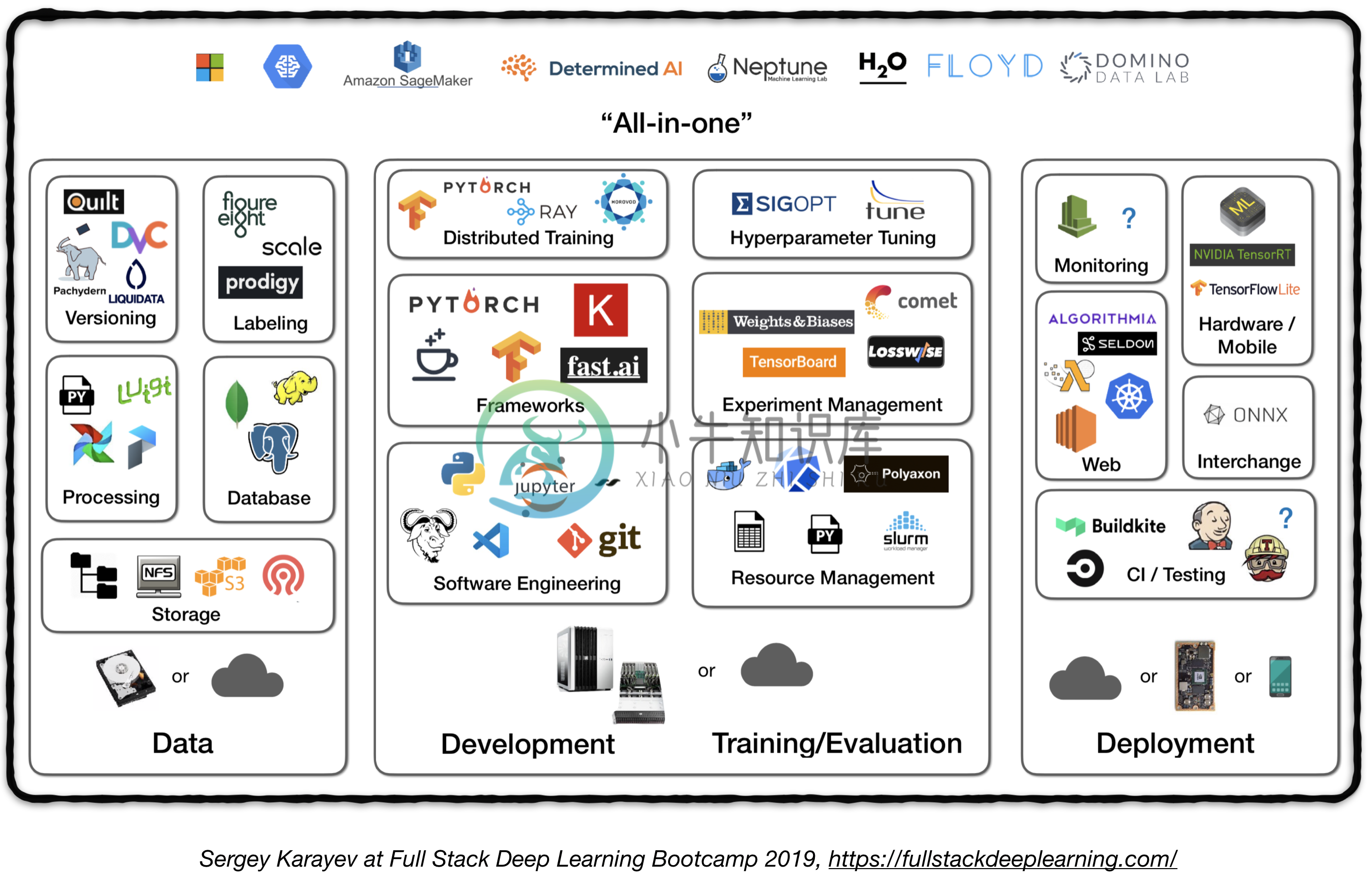

Full stack pipeline

The following figure represents a high level overview of different components in a production level deep learning system:

Below, we go through each module and recommend toolsets and frameworks, as well as best practices from practitioners, that fit each component.

1. Data Management

1.1 Data Sources

- Supervised deep learning requires a lot of labeled data

- Labeling your own data is costly!

- Here are some resources for data:

  - Open source data (good to start with, but not an advantage)

  - Data augmentation (a MUST for computer vision, an option for NLP)

  - Synthetic data (almost always worth starting with, esp. in NLP)
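Data augmentation enlarges the training set with label-preserving transforms. The following is a minimal pure-Python sketch, assuming images are stored as lists of pixel rows (an illustrative format); real pipelines would typically use the augmentation utilities of their DL framework instead. It applies a random horizontal flip followed by a random crop:

```python
import random
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values (illustrative format)


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right; the label stays the same."""
    return [row[::-1] for row in img]


def random_crop(img: Image, size: int, rng: random.Random) -> Image:
    """Cut a size x size patch at a random position inside the image."""
    height, width = len(img), len(img[0])
    top = rng.randint(0, height - size)
    left = rng.randint(0, width - size)
    return [row[left:left + size] for row in img[top:top + size]]


def augment(img: Image, crop_size: int, rng: random.Random) -> Image:
    """Randomly flip (probability 0.5), then randomly crop."""
    if rng.random() < 0.5:
        img = horizontal_flip(img)
    return random_crop(img, crop_size, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    image = [[r * 4 + c for c in range(4)] for r in range(4)]
    # Each call produces a different 3x3 view of the same labeled image.
    for _ in range(3):
        print(augment(image, crop_size=3, rng=rng))
```

Because every augmented view keeps the original label, one labeled image yields many training examples at no extra labeling cost.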

1.2 Data Labeling

- Requires: separate software stack (labeling platforms), temporary labor, and QC

- Sources of labor for labeling:

  - Crowdsourcing (Mechanical Turk): cheap and scalable, less reliable, needs QC

  - Hiring own annotators: less QC needed, expensive, slow to scale

  - Data labeling service companies:

- Labeling platforms:

  - Diffgram: Training Data Software (Computer Vision)

  - Prodigy: An annotation tool powered by active learning (by the developers of spaCy), text and image

  - HIVE: AI as a Service platform for computer vision

  - Supervisely: entire computer vision platform

  - Labelbox: computer vision

  - Scale AI data platform (computer vision & NLP)
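Crowdsourced labels need QC. One common approach, sketched here independently of the platforms above, is to collect several labels per item, keep the majority vote, and route items where annotators disagree too much to expert review. The `min_agreement` threshold of 0.6 is an assumed value:

```python
from collections import Counter
from typing import Dict, List, Tuple


def aggregate_labels(
    votes: Dict[str, List[str]], min_agreement: float = 0.6
) -> Tuple[Dict[str, str], List[str]]:
    """Majority-vote each item's labels and flag low-agreement items.

    votes maps an item id to the labels given by different annotators.
    Returns (accepted labels, ids that need expert review).
    """
    accepted: Dict[str, str] = {}
    needs_review: List[str] = []
    for item_id, labels in votes.items():
        if not labels:
            needs_review.append(item_id)
            continue
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            accepted[item_id] = label
        else:
            needs_review.append(item_id)
    return accepted, needs_review


if __name__ == "__main__":
    votes = {"img_1": ["cat", "cat", "dog"], "img_2": ["cat", "dog", "bird"]}
    accepted, needs_review = aggregate_labels(votes)
    print(accepted)      # {'img_1': 'cat'}
    print(needs_review)  # ['img_2']
```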

1.3. Data Storage

- Data storage options:

  - Object store: Store binary data (images, sound files, compressed texts)

  - Database: Store metadata (file paths, labels, user activity, etc.)

    - Postgres is the right choice for most applications, with best-in-class SQL and great support for unstructured JSON.

  - Data Lake: to aggregate features which are not obtainable from a database (e.g. logs)

  - Feature Store: store, access, and share machine learning features. Feature extraction can be computationally expensive and nearly impossible to scale, hence re-using features across models and teams is key for high-performance ML teams.

    - FEAST (Google Cloud, open source)

    - Michelangelo Palette (Uber)

- Suggestion: At training time, copy data into a local or networked filesystem (NFS) [1].
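The split between an object store for binary data and a database for metadata can be sketched as follows. As assumptions for a self-contained example, a local temporary directory stands in for the object store and Python's built-in `sqlite3` stands in for Postgres; files are stored under their content hash, and the database only holds the metadata (object key, label) pointing to them:

```python
import hashlib
import sqlite3
import tempfile
from pathlib import Path


def put_object(store_dir: Path, data: bytes) -> str:
    """Save bytes in the 'object store' under their SHA-256 key and return the key."""
    key = hashlib.sha256(data).hexdigest()
    path = store_dir / key
    if not path.exists():  # content-addressed: identical files are stored only once
        path.write_bytes(data)
    return key


def main() -> None:
    store_dir = Path(tempfile.mkdtemp())  # stand-in for an object store bucket
    db = sqlite3.connect(":memory:")  # stand-in for Postgres
    db.execute("CREATE TABLE samples (object_key TEXT, label TEXT)")

    # Placeholder bytes instead of real image files.
    for data, label in [(b"fake-image-1", "cat"), (b"fake-image-2", "dog")]:
        key = put_object(store_dir, data)
        db.execute("INSERT INTO samples VALUES (?, ?)", (key, label))

    # Training code queries the metadata, then fetches only the blobs it needs.
    for key, label in db.execute("SELECT object_key, label FROM samples"):
        blob = (store_dir / key).read_bytes()
        print(label, len(blob), "bytes")
    db.close()


if __name__ == "__main__":
    main()
```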

1.4. Data Versioning

- It's a "MUST" for deployed ML models:

  - Deployed ML models are part code, part data [1]. No data versioning means no model versioning.

- Data versioning platforms:
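To illustrate why data versioning enables model versioning, the sketch below hashes every file in a dataset directory into a deterministic dataset version and records it next to the code version. This shows only the idea; dedicated versioning tools also handle storage, diffs, and remote caching. The manifest format and the `code_version` value are hypothetical:

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Dict


def dataset_version(root: Path) -> str:
    """Hash that changes whenever any file's relative path or content changes."""
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        digest.update(path.relative_to(root).as_posix().encode())
        digest.update(hashlib.sha256(path.read_bytes()).digest())
    return digest.hexdigest()


def model_manifest(data_root: Path, code_version: str) -> Dict[str, str]:
    """Identify a model by both its code version and its data version."""
    return {"code_version": code_version, "data_version": dataset_version(data_root)}


if __name__ == "__main__":
    root = Path(tempfile.mkdtemp())
    (root / "train.csv").write_text("x,y\n1,0\n")
    before = model_manifest(root, code_version="abc123")  # hypothetical commit id
    (root / "train.csv").write_text("x,y\n1,1\n")  # one label is corrected
    after = model_manifest(root, code_version="abc123")
    # Same code, different data: the manifests (and thus model versions) differ.
    print(json.dumps(before))
    print(json.dumps(after))
```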

1.5. Data Processing

- Training data for production models may come from different sources, including stored data in databases and object stores, log processing, and outputs of other classifiers.

- There are dependencies between tasks: each task needs to be kicked off after its dependencies are finished. For example, training on new log data requires a preprocessing step before training.

- Makefiles are not scalable, so "workflow managers" become essential here.

- Workflow orchestration:
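At its core, a workflow manager runs each task only after its dependencies have finished. The sketch below shows just that ordering step using Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+); the task names are hypothetical, and real orchestrators add scheduling, retries, and distributed execution on top:

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(deps: Dict[str, Set[str]], tasks: Dict[str, Callable[[], None]]) -> List[str]:
    """Run every task after all of its dependencies; return the order used.

    deps maps a task name to the set of tasks it depends on.
    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    # Hypothetical pipeline: new logs must be preprocessed before training.
    deps = {
        "preprocess_logs": {"ingest_logs"},
        "train": {"preprocess_logs", "load_db_features"},
        "evaluate": {"train"},
    }
    names = set(deps) | {d for ds in deps.values() for d in ds}
    tasks = {n: (lambda n=n: print("running", n)) for n in names}
    print(run_pipeline(deps, tasks))
```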

2. Development, Training, and Evaluation

2.1. Software engineering

- Winner language: Python

- Editors:

  - Vim

  - Emacs

  - VS Code (recommended by the author): built-in git staging and diff, code linting, opening projects remotely through SSH

  - Notebooks: great as a starting point for projects, hard to scale (fun fact: Netflix's Notebook-Driven Architecture is an exception, which is entirely based on the nteract suite)

  - Streamlit: interactive data science tool with applets

- Compute recommendations [1]:

  - For individuals or startups:

    - Development: a 4x Turing-architecture PC

    - Training/Evaluation: Use the same 4x GPU PC. When running many experiments, either buy shared servers or use cloud instances.

  - For large companies:

    - Development: Buy a 4x Turing-architecture PC per ML scientist or let them use V100 instances

    - Training/Evaluation: Use cloud instances with proper provisioning and handling of failures

- Cloud providers:

  - GCP: option to connect GPUs to any instance + has TPUs

  - AWS:

2.2. Resource Management

- Allocating free resources to programs

- Resource management options:

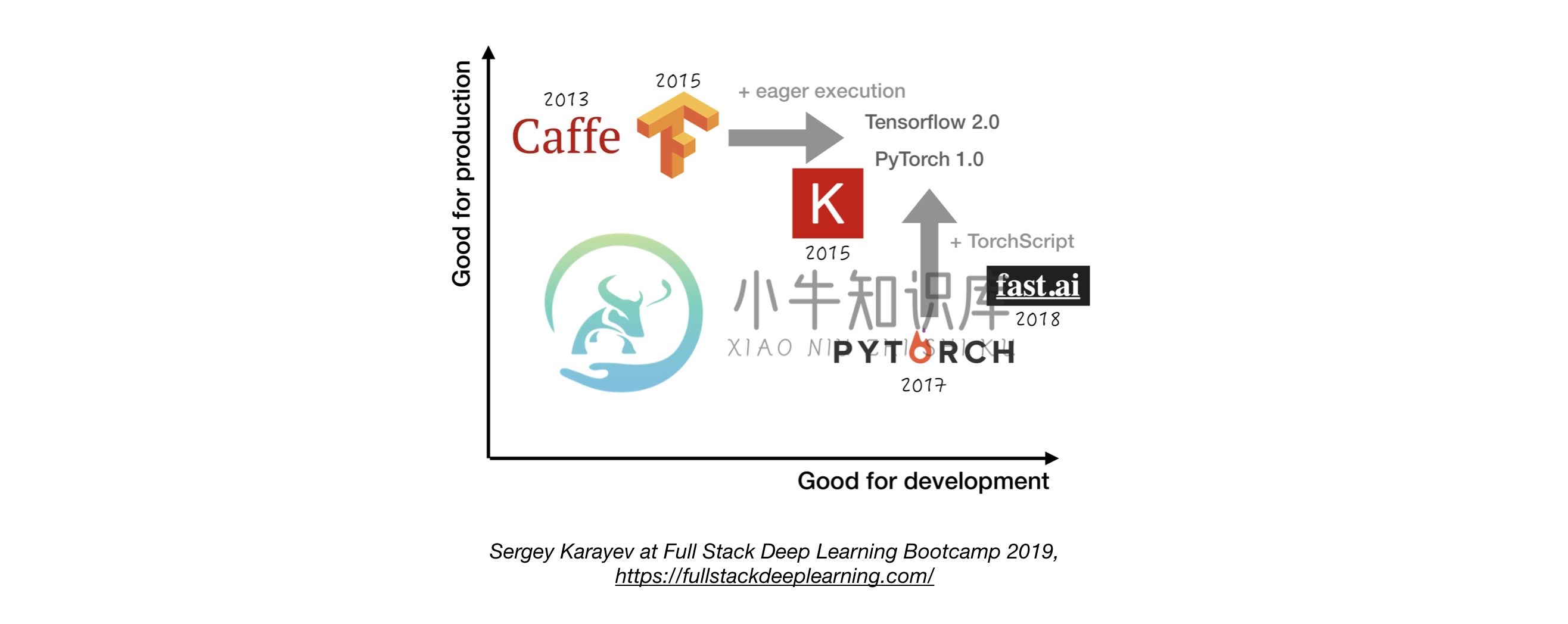

2.3. DL Frameworks

- Unless you have a good reason not to, use TensorFlow/Keras or PyTorch [1].

- The following figure shows a comparison between different frameworks on how they stand for "development" and "production".

2.4. Experiment management

- Development, training, and evaluation strategy:

  - Always start simple

    - Train a small model on a small batch. Only if it works, scale to larger data and models, and then move on to hyperparameter tuning.

- Experiment management tools:

  - TensorBoard

    - provides the visualization and tooling needed for ML experimentation

  - Losswise (Monitoring for ML)

  - Comet: lets you track code, experiments, and results on ML projects

  - Weights & Biases: Record and visualize every detail of your research with easy collaboration

  - MLFlow Tracking: for logging parameters, code versions, metrics, and output files as well as visualization of the results.

    - Automatic experiment tracking with one line of code in Python

    - Side by side comparison of experiments

    - Hyperparameter tuning

    - Supports Kubernetes-based jobs
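One concrete way to "start simple" is to check that the model can overfit a single small batch: if the training loss does not approach zero on a handful of examples, there is likely a bug in the model, loss, or data code. A minimal sketch, using a hand-written logistic-regression model as a stand-in for a real network; the learning rate, step count, and 0.05 loss threshold are assumptions:

```python
import math
from typing import List, Tuple

Batch = List[Tuple[List[float], int]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_on_batch(batch: Batch, lr: float = 0.5, steps: int = 2000) -> float:
    """Full-batch gradient descent on logistic regression.

    Returns the mean cross-entropy loss measured at the start of the last step.
    """
    dim = len(batch[0][0])
    w, b = [0.0] * dim, 0.0
    loss = float("inf")
    for _ in range(steps):
        grad_w, grad_b, total = [0.0] * dim, 0.0, 0.0
        for x, y in batch:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            total -= y * math.log(p + 1e-12) + (1 - y) * math.log(1 - p + 1e-12)
            for i in range(dim):
                grad_w[i] += (p - y) * x[i]
            grad_b += p - y
        n = len(batch)
        loss = total / n
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return loss


if __name__ == "__main__":
    # Four examples whose label equals the second feature (linearly separable).
    tiny_batch = [([0.0, 1.0], 1), ([1.0, 0.0], 0), ([1.0, 1.0], 1), ([0.0, 0.0], 0)]
    final_loss = train_on_batch(tiny_batch)
    print(f"loss after overfitting one batch: {final_loss:.4f}")
    # If this fails, suspect the model, loss, or data code before scaling up.
    assert final_loss < 0.05
```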

2.5. Hyperparameter Tuning

Approaches:

- Grid search

- Random search

- Bayesian Optimization

- HyperBand and Asynchronous Successive Halving Algorithm (ASHA)

- Population-based Training
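HyperBand and ASHA are built on successive halving: start many configurations with a small budget, keep the best fraction, and give the survivors more budget. The sketch below combines random search (to sample configurations) with a synchronous successive-halving loop; it is not ASHA itself, which promotes configurations asynchronously. The search space and the toy `train_and_score` objective are hypothetical stand-ins for real training runs:

```python
import math
import random
from typing import Dict, List, Tuple

Config = Dict[str, float]


def sample_config(rng: random.Random) -> Config:
    """Random search: draw each hyperparameter independently (log-uniform lr)."""
    return {"lr": 10 ** rng.uniform(-4, -1), "dropout": rng.uniform(0.0, 0.5)}


def train_and_score(config: Config, budget: int) -> float:
    """Hypothetical stand-in for training `budget` epochs; lower is better.

    Best near lr=1e-2 and dropout=0.2; a larger budget lowers the loss.
    """
    distance = abs(math.log10(config["lr"]) + 2) + abs(config["dropout"] - 0.2)
    return distance + 1.0 / budget


def successive_halving(
    n_configs: int = 27, min_budget: int = 1, eta: int = 3, seed: int = 0
) -> Tuple[Config, float]:
    rng = random.Random(seed)
    configs: List[Config] = [sample_config(rng) for _ in range(n_configs)]
    budget = min_budget
    while True:
        scored = sorted(((train_and_score(c, budget), c) for c in configs), key=lambda t: t[0])
        if len(configs) <= 1:
            best_score, best = scored[0]
            return best, best_score
        # Keep the top 1/eta of configurations and give them eta times more budget.
        configs = [c for _, c in scored[: max(1, len(configs) // eta)]]
        budget *= eta


if __name__ == "__main__":
    best, score = successive_halving()
    print(best, round(score, 4))
```

With the defaults, 27 configurations are evaluated at budget 1, then 9 at budget 3, 3 at budget 9, and the last one at budget 27, so most of the compute goes to promising configurations.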

Platforms:

- RayTune: Ray Tune is a Python library for hyperparameter tuning at any scale (with a focus on deep learning and deep reinforcement learning). Supports any machine learning framework, including PyTorch, XGBoost, MXNet, and Keras.

- Katib: Kubernetes-native system for hyperparameter tuning and neural architecture search, inspired by [Google Vizier](https://static.googleusercontent.com/media/research.google.com/ja//pubs/archive/bcb15507f4b52991a0783013df4222240e942381.pdf); it supports multiple ML/DL frameworks (e.g. TensorFlow, MXNet, and PyTorch).

- Hyperas: a simple wrapper around hyperopt for Keras, with a simple template notation to define hyper-parameter ranges to tune.

- SIGOPT: a scalable, enterprise-grade optimization platform

- Sweeps from [Weights & Biases](https://www.wandb.com/): Parameters are not explicitly specified by a developer. Instead, they are approximated and learned by a machine learning model.

- Keras Tuner: A hyperparameter tuner for Keras, specifically for tf.keras with TensorFlow 2.0.

2.6. Distributed Training

- Data parallelism: use it when iteration time is too long (both TensorFlow and PyTorch support it)

- Model parallelism: use it when the model does not fit on a single GPU

- Other solutions:

  - Horovod
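Data parallelism splits each batch across workers: every worker computes gradients on its shard, and the gradients are averaged (an all-reduce) before the shared weights are updated. The pure-Python sketch below simulates the workers sequentially for a linear model with squared loss; the model and data are assumptions for illustration, while frameworks such as Horovod perform the all-reduce across real devices:

```python
from typing import List, Tuple

Example = Tuple[List[float], float]


def gradient(w: List[float], shard: List[Example]) -> List[float]:
    """Gradient of the mean squared error of a linear model on one shard."""
    grad = [0.0] * len(w)
    for x, y in shard:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for i, xi in enumerate(x):
            grad[i] += 2 * err * xi / len(shard)
    return grad


def all_reduce_mean(grads: List[List[float]]) -> List[float]:
    """Average the workers' gradients (the result an all-reduce gives every worker)."""
    return [sum(g) / len(grads) for g in zip(*grads)]


def data_parallel_step(
    w: List[float], batch: List[Example], n_workers: int, lr: float = 0.1
) -> List[float]:
    shard_size = len(batch) // n_workers  # assumes the batch divides evenly
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(n_workers)]
    worker_grads = [gradient(w, shard) for shard in shards]  # parallel in practice
    g = all_reduce_mean(worker_grads)
    return [wi - lr * gi for wi, gi in zip(w, g)]


if __name__ == "__main__":
    batch = [([1.0, float(x)], 2.0 * x + 1.0) for x in range(8)]  # y = 2x + 1
    w = [0.0, 0.0]
    for _ in range(5000):
        w = data_parallel_step(w, batch, n_workers=4, lr=0.01)
    print(w)  # should be close to [1.0, 2.0]
```

With equal-sized shards, the averaged shard gradients equal the full-batch gradient, so data parallelism changes where the work happens, not the update itself.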

3. Troubleshooting [TBD]

4. Testing and Deployment

4.1. Testing and CI/CD

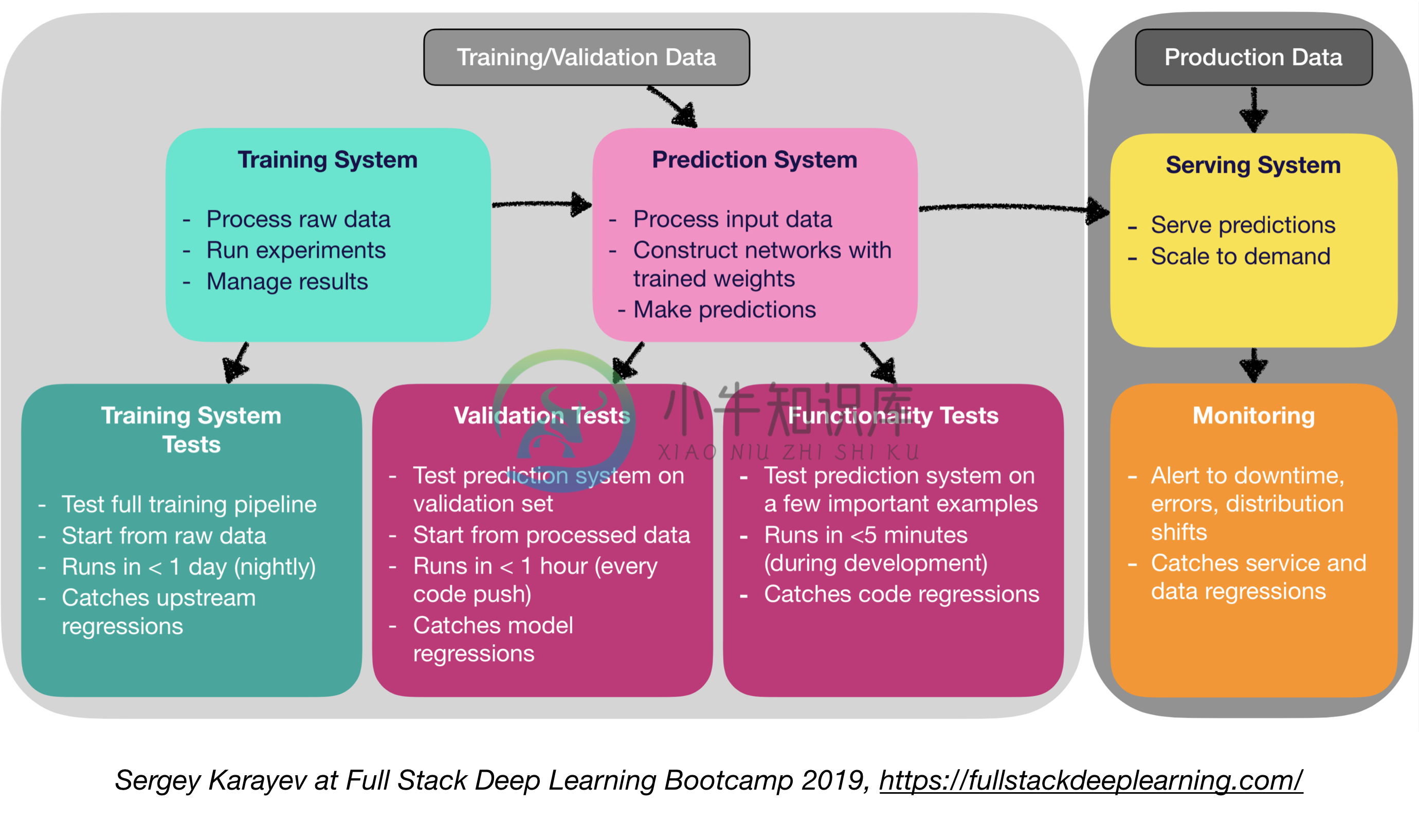

Machine Learning production software requires a more diverse set of test suites than traditional software:

- Unit and Integration Testing:

  - Types of tests:

    - Training system tests: testing the training pipeline

    - Validation tests: testing the prediction system on the validation set

    - Functionality tests: testing the prediction system on a few important examples

- Continuous Integration: running tests after each new code change is pushed to the repo

- SaaS for continuous integration:

  - Argo: Open source Kubernetes-native workflow engine for orchestrating parallel jobs (includes workflows, events, CI and CD).

  - CircleCI: Language-Inclusive Support, Custom Environments, Flexible Resource Allocation, used by Instacart, Lyft, and StackShare.

  - Travis CI

  - Buildkite: Fast and stable builds, Open source agent runs on almost any machine and architecture, Freedom to use your own tools and services

  - Jenkins: Old school build system
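As a sketch of the functionality and validation tests described above, the pytest-style functions below check a prediction system on a few hand-picked important examples and on a small validation set. `predict`, the examples, and the 0.9 accuracy threshold are hypothetical placeholders for your own model and requirements; a CI service would run these on every push:

```python
from typing import List, Tuple

NEGATIVE_WORDS = {"bad", "awful", "terrible"}


def predict(text: str) -> str:
    """Hypothetical prediction system (a stand-in for a real model)."""
    words = set(text.lower().split())
    return "negative" if words & NEGATIVE_WORDS else "positive"


def accuracy(examples: List[Tuple[str, str]]) -> float:
    return sum(predict(x) == y for x, y in examples) / len(examples)


def test_functionality() -> None:
    """Functionality test: a few important examples must always be right."""
    assert predict("This product is awful") == "negative"
    assert predict("Great value, works perfectly") == "positive"


def test_validation() -> None:
    """Validation test: accuracy on held-out data must not drop below a bar."""
    validation_set = [
        ("terrible support", "negative"),
        ("really bad battery", "negative"),
        ("love it", "positive"),
        ("fast shipping", "positive"),
    ]
    assert accuracy(validation_set) >= 0.9


if __name__ == "__main__":
    test_functionality()
    test_validation()
    print("all tests passed")
```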

4.2. Web Deployment

- Consists of a Prediction System and a Serving System

  - Prediction System: Process input data, make predictions

  - Serving System (Web server):

    - Serve predictions with scale in mind

    - Use a REST API to serve prediction HTTP requests

    - Call the prediction system to respond

- Serving options:

  - Deploy to VMs, scale by adding instances

  - Deploy as containers, scale via orchestration

    - Containers

      - Docker

    - Container Orchestration:

      - Kubernetes (the most popular now)

      - MESOS

      - Marathon

  - Deploy code as a "serverless function"

  - Deploy via a model serving solution

- Model serving:

  - Specialized web deployment for ML models

  - Batches requests for GPU inference

  - Frameworks:

    - TensorFlow Serving

    - MXNet Model Server

    - Clipper (Berkeley)

    - SaaS solutions

      - Seldon: serve and scale models built in any framework on Kubernetes

      - Algorithmia

- Decision making: CPU or GPU?

  - CPU inference:

    - CPU inference is preferable if it meets the requirements.

    - Scale by adding more servers, or going serverless.

  - GPU inference:

    - TF Serving or Clipper

    - Adaptive batching is useful

- (Bonus) Deploying Jupyter Notebooks:

  - Kubeflow Fairing is a hybrid deployment package that lets you deploy your Jupyter notebook code!
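Adaptive batching groups concurrent requests so that a GPU runs one batched forward pass instead of many small ones: the batcher waits until it has `max_batch_size` requests or until `max_wait_s` has passed, whichever comes first. A thread-based sketch, where `batch_predict` is a hypothetical stand-in for a batched model call and both limits are assumed values to tune per model:

```python
import queue
import threading
import time
from typing import Callable, List


class _Pending:
    """One queued request plus a slot for its result."""

    def __init__(self, x: float) -> None:
        self.x = x
        self.result = 0.0
        self.done = threading.Event()


class AdaptiveBatcher:
    """Groups concurrent predict() calls into batches for one model call each."""

    def __init__(
        self,
        batch_predict: Callable[[List[float]], List[float]],
        max_batch_size: int = 8,
        max_wait_s: float = 0.01,
    ) -> None:
        self.batch_predict = batch_predict
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self.requests: "queue.Queue[_Pending]" = queue.Queue()
        self.batch_sizes: List[int] = []  # kept for inspection
        threading.Thread(target=self._worker, daemon=True).start()

    def predict(self, x: float, timeout: float = 5.0) -> float:
        """Called from request handlers; blocks until this input's result is ready."""
        pending = _Pending(x)
        self.requests.put(pending)
        if not pending.done.wait(timeout):
            raise TimeoutError("prediction timed out")
        return pending.result

    def _worker(self) -> None:
        while True:
            batch = [self.requests.get()]  # wait for the first request
            deadline = time.monotonic() + self.max_wait_s
            # Keep collecting until the batch is full or the wait budget is spent.
            while len(batch) < self.max_batch_size:
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    break
                try:
                    batch.append(self.requests.get(timeout=remaining))
                except queue.Empty:
                    break
            self.batch_sizes.append(len(batch))
            outputs = self.batch_predict([p.x for p in batch])  # one batched call
            for pending, y in zip(batch, outputs):
                pending.result = y
                pending.done.set()


if __name__ == "__main__":
    batcher = AdaptiveBatcher(lambda xs: [2 * x for x in xs], max_batch_size=4)
    threads = [threading.Thread(target=batcher.predict, args=(float(i),)) for i in range(10)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(batcher.batch_sizes)  # batch composition depends on thread timing
```

The wait limit bounds the extra latency any single request pays, while the size limit bounds GPU memory per batch.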

4.3. Service Mesh and Traffic Routing

- The transition from monolithic applications towards a distributed microservice architecture can be challenging.

- A service mesh (consisting of a network of microservices) reduces the complexity of such deployments and eases the strain on development teams.

- Istio: a service mesh that eases the creation of a network of deployed services with load balancing, service-to-service authentication, and monitoring, with few or no changes to service code.

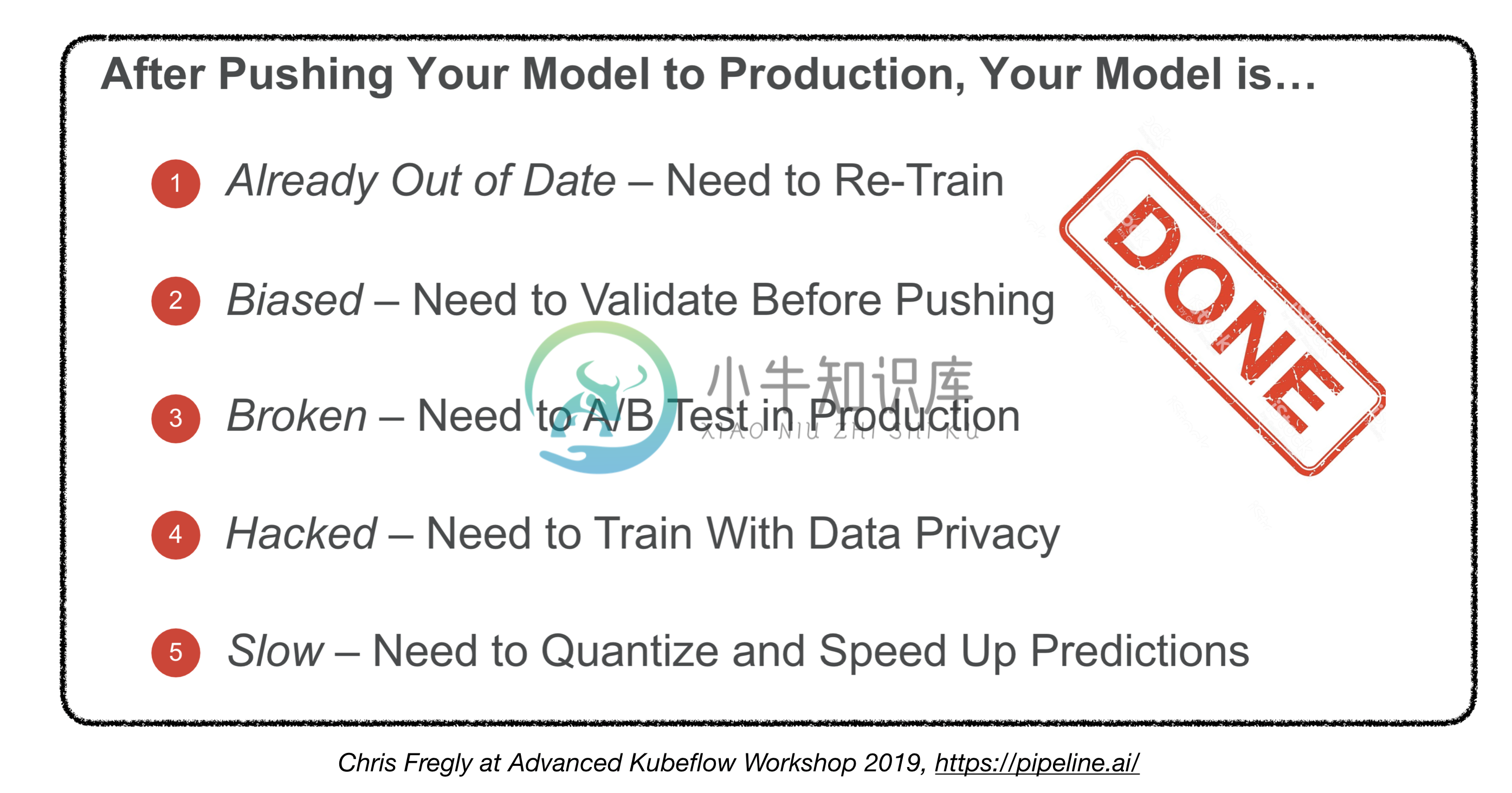

4.4. Monitoring

- Purpose of monitoring:

  - Alerts for downtime, errors, and distribution shifts

  - Catching service and data regressions

- Cloud providers' solutions are decent

- Kiali: an observability console for Istio with service mesh configuration capabilities. It answers these questions: How are the microservices connected? How are they performing?
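One common way to turn "distribution shift" into an alert is to compare a feature's production distribution with its training distribution. The sketch below computes the Population Stability Index (PSI) over quantile bins of the training data, PSI = Σ (live − ref) · ln(live / ref) over bins; the 10 bins and the 0.2 alert threshold are commonly used rules of thumb, assumed here rather than taken from the sources above:

```python
import bisect
import math
import random
from typing import List, Sequence


def quantile_edges(reference: Sequence[float], n_bins: int) -> List[float]:
    """Interior bin edges so each bin holds roughly equal reference mass."""
    ordered = sorted(reference)
    return [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]


def bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values per bin, floored to avoid log(0) on empty bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    eps = 1e-6
    return [max(c / len(values), eps) for c in counts]


def psi(reference: Sequence[float], live: Sequence[float], n_bins: int = 10) -> float:
    """Population Stability Index of live data against the reference data."""
    edges = quantile_edges(reference, n_bins)
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    rng = random.Random(0)
    train = [rng.gauss(0, 1) for _ in range(5000)]
    same = [rng.gauss(0, 1) for _ in range(5000)]
    shifted = [rng.gauss(1, 1) for _ in range(5000)]  # mean moved by one std dev
    for name, live in [("same", same), ("shifted", shifted)]:
        score = psi(train, live)
        print(name, round(score, 3), "ALERT" if score > 0.2 else "ok")
```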

Are we done?

4.5. Deploying on Embedded and Mobile Devices

- Main challenge: memory footprint and compute constraints

- Solutions:

  - Quantization

  - Reduced model size

    - MobileNets

  - Knowledge Distillation

    - DistilBERT (for NLP)

- Embedded and Mobile Frameworks:

  - TensorFlow Lite

  - PyTorch Mobile

  - Core ML

  - ML Kit

  - FRITZ

  - OpenVINO

- Model Conversion:

  - Open Neural Network Exchange (ONNX): open-source format for deep learning models
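Quantization shrinks a model by storing weights as 8-bit integers instead of 32-bit floats (about 4x smaller). The sketch below shows per-tensor affine post-training quantization of a weight list to uint8 and back, using w ≈ (q − zero_point) · scale. It illustrates the arithmetic only; the mobile frameworks above implement their own, more complete schemes:

```python
from typing import List, Tuple


def quantize_uint8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to 0..255 with an affine transform w ~= (q - zero_point) * scale."""
    w_min = min(min(weights), 0.0)  # the range must include 0 so it stays exact
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255 or 1.0  # guard against an all-zero tensor
    zero_point = round(-w_min / scale)
    q = [min(255, max(0, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from the 8-bit codes."""
    return [(qi - zero_point) * scale for qi in q]


if __name__ == "__main__":
    weights = [-0.52, -0.1, 0.0, 0.33, 0.91]
    q, scale, zp = quantize_uint8(weights)
    restored = dequantize(q, scale, zp)
    print(q, round(scale, 5), zp)
    # Rounding error per weight is roughly at most half a quantization step.
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```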

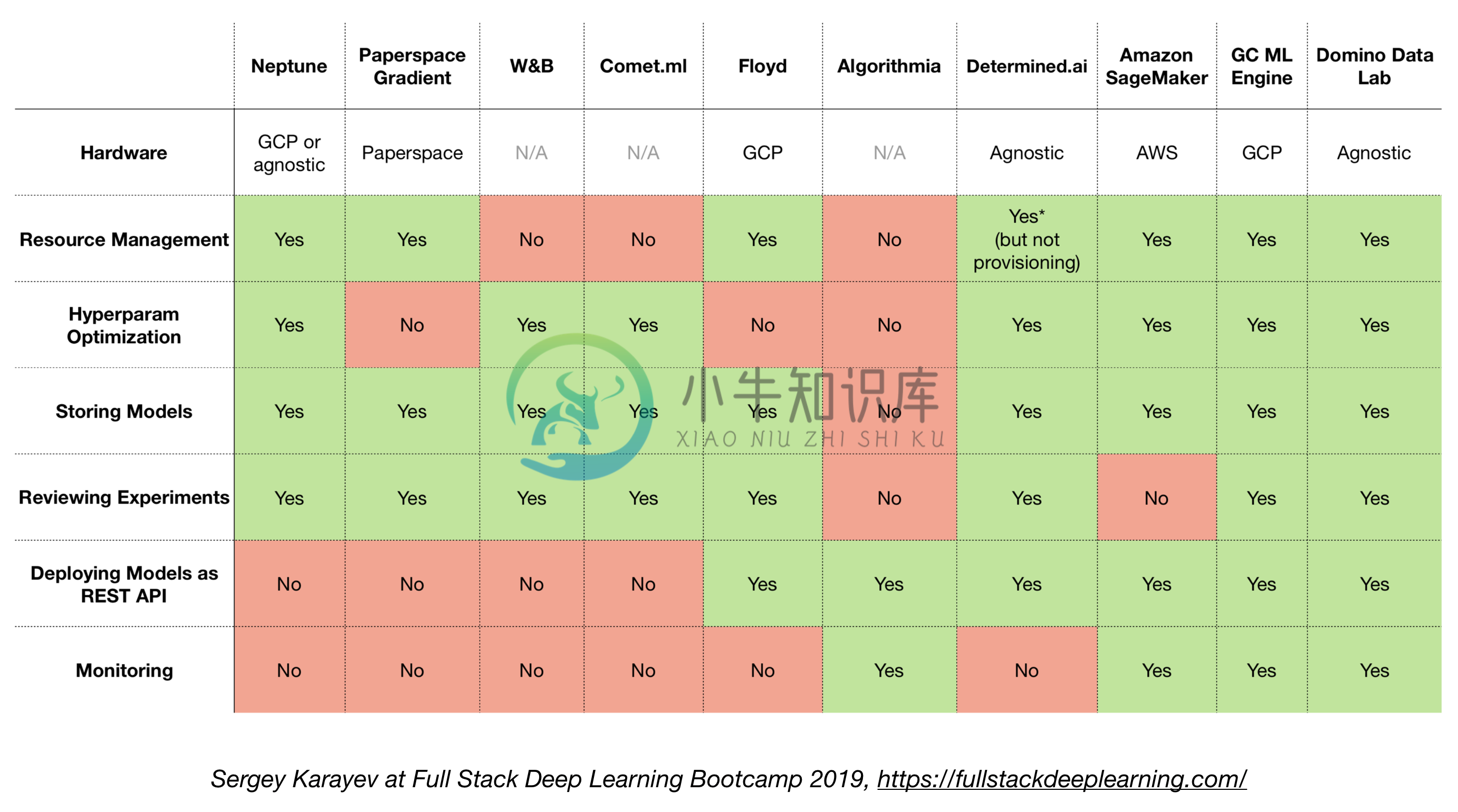

4.6. All-in-one solutions

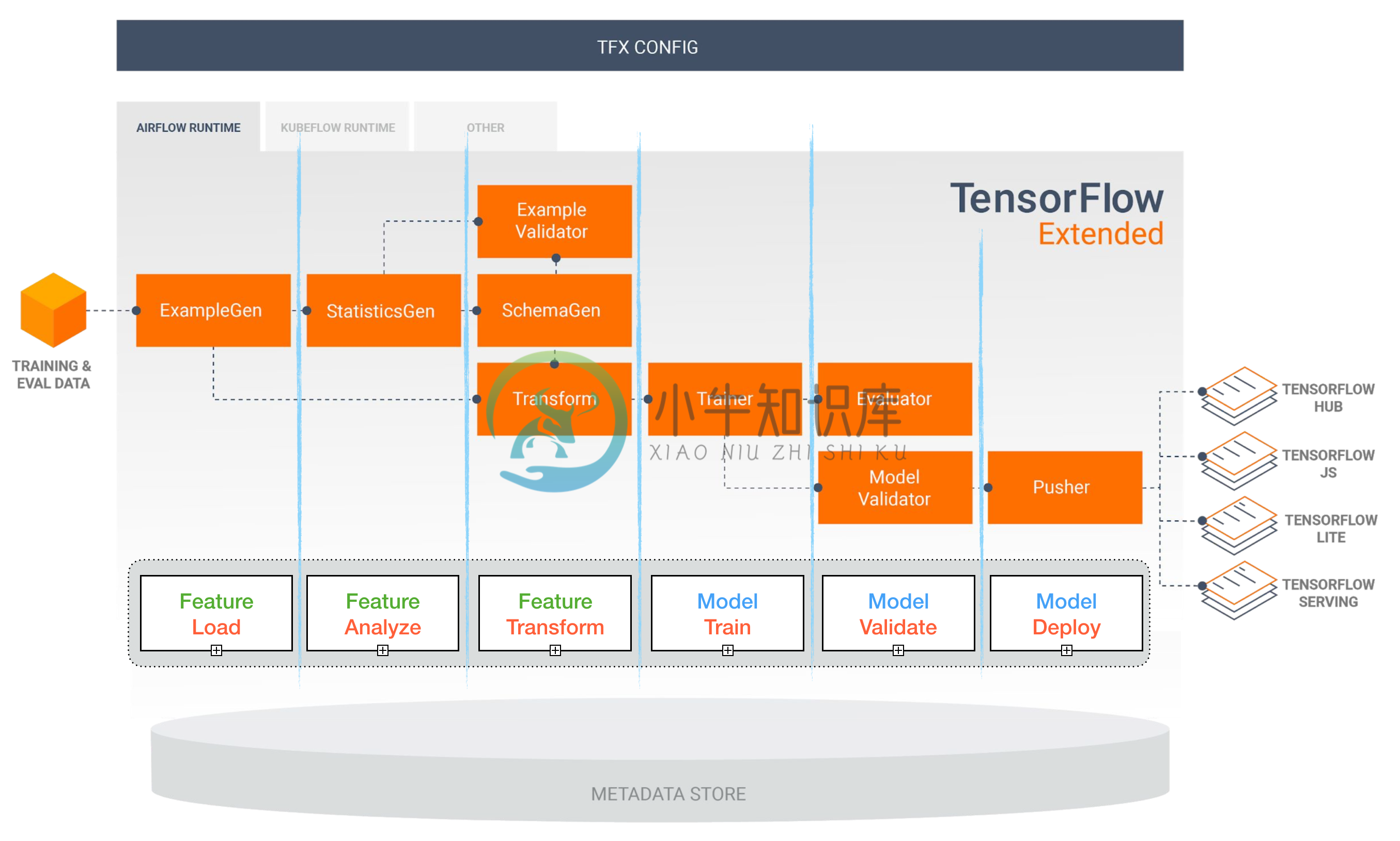

- TensorFlow Extended (TFX)

- Michelangelo (Uber)

- Google Cloud AI Platform

- Amazon SageMaker

- Neptune

- FLOYD

- Paperspace

- Determined AI

- Domino data lab

TensorFlow Extended (TFX)

[TBD]

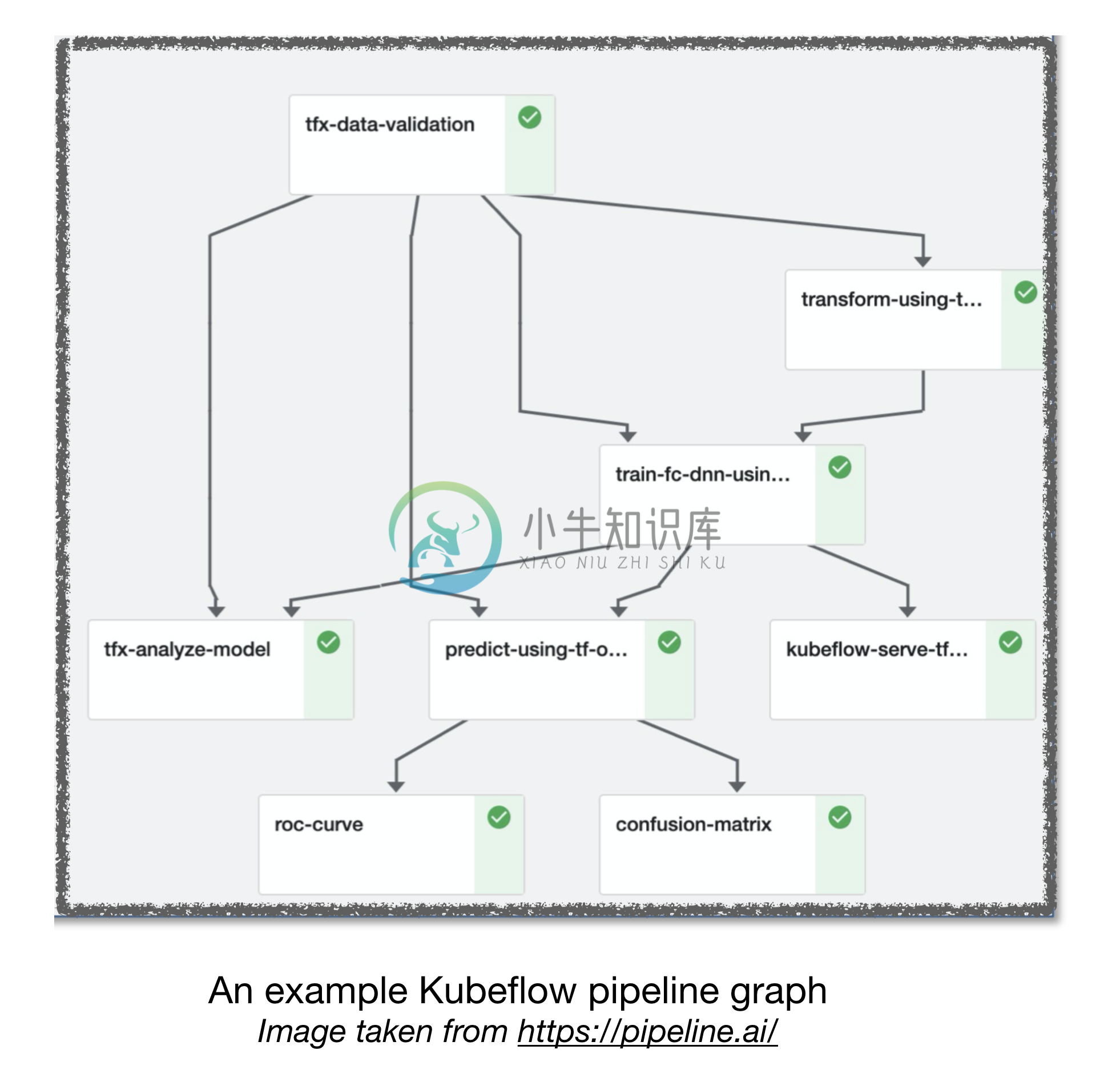

Airflow and KubeFlow ML Pipelines

[TBD]

Other useful links:

- Lessons learned from building practical deep learning systems

- Machine Learning: The High Interest Credit Card of Technical Debt

Contributing

References:

[1]: Full Stack Deep Learning Bootcamp, Nov 2019.

[2]: Advanced KubeFlow Workshop by Pipeline.ai, 2019.
