Jupyter DataTables

Jupyter Notebook extension to leverage pandas DataFrames by integrating DataTables JS.

About

Data scientists, and in fact many developers, work with pd.DataFrame on a daily basis to interpret and process data. The common workflow is to display the DataFrame, take a look at the data schema, and then produce multiple plots to check the distribution of the data and get a clearer picture, perhaps search for some data in the table, and so on.

What if those distribution plots were part of the standard DataFrame and we had the ability to quickly search through the table with minimal effort? What if it was the default representation?

jupyter-datatables uses jupyter-require to draw the table.

Installation

pip install jupyter-datatables

Usage

import string

import numpy as np

import pandas as pd

from jupyter_datatables import init_datatables_mode

init_datatables_mode()

That's it, your default pandas representation will now use Jupyter DataTables!

df = pd.DataFrame(np.abs(np.random.randn(50, 5)), columns=list(string.ascii_uppercase[:5]))

In most cases, you don't need to worry too much about the size of your data. Jupyter DataTables calculates the required sample size from a confidence level (0.95 by default) and a margin of error, then rounds it up to the next 'smart' value.

For example, for a dataset containing 100,000 samples, given a 0.975 confidence level and a 0.02 margin of error, Jupyter DataTables would calculate that 3044 samples are required and round that up to 4000.

The table is then displayed with an additional note:

Sample size: 4,000 out of 100,000
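The numbers in this example can be approximated with a short sketch. It assumes Cochran's sample size formula with a finite population correction and a worst-case proportion of 0.5, which is not confirmed to be what Jupyter DataTables uses internally. The helper names `required_sample_size` and `smart_ceil` are hypothetical, and the 'smart' rounding rule (round up to the next multiple of the leading power of ten) is a guess based on the single 3044 to 4000 example.

```python
import math
from statistics import NormalDist


def required_sample_size(population, margin_of_error, confidence=0.95, proportion=0.5):
    """Estimate a sample size (assumed: Cochran's formula with finite population correction)."""
    # Two-sided z-score for the requested confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an infinitely large population.
    n0 = z ** 2 * proportion * (1 - proportion) / margin_of_error ** 2
    # Shrink the estimate to account for the finite population size.
    return n0 / (1 + (n0 - 1) / population)


def smart_ceil(n):
    """Round up to the next multiple of the leading power of ten (hypothetical rule)."""
    n = math.ceil(n)
    if n <= 0:
        return 0
    magnitude = 10 ** (len(str(n)) - 1)
    return math.ceil(n / magnitude) * magnitude


n = required_sample_size(100_000, margin_of_error=0.02, confidence=0.975)
print(int(n), smart_ceil(int(n)))
```

Under these assumptions the call should reproduce the 3044 and 4000 from the example above; other inputs may differ from what the library actually computes.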

We can also handle wide tables with ease.

df = pd.DataFrame(np.abs(np.random.randn(50, 20)), columns=list(string.ascii_uppercase[:20]))

As of 0.3.0, interactive tooltips are supported:

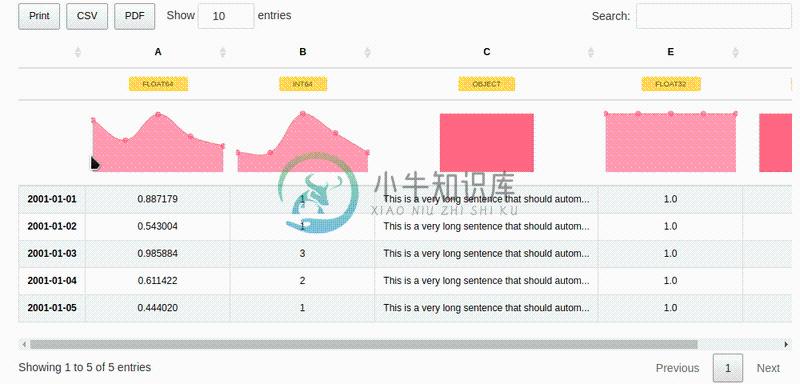

Custom indices, including the Date type, are also supported:

dft = pd.DataFrame({'A': np.random.rand(5),

'B': [1, 1, 3, 2, 1],

'C': 'This is a very long sentence that should automatically be trimmed',

'D': [pd.Timestamp('20010101'), pd.Timestamp('20010102'), pd.Timestamp('20010103'), pd.Timestamp('20010104'), pd.Timestamp('20010105')],

'E': pd.Series([1.0] * 5).astype('float32'),

'F': [False, True, False, False, True],

})

dft.D = dft.D.apply(pd.to_datetime)

dft.set_index('D', inplace=True)

Current status and future plans:

Check out the Project Board where we track issues and TODOs for our Jupyter tooling!

Author: Marek Cermak macermak@redhat.com, @AICoE
