Exception: Java gateway process exited before sending the driver its port number, when creating a Spark session in Python

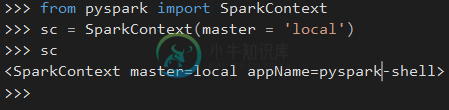

I am trying to create a Spark session in Python 2.7 using the following:

    # Initialize SparkSession and SparkContext
    from pyspark.sql import SparkSession
    from pyspark import SparkContext

    # Create a Spark Session
    SpSession = SparkSession \
        .builder \
        .master("local[2]") \
        .appName("V2 Maestros") \
        .config("spark.executor.memory", "1g") \
        .config("spark.cores.max", "2") \
        .config("spark.sql.warehouse.dir", "file:///c:/temp/spark-warehouse") \
        .getOrCreate()

    # Get the Spark Context from Spark Session
    SpContext = SpSession.sparkContext

I get the following error, which points to python\lib\pyspark.zip\pyspark\java_gateway.py:

Exception: Java gateway process exited before sending the driver its port number

    import atexit
    import os
    import sys
    import select
    import signal
    import shlex
    import socket
    import platform
    from subprocess import Popen, PIPE

    if sys.version >= '3':
        xrange = range

    from py4j.java_gateway import java_import, JavaGateway, GatewayClient
    from py4j.java_collections import ListConverter

    from pyspark.serializers import read_int


    # patching ListConverter, or it will convert bytearray into Java ArrayList
    def can_convert_list(self, obj):
        return isinstance(obj, (list, tuple, xrange))

    ListConverter.can_convert = can_convert_list


    def launch_gateway():
        if "PYSPARK_GATEWAY_PORT" in os.environ:
            gateway_port = int(os.environ["PYSPARK_GATEWAY_PORT"])
        else:
            SPARK_HOME = os.environ["SPARK_HOME"]
            # Launch the Py4j gateway using Spark's run command so that we pick up the
            # proper classpath and settings from spark-env.sh
            on_windows = platform.system() == "Windows"
            script = "./bin/spark-submit.cmd" if on_windows else "./bin/spark-submit"
            submit_args = os.environ.get("PYSPARK_SUBMIT_ARGS", "pyspark-shell")
            if os.environ.get("SPARK_TESTING"):
                submit_args = ' '.join([
                    "--conf spark.ui.enabled=false",
                    submit_args
                ])
            command = [os.path.join(SPARK_HOME, script)] + shlex.split(submit_args)

            # Start a socket that will be used by PythonGatewayServer to communicate its port to us
            callback_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
            callback_socket.bind(('127.0.0.1', 0))
            callback_socket.listen(1)
            callback_host, callback_port = callback_socket.getsockname()
            env = dict(os.environ)
            env['_PYSPARK_DRIVER_CALLBACK_HOST'] = callback_host
            env['_PYSPARK_DRIVER_CALLBACK_PORT'] = str(callback_port)

            # Launch the Java gateway.
            # We open a pipe to stdin so that the Java gateway can die when the pipe is broken
            if not on_windows:
                # Don't send ctrl-c / SIGINT to the Java gateway:
                def preexec_func():
                    signal.signal(signal.SIGINT, signal.SIG_IGN)
                proc = Popen(command, stdin=PIPE, preexec_fn=preexec_func, env=env)
            else:
                # preexec_fn not supported on Windows
                proc = Popen(command, stdin=PIPE, env=env)

            gateway_port = None
            # We use select() here in order to avoid blocking indefinitely if the subprocess dies
            # before connecting
            while gateway_port is None and proc.poll() is None:
                timeout = 1  # (seconds)
                readable, _, _ = select.select([callback_socket], [], [], timeout)
                if callback_socket in readable:
                    gateway_connection = callback_socket.accept()[0]
                    # Determine which ephemeral port the server started on:
                    gateway_port = read_int(gateway_connection.makefile(mode="rb"))
                    gateway_connection.close()
                    callback_socket.close()
            if gateway_port is None:
                raise Exception("Java gateway process exited before sending the driver its port number")

            # In Windows, ensure the Java child processes do not linger after Python has exited.
            # In UNIX-based systems, the child process can kill itself on broken pipe (i.e. when
            # the parent process' stdin sends an EOF). In Windows, however, this is not possible
            # because java.lang.Process reads directly from the parent process' stdin, contending
            # with any opportunity to read an EOF from the parent. Note that this is only best
            # effort and will not take effect if the python process is violently terminated.
            if on_windows:
                # In Windows, the child process here is "spark-submit.cmd", not the JVM itself
                # (because the UNIX "exec" command is not available). This means we cannot simply
                # call proc.kill(), which kills only the "spark-submit.cmd" process but not the
                # JVMs. Instead, we use "taskkill" with the tree-kill option "/t" to terminate all
                # child processes in the tree (http://technet.microsoft.com/en-us/library/bb491009.aspx)
                def killChild():
                    Popen(["cmd", "/c", "taskkill", "/f", "/t", "/pid", str(proc.pid)])
                atexit.register(killChild)

        # Connect to the gateway
        gateway = JavaGateway(GatewayClient(port=gateway_port), auto_convert=True)

        # Import the classes used by PySpark
        java_import(gateway.jvm, "org.apache.spark.SparkConf")
        java_import(gateway.jvm, "org.apache.spark.api.java.*")
        java_import(gateway.jvm, "org.apache.spark.api.python.*")
        java_import(gateway.jvm, "org.apache.spark.ml.python.*")
        java_import(gateway.jvm, "org.apache.spark.mllib.api.python.*")
        # TODO(davies): move into sql
        java_import(gateway.jvm, "org.apache.spark.sql.*")
        java_import(gateway.jvm, "org.apache.spark.sql.hive.*")
        java_import(gateway.jvm, "scala.Tuple2")

        return gateway
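The `launch_gateway()` source above raises this exception whenever the `spark-submit` child process exits before reporting a port, so the message alone says little about the cause. The following is a minimal preflight sketch, assuming the common culprits are a missing `SPARK_HOME`, a missing launcher script, or no reachable `java` executable. `find_on_path` and `check_gateway_prereqs` are hypothetical helper names, not part of PySpark.

```python
import os
import platform


def find_on_path(name, env):
    """Return the first match for `name` on env's PATH, or None (hypothetical helper)."""
    extensions = [""]
    if platform.system() == "Windows":
        # On Windows, executables are resolved through the PATHEXT suffixes.
        extensions += env.get("PATHEXT", ".EXE;.CMD;.BAT").split(";")
    for directory in env.get("PATH", "").split(os.pathsep):
        if not directory:
            continue  # skip empty PATH entries
        for ext in extensions:
            candidate = os.path.join(directory, name + ext)
            if os.path.isfile(candidate):
                return candidate
    return None


def check_gateway_prereqs(env=None):
    """Collect likely reasons why spark-submit would exit before calling back."""
    env = os.environ if env is None else env
    on_windows = platform.system() == "Windows"
    problems = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
    else:
        # Same script names that launch_gateway() chooses between.
        script = "spark-submit.cmd" if on_windows else "spark-submit"
        if not os.path.isfile(os.path.join(spark_home, "bin", script)):
            problems.append("bin/%s not found under SPARK_HOME" % script)

    java_home = env.get("JAVA_HOME")
    if java_home:
        java = "java.exe" if on_windows else "java"
        if not os.path.isfile(os.path.join(java_home, "bin", java)):
            problems.append("JAVA_HOME does not contain bin/%s" % java)
    elif find_on_path("java", env) is None:
        problems.append("java not found: JAVA_HOME is unset and java is not on PATH")

    return problems
```

Calling `check_gateway_prereqs()` before building the session and printing the returned list gives a quick first hint. An empty list does not prove the setup is correct, only that these particular checks passed.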

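I am still new to Spark and PySpark, so I am unable to debug this myself. I have also looked at some other suggestions: "Spark + Python - Java gateway process exited before sending the driver its port number?" and "Pyspark: Exception: Java gateway process exited before sending the driver its port number"

But so far I have not been able to resolve it. Please help!

Here is what the Spark environment looks like: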

    # This script loads spark-env.sh if it exists, and ensures it is only loaded once.
    # spark-env.sh is loaded from SPARK_CONF_DIR if set, or within the current directory's
    # conf/ subdirectory.

    # Figure out where Spark is installed
    if [ -z "${SPARK_HOME}" ]; then
      export SPARK_HOME="$(cd "`dirname "$0"`"/..; pwd)"
    fi

    if [ -z "$SPARK_ENV_LOADED" ]; then
      export SPARK_ENV_LOADED=1

      # Returns the parent of the directory this script lives in.
      parent_dir="${SPARK_HOME}"

      user_conf_dir="${SPARK_CONF_DIR:-"$parent_dir"/conf}"

      if [ -f "${user_conf_dir}/spark-env.sh" ]; then
        # Promote all variable declarations to environment (exported) variables
        set -a
        . "${user_conf_dir}/spark-env.sh"
        set +a
      fi
    fi

    # Setting SPARK_SCALA_VERSION if not already set.
    if [ -z "$SPARK_SCALA_VERSION" ]; then
      ASSEMBLY_DIR2="${SPARK_HOME}/assembly/target/scala-2.11"
      ASSEMBLY_DIR1="${SPARK_HOME}/assembly/target/scala-2.10"

      if [[ -d "$ASSEMBLY_DIR2" && -d "$ASSEMBLY_DIR1" ]]; then
        echo -e "Presence of build for both scala versions(SCALA 2.10 and SCALA 2.11) detected." 1>&2
        echo -e 'Either clean one of them or, export SPARK_SCALA_VERSION=2.11 in spark-env.sh.' 1>&2
        exit 1
      fi

      if [ -d "$ASSEMBLY_DIR2" ]; then
        export SPARK_SCALA_VERSION="2.11"
      else
        export SPARK_SCALA_VERSION="2.10"
      fi
    fi

Here is how my Spark environment is set up in Python:

    import os
    import sys

    # NOTE: Please change the folder paths to your current setup.
    # Windows
    if sys.platform.startswith('win'):
        # Where you downloaded the resource bundle
        os.chdir("E:/Udemy - Spark/SparkPythonDoBigDataAnalytics-Resources")
        # Where you installed spark.
        os.environ['SPARK_HOME'] = 'E:/Udemy - Spark/Apache Spark/spark-2.0.0-bin-hadoop2.7'
    # other platforms - linux/mac
    else:
        os.chdir("/Users/kponnambalam/Dropbox/V2Maestros/Modules/Apache Spark/Python")
        os.environ['SPARK_HOME'] = '/users/kponnambalam/products/spark-2.0.0-bin-hadoop2.7'

    os.curdir

    # Create a variable for our root path
    SPARK_HOME = os.environ['SPARK_HOME']

    # Add the following paths to the system path. Please check your installation
    # to make sure that these zip files actually exist. The names might change
    # as versions change.
    sys.path.insert(0, os.path.join(SPARK_HOME, "python"))
    sys.path.insert(0, os.path.join(SPARK_HOME, "python", "lib"))
    sys.path.insert(0, os.path.join(SPARK_HOME, "python", "lib", "pyspark.zip"))
    sys.path.insert(0, os.path.join(SPARK_HOME, "python", "lib", "py4j-0.10.1-src.zip"))

    # Initialize SparkSession and SparkContext
    from pyspark.sql import SparkSession
    from pyspark import SparkContext
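The comments in the setup above warn that the zip file names change between Spark versions. One way to avoid hard-coding `py4j-0.10.1-src.zip` is to look the archive up with a glob and fail early if anything is missing. This is only a sketch; `add_pyspark_to_path` is a hypothetical helper name, and it assumes the archives live under `python/lib` as in the setup above.

```python
import glob
import os
import sys


def add_pyspark_to_path(spark_home, path=None):
    """Put Spark's Python sources on `path` (defaults to sys.path).

    Raises IOError if pyspark.zip or a py4j-*-src.zip archive is missing.
    """
    path = sys.path if path is None else path
    lib_dir = os.path.join(spark_home, "python", "lib")

    pyspark_zip = os.path.join(lib_dir, "pyspark.zip")
    if not os.path.isfile(pyspark_zip):
        raise IOError("pyspark.zip not found in %s" % lib_dir)

    # The py4j version is part of the file name, so match it with a wildcard.
    py4j_zips = sorted(glob.glob(os.path.join(lib_dir, "py4j-*-src.zip")))
    if not py4j_zips:
        raise IOError("no py4j-*-src.zip found in %s" % lib_dir)

    # If several py4j archives exist, take the last one in name order
    # (an arbitrary choice for this sketch).
    archives = [pyspark_zip, py4j_zips[-1]]
    path.insert(0, os.path.join(spark_home, "python"))
    for archive in archives:
        path.insert(0, archive)
    return archives
```

With this in place, `add_pyspark_to_path(os.environ['SPARK_HOME'])` would replace the four `sys.path.insert` calls, and a wrong `SPARK_HOME` shows up as an `IOError` naming the directory instead of a later import failure.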

1 Answer

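After reading many posts, I finally got Spark working on my Windows laptop. I use Anaconda Python, but I am sure this will work with the standard distribution too.

First, make sure you can run Spark on its own. I am assuming you have a valid Python path and Java installed. For Java, I have "C:\ProgramData\Oracle\Java\javapath" defined in my Path, which redirects to my Java 8 bin folder.

Go to %SPARK_HOME%\bin and try to run pyspark, which is the Python Spark shell. If your environment is like mine, you will see an exception about being unable to find winutils and Hadoop. The second exception will be about Hive missing:

pyspark.sql.utils.IllegalArgumentException: u"Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':"

I then found and simply followed https://jaceklaskowski.gitbooks.io/mastering-apache-spark/spark-tips-and-tricks-running-spark-windows.html.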
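The winutils exception usually means that Hadoop's Windows helper binary cannot be located. A small sketch for checking this from Python, assuming the conventional layout in which `winutils.exe` sits in `%HADOOP_HOME%\bin`; `find_winutils` is a hypothetical helper name, not a Spark or Hadoop API.

```python
import os


def find_winutils(env=None):
    """Return the path to winutils.exe under HADOOP_HOME\\bin, or None if absent."""
    env = os.environ if env is None else env
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        return None  # HADOOP_HOME is not set at all
    candidate = os.path.join(hadoop_home, "bin", "winutils.exe")
    return candidate if os.path.isfile(candidate) else None
```

If `find_winutils()` returns `None` on Windows, setting `HADOOP_HOME` and placing `winutils.exe` in its `bin` folder is the first thing to try.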

I hope this helps and that you can enjoy running Spark code locally.
