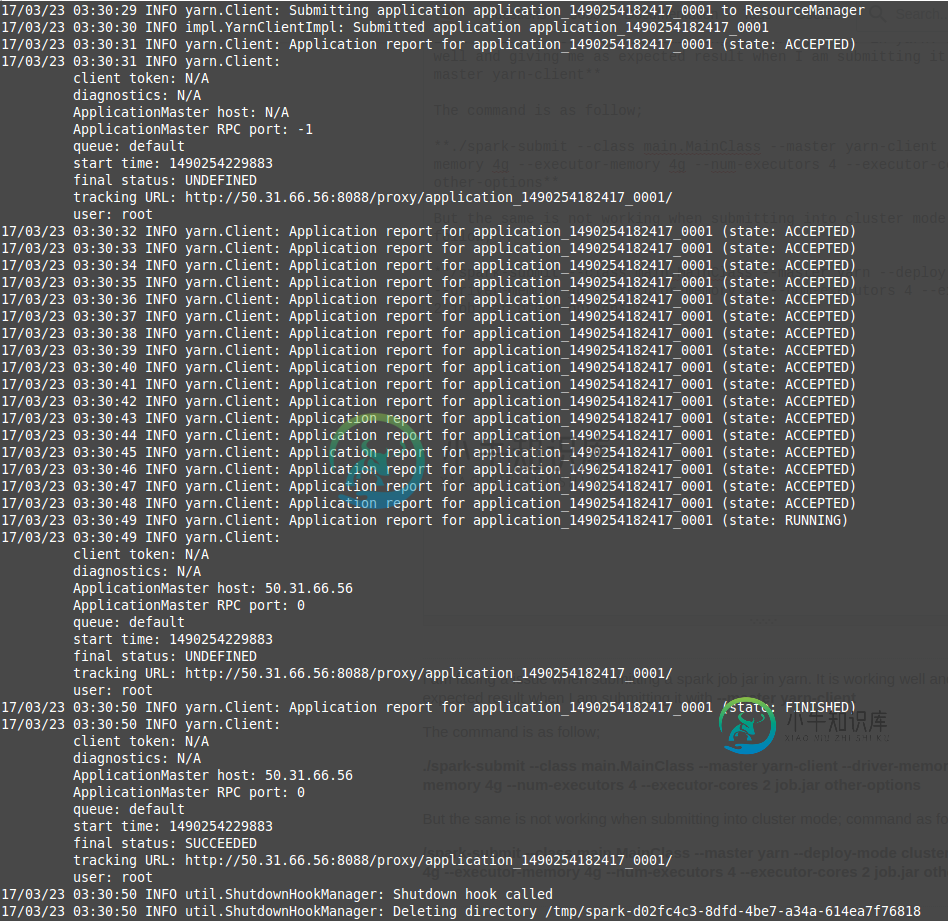

Spark作业对纱线客户端正常工作,但对纱线簇完全不工作

我正面临一个问题,当提交一个火花作业罐子在纱。当我用-master yarn-client提交它时,它工作得很好,并给出了我预期的结果

命令如下所示;

./spark-submit--类main.mainclass--主纱--客户端--驱动程序--内存4G--执行器--内存4G--num-执行器4--执行器-核心2 job.jar其他--选项

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>128</value>

<description>Minimum limit of memory to allocate to each container request at the Resource Manager.</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>20048</value>

<description>Maximum limit of memory to allocate to each container request at the Resource Manager.</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

<description>The minimum allocation for every container request at the RM, in terms of virtual CPU cores. Requests lower than this won't take effect, and the specified value will get allocated the minimum.</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>2</value>

<description>The maximum allocation for every container request at the RM, in terms of virtual CPU cores. Requests higher than this won't take effect, and will get capped to this value.</description>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>24096</value>

<description>Physical memory, in MB, to be made available to running containers</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>4</value>

<description>Number of CPU cores that can be allocated for containers.</description>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

17/03/23 03:30:44 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3315fed4{/static,null,AVAILABLE}

17/03/23 03:30:44 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3e430b9a{/,null,AVAILABLE}

17/03/23 03:30:44 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@77184f65{/api,null,AVAILABLE}

17/03/23 03:30:44 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@643f7b84{/stages/stage/kill,null,AVAILABLE}

17/03/23 03:30:44 INFO server.ServerConnector: Started ServerConnector@27614db2{HTTP/1.1}{0.0.0.0:37212}

17/03/23 03:30:44 INFO server.Server: Started @7799ms

17/03/23 03:30:44 INFO util.Utils: Successfully started service 'SparkUI' on port 37212.

17/03/23 03:30:44 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://50.31.66.56:37212

17/03/23 03:30:44 INFO cluster.YarnClusterScheduler: Created YarnClusterScheduler

17/03/23 03:30:44 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1490254182417_0001 and attemptId Some(appattempt_1490254182417_0001_000001)

17/03/23 03:30:44 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 45469.

17/03/23 03:30:44 INFO netty.NettyBlockTransferService: Server created on 50.31.66.56:45469

17/03/23 03:30:44 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 50.31.66.56, 45469)

17/03/23 03:30:44 INFO storage.BlockManagerMasterEndpoint: Registering block manager 50.31.66.56:45469 with 2004.6 MB RAM, BlockManagerId(driver, 50.31.66.56, 45469)

17/03/23 03:30:44 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 50.31.66.56, 45469)

17/03/23 03:30:44 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@60245f4e{/metrics/json,null,AVAILABLE}

17/03/23 03:30:49 INFO scheduler.EventLoggingListener: Logging events to hdfs://mecku-1:54310/spark/application_1490254182417_0001_1

17/03/23 03:30:49 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(spark://YarnAM@50.31.66.56:50465)

17/03/23 03:30:49 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8030

17/03/23 03:30:49 INFO yarn.YarnRMClient: Registering the ApplicationMaster

17/03/23 03:30:49 INFO yarn.YarnAllocator: Will request 4 executor containers, each with 2 cores and 4505 MB memory including 409 MB overhead

17/03/23 03:30:49 INFO yarn.YarnAllocator: Canceled 0 container requests (locality no longer needed)

17/03/23 03:30:49 INFO yarn.YarnAllocator: Submitted container request (host: Any, capability: <memory:4505, vCores:2>)

17/03/23 03:30:49 INFO yarn.YarnAllocator: Submitted container request (host: Any, capability: <memory:4505, vCores:2>)

17/03/23 03:30:49 INFO yarn.YarnAllocator: Submitted container request (host: Any, capability: <memory:4505, vCores:2>)

17/03/23 03:30:49 INFO yarn.YarnAllocator: Submitted container request (host: Any, capability: <memory:4505, vCores:2>)

17/03/23 03:30:49 INFO yarn.ApplicationMaster: Started progress reporter thread with (heartbeat : 3000, initial allocation : 200) intervals

17/03/23 03:30:49 INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with SUCCEEDED

17/03/23 03:30:49 INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

17/03/23 03:30:49 INFO yarn.ApplicationMaster: Deleting staging directory hdfs://localhost:54310/user/root/.sparkStaging/application_1490254182417_0001

17/03/23 03:30:49 INFO storage.DiskBlockManager: Shutdown hook called

17/03/23 03:30:49 INFO util.ShutdownHookManager: Shutdown hook called

17/03/23 03:30:49 INFO util.ShutdownHookManager: Deleting directory /tmp/hadoop-root/nm-local-dir/usercache/root/appcache/application_1490254182417_0001/spark-d77de654-4040-4b43-8155-efb155008b4b

17/03/23 03:30:49 INFO util.ShutdownHookManager: Deleting directory /tmp/hadoop-root/nm-local-dir/usercache/root/appcache/application_1490254182417_0001/spark-d77de654-4040-4b43-8155-efb155008b4b/userFiles-d71596df-df26-4b88-b51e-f0b962daf84a

17/03/23 03:30:40 INFO yarn.ApplicationMaster: ApplicationAttemptId: appattempt_1490254182417_0001_000001

共有1个答案

也许,你的从节点不工作了。您应该检查下面的节点命令,

sudo -u yarn yarn node -list

如果你找不到所有的节点,你应该修复节点的设置。例如,selinux off(检查getenfore),以及每个节点的yarn-site.xml和core-site.xml。

-

我正在使用spark submit执行以下命令: spark submit script\u测试。py—主纱线—部署模式群集spark submit script\u测试。py—主纱线簇—部署模式簇 这工作做得很好。我可以在Spark History Server UI下看到它。但是,我无法在RessourceManager UI(纱线)下看到它。 我感觉我的作业没有发送到集群,但它只在一个节点上

-

aws上的3台机器(32个内核和64 GB内存) 我手动安装了带有hdfs和yarn服务的Hadoop2(没有使用EMR)。 机器#1运行hdfs-(NameNode&SeconderyNameNode)和yarn-(resourcemanager),在masters文件中定义 问题是,我认为我做错了,因为这项工作需要相当多的时间,大约一个小时,我认为它不是很优化。 我使用以下命令运行flink:

-

我试图通过以下命令向CDH纱线集群提交spark作业 我试过几种组合,但都不起作用。。。现在,我的本地/root以及HDFS/user/root/lib中都有所有poi JAR,因此我尝试了以下方法 如何将JAR分发到所有集群节点?因为上面这些都不起作用,作业仍然无法引用该类,因为我一直收到相同的错误: 同样的命令也适用于“--master本地”,但没有指定--jar,因为我已经将我的jar复制到

-

首先,我想说的是我看到的解决这个问题的唯一方法是:Spark 1.6.1 SASL。但是,在为spark和yarn认证添加配置时,仍然不起作用。下面是我在Amazon's EMR的一个yarn集群上使用spark-submit对spark的配置: 注意,我用代码将spark.authenticate添加到了sparkContext的hadoop配置中,而不是core-site.xml(我假设我可以

-

即使是一个简单的WordCount mapduce也会因相同的错误而失败。 Hadoop 2.6.0 下面是纱线原木。 似乎在资源协商期间发生了某种超时 但我无法验证这一点,即超时的确切原因。 2016-11-11 15:38:09313信息组织。阿帕奇。hadoop。纱线服务器resourcemanager。amlauncher。AMLauncher:启动appattempt\u 1478856

-

此代码在本地主机上完美工作,但在线时需要太长时间才能进入下一页,并且在线时不会向手机发送短信。虽然它正在生成密码,但没有将其发送到手机,并且需要太多时间来生成。但是本地主机正在发送msg。