How to implement an IIR band-pass filter for input audio on Android

How do I implement an IIR band-pass filter in my current Android code? I have an Android app that records audio (a specific frequency, actually) and saves it to a .wav file.

I have managed to find an IIR filter library online, but I am not sure how to use it in my code.

https://github.com/ddf/Minim/blob/master/src/ddf/minim/effects/BandPass.java https://github.com/DASAR/Minim-Android/blob/master/src/ddf/minim/effects/IIRFilter.java

I am supposed to add an 18 kHz to 20 kHz band-pass filter to the code before the received sound signal is written to the .wav file.

My current code

package com.example.audio;

import ddf.minim.effects.*;

import java.io.BufferedInputStream;
import java.io.BufferedOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.File;
import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

import com.varma.samples.audiorecorder.R;

import android.app.Activity;
import android.content.Context;
import android.media.AudioFormat;
import android.media.AudioManager;
import android.media.AudioRecord;
import android.media.AudioTrack;
import android.media.MediaRecorder;
import android.media.MediaScannerConnection;
import android.os.Bundle;
import android.os.Environment;
import android.os.Handler;
import android.os.Message;
import android.text.SpannableStringBuilder;
import android.text.style.RelativeSizeSpan;
import android.util.Log;
import android.view.View;
import android.view.View.OnClickListener;
import android.widget.Button;
import android.widget.LinearLayout;
import android.widget.TextView;
import android.widget.Toast;

public class RecorderActivity extends Activity {

    private static final int RECORDER_BPP = 16;
    private static final String AUDIO_RECORDER_FILE_EXT_WAV = ".wav";
    private static final String AUDIO_RECORDER_FOLDER = "AudioRecorder";
    private static final String AUDIO_RECORDER_TEMP_FILE = "record_temp.raw";
    private static final int RECORDER_SAMPLERATE = 44100;// 44100; //18000
    private static final int RECORDER_CHANNELS = AudioFormat.CHANNEL_IN_STEREO; //AudioFormat.CHANNEL_IN_STEREO;
    private static final int RECORDER_AUDIO_ENCODING = AudioFormat.ENCODING_PCM_16BIT;
    private static final int PLAY_CHANNELS = AudioFormat.CHANNEL_OUT_STEREO; //AudioFormat.CHANNEL_OUT_STEREO;
    private static final int FREQUENCY_LEFT = 2000; //Original:18000 (16 Dec)
    private static final int FREQUENCY_RIGHT = 2000; //Original:18000 (16 Dec)
    private static final int AMPLITUDE_LEFT = 1;
    private static final int AMPLITUDE_RIGHT = 1;
    private static final int DURATION_SECOND = 10;
    private static final int SAMPLE_RATE = 44100;
    private static final float SWEEP_RANGE = 1000.0f;

    String store;
    private AudioRecord recorder = null;
    private int bufferSize = 0;
    private Thread recordingThread = null;
    private boolean isRecording = false;

    double time;
    float[] buffer1;
    float[] buffer2;
    byte[] byteBuffer1;
    byte[] byteBuffer2;
    byte[] byteBufferFinal;
    int bufferIndex;
    short x;
    short y;
    AudioTrack audioTrack;

    Button btnPlay, btnStart, btnStop;

    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);
        setButtonHandlers();
        enableButtons(false);

        btnPlay = (Button) findViewById(R.id.btnPlay);
        btnStop = (Button) findViewById(R.id.btnStop);
        btnStart = (Button) findViewById(R.id.btnStart);

        bufferSize = AudioRecord.getMinBufferSize(RECORDER_SAMPLERATE, RECORDER_CHANNELS, RECORDER_AUDIO_ENCODING);

        buffer1 = new float[(int) (DURATION_SECOND * SAMPLE_RATE)];
        buffer2 = new float[(int) (DURATION_SECOND * SAMPLE_RATE)];

        float f1 = 0.0f, f2 = 0.0f;
        for (int sample = 0, step = 0; sample < buffer1.length; sample++) {
            time = sample / (SAMPLE_RATE * 1.0);
            //f1 = (float)(FREQUENCY_LEFT + ((sample / (buffer1.length * 1.0)) * SWEEP_RANGE)); // frequency sweep
            //f2 = (float)(FREQUENCY_RIGHT + ((sample / (buffer1.length * 1.0)) * SWEEP_RANGE)); // frequency sweep
            f1 = FREQUENCY_LEFT; // static frequency
            f2 = FREQUENCY_RIGHT; // static frequency
            buffer1[sample] = (float) (AMPLITUDE_LEFT * Math.sin(2 * Math.PI * f1 * time));
            buffer2[sample] = (float) (AMPLITUDE_RIGHT * Math.sin(2 * Math.PI * f2 * time));
        }

        byteBuffer1 = new byte[buffer1.length * 2]; // two bytes per audio frame, 16 bits
        for (int i = 0, bufferIndex = 0; i < byteBuffer1.length; i++) {
            x = (short) (buffer1[bufferIndex++] * 32767.0); // [2^16 - 1]/2 = 32767.0
            byteBuffer1[i] = (byte) x; // low byte
            byteBuffer1[++i] = (byte) (x >>> 8); // high byte
        }

        byteBuffer2 = new byte[buffer2.length * 2];
        for (int j = 0, bufferIndex = 0; j < byteBuffer2.length; j++) {
            y = (short) (buffer2[bufferIndex++] * 32767.0);
            byteBuffer2[j] = (byte) y; // low byte
            byteBuffer2[++j] = (byte) (y >>> 8); // high byte
        }

        byteBufferFinal = new byte[byteBuffer1.length * 2];
        // LL RR LL RR LL RR
        for (int k = 0, index = 0; index < byteBufferFinal.length - 4; k = k + 2) {
            byteBufferFinal[index] = byteBuffer1[k]; // LEFT {0,1/4,5/8,9/12,13;...}
            byteBufferFinal[index + 1] = byteBuffer1[k + 1];
            index = index + 2;
            byteBufferFinal[index] = byteBuffer2[k]; // RIGHT {2,3/6,7/10,11;...}
            byteBufferFinal[index + 1] = byteBuffer2[k + 1];
            index = index + 2;
        }

        try {
            FileOutputStream ss = new FileOutputStream(Environment.getExternalStorageDirectory().getPath() + "/" + AUDIO_RECORDER_FOLDER + "/source.txt");
            ss.write(byteBufferFinal);
            ss.flush();
            ss.close();
        }
        catch (IOException ioe){
            Log.e("IO Error","Write source error.");
        }
    }

    private void setButtonHandlers() {
        ((Button) findViewById(R.id.btnStart)).setOnClickListener(startClick);
        ((Button) findViewById(R.id.btnStop)).setOnClickListener(stopClick);
        ((Button) findViewById(R.id.btnPlay)).setOnClickListener(playClick);
    }

    private void enableButton(int id, boolean isEnable) {
        ((Button) findViewById(id)).setEnabled(isEnable);
    }

    private void enableButtons(boolean isRecording) {
        enableButton(R.id.btnStart, !isRecording);
        enableButton(R.id.btnStop, isRecording);
        enableButton(R.id.btnPlay, isRecording);
    }

    private String getFilename() {
        String filepath = Environment.getExternalStorageDirectory().getPath();
        File file = new File(filepath, AUDIO_RECORDER_FOLDER);
        if (!file.exists()) {
            file.mkdirs();
        }
        MediaScannerConnection.scanFile(this, new String[]{filepath}, null, null);
        store = file.getAbsolutePath() + "/" + "Audio"
                + AUDIO_RECORDER_FILE_EXT_WAV;
        return store;
    }

    private String getTempFilename() {
        String filepath = Environment.getExternalStorageDirectory().getPath();
        File file = new File(filepath, AUDIO_RECORDER_FOLDER);
        if (!file.exists()) {
            file.mkdirs();
        }
        File tempFile = new File(filepath, AUDIO_RECORDER_TEMP_FILE);
        if (tempFile.exists())
            tempFile.delete();
        return (file.getAbsolutePath() + "/" + AUDIO_RECORDER_TEMP_FILE);
    }

    private void startRecording() {
        //BandPass bandpass = new BandPass(19000,2000,44100);
        /* BandPass bandpass = new BandPass(50,2,SAMPLE_RATE);
        int [] freqR = {FREQUENCY_RIGHT};
        int [] freqL = {FREQUENCY_LEFT};
        float[] testL = shortToFloat(freqR);
        float [] testR = shortToFloat(freqL);
        bandpass.process(testL,testR);
        bandpass.printCoeff();
        */

        recorder = new AudioRecord(MediaRecorder.AudioSource.CAMCORDER,
                RECORDER_SAMPLERATE, RECORDER_CHANNELS,
                RECORDER_AUDIO_ENCODING, bufferSize);

        AudioManager am = (AudioManager)getSystemService(Context.AUDIO_SERVICE);
        am.setStreamVolume(AudioManager.STREAM_MUSIC, am.getStreamMaxVolume(AudioManager.STREAM_MUSIC), 0);

        /*
         * AudioTrack audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC,
         * (int) RECORDER_SAMPLERATE,AudioFormat.CHANNEL_OUT_STEREO,
         * AudioFormat.ENCODING_PCM_16BIT, bufferSize, AudioTrack.MODE_STREAM);
         */
        audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC,
                (int) SAMPLE_RATE, PLAY_CHANNELS,
                AudioFormat.ENCODING_PCM_16BIT, byteBufferFinal.length,
                AudioTrack.MODE_STATIC);
        audioTrack.write(byteBufferFinal, 0, byteBufferFinal.length);
        audioTrack.play();

        BandPass bandpass = new BandPass(50,2,SAMPLE_RATE);
        int [] freqR = {FREQUENCY_RIGHT};
        int [] freqL = {FREQUENCY_LEFT};
        float[] testL = shortToFloat(freqR);
        float [] testR = shortToFloat(freqL);
        bandpass.process(testL,testR);
        bandpass.printCoeff();

        audioTrack.setPlaybackRate(RECORDER_SAMPLERATE);
        recorder.startRecording();
        isRecording = true;

        recordingThread = new Thread(new Runnable() {
            @Override
            public void run() {
                try {
                    writeAudioDataToFile();
                } catch (IOException e) {
                    // TODO Auto-generated catch block
                    e.printStackTrace();
                }
            }
        }, "AudioRecorder Thread");
        recordingThread.start();
    }

    double[][] deinterleaveData(double[] samples, int numChannels) {
        // assert(samples.length() % numChannels == 0);
        int numFrames = samples.length / numChannels;
        double[][] result = new double[numChannels][];
        for (int ch = 0; ch < numChannels; ch++) {
            result[ch] = new double[numFrames];
            for (int i = 0; i < numFrames; i++) {
                result[ch][i] = samples[numChannels * i + ch];
            }
        }
        return result;
    }

    private void writeAudioDataToFile() throws IOException {
        int read = 0;
        byte data[] = new byte[bufferSize];
        String filename = getTempFilename();
        FileOutputStream os = null;
        FileOutputStream rs = null;
        try {
            os = new FileOutputStream(filename);
            rs = new FileOutputStream(getFilename().split(".wav")[0] + ".txt");
        } catch (FileNotFoundException e) {
            // TODO Auto-generated catch block
            e.printStackTrace();
        }

        if (null != os) {
            while (isRecording) {
                read = recorder.read(data, 0, bufferSize);
                if (AudioRecord.ERROR_INVALID_OPERATION != read) {
                    try {
                        os.write(data);
                        rs.write(data);
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }
            try {
                os.close();
                rs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    private void stopRecording() {
        if (null != recorder) {
            isRecording = false;
            audioTrack.flush();
            audioTrack.stop();
            audioTrack.release();
            recorder.stop();
            recorder.release();
            recorder = null;
            recordingThread = null;
        }
        copyWaveFile(getTempFilename(), getFilename());
        deleteTempFile();
        MediaScannerConnection.scanFile(this, new String[]{getFilename()}, null, null);
        AudioManager am = (AudioManager)getSystemService(Context.AUDIO_SERVICE);
        am.setStreamVolume(AudioManager.STREAM_MUSIC, 0, 0);
    }

    private void deleteTempFile() {
        File file = new File(getTempFilename());
        file.delete();
    }

    private void copyWaveFile(String inFilename, String outFilename) {
        FileInputStream in = null;
        FileOutputStream out = null;
        long totalAudioLen = 0;
        long totalDataLen = totalAudioLen + 36;
        long longSampleRate = RECORDER_SAMPLERATE;
        int channels = 2;
        long byteRate = RECORDER_BPP * RECORDER_SAMPLERATE * channels / 8;
        byte[] data = new byte[bufferSize];
        try {
            in = new FileInputStream(inFilename);
            out = new FileOutputStream(outFilename);
            totalAudioLen = in.getChannel().size();
            totalDataLen = totalAudioLen + 36;
            WriteWaveFileHeader(out, totalAudioLen, totalDataLen,
                    longSampleRate, channels, byteRate);
            while (in.read(data) != -1) {
                out.write(data);
            }
            in.close();
            out.close();
        } catch (FileNotFoundException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private void playWaveFile() {
        String filepath = store;
        Log.d("PLAYWAVEFILE", "I AM INSIDE");
        // define the buffer size for audio track
        int minBufferSize = AudioTrack.getMinBufferSize(8000,
                AudioFormat.CHANNEL_OUT_STEREO, AudioFormat.ENCODING_PCM_16BIT);
        int bufferSize = 512;
        audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC,
                (int) RECORDER_SAMPLERATE, AudioFormat.CHANNEL_OUT_STEREO,
                AudioFormat.ENCODING_PCM_16BIT, minBufferSize,
                AudioTrack.MODE_STREAM);
        int count = 0;
        byte[] data = new byte[bufferSize];
        try {
            FileInputStream fileInputStream = new FileInputStream(filepath);
            DataInputStream dataInputStream = new DataInputStream(
                    fileInputStream);
            audioTrack.play();
            Toast.makeText(RecorderActivity.this, "this is my Toast message!!! =)",
                    Toast.LENGTH_LONG).show();
            while ((count = dataInputStream.read(data, 0, bufferSize)) > -1) {
                Log.d("PLAYWAVEFILE", "WHILE INSIDE");
                audioTrack.write(data, 0, count);
                //BandPass bandpass = new BandPass(19000,2000,44100); //Actual
                //BandPass bandpass = new BandPass(5000,2000,44100); //Test
                //int [] freqR = {FREQUENCY_RIGHT};
                //int [] freqL = {FREQUENCY_LEFT};
                //float[] testR = shortToFloat(freqR);
                //float [] testL = shortToFloat(freqL);
                //bandpass.process(testR,testL);
                // BandPass bandpass = new BandPass(19000,2000,44100);
                //float bw = bandpass.getBandWidth();
                //float hello = bandpass.getBandWidth();
                //float freq = bandpass.frequency();
                //float[] test = {FREQUENCY_RIGHT,FREQUENCY_LEFT};
                //shortToFloat(test);
                //test [0] = FREQUENCY_RIGHT;
                //test [1] = FREQUENCY_LEFT;
                //bandpass.process(FREQUENCY_LEFT,FREQUENCY_RIGHT);
                //Log.d("MyApp","I am here");
                //Log.d("ADebugTag", "Valueeees: " + Float.toString(hello));
                //Log.d("Bandwidth: " , "Bandwidth: " + Float.toString(bw));
                //Log.d("Frequency: " , "Frequency is " + Float.toString(freq));
                //bandpass.setBandWidth(20);
                //bandpass.printCoeff();
            }
            audioTrack.stop();
            audioTrack.release();
            dataInputStream.close();
            fileInputStream.close();
        } catch (FileNotFoundException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private void WriteWaveFileHeader(FileOutputStream out, long totalAudioLen,
            long totalDataLen, long longSampleRate, int channels, long byteRate)
            throws IOException {
        byte[] header = new byte[44];
        header[0] = 'R'; // RIFF/WAVE header
        header[1] = 'I';
        header[2] = 'F';
        header[3] = 'F';
        header[4] = (byte) (totalDataLen & 0xff);
        header[5] = (byte) ((totalDataLen >> 8) & 0xff);
        header[6] = (byte) ((totalDataLen >> 16) & 0xff);
        header[7] = (byte) ((totalDataLen >> 24) & 0xff);
        header[8] = 'W';
        header[9] = 'A';
        header[10] = 'V';
        header[11] = 'E';
        header[12] = 'f'; // 'fmt ' chunk
        header[13] = 'm';
        header[14] = 't';
        header[15] = ' ';
        header[16] = 16; // 4 bytes: size of 'fmt ' chunk
        header[17] = 0;
        header[18] = 0;
        header[19] = 0;
        header[20] = 1; // format = 1
        header[21] = 0;
        header[22] = (byte) channels;
        header[23] = 0;
        header[24] = (byte) (longSampleRate & 0xff);
        header[25] = (byte) ((longSampleRate >> 8) & 0xff);
        header[26] = (byte) ((longSampleRate >> 16) & 0xff);
        header[27] = (byte) ((longSampleRate >> 24) & 0xff);
        header[28] = (byte) (byteRate & 0xff);
        header[29] = (byte) ((byteRate >> 8) & 0xff);
        header[30] = (byte) ((byteRate >> 16) & 0xff);
        header[31] = (byte) ((byteRate >> 24) & 0xff);
        header[32] = (byte) (2 * 16 / 8); // block align
        header[33] = 0;
        header[34] = RECORDER_BPP; // bits per sample
        header[35] = 0;
        header[36] = 'd';
        header[37] = 'a';
        header[38] = 't';
        header[39] = 'a';
        header[40] = (byte) (totalAudioLen & 0xff);
        header[41] = (byte) ((totalAudioLen >> 8) & 0xff);
        header[42] = (byte) ((totalAudioLen >> 16) & 0xff);
        header[43] = (byte) ((totalAudioLen >> 24) & 0xff);
        out.write(header, 0, 44);
    }

    private View.OnClickListener startClick = new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            // TODO Auto-generated method stub
            Thread recordThread = new Thread(new Runnable() {
                @Override
                public void run() {
                    isRecording = true;
                    startRecording();
                }
            });
            recordThread.start();
            btnStart.setEnabled(false);
            btnStop.setEnabled(true);
            btnPlay.setEnabled(false);
        }
    };

    private View.OnClickListener stopClick = new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            new Handler().postDelayed(new Runnable() {
                @Override
                public void run() {
                    // TODO Auto-generated method stub
                    stopRecording();
                    enableButtons(false);
                    btnPlay.setEnabled(true);
                    // stop();
                }
            }, 100);
        }
    };

    private View.OnClickListener playClick = new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            // TODO Auto-generated method stub
            playWaveFile();
            btnPlay.setEnabled(true);
            String filepath = store;
            final String promptPlayRecord = "PlayWaveFile()\n" + filepath;
            SpannableStringBuilder biggerText = new SpannableStringBuilder(promptPlayRecord);
            biggerText.setSpan(new RelativeSizeSpan(2.05f), 0, promptPlayRecord.length(), 0);
            Toast.makeText(RecorderActivity.this, biggerText, Toast.LENGTH_LONG).show();
        }
    };
}

The method below converts my 16-bit integers to float, since the library uses float.

/**
 * Convert int[] audio to 32 bit float format.
 * From [-32768,32768] to [-1,1]
 * @param audio
 */
private float[] shortToFloat(int[] audio) {
    Log.d("SHORTTOFLOAT","INSIDE SHORTTOFLOAT");
    float[] converted = new float[audio.length];
    for (int i = 0; i < converted.length; i++) {
        // [-32768,32768] -> [-1,1]
        converted[i] = audio[i] / 32768f; /* default range for Android PCM audio buffers) */
    }
    return converted;
}

My attempt at implementing the band-pass filter in the "SaveRecording" method

//BandPass bandpass = new BandPass(19000,2000,44100);

Since I am trying to implement a range of 18k to 20k, I input the above values to the bandpass filter.

BandPass bandpass = new BandPass(50,2,44100); (This is just to test if the frequency has any changes since 18k-20k is not within human range)

int [] freqR = {FREQUENCY_RIGHT};
int [] freqL = {FREQUENCY_LEFT};
float[] testL = shortToFloat(freqR);
float [] testR = shortToFloat(freqL);
bandpass.process(testL,testR);
bandpass.printCoeff();

Since I am recording in stereo, I am using public final synchronized void process(float[] sigLeft, float[] sigRight) {}, which is found in the IIRFilter.java class.

However, even with the above in place, I do not hear any difference. What am I doing wrong? Can anyone advise or help me?

Thank you so much! I am completely new to signal processing, so any tips on how to proceed are greatly appreciated!

Update

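Since I have to output the filtered sound signal to the .wav file, I thought the way to do it was to put the band-pass filter in the "StartRecording" method. However, it is not working. What am I doing wrong?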

private void startRecording() {
    int count = 0;
    recorder = new AudioRecord(MediaRecorder.AudioSource.CAMCORDER,
            RECORDER_SAMPLERATE, RECORDER_CHANNELS,
            RECORDER_AUDIO_ENCODING, bufferSize);

    AudioManager am = (AudioManager)getSystemService(Context.AUDIO_SERVICE);
    am.setStreamVolume(AudioManager.STREAM_MUSIC, am.getStreamMaxVolume(AudioManager.STREAM_MUSIC), 0);

    audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC,
            (int) SAMPLE_RATE, PLAY_CHANNELS,
            AudioFormat.ENCODING_PCM_16BIT, byteBufferFinal.length,
            AudioTrack.MODE_STATIC);

    BandPass bandpass = new BandPass(19000,2000,44100);
    float[][] signals = deinterleaveData(byteToFloat(byteBufferFinal), 2);
    bandpass.process(signals[0], signals[1]);
    audioTrack.write(interleaveData(signals), 0, count, WRITE_NON_BLOCKING);
    audioTrack.play();
    //audioTrack.write(byteBufferFinal, 0, byteBufferFinal.length); //Original

    audioTrack.setPlaybackRate(RECORDER_SAMPLERATE);
    recorder.startRecording();
    isRecording = true;

    recordingThread = new Thread(new Runnable() {
        @Override
        public void run() {
            try {
                writeAudioDataToFile();
            } catch (IOException e) {
                // TODO Auto-generated catch block
                e.printStackTrace();
            }
        }
    }, "AudioRecorder Thread");
    recordingThread.start();
}

Can this be considered filtered? What characteristics should I look for to make sure it has been filtered correctly?

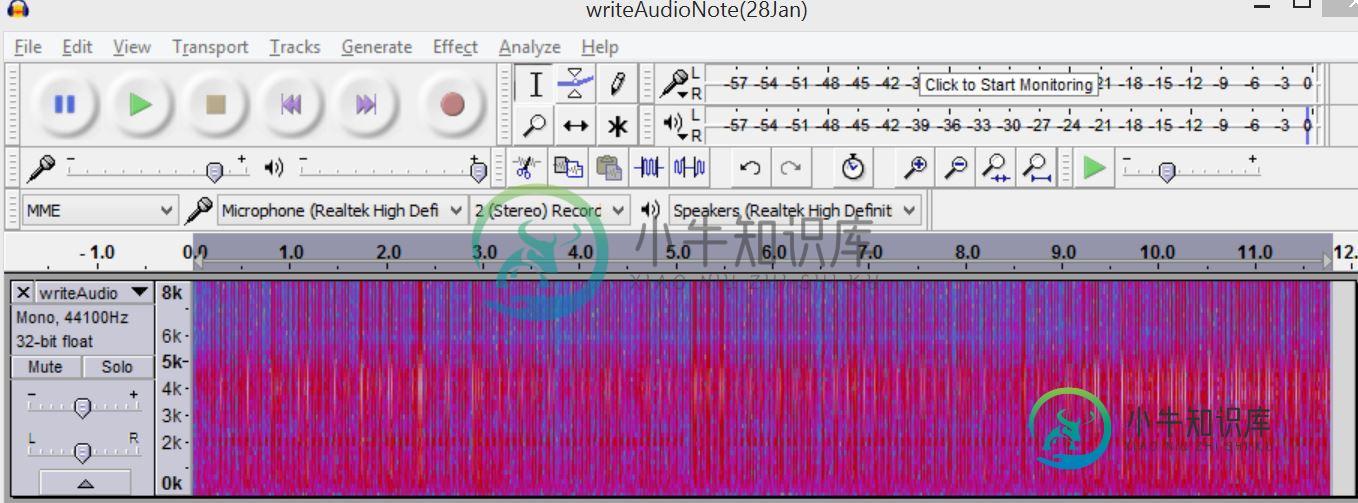

The image above was produced by pressing the black triangle.

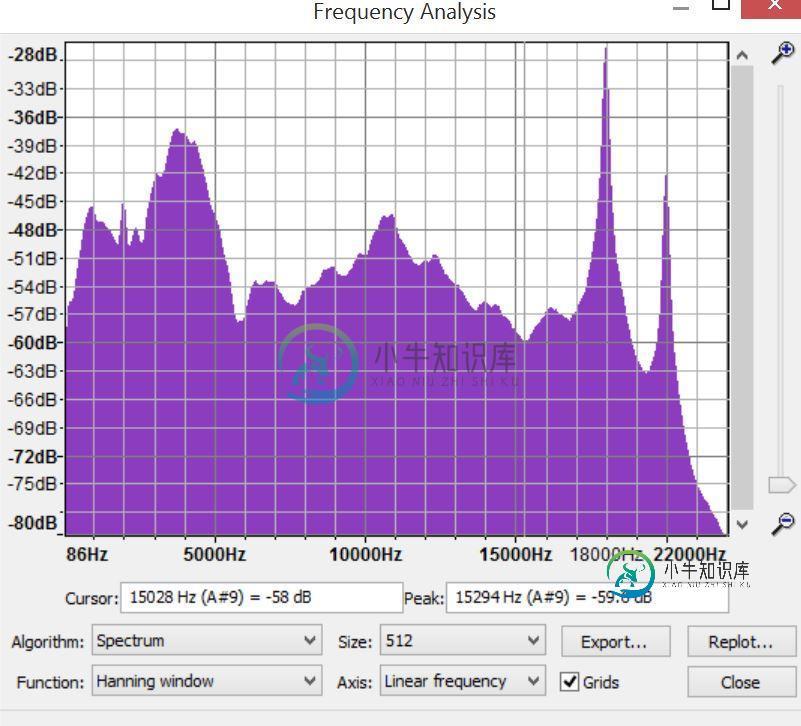

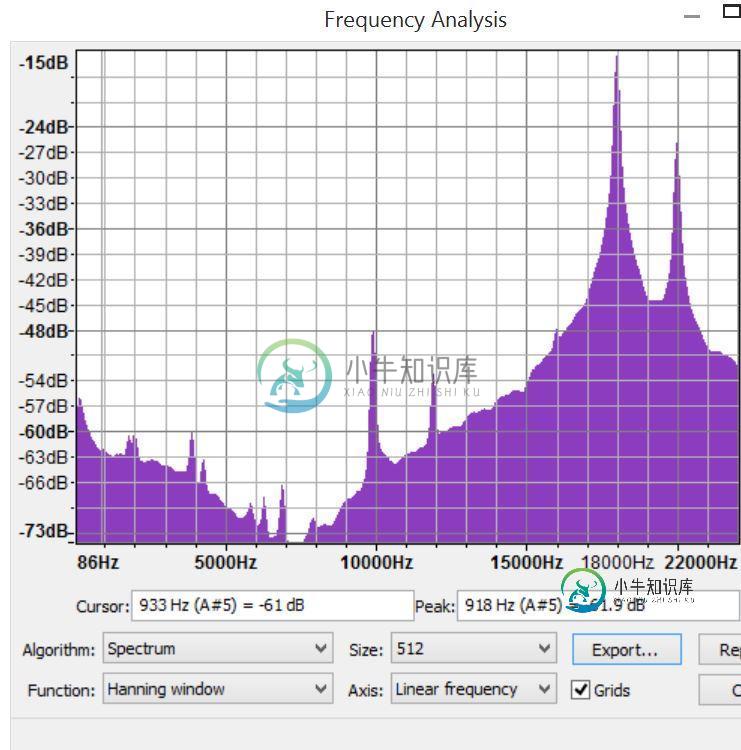

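The images above were generated by the analysis software.

What about this graph? Has the band-pass filter been implemented successfully? Thanks.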

2 answers

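There may be a few problems...

The audio file you are trying to filter may not contain much that the ear would notice within the band you are passing. Try a much wider filter range to verify that the filter works, for example one that removes most of a song.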

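If you cannot get that to work, the filter is probably not implemented correctly. As a very simple filter to try, use y[n] = x[n] - 0.5*x[n-1]. This is a high-pass FIR filter whose zero sits at z = 0.5, so it attenuates content near 0 Hz without removing it completely.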
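As a rough illustration of that difference equation, here is a minimal Java sketch. The class name SimpleHighPass and the choice to treat the sample before the first one as 0 are assumptions made for the example only.

```java
import java.util.Arrays;

final class SimpleHighPass {
    /** Applies y[n] = x[n] - 0.5 * x[n-1], assuming x[-1] = 0. */
    static float[] filter(float[] x) {
        float[] y = new float[x.length];
        float prev = 0f; // x[n-1]; assumed to be 0 before the first sample
        for (int n = 0; n < x.length; n++) {
            y[n] = x[n] - 0.5f * prev;
            prev = x[n];
        }
        return y;
    }

    public static void main(String[] args) {
        float[] dc = {1f, 1f, 1f, 1f};           // 0 Hz input
        float[] nyquist = {1f, -1f, 1f, -1f};    // fastest possible alternation
        System.out.println(Arrays.toString(filter(dc)));      // [1.0, 0.5, 0.5, 0.5]
        System.out.println(Arrays.toString(filter(nyquist))); // [1.0, -1.5, 1.5, -1.5]
    }
}
```

After the first sample, the constant input settles at half its amplitude while the alternating input grows to 1.5 times its amplitude, which is the high-pass behavior you should be able to hear on real audio.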

Good luck.

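There is a problem with how you interface with the BandPass.java source code, probably because of a slight misunderstanding: IIR filters do not process frequencies. They process time-domain data samples (which may exhibit oscillatory behavior).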
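To make the point about time-domain samples concrete, here is a self-contained sketch that does not use Minim at all. It assumes a standard biquad band-pass design (the constant 0 dB peak gain form from the widely used Audio EQ Cookbook), which is not necessarily the design Minim's BandPass uses. The class name BiquadBandPassDemo and its helpers are hypothetical. It feeds arrays of sine-wave samples through the filter and compares signal levels, which is also one way to check a filter without relying on your ears.

```java
final class BiquadBandPassDemo {
    private final double b0, b2, a1, a2; // b1 is 0 for this design
    private double x1, x2, y1, y2;       // previous inputs and outputs

    BiquadBandPassDemo(double centerHz, double bandwidthHz, double sampleRate) {
        double w0 = 2.0 * Math.PI * centerHz / sampleRate;
        double q = centerHz / bandwidthHz;
        double alpha = Math.sin(w0) / (2.0 * q);
        double a0 = 1.0 + alpha;
        b0 = alpha / a0;
        b2 = -alpha / a0;
        a1 = -2.0 * Math.cos(w0) / a0;
        a2 = (1.0 - alpha) / a0;
    }

    /** Filters time-domain samples in place. */
    void process(float[] signal) {
        for (int n = 0; n < signal.length; n++) {
            double x0 = signal[n];
            double y0 = b0 * x0 + b2 * x2 - a1 * y1 - a2 * y2;
            x2 = x1; x1 = x0;
            y2 = y1; y1 = y0;
            signal[n] = (float) y0;
        }
    }

    /** Generates a unit-amplitude sine as time-domain samples. */
    static float[] sine(double freqHz, double sampleRate, int length) {
        float[] s = new float[length];
        for (int n = 0; n < length; n++) {
            s[n] = (float) Math.sin(2.0 * Math.PI * freqHz * n / sampleRate);
        }
        return s;
    }

    static double rms(float[] s) {
        double sum = 0.0;
        for (float v : s) sum += (double) v * v;
        return Math.sqrt(sum / s.length);
    }

    public static void main(String[] args) {
        for (double f : new double[]{2000, 19000}) {
            float[] tone = sine(f, 44100, 4410);
            double before = rms(tone);
            new BiquadBandPassDemo(19000, 2000, 44100).process(tone);
            System.out.println(f + " Hz: output/input RMS = " + rms(tone) / before);
        }
    }
}
```

A tone inside the pass band should keep most of its level, while the 2000 Hz tone the question plays should come out strongly attenuated. Note that the input is an array of samples of the tone, not the number 2000 itself.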

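Therefore, you must provide those time-domain samples as the input to BandPass.process(). Since you are reading raw bytes from a file, you need to convert those bytes to floats. You could do so with something like the following: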

/**
 * Convert byte[] raw audio to 16 bit int format.
 * @param rawdata little-endian 16-bit PCM bytes
 */
private int[] byteToShort(byte[] rawdata) {
    int[] converted = new int[rawdata.length / 2];
    for (int i = 0; i < converted.length; i++) {
        // Wave file data are stored in little-endian order
        int lo = rawdata[2*i];
        int hi = rawdata[2*i+1];
        // Keep the sign of the high byte so samples stay in [-32768, 32767]
        converted[i] = (hi << 8) | (lo & 0xFF);
    }
    return converted;
}

private float[] byteToFloat(byte[] audio) {
    return shortToFloat(byteToShort(audio));
}

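Also, for a stereo wave file, the samples you get from the file are interleaved, so you also need to deinterleave them. This can be done in much the same way as your deinterleaveData, except that you need a variant that produces float[][] instead of double[][], since BandPass.process expects float arrays.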
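A float variant could look like the sketch below. It mirrors the deinterleaveData from the question, and the wrapper class StereoDeinterleave and the length check are additions for the example only.

```java
final class StereoDeinterleave {
    /** Splits interleaved samples (L R L R ...) into one float array per channel. */
    static float[][] deinterleaveData(float[] samples, int numChannels) {
        if (samples.length % numChannels != 0) {
            throw new IllegalArgumentException("sample count must be a multiple of numChannels");
        }
        int numFrames = samples.length / numChannels;
        float[][] result = new float[numChannels][numFrames];
        for (int ch = 0; ch < numChannels; ch++) {
            for (int i = 0; i < numFrames; i++) {
                result[ch][i] = samples[numChannels * i + ch];
            }
        }
        return result;
    }

    public static void main(String[] args) {
        float[][] channels = deinterleaveData(new float[]{1f, 2f, 3f, 4f, 5f, 6f}, 2);
        System.out.println(java.util.Arrays.toString(channels[0])); // [1.0, 3.0, 5.0]
        System.out.println(java.util.Arrays.toString(channels[1])); // [2.0, 4.0, 6.0]
    }
}
```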

Of course, you also need to recombine the two channels after filtering, before feeding the filtered signal back to audioTrack:

float[] interleaveData(float[][] data) {
    int numChannels = data.length;
    int numFrames = data[0].length;
    float[] result = new float[numFrames*numChannels];
    for (int i = 0; i < numFrames; i++) {
        for (int ch = 0; ch < numChannels; ch++) {
            result[numChannels * i + ch] = data[ch][i];
        }
    }
    return result;
}
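The AudioTrack in the question is created with AudioFormat.ENCODING_PCM_16BIT, and the .wav file also stores 16-bit samples. One option is therefore to convert the filtered floats back to little-endian 16-bit bytes before writing them. A minimal sketch, assuming the samples are nominally in [-1, 1]; the class PcmEncode and the method floatToByte are hypothetical helpers, not part of Minim or Android:

```java
final class PcmEncode {
    /** Converts float samples in [-1, 1] to little-endian 16-bit PCM bytes. */
    static byte[] floatToByte(float[] samples) {
        byte[] out = new byte[samples.length * 2];
        for (int i = 0; i < samples.length; i++) {
            // Clamp first so filter overshoot cannot wrap around when cast to short
            float clamped = Math.max(-1f, Math.min(1f, samples[i]));
            short s = (short) Math.round(clamped * 32767f);
            out[2 * i] = (byte) (s & 0xFF);            // low byte
            out[2 * i + 1] = (byte) ((s >> 8) & 0xFF); // high byte
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(floatToByte(new float[]{0f, 1f, -1f})));
        // [0, 0, -1, 127, 1, -128]
    }
}
```

The resulting byte[] can then go to the byte-array write call the question already uses for playback, or to the output stream that feeds the .wav file.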

You should now have the building blocks needed to filter the audio:

BandPass bandpass = new BandPass(19000,2000,44100);
while ((count = dataInputStream.read(data, 0, bufferSize)) > -1) {
    // decode and deinterleave stereo 16-bit per sample data
    float[][] signals = deinterleaveData(byteToFloat(data), 2);
    // filter data samples, updating the buffers with the filtered samples.
    bandpass.process(signals[0], signals[1]);
    // recombine signals for playback; the float[] overload of write() needs an
    // AudioTrack created with ENCODING_PCM_FLOAT (API 21+) and takes its size in floats
    float[] filtered = interleaveData(signals);
    audioTrack.write(filtered, 0, count / 2, AudioTrack.WRITE_NON_BLOCKING);
}

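P.S. Finally, you are currently reading the entire wave file as data samples, including the header. This causes a short burst of noise at the beginning. To avoid it, skip the header.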
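The WriteWaveFileHeader method in the question always writes a 44-byte header, so for files produced by this app skipping it could look like the sketch below. The class WavHeaderSkipper and the method skipFully are hypothetical names. WAV files from other sources can carry extra chunks and would need real header parsing.

```java
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;

final class WavHeaderSkipper {
    /** Header size written by WriteWaveFileHeader in the question. */
    static final int WAV_HEADER_SIZE = 44;

    /** Skips exactly count bytes, because InputStream.skip() may skip fewer. */
    static void skipFully(InputStream in, long count) throws IOException {
        long remaining = count;
        while (remaining > 0) {
            long skipped = in.skip(remaining);
            if (skipped <= 0) {
                // skip() made no progress: read one byte to detect end of stream
                if (in.read() < 0) {
                    throw new EOFException("stream ended inside the header");
                }
                skipped = 1;
            }
            remaining -= skipped;
        }
    }
}
```

In playWaveFile, this would be called once on dataInputStream, with WAV_HEADER_SIZE as the count, before the read loop starts.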
