Kafka producer in Eclipse does not send messages to the topic

I am unable to send messages with a Java KafkaProducer from Eclipse on Windows (the host OS) to a Kafka topic running on the Hortonworks sandbox. My Java code is shown below:

import java.util.Properties;

import java.util.concurrent.Future;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

public class Producer {

public static void main(String[] args) {

Properties properties = new Properties();

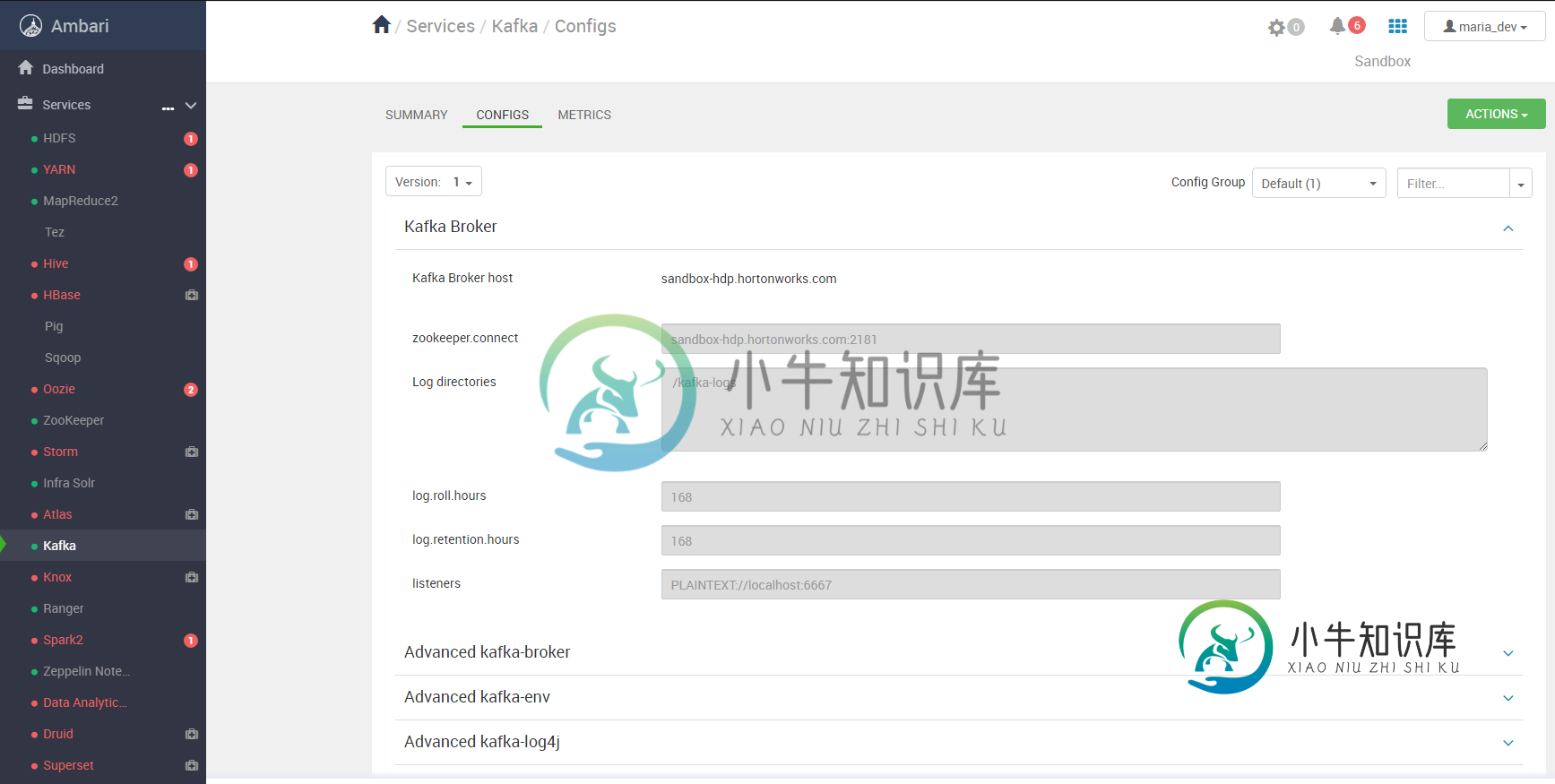

properties.put("bootstrap.servers", "sandbox-hdp.hortonworks.com:6667");

properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

Future<RecordMetadata> ck = null;

KafkaProducer<String, String> kafkaProducer = new KafkaProducer<String, String>(properties);

try {

for (int i = 0; i < 1; i++) {

System.out.println(i);

ck = kafkaProducer.send(

new ProducerRecord<String, String>("kafkatopic", Integer.toString(i), "test message - " + i));

kafkaProducer.flush();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

System.out.println(ck.toString());

// System.out.println(ck.get().toString()); ->gives null

kafkaProducer.close();

}

}

}

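When I run this Java code there is no error. It just prints the message index (only 0 in this case) and then terminates, and I cannot see message 0 in the console consumer on the Hortonworks sandbox command line.

Here is the pom.xml dependency: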

<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>0.9.0.1</version>
</dependency>

My Windows hosts file:

# Copyright (c) 1993-2009 Microsoft Corp.
#
# This is a sample HOSTS file used by Microsoft TCP/IP for Windows.
#
# This file contains the mappings of IP addresses to host names. Each
# entry should be kept on an individual line. The IP address should
# be placed in the first column followed by the corresponding host name.
# The IP address and the host name should be separated by at least one
# space.
#
# Additionally, comments (such as these) may be inserted on individual
# lines or following the machine name denoted by a '#' symbol.
#
# For example:
#
# 102.54.94.97 rhino.acme.com # source server
# 38.25.63.10 x.acme.com # x client host
# localhost name resolution is handled within DNS itself.
# 127.0.0.1 localhost
# ::1 localhost
127.0.0.1 sandbox-hdp.hortonworks.com

The topic was created with:

/usr/hdp/3.0.1.0-187/kafka/bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic kafkatopic

I can send messages from the console producer:

[root@sandbox-hdp ~]# /usr/hdp/3.0.1.0-187/kafka/bin/kafka-console-producer.sh --broker-list sandbox-hdp.hortonworks.com:6667 --topic kafkatopic
>statement1
>statement2
>statement3
>statement4
>statement5

At the same time, I can see the messages arrive at the console consumer in another tab:

[root@sandbox-hdp ~]# /usr/hdp/3.0.1.0-187/kafka/bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic kafkatopic --from-beginning
Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].
this is statement1
this is statement2
this is statement3
this is statement4
this is statement5

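So messages do flow from the producer to the consumer on the sandbox command line, but I cannot send messages from Java on Windows (the host OS) to the Kafka topic on the Hortonworks sandbox.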

Consumer configuration:

# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# see org.apache.kafka.clients.consumer.ConsumerConfig for more details

# list of brokers used for bootstrapping knowledge about the rest of the cluster
# format: host1:port1,host2:port2 ...
bootstrap.servers=localhost:9092

# consumer group id
group.id=test-consumer-group

# What to do when there is no initial offset in Kafka or if the current
# offset does not exist any more on the server: latest, earliest, none
#auto.offset.reset=

Producer configuration:

# see org.apache.kafka.clients.producer.ProducerConfig for more details

############################# Producer Basics #############################

# list of brokers used for bootstrapping knowledge about the rest of the cluster
# format: host1:port1,host2:port2 ...
bootstrap.servers=localhost:9092

# specify the compression codec for all data generated: none, gzip, snappy, lz4
compression.type=none

# name of the partitioner class for partitioning events; default partition spreads data randomly
#partitioner.class=

# the maximum amount of time the client will wait for the response of a request
#request.timeout.ms=

# how long `KafkaProducer.send` and `KafkaProducer.partitionsFor` will block for
#max.block.ms=

# the producer will wait for up to the given delay to allow other records to be sent so that the sends can be batched together
#linger.ms=

# the maximum size of a request in bytes
#max.request.size=

# the default batch size in bytes when batching multiple records sent to a partition
#batch.size=

# the total bytes of memory the producer can use to buffer records waiting to be sent to the server
#buffer.memory=

Kafka broker configuration:

# Generated by Apache Ambari. Sun May 3 19:25:08 2020
auto.create.topics.enable=true
auto.leader.rebalance.enable=true
compression.type=producer
controlled.shutdown.enable=true
controlled.shutdown.max.retries=3
controlled.shutdown.retry.backoff.ms=5000
controller.message.queue.size=10
controller.socket.timeout.ms=30000
default.replication.factor=1
delete.topic.enable=true
external.kafka.metrics.exclude.prefix=kafka.network.RequestMetrics,kafka.server.DelayedOperationPurgatory,kafka.server.BrokerTopicMetrics.BytesRejectedPerSec
external.kafka.metrics.include.prefix=kafka.network.RequestMetrics.ResponseQueueTimeMs.request.OffsetCommit.98percentile,kafka.network.RequestMetrics.ResponseQueueTimeMs.request.Offsets.95percentile,kafka.network.RequestMetrics.ResponseSendTimeMs.request.Fetch.95percentile,kafka.network.RequestMetrics.RequestsPerSec.request
fetch.purgatory.purge.interval.requests=10000
kafka.ganglia.metrics.group=kafka
kafka.ganglia.metrics.host=localhost
kafka.ganglia.metrics.port=8671
kafka.ganglia.metrics.reporter.enabled=true
kafka.metrics.reporters=
kafka.timeline.metrics.host_in_memory_aggregation=
kafka.timeline.metrics.host_in_memory_aggregation_port=
kafka.timeline.metrics.host_in_memory_aggregation_protocol=
kafka.timeline.metrics.hosts=
kafka.timeline.metrics.maxRowCacheSize=10000
kafka.timeline.metrics.port=
kafka.timeline.metrics.protocol=
kafka.timeline.metrics.reporter.enabled=true
kafka.timeline.metrics.reporter.sendInterval=5900
kafka.timeline.metrics.truststore.password=
kafka.timeline.metrics.truststore.path=
kafka.timeline.metrics.truststore.type=
leader.imbalance.check.interval.seconds=300
leader.imbalance.per.broker.percentage=10
listeners=PLAINTEXT://sandbox-hdp.hortonworks.com:6667
log.cleanup.interval.mins=10
log.dirs=/kafka-logs
log.index.interval.bytes=4096
log.index.size.max.bytes=10485760
log.retention.bytes=-1
log.retention.check.interval.ms=600000
log.retention.hours=168
log.roll.hours=168
log.segment.bytes=1073741824
message.max.bytes=1000000
min.insync.replicas=1
num.io.threads=8
num.network.threads=3
num.partitions=1
num.recovery.threads.per.data.dir=1
num.replica.fetchers=1
offset.metadata.max.bytes=4096
offsets.commit.required.acks=-1
offsets.commit.timeout.ms=5000
offsets.load.buffer.size=5242880
offsets.retention.check.interval.ms=600000
offsets.retention.minutes=86400000
offsets.topic.compression.codec=0
offsets.topic.num.partitions=50
offsets.topic.replication.factor=1
offsets.topic.segment.bytes=104857600
port=6667
producer.metrics.enable=false
producer.purgatory.purge.interval.requests=10000
queued.max.requests=500
replica.fetch.max.bytes=1048576
replica.fetch.min.bytes=1
replica.fetch.wait.max.ms=500
replica.high.watermark.checkpoint.interval.ms=5000
replica.lag.max.messages=4000
replica.lag.time.max.ms=10000
replica.socket.receive.buffer.bytes=65536
replica.socket.timeout.ms=30000
sasl.enabled.mechanisms=GSSAPI
sasl.mechanism.inter.broker.protocol=GSSAPI
security.inter.broker.protocol=PLAINTEXT
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
socket.send.buffer.bytes=102400
ssl.client.auth=none
ssl.key.password=
ssl.keystore.location=
ssl.keystore.password=
ssl.truststore.location=
ssl.truststore.password=
zookeeper.connect=sandbox-hdp.hortonworks.com:2181
zookeeper.connection.timeout.ms=25000
zookeeper.session.timeout.ms=30000
zookeeper.sync.time.ms=2000

ZooKeeper configuration:

# the directory where the snapshot is stored.
dataDir=/tmp/zookeeper
# the port at which the clients will connect
clientPort=2181
# disable the per-ip limit on the number of connections since this is a non-production config
maxClientCnxns=0

1 answer

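send() only adds the message to an in-memory buffer and returns immediately. The producer transmits the buffered messages later, in batches, for efficiency. From the KafkaProducer documentation: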

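The producer consists of a pool of buffer space that holds records that have not yet been transmitted to the server, as well as a background I/O thread that is responsible for turning these records into requests and transmitting them to the cluster. Failure to close the producer after use will leak these resources.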
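To make the buffer-plus-background-thread idea concrete, here is a toy model written with only the JDK. It is not Kafka's implementation: `ToyBufferedSender`, its `delivered` list (standing in for the broker) and the integer "offset" it returns are all hypothetical names invented for this sketch. It only illustrates that `send` returns before delivery and that `close` drains whatever is still buffered.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Toy model of a buffering sender. Hypothetical; NOT how KafkaProducer is implemented. */
final class ToyBufferedSender implements AutoCloseable {

    /** A buffered record together with the future that is completed on delivery. */
    private static final class Pending {
        final String value;
        final CompletableFuture<Integer> ack = new CompletableFuture<>();
        Pending(String value) { this.value = value; }
    }

    private final BlockingQueue<Pending> buffer = new LinkedBlockingQueue<>();
    private final List<String> delivered = new ArrayList<>(); // stands in for the broker
    private final Thread ioThread;
    private volatile boolean closed = false;

    ToyBufferedSender() {
        ioThread = new Thread(this::drainLoop, "toy-io-thread");
        ioThread.start();
    }

    /** Buffers the record and returns immediately; delivery happens on the I/O thread. */
    CompletableFuture<Integer> send(String value) {
        Pending pending = new Pending(value);
        buffer.add(pending);
        return pending.ack;
    }

    /** Background loop: keeps running until close() was called and the buffer is empty. */
    private void drainLoop() {
        try {
            while (!closed || !buffer.isEmpty()) {
                Pending pending = buffer.poll(10, TimeUnit.MILLISECONDS);
                if (pending == null) {
                    continue;
                }
                int offset;
                synchronized (delivered) {
                    delivered.add(pending.value);
                    offset = delivered.size() - 1;
                }
                pending.ack.complete(offset);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Snapshot of everything the I/O thread has delivered so far. */
    List<String> delivered() {
        synchronized (delivered) {
            return new ArrayList<>(delivered);
        }
    }

    /** Signals shutdown and waits for the I/O thread to drain the buffer. */
    @Override
    public void close() {
        closed = true;
        try {
            ioThread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        ToyBufferedSender sender = new ToyBufferedSender();
        CompletableFuture<Integer> ack = sender.send("test message - 0");
        // Often false at this point: the record is buffered, not necessarily delivered.
        System.out.println("done right after send(): " + ack.isDone());
        sender.close(); // returns only after the buffer has been drained
        System.out.println("done after close(): " + ack.isDone());
        System.out.println("delivered: " + sender.delivered());
    }
}
```

In this sketch a caller that never calls `close()` leaves the I/O thread running, which mirrors the resource-leak warning quoted above.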

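The send() method is asynchronous. When called, it adds the record to a buffer of pending record sends and returns immediately. This allows the producer to batch individual records together for efficiency.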
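Because the call is asynchronous, its outcome is only visible through the returned `Future` (the question's code stores it in `ck`). The sketch below shows one way to wait on any `Future` with a bounded timeout and report whether it completed, failed or never finished. `SendOutcome` and `describe` are hypothetical helper names, and the demo uses plain `CompletableFuture` instances rather than a real producer, so it runs without a broker.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical helper: turns the result of an asynchronous call into a readable status. */
final class SendOutcome {

    private SendOutcome() {
    }

    /** Waits at most timeoutMs for the future and describes what happened. */
    static String describe(Future<?> future, long timeoutMs) {
        try {
            Object result = future.get(timeoutMs, TimeUnit.MILLISECONDS);
            return "acknowledged: " + result;
        } catch (TimeoutException e) {
            // The asynchronous work neither succeeded nor failed within the limit.
            return "timed out after " + timeoutMs + " ms";
        } catch (ExecutionException e) {
            // The asynchronous work failed; the real error is the cause.
            return "failed: " + e.getCause();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        // Stand-ins for the three situations a send future can be in.
        CompletableFuture<String> ok = CompletableFuture.completedFuture("offset 0");
        CompletableFuture<String> failed = new CompletableFuture<>();
        failed.completeExceptionally(new IllegalStateException("broker unreachable"));
        CompletableFuture<String> stuck = new CompletableFuture<>();

        System.out.println(describe(ok, 100));
        System.out.println(describe(failed, 100));
        System.out.println(describe(stuck, 100));
    }
}
```

Passing the question's `ck` future to a helper like this, before closing the producer, is one way to tell a send that was acknowledged apart from one that failed or is still pending.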
