Boilerplate for a basic AWS infrastructure with EKS cluster

Advantages of this boilerplate

- Infrastructure as Code (IaC): with Terraform, the whole infrastructure is described in code, so it is reproducible and easy to change

- State management: Terraform saves the current infrastructure state, so you can review further changes without applying them. Also, state can be stored remotely, so you can work on the infrastructure in a team

- Scalability and flexibility: the infrastructure built based on this boilerplate can be expanded and updated anytime

- Comprehensiveness: you get scaling and monitoring tools along with the basic infrastructure. You don’t need to manually modify anything in the infrastructure; you can simply make changes in Terraform as needed and deploy them to AWS and Kubernetes

- Control over resources: the IaC approach makes the infrastructure more observable and prevents waste of resources

- Clear documentation: your Terraform code effectively becomes your project documentation. It means that you can add new members to the team, and it won’t take them too much time to figure out how the infrastructure works

Why you should use this boilerplate

- Safe and polished: we’ve used these solutions in our own large-scale, high-load projects. We’ve been perfecting this infrastructure building process for months, making sure that it results in a system that is safe to use, secure, and reliable

- Saves time: you can spend weeks doing your own research and making the unavoidable mistakes to build an infrastructure like this. Instead, you can rely on this boilerplate and create the infrastructure you need within a day

- It’s free: we’re happy to share the results of our work

Description

This repository contains the Mad Devs team's terraform modules and configuration for rapidly deploying a Kubernetes cluster, supporting services, and the underlying infrastructure in AWS.

In our company’s work, we have tried many infrastructure solutions and services and traveled the path from on-premise hardware to serverless. As of today, Kubernetes has become our standard platform for deploying applications, and AWS has become the main cloud.

It is worth noting that although 90% of our and our clients’ projects are hosted on AWS and use AWS EKS as the Kubernetes platform, we do not insist on either: we don't move everything to Kubernetes and don't force anyone to host on AWS. We offer Kubernetes only after collecting and analyzing the service's architecture requirements.

Once Kubernetes is chosen, it makes almost no difference to applications how the cluster itself is created (manually, through kops, or using managed services from cloud providers): in essence, the Kubernetes platform is the same everywhere. So the choice of a particular provider is then made based on additional requirements, expertise, etc.

We know that the current implementation is far from perfect. For example, we deploy services to the cluster using terraform. This is rather clumsy and goes against Kubernetes practices, but it is convenient for bootstrapping: by using state and interpolation, we pass proper IDs, ARNs, and other attributes to resources, pass names or secrets to templates, and generate values for the required charts from them, all within terraform.

There are more specific drawbacks. The data "template_file" resources that we used for most templates are extremely inconvenient for development and debugging, especially for 500+ line files like terraform/layer2-k8s/templates/elk-values.yaml. Also, although Helm 3 got rid of Tiller, a large number of helm releases still causes terraform plan to hang at some point. This can partially (but not always) be solved with terraform apply -target, but for state consistency it is desirable to run plan and apply on the entire configuration. If you are going to use this boilerplate, we advise splitting the terraform/layer2-k8s layer into several layers and moving large and complex releases into separate modules.

You may reasonably question the number of .tf files. This monolith should certainly be refactored and split into many micro-modules following the terragrunt approach. This is exactly what we will do in the near future, solving the problems described above along the way.

You can find more about this project in Anton Babenko's stream.

Table of contents

- Boilerplate for a basic AWS infrastructure with EKS cluster

- Advantages of this boilerplate

- Why you should use this boilerplate

- Description

- Table of contents

- Architecture diagram

- Current infrastructure cost

- EKS Upgrading

- Namespace structure in the K8S cluster

- Useful tools

- Useful VSCode extensions

- AWS account

- How to use this repo

- What to do after deployment

- Update terraform version

- Updated terraform providers

- TFSEC

- Contributing

Architecture diagram

This diagram describes the default infrastructure:

- We use three availability Zones

- VPC

- Three public subnets for resources that can be accessible from the Internet

- Elastic load balancing - entry point to the k8s cluster

- Internet gateway - entry point to the created VPC

- Single NAT Gateway - service that gives instances in private subnets access to the Internet.

- Three private subnets with Internet access via the NAT Gateway

- Three intra subnets without Internet access

- Three private subnets for RDS

- Route tables for private networks

- Route tables for public networks


- Autoscaling groups

- On-demand - a group with 1-5 on-demand instances for resources with continuous uptime requirements

- Spot - a group with 1-6 spot instances for resources where interruption of work is not critical

- CI - a group with 0-3 spot instances created based on gitlab-runner requests; located in the public network

- EKS control plane - nodes of the k8s cluster’s control plane

- Route53 - DNS management service

- CloudWatch - service for collecting metrics on the state of resources in the AWS cloud

- AWS Certificate manager - service for AWS certificate management

- SSM parameter store - service for storing, retrieving, and controlling configuration values

- S3 bucket - this bucket is used to store terraform state

- Elastic container registry - service for storing docker images
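The subnet layout above (four subnet tiers, each spread across three availability zones) can be sketched with Python's standard `ipaddress` module. This is a minimal illustration, not the boilerplate's own code. The `10.0.0.0/16` VPC CIDR, the `us-east-1a/b/c` zone names, and the `/20` subnet size are hypothetical values chosen for the example.

```python
import ipaddress

# Hypothetical inputs: the VPC CIDR, zone names, and /20 subnet size are
# illustrative assumptions, not values taken from this boilerplate.
VPC_CIDR = "10.0.0.0/16"
AZS = ["us-east-1a", "us-east-1b", "us-east-1c"]
TIERS = ["public", "private", "intra", "database"]


def plan_subnets(vpc_cidr, azs, tiers, new_prefix=20):
    """Assign one non-overlapping subnet per availability zone for every tier."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # subnets() yields equal-sized blocks in address order; a /16 holds sixteen /20s.
    blocks = vpc.subnets(new_prefix=new_prefix)
    layout = {}
    for tier in tiers:
        # next() raises StopIteration if the VPC is too small for the requested layout.
        layout[tier] = [str(next(blocks)) for _ in azs]
    return layout


if __name__ == "__main__":
    for tier, cidrs in plan_subnets(VPC_CIDR, AZS, TIERS).items():
        print(f"{tier:<9} {', '.join(cidrs)}")
```

Each tier gets three CIDRs, one per zone. In Terraform itself, the same arithmetic is usually done with the built-in `cidrsubnet()` function.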

Current infrastructure cost

| Resource | Type/size | Price per hour $ | Price per GB $ | Number | Monthly cost $ |
|---|---|---|---|---|---|
| EKS | | 0.1 | | 1 | 73 |
| EC2 on-demand | t3.medium | 0.0456 | | 1 | 33.288 |
| EC2 Spot | t3.medium/t3a.medium | 0.0137/0.0125 | | 1 | 10 |
| EC2 Spot CI | t3.medium/t3a.medium | 0.0137/0.0125 | | 0 | 10 |
| EBS | 100 GB | | 0.11 | 2 | 22 |
| NAT gateway | | 0.048 | 0.048 | 1 | 35 |
| Load Balancer | Classic | 0.028 | 0.008 | 1 | 20.44 |
| S3 | Standard | | | 1 | 1 |
| ECR | 10 GB | | | 2 | 1.00 |
| Route53 | 1 Hosted Zone | | | 1 | 0.50 |
| CloudWatch | First 10 metrics - free | | | | 0 |
| Total | | | | | 216.8 |

The cost does not include traffic charges for the NAT Gateway, Load Balancer, and S3.
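The hourly rows of the table are consistent with multiplying the hourly price by 730 hours, the average number of hours in a month: 0.1 × 730 = 73 for EKS, 0.0456 × 730 = 33.288 for the on-demand instance, and 0.028 × 730 = 20.44 for the load balancer. The EBS row is 100 GB × 0.11 × 2 volumes = 22. Here is a small sketch of that arithmetic. It assumes the 730-hour convention, and you should plug in current prices for your region, since AWS prices change.

```python
# Assumption: monthly figures use an average month of 730 hours (8760 h / 12).
HOURS_PER_MONTH = 730


def monthly_hourly_cost(price_per_hour, count=1, hours=HOURS_PER_MONTH):
    """Monthly cost of `count` resources billed per hour."""
    return round(price_per_hour * hours * count, 3)


def monthly_storage_cost(size_gb, price_per_gb_month, count=1):
    """Monthly cost of `count` volumes billed per GB-month (as EBS is)."""
    return round(size_gb * price_per_gb_month * count, 3)


if __name__ == "__main__":
    # Hourly prices copied from the table above (USD).
    print("EKS:", monthly_hourly_cost(0.1))
    print("EC2 on-demand t3.medium:", monthly_hourly_cost(0.0456))
    print("NAT gateway:", monthly_hourly_cost(0.048))
    print("Classic Load Balancer:", monthly_hourly_cost(0.028))
    print("EBS 2 x 100 GB:", monthly_storage_cost(100, 0.11, count=2))
```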

EKS Upgrading

To upgrade the k8s cluster to a new version, follow the official guide and check the changelog for breaking changes. Starting from v1.18, EKS supports Kubernetes add-ons. We use them to update components such as vpc-cni, kube-proxy, and coredns. To get the latest add-on versions, run:

aws eks describe-addon-versions --kubernetes-version 1.21 --query 'addons[].[addonName, addonVersions[0].addonVersion]'

where 1.21 is the k8s version you are upgrading to. DO NOT FORGET to update cluster-autoscaler too: its version must match the cluster version. It is also STRONGLY RECOMMENDED to check that deployed objects use apiVersions that won't be removed after the upgrade. The pluto tool can help with this.

To run the check, switch to the correct cluster first. Then run `pluto detect-helm -o markdown --target-versions k8s=v1.22.0`, where `k8s=v1.22.0` is the k8s version you want to upgrade to.
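If you run the `aws eks describe-addon-versions` command above with `--output json`, you can turn its output into a lookup table and check the cluster-autoscaler rule in a script. This is a minimal sketch. The sample output below is hypothetical, and the version comparison only looks at the MAJOR.MINOR part.

```python
import json
import re

# Hypothetical sample of what the command above prints with --output json;
# the version strings are made up for illustration.
SAMPLE_OUTPUT = """
[
  ["vpc-cni", "v1.9.0-eksbuild.1"],
  ["kube-proxy", "v1.21.2-eksbuild.2"],
  ["coredns", "v1.8.4-eksbuild.1"]
]
"""


def parse_addon_versions(raw: str) -> dict:
    """Turn the [[addonName, addonVersion], ...] pairs into a {name: version} dict."""
    return {name: version for name, version in json.loads(raw)}


def minor_version(version: str) -> str:
    """Extract MAJOR.MINOR from strings such as '1.21', 'v1.21.2' or 'v1.21.2-eksbuild.2'."""
    match = re.match(r"v?(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return f"{match.group(1)}.{match.group(2)}"


def autoscaler_matches_cluster(cluster_version: str, autoscaler_version: str) -> bool:
    """cluster-autoscaler should run the same Kubernetes minor version as the cluster."""
    return minor_version(cluster_version) == minor_version(autoscaler_version)


if __name__ == "__main__":
    for addon, version in parse_addon_versions(SAMPLE_OUTPUT).items():
        print(f"{addon}: {version}")
    print(autoscaler_matches_cluster("1.21", "v1.21.1"))
```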

Namespace structure in the K8S cluster

This table shows the namespaces used in the cluster and the services deployed in them:

| Namespace | Service | Description |
|---|---|---|
| kube-system | core-DNS | DNS server used in the cluster |
| certmanager | cert-manager | Service that automates issuing and managing TLS certificates |
| certmanager | cluster-issuer | Resource representing a certificate authority that can generate signed certificates; different CAs can be used |
| ing | nginx-ingress | Ingress controller that uses nginx as a reverse proxy |
| ing | Certificate | The certificate object used by nginx-ingress |
| dns | external-dns | Service that manages records in external DNS providers (such as Route53) based on cluster resources |
| ci | gitlab-runner | GitLab runner used to launch gitlab-ci agents |
| sys | aws-node-termination-handler | Service that gracefully handles the termination of EC2 instances |
| sys | autoscaler | Service that automatically adjusts the size of the k8s cluster depending on the requirements |
| sys | kubernetes-external-secrets | Service for working with external secret stores, such as Secrets Manager, SSM Parameter Store, etc. |
| sys | Reloader | Service that watches changes in ConfigMaps and Secrets (including those synced from external stores) and restarts the workloads that use them |
| monitoring | kube-prometheus-stack | Umbrella chart including a group of services used to monitor cluster performance and visualize data |
| monitoring | loki-stack | Umbrella chart including a service used to collect container logs and visualize data |
| elk | elk | Umbrella chart including a group of services for collecting logs and metrics and visualizing this data |

Useful tools

- tfenv - tool for managing different versions of terraform; the required version can be specified directly as an argument or via `.terraform-version`

- tgenv - tool for managing different versions of terragrunt; the required version can be specified directly as an argument or via `.terragrunt-version`

- terraform - terraform itself, our main development tool; install it with `tfenv install`

- awscli - console utility to work with the AWS API

- kubectl - console utility to work with the Kubernetes API

- kubectx + kubens - power tools for kubectl that help you switch between Kubernetes clusters and namespaces

- helm - tool to create application packages and deploy them into k8s

- helmfile - "docker compose" for helm

- terragrunt - small terraform wrapper providing a DRY approach in some cases; install it with `tgenv install`

- awsudo - simple console utility that allows running awscli commands assuming specific roles

- aws-vault - tool for securely managing AWS keys and running console commands

- aws-mfa - utility for automating the retrieval of temporary STS tokens when MFA is enabled

- vscode - our main IDE

Optionally, you can set up the pre-commit-terraform hooks to format and validate terraform code at the commit stage.

Useful VSCode extensions

- editorconfig

- terraform

- drawio

- yaml

- embrace

- js-beautify

- docker

- git-extension-pack

- githistory

- kubernetes-tools

- markdown-preview-enhanced

- markdownlint

- file-tree-generator

- gotemplate-syntax

AWS account

We will not go deep into security settings since everyone has different requirements. However, there are a few basic steps worth following before moving on. If you already have everything in place, feel free to skip this section.

It is highly recommended not to use the root account to work with AWS. Take the extra effort to create users with required/limited rights.

IAM settings

So, you have created an account, passed confirmation, perhaps even created Access Keys for the console. In any case, go to your account security settings and be sure to follow these steps:

- Set a strong password

- Activate MFA for the root account

- Delete the root account's access keys and do not create new ones

Further in the IAM console:

- In the Policies menu, create the `MFASecurity` policy that prohibits users from using services without activating MFA.

- In the Roles menu, create a new role named `administrator`. Select Another AWS Account and enter your account number in the Account ID field. Check the Require MFA checkbox. In the next Permissions window, attach the `AdministratorAccess` policy to it.

- In the Policies menu, create the `assumeAdminRole` policy:

{
  "Version": "2012-10-17",
  "Statement": {
    "Effect": "Allow",
    "Action": "sts:AssumeRole",
    "Resource": "arn:aws:iam::<your-account-id>:role/administrator"
  }
}

- In the Groups menu, create the `admin` group; in the next window, attach the `assumeAdminRole` and `MFASecurity` policies to it. Finish creating the group.

- In the Users menu, create a user to work with AWS by selecting both checkboxes in Select AWS access type. In the next window, add the user to the `admin` group. Finish and download the CSV with credentials.

In this doc, we haven't covered a more secure and correct method of user management that uses external identity providers, such as G Suite, Okta, and others.
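If you prefer to generate the `assumeAdminRole` policy instead of editing the account ID by hand, a small helper can render the same JSON document used in the IAM steps. This is only a convenience sketch. `123456789012` is a placeholder account ID, and the role name defaults to `administrator` as in the steps.

```python
import json
import re


def assume_admin_role_policy(account_id: str, role_name: str = "administrator") -> str:
    """Render the assumeAdminRole policy for the given AWS account ID."""
    # AWS account IDs are 12-digit numbers.
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"not a 12-digit AWS account ID: {account_id!r}")
    policy = {
        "Version": "2012-10-17",
        "Statement": {
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Resource": f"arn:aws:iam::{account_id}:role/{role_name}",
        },
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # 123456789012 is a placeholder; use your own account ID.
    print(assume_admin_role_policy("123456789012"))
```

You can paste the printed JSON into the JSON editor when creating the policy in the Policies menu.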

Setting up awscli

Terraform can use environment variables with an AWS access key ID and secret access key, or an AWS profile; in this example, we will create an AWS profile:

$ aws configure --profile maddevs
AWS Access Key ID [None]: *****************
AWS Secret Access Key [None]: *********************
Default region name [None]: us-east-1
Default output format [None]: json

$ export AWS_PROFILE=maddevs

Go here to learn how to get temporary session tokens and assume a role.

Alternatively, to use your awscli, terraform, and other CLI utils with MFA and roles, you can use aws-mfa, aws-vault, and awsudo.
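Before running terraform, it can help to confirm that the profile exported in `AWS_PROFILE` actually exists. The sketch below reads the shared credentials file (`~/.aws/credentials`, or the path in `AWS_SHARED_CREDENTIALS_FILE`) with Python's `configparser`. It only checks for the key entries written by `aws configure --profile`. It does not see role or SSO profiles defined in `~/.aws/config`, and it does not validate the keys against AWS (`aws sts get-caller-identity` does that).

```python
import configparser
import os
from pathlib import Path
from typing import Optional


def default_credentials_file() -> Path:
    """Location of the shared credentials file, honoring AWS_SHARED_CREDENTIALS_FILE."""
    override = os.environ.get("AWS_SHARED_CREDENTIALS_FILE")
    return Path(override) if override else Path.home() / ".aws" / "credentials"


def profile_configured(profile: str, credentials_file: Optional[Path] = None) -> bool:
    """True if `profile` has an access key ID and secret access key in the credentials file."""
    parser = configparser.ConfigParser()
    # read() silently skips files that do not exist, so a missing file means "not configured".
    parser.read(credentials_file or default_credentials_file())
    if not parser.has_section(profile):
        return False
    section = parser[profile]
    return "aws_access_key_id" in section and "aws_secret_access_key" in section


if __name__ == "__main__":
    name = os.environ.get("AWS_PROFILE", "default")
    print(f"profile {name!r} configured: {profile_configured(name)}")
```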

How to use this repo

Getting ready

S3 state backend

S3 is used as the backend for storing terraform state and for exchanging data between layers. You can create an S3 bucket manually and then put the backend settings into the backend.tf file in each layer. Alternatively, you can run the following from the terraform/ directory:

$ export TF_REMOTE_STATE_BUCKET=my-new-state-bucket

$ terragrunt run-all init
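S3 bucket names must follow AWS naming rules, so a typo in `TF_REMOTE_STATE_BUCKET` only shows up when terragrunt tries to create the bucket. The sketch below checks a subset of those rules locally: 3-63 characters, only lowercase letters, digits, dots, and hyphens, no adjacent periods, no IP-address-like names, and no `xn--` prefix. It is not a complete implementation of the AWS rules and cannot check that the name is globally unique.

```python
import ipaddress
import os
import re

# A subset of the S3 bucket naming rules; AWS documents the complete list.
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")  # 3-63 chars total


def bucket_name_problems(name: str) -> list:
    """Return a list of rule violations (empty if none were found)."""
    problems = []
    if not _NAME_RE.fullmatch(name):
        problems.append("must be 3-63 lowercase letters, digits, dots or hyphens, "
                        "starting and ending with a letter or digit")
    if ".." in name:
        problems.append("must not contain two adjacent periods")
    try:
        ipaddress.IPv4Address(name)
    except ValueError:
        pass  # not an IP address, which is what we want
    else:
        problems.append("must not be formatted as an IP address")
    if name.startswith("xn--"):
        problems.append("must not start with 'xn--'")
    return problems


if __name__ == "__main__":
    bucket = os.environ.get("TF_REMOTE_STATE_BUCKET", "")
    print(bucket_name_problems(bucket) or f"{bucket!r} looks valid")
```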

Inputs

The file terraform/layer1-aws/demo.tfvars.example contains example values. Copy it to terraform/layer1-aws/terraform.tfvars and set your values:

$ cp terraform/layer1-aws/demo.tfvars.example terraform/layer1-aws/terraform.tfvars

You can find all possible variables in each layer's Readme.

Secrets

The root of layer2-k8s contains aws-sm-secrets.tf, where several local variables expect an AWS Secrets Manager secret named with the pattern /${local.name_wo_region}/infra/layer2-k8s. These secrets are used for GitLab authentication in Kibana and Grafana and for registering the GitLab runner.

{
  "kibana_gitlab_client_id": "access key token",
  "kibana_gitlab_client_secret": "secret key token",
  "kibana_gitlab_group": "gitlab group",
  "grafana_gitlab_client_id": "access key token",
  "grafana_gitlab_client_secret": "secret key token",
  "gitlab_registration_token": "gitlab-runner token",
  "grafana_gitlab_group": "gitlab group",
  "alertmanager_slack_url": "slack url",
  "alertmanager_slack_channel": "slack channel"
}

Set proper secrets; you can also use empty/mock values. If you won't use these secrets, delete this .tf file from the layer2-k8s root.
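To avoid missing a key, you can generate the secret value from the list of keys in the JSON example. In this sketch, `maddevs-demo` is a hypothetical stand-in for the actual value of `local.name_wo_region`, and all values are mocks.

```python
import json

# Keys expected by layer2-k8s, copied from the JSON example above.
REQUIRED_KEYS = (
    "kibana_gitlab_client_id",
    "kibana_gitlab_client_secret",
    "kibana_gitlab_group",
    "grafana_gitlab_client_id",
    "grafana_gitlab_client_secret",
    "gitlab_registration_token",
    "grafana_gitlab_group",
    "alertmanager_slack_url",
    "alertmanager_slack_channel",
)


def secret_name(name_wo_region: str) -> str:
    """Secret name following the /${local.name_wo_region}/infra/layer2-k8s pattern."""
    return f"/{name_wo_region}/infra/layer2-k8s"


def build_secret_string(values: dict) -> str:
    """Serialize the secret, failing early if any expected key is missing."""
    missing = [key for key in REQUIRED_KEYS if key not in values]
    if missing:
        raise KeyError(f"missing secret keys: {', '.join(missing)}")
    return json.dumps({key: values[key] for key in REQUIRED_KEYS}, indent=2)


if __name__ == "__main__":
    # Hypothetical: "maddevs-demo" stands in for local.name_wo_region; all values are mocks.
    mock_values = {key: "mock" for key in REQUIRED_KEYS}
    print(secret_name("maddevs-demo"))
    print(build_secret_string(mock_values))
```

You can save the printed JSON to a file and store it with `aws secretsmanager create-secret --name <secret-name> --secret-string file://secret.json`.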

Domain and SSL

You will need to purchase or use an already purchased domain in Route53. The domain name and zone ID will need to be set in the domain_name and zone_id variables in layer1.

By default, the create_acm_certificate variable is set to false, which instructs terraform to look up the ARN of an existing ACM certificate. Set it to true if you want terraform to create a new ACM SSL certificate.

Working with terraform

init

The terraform init command initializes the state and its backend and downloads providers, plugins, and modules. This is the first command to run in layer1 and layer2:

$ terraform init

Correct output:

* provider.aws: version = "~> 2.10"
* provider.local: version = "~> 1.2"
* provider.null: version = "~> 2.1"
* provider.random: version = "~> 2.1"
* provider.template: version = "~> 2.1"

Terraform has been successfully initialized!

plan

The terraform plan command reads the terraform state and configuration files and displays the changes and actions needed to bring the state in line with the configuration. It's a convenient way to test changes before applying them. With the -out parameter, it saves the planned changes to a file that can later be passed to terraform apply. Example:

$ terraform plan
# ~600 rows skipped
Plan: 82 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform
can't guarantee that exactly these actions will be performed if
"terraform apply" is subsequently run.

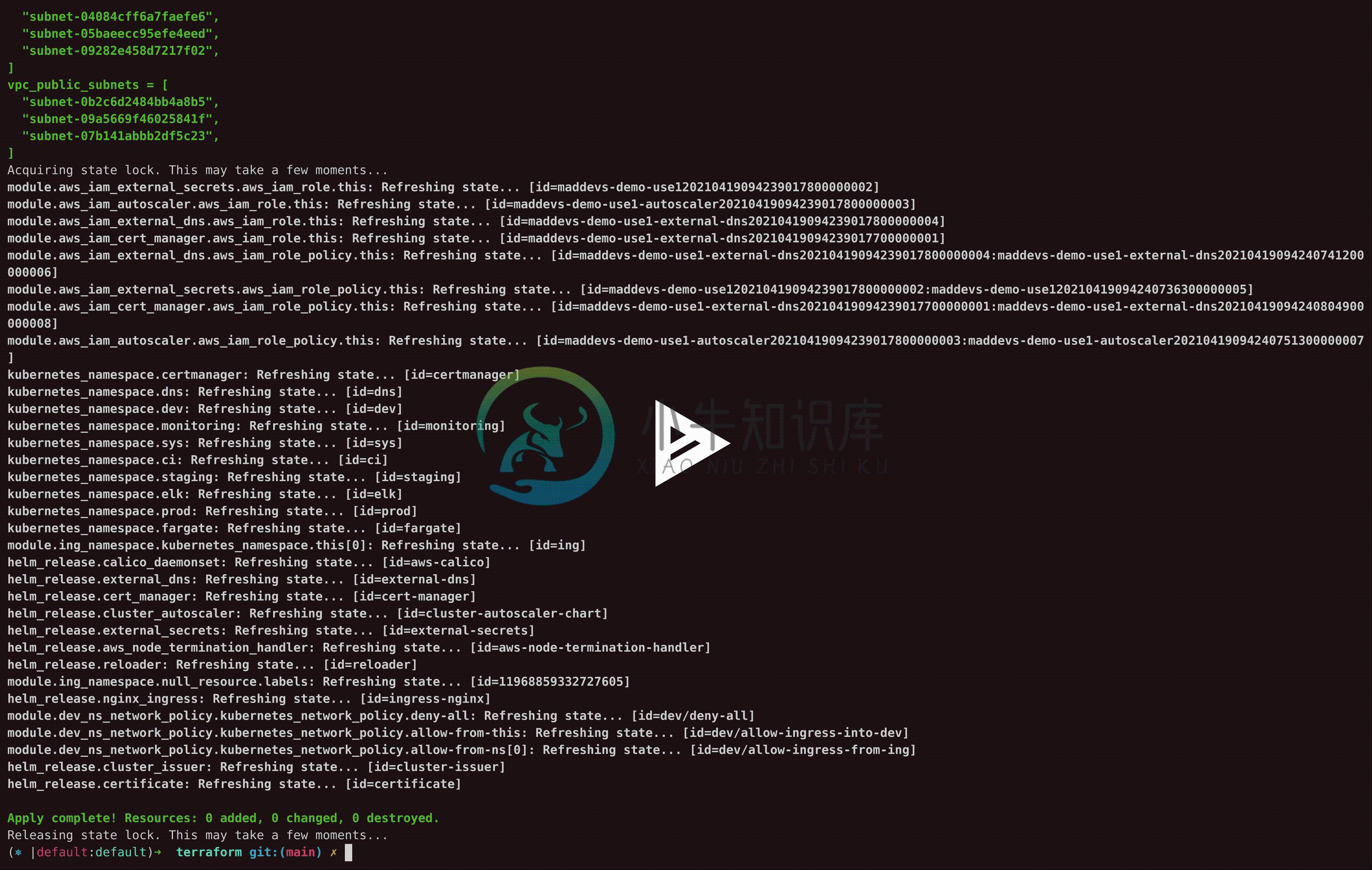
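In CI, it can be handy to inspect the `Plan:` summary line before applying, for example to stop when a plan would destroy resources. This sketch parses the line format shown in the output above. That format is Terraform's human-readable output, not a stable interface, so treat the regex as an assumption. Also prefer saving the plan with `terraform plan -out=tfplan` and applying exactly that file with `terraform apply tfplan`.

```python
import re
from typing import NamedTuple, Optional


class PlanSummary(NamedTuple):
    add: int
    change: int
    destroy: int


# Matches e.g. "Plan: 82 to add, 0 to change, 0 to destroy." anywhere in the output.
_PLAN_RE = re.compile(r"(\d+) to add, (\d+) to change, (\d+) to destroy")


def parse_plan_summary(output: str) -> Optional[PlanSummary]:
    """Return the add/change/destroy counts, or None when no summary line is present."""
    match = _PLAN_RE.search(output)
    if match is None:
        return None  # e.g. Terraform printed "No changes." instead of a plan summary
    return PlanSummary(*(int(group) for group in match.groups()))


def check_no_destroy(output: str) -> None:
    """Raise if the plan would destroy anything."""
    summary = parse_plan_summary(output)
    if summary is not None and summary.destroy > 0:
        raise RuntimeError(f"plan would destroy {summary.destroy} resource(s)")
```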

apply

The terraform apply command scans the .tf files in the current directory and brings the infrastructure in line with the configuration described in them. By default, it runs a plan and asks for confirmation before applying. Optionally, you can pass a saved plan file as input:

$ terraform apply
# ~600 rows skipped
Plan: 82 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

Apply complete! Resources: 82 added, 0 changed, 0 destroyed.

You don't always need to re-read and compare the entire state when you've made small changes that don't affect the whole infrastructure. In that case, you can use a targeted apply; for example:

$ terraform apply -target helm_release.kibana

Details can be found here

The first time, the apply command must be executed in the layers in order: first layer1, then layer2. Infrastructure destroy should be done in the reverse order.
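The ordering rule above is a dependency graph: layer2-k8s depends on layer1-aws, so apply follows the dependency order and destroy follows the reverse. `terragrunt run-all` derives a similar order from the dependencies declared between layers. The sketch below only illustrates the rule with Python's `graphlib` (standard library since 3.9) and does not call terraform.

```python
from graphlib import TopologicalSorter  # standard library since Python 3.9

# Each layer maps to the set of layers it depends on.
LAYER_DEPENDENCIES = {
    "layer1-aws": set(),
    "layer2-k8s": {"layer1-aws"},
}


def apply_order(dependencies):
    """Layers ordered so every dependency comes before its dependents (raises CycleError on cycles)."""
    return list(TopologicalSorter(dependencies).static_order())


def destroy_order(dependencies):
    """Dependents first, so nothing is destroyed while another layer still relies on it."""
    return list(reversed(apply_order(dependencies)))


if __name__ == "__main__":
    print("apply:  ", " -> ".join(apply_order(LAYER_DEPENDENCIES)))
    print("destroy:", " -> ".join(destroy_order(LAYER_DEPENDENCIES)))
```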

terragrunt

- Terragrunt version: 0.29.2

The terragrunt version is pinned in the terragrunt.hcl file.

We've also used terragrunt to simplify S3 bucket creation and terraform backend configuration. All you need to do is set the S3 bucket name in the TF_REMOTE_STATE_BUCKET env variable and run the terragrunt commands in the terraform/ directory:

$ export TF_REMOTE_STATE_BUCKET=my-new-state-bucket

$ terragrunt run-all init

$ terragrunt run-all apply

When you run these commands, terragrunt creates the S3 bucket, configures the terraform backend, and then runs terraform init and terraform apply in layer1-aws and layer2-k8s sequentially.

Apply infrastructure by layers with terragrunt

Go to layer folder terraform/layer1-aws/ or terraform/layer2-k8s/ and run this command:

terragrunt apply

The layer2-k8s layer depends on layer1-aws.

Target apply by terragrunt

Go to layer folder terraform/layer1-aws/ or terraform/layer2-k8s/ and run this command:

terragrunt apply -target=module.eks

The -target address consists of the resource type and the resource name. For example: -target=module.eks, -target=helm_release.loki_stack
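When you build -target arguments in scripts, the address is the resource type and name joined by a dot. For resources created with `count` or `for_each`, it is followed by an instance key, as in `module.eks_alb_ingress[0]`. This sketch builds such addresses. Passing the resulting list to `subprocess.run` (rather than a shell string) avoids quoting problems with `["key"]` addresses.

```python
from typing import Optional, Union


def target_address(resource_type: str, name: str, key: Optional[Union[int, str]] = None) -> str:
    """Build a Terraform address such as module.eks or helm_release.loki_stack."""
    address = f"{resource_type}.{name}"
    if key is None:
        return address
    if isinstance(key, int):
        return f"{address}[{key}]"  # instance created with count
    return f'{address}["{key}"]'  # instance created with for_each


def target_args(*addresses: str) -> list:
    """Turn addresses into -target=... arguments for terraform/terragrunt apply."""
    return [f"-target={address}" for address in addresses]


if __name__ == "__main__":
    args = target_args(target_address("module", "eks"), target_address("helm_release", "loki_stack"))
    # e.g. subprocess.run(["terragrunt", "apply", *args], check=True)
    print(["terragrunt", "apply", *args])
```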

Destroy infrastructure by terragrunt

To destroy both layers, run this command from terraform/ folder:

terragrunt run-all destroy

To destroy layer2-k8s, run this command from the terraform/layer2-k8s folder:

terragrunt destroy

The layer2-k8s layer depends on layer1-aws, so when you destroy layer1-aws, layer2-k8s is destroyed automatically.

What to do after deployment

After applying this configuration, you will get the infrastructure described and outlined at the beginning of the document. In AWS and within the EKS cluster, the basic resources and services necessary for the operation of the EKS k8s cluster will be created.

You can get access to the cluster using this command:

aws eks update-kubeconfig --name maddevs-demo-use1 --region us-east-1

Update terraform version

Change the terraform version in these files:

- terraform/.terraform-version - the main terraform version for the tfenv tool

- .github/workflows/terraform-ci.yml - the terraform version that GitHub Actions uses for terraform-validate and terraform-format

- terraform/layer1-aws/main.tf and terraform/layer2-k8s/main.tf - the terraform version in each layer
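Because the version lives in several files, they can drift apart. Here is a minimal sketch that compares terraform/.terraform-version with the `required_version` setting in each layer's main.tf. It assumes each main.tf pins an exact version with a line like `required_version = "1.0.10"` (1.0.10 is a hypothetical version), and it does not check the GitHub Actions workflow.

```python
import re
from pathlib import Path

# Assumption: each layer's main.tf pins an exact version, e.g. required_version = "1.0.10".
# Constraints such as "~> 1.0" would be reported as mismatches by this simple check.
_REQUIRED_VERSION_RE = re.compile(r'required_version\s*=\s*"([^"]+)"')
LAYER_FILES = ("layer1-aws/main.tf", "layer2-k8s/main.tf")


def pinned_versions(terraform_dir: Path) -> dict:
    """Collect the version from .terraform-version and from each layer's main.tf."""
    versions = {".terraform-version": (terraform_dir / ".terraform-version").read_text().strip()}
    for relative in LAYER_FILES:
        match = _REQUIRED_VERSION_RE.search((terraform_dir / relative).read_text())
        versions[relative] = match.group(1) if match else "<missing>"
    return versions


def version_mismatches(terraform_dir: Path) -> dict:
    """Files whose pinned version differs from .terraform-version."""
    versions = pinned_versions(terraform_dir)
    expected = versions[".terraform-version"]
    return {path: version for path, version in versions.items() if version != expected}


if __name__ == "__main__":
    terraform_dir = Path("terraform")
    if all((terraform_dir / p).exists() for p in (".terraform-version", *LAYER_FILES)):
        print(version_mismatches(terraform_dir) or "all versions match")
```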

Updated terraform providers

Change the terraform provider versions in these files:

- terraform/layer1-aws/main.tf

- terraform/layer2-k8s/main.tf

After changing provider versions, you need to re-initialize the layers so that terraform downloads the new provider versions. To do this for all layers, run:

terragrunt run-all init -upgrade

Or run this command in each layer:

terragrunt init -upgrade

examples

Each layer has an examples/ directory that contains working examples that expand the basic configuration. The files’ names and contents are in accordance with our coding conventions, so no additional description is required. If you need to use something, just move it from this folder to the root of the layer.

This lets you extend the basic functionality, for example by launching a monitoring system based on ELK or Prometheus Stack.

TFSEC

We use GitHub Actions and tfsec to check our terraform code with static analysis and spot potential security issues. However, we had to skip some checks, listed below:

| File | Security issue | Description | Why skipped? |
|---|---|---|---|
| layer1-aws/aws-eks.tf | aws-vpc-no-public-egress-sgr | Resource 'module.eks:aws_security_group_rule.cluster_egress_internet[0]' defines a fully open egress security group rule. | We use the recommended option. More info |
| layer1-aws/aws-eks.tf | aws-eks-enable-control-plane-logging | Resource 'module.eks:aws_eks_cluster.this[0]' is missing the control plane log type 'scheduler' | By default, we enable only audit logs. This can be changed via the eks_cluster_enabled_log_types variable |
| layer1-aws/aws-eks.tf | aws-eks-encrypt-secrets | Resource 'module.eks:aws_eks_cluster.this[0]' has no encryptionConfigBlock block | Encryption is disabled by default, but it can be enabled by setting eks_cluster_encryption_config_enable = true in your tfvars file. |
| layer1-aws/aws-eks.tf | aws-eks-no-public-cluster-access | Resource 'module.eks:aws_eks_cluster.this[0]' has public access is explicitly set to enabled | By default, we create an EKS cluster that is publicly accessible from anywhere |
| layer1-aws/aws-eks.tf | aws-eks-no-public-cluster-access-to-cidr | Resource 'module.eks:aws_eks_cluster.this[0]' has public access cidr explicitly set to wide open | By default, we create an EKS cluster that is publicly accessible from anywhere |
| layer1-aws/aws-eks.tf | aws-vpc-no-public-egress-sgr | Resource 'module.eks:aws_security_group_rule.workers_egress_internet[0]' defines a fully open egress security group rule | We use the recommended option. More info |
| modules/aws-iam-ssm/iam.tf | aws-iam-no-policy-wildcards | Resource 'module.aws_iam_external_secrets:data.aws_iam_policy_document.this' defines a policy with wildcarded resources. | We use the aws-iam-ssm module for external-secrets and grant it access to all secrets. |
| modules/aws-iam-autoscaler/iam.tf | aws-iam-no-policy-wildcards | Resource 'module.aws_iam_autoscaler:data.aws_iam_policy_document.this' defines a policy with wildcarded resources | We use a condition to allow actions only for certain autoscaling groups |
| modules/kubernetes-network-policy-namespace/main.tf | kubernetes-network-no-public-ingress | Resource 'module.dev_ns_network_policy:kubernetes_network_policy.deny-all' allows all ingress traffic by default | We deny all ingress traffic by default, but tfsec doesn't detect this as expected (bug) |
| modules/kubernetes-network-policy-namespace/main.tf | kubernetes-network-no-public-egress | Resource 'module.dev_ns_network_policy:kubernetes_network_policy.deny-all' allows all egress traffic by default | We don't want to deny egress traffic in a default installation |
| kubernetes-network-policy-namespace/main.tf | kubernetes-network-no-public-egress | Resource 'module.dev_ns_network_policy:kubernetes_network_policy.allow-from-this' allows all egress traffic by default | We don't want to deny egress traffic in a default installation |
| modules/kubernetes-network-policy-namespace/main.tf | kubernetes-network-no-public-egress | Resource 'module.dev_ns_network_policy:kubernetes_network_policy.allow-from-ns[0]' allows all egress traffic by default | We don't want to deny egress traffic in a default installation |
| modules/aws-iam-aws-loadbalancer-controller/iam.tf | aws-iam-no-policy-wildcards | Resource 'module.eks_alb_ingress[0]:module.aws_iam_aws_loadbalancer_controller:aws_iam_role_policy.this' defines a policy with wildcarded resources | We use the recommended policy |
| layer2-k8s/locals.tf | general-secrets-sensitive-in-local | Local 'locals.' includes a potentially sensitive value which is defined within the project | tfsec flags the helm_repo_external_secrets URL because it contains the word "secret" |
| modules/aws-iam-external-dns/main.tf | aws-iam-no-policy-wildcards | Resource 'module.aws_iam_external_dns:aws_iam_role_policy.this' defines a policy with wildcarded resources | We use the policy from the documentation |
| modules/aws-iam-external-dns/main.tf | aws-iam-no-policy-wildcards | Resource 'module.aws_iam_cert_manager:aws_iam_role_policy.this' defines a policy with wildcarded resources | cert-manager uses Route53 to create DNS records and validate wildcard certificates. By default, we allow it to manage all zones |
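tfsec also supports inline ignore comments of the form `#tfsec:ignore:<rule-id>`, placed on or directly above the flagged line. This README doesn't say how the skips above are recorded, so don't read the sketch below as the repository's mechanism. It only shows how the (file, rule) pairs from the table could be turned into such comments, using a few rows as sample input.

```python
from collections import defaultdict

# Sample input: (file, rule ID) pairs copied from a few rows of the table above.
SKIPPED_CHECKS = [
    ("layer1-aws/aws-eks.tf", "aws-eks-enable-control-plane-logging"),
    ("layer1-aws/aws-eks.tf", "aws-eks-encrypt-secrets"),
    ("layer1-aws/aws-eks.tf", "aws-vpc-no-public-egress-sgr"),
    ("layer1-aws/aws-eks.tf", "aws-vpc-no-public-egress-sgr"),  # listed twice in the table
    ("modules/aws-iam-autoscaler/iam.tf", "aws-iam-no-policy-wildcards"),
]


def ignore_comments(skipped):
    """Group rule IDs by file (deduplicated, first-seen order) as tfsec inline-ignore comments."""
    by_file = defaultdict(list)
    for path, rule in skipped:
        if rule not in by_file[path]:
            by_file[path].append(rule)
    # Each comment still has to be placed above every flagged resource in that file.
    return {path: [f"#tfsec:ignore:{rule}" for rule in rules] for path, rules in by_file.items()}


if __name__ == "__main__":
    for path, comments in ignore_comments(SKIPPED_CHECKS).items():
        print(path)
        for comment in comments:
            print("  " + comment)
```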

Contributing

If you're interested in contributing to the project:

- Start by reading the Contributing guide

- Explore current issues.
