Hazelcast 3.5.2 NATIVE in-memory format

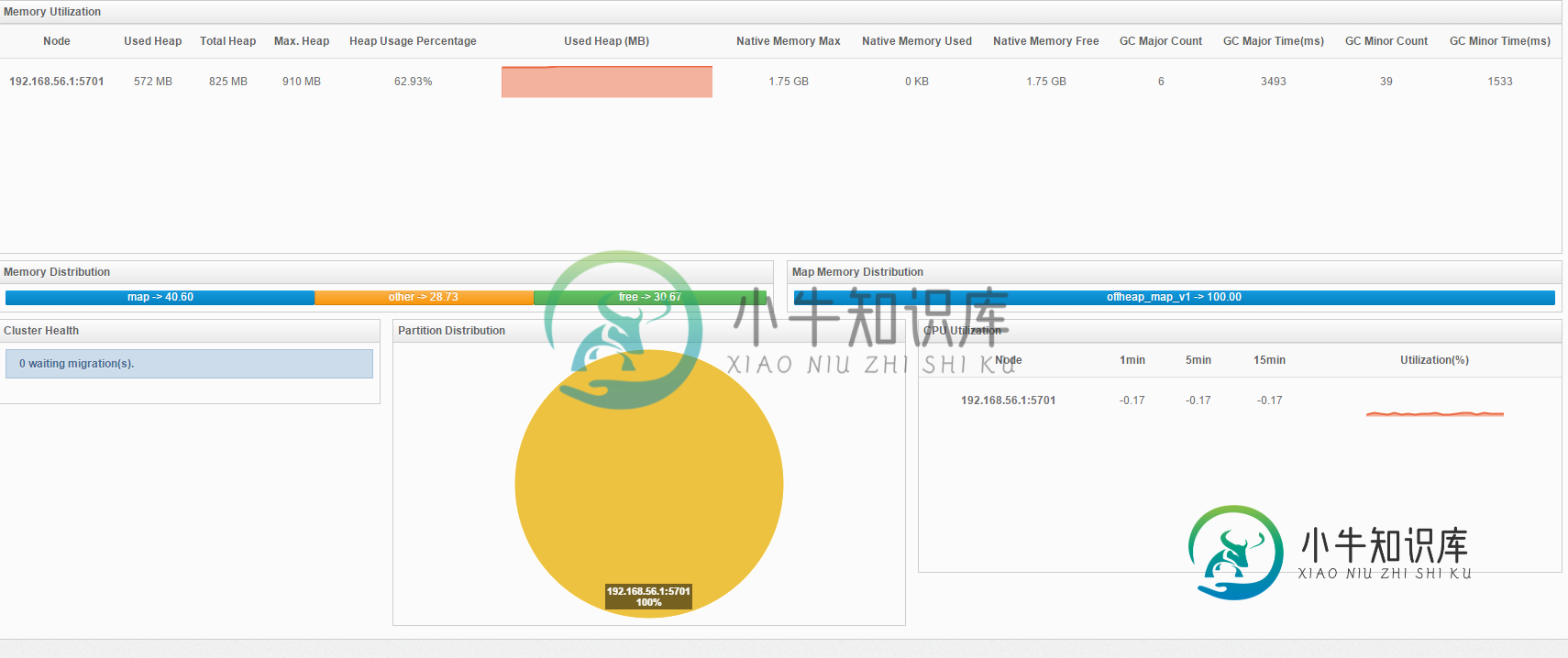

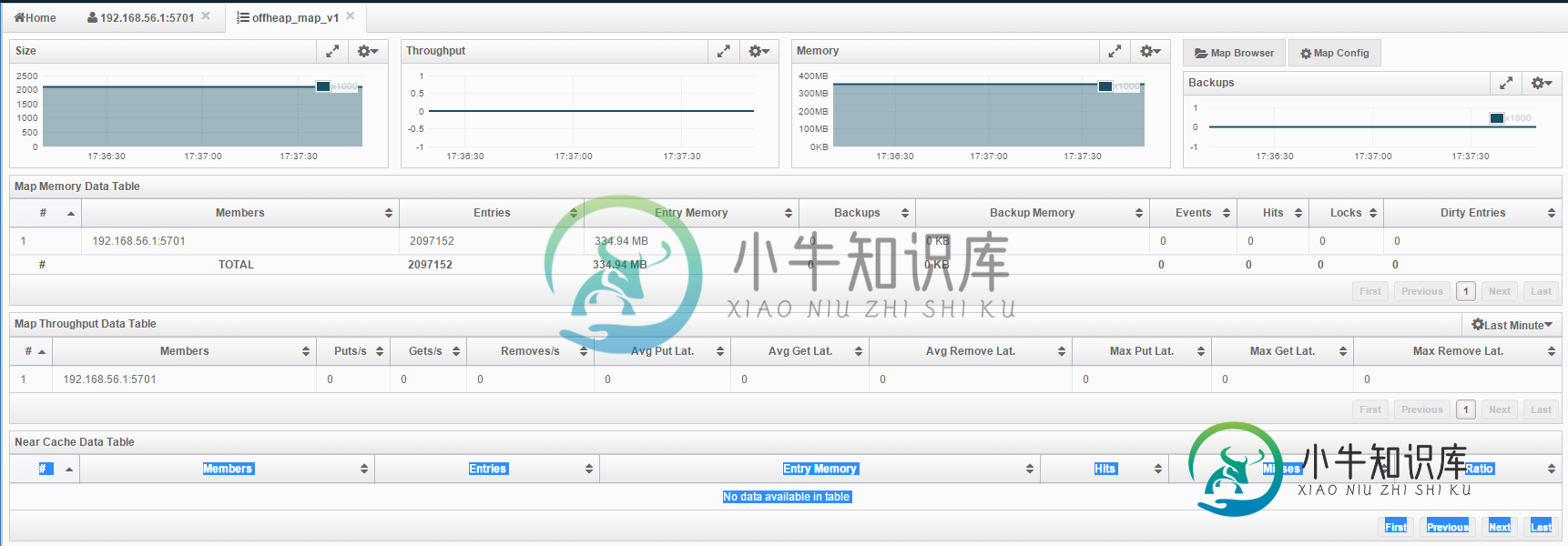

I want to use the High-Density Memory Store feature of Hazelcast 3.5.2, but I can't get it to work.

I configured my Hazelcast server according to the Hazelcast documentation:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!--
  ~ Copyright (c) 2008-2015, Hazelcast, Inc. All Rights Reserved.
  ~
  ~ Licensed under the Apache License, Version 2.0 (the "License");
  ~ you may not use this file except in compliance with the License.
  ~ You may obtain a copy of the License at
  ~
  ~ http://www.apache.org/licenses/LICENSE-2.0
  ~
  ~ Unless required by applicable law or agreed to in writing, software
  ~ distributed under the License is distributed on an "AS IS" BASIS,
  ~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  ~ See the License for the specific language governing permissions and
  ~ limitations under the License.
  -->

<!--
    The default Hazelcast configuration. This is used when:

    - no hazelcast.xml if present
-->
<hazelcast xsi:schemaLocation="http://www.hazelcast.com/schema/config hazelcast-config-3.5.xsd"
           xmlns="http://www.hazelcast.com/schema/config"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
    <group>
        <name>dev</name>
        <password>dev-pass</password>
    </group>
    <license-key>HazelcastEnterprise#10Nodes#HDMemory:10GB#bjERNiaUAyS1V7IOl530KTrf13W4x30oz1Q010909911h090MQ00100060gL</license-key>
    <management-center enabled="true">http://192.168.56.120:8080/mancenter-3.5.2</management-center>
    <network>
        <port auto-increment="true" port-count="100">5701</port>
        <outbound-ports>
            <!--
            Allowed port range when connecting to other nodes.
            0 or * means use system provided port.
            -->
            <ports>0</ports>
        </outbound-ports>
        <join>
            <multicast enabled="true">
                <multicast-group>224.2.2.3</multicast-group>
                <multicast-port>54327</multicast-port>
            </multicast>
            <tcp-ip enabled="false">
                <interface>127.0.0.1</interface>
                <member-list>
                    <member>127.0.0.1</member>
                </member-list>
            </tcp-ip>
            <aws enabled="false">
                <access-key>my-access-key</access-key>
                <secret-key>my-secret-key</secret-key>
                <!--optional, default is us-east-1 -->
                <region>us-west-1</region>
                <!--optional, default is ec2.amazonaws.com. If set, region shouldn't be set as it will override this property -->
                <host-header>ec2.amazonaws.com</host-header>
                <!-- optional, only instances belonging to this group will be discovered, default will try all running instances -->
                <security-group-name>hazelcast-sg</security-group-name>
                <tag-key>type</tag-key>
                <tag-value>hz-nodes</tag-value>
            </aws>
        </join>
        <interfaces enabled="true">
            <interface>192.168.56.1</interface>
        </interfaces>
        <ssl enabled="false"/>
        <socket-interceptor enabled="false"/>
        <symmetric-encryption enabled="false">
            <!--
               encryption algorithm such as
               DES/ECB/PKCS5Padding,
               PBEWithMD5AndDES,
               AES/CBC/PKCS5Padding,
               Blowfish,
               DESede
            -->
            <algorithm>PBEWithMD5AndDES</algorithm>
            <!-- salt value to use when generating the secret key -->
            <salt>thesalt</salt>
            <!-- pass phrase to use when generating the secret key -->
            <password>thepass</password>
            <!-- iteration count to use when generating the secret key -->
            <iteration-count>19</iteration-count>
        </symmetric-encryption>
    </network>
    <partition-group enabled="false"/>
    <executor-service name="default">
        <pool-size>16</pool-size>
        <!--Queue capacity. 0 means Integer.MAX_VALUE.-->
        <queue-capacity>0</queue-capacity>
    </executor-service>
    <queue name="default">
        <!--
            Maximum size of the queue. When a JVM's local queue size reaches the maximum,
            all put/offer operations will get blocked until the queue size
            of the JVM goes down below the maximum.
            Any integer between 0 and Integer.MAX_VALUE. 0 means
            Integer.MAX_VALUE. Default is 0.
        -->
        <max-size>0</max-size>
        <!--
            Number of backups. If 1 is set as the backup-count for example,
            then all entries of the map will be copied to another JVM for
            fail-safety. 0 means no backup.
        -->
        <backup-count>1</backup-count>
        <!--
            Number of async backups. 0 means no backup.
        -->
        <async-backup-count>0</async-backup-count>
        <empty-queue-ttl>-1</empty-queue-ttl>
    </queue>
    <map name="default">
        <!--
           Data type that will be used for storing recordMap.
           Possible values:
           BINARY (default): keys and values will be stored as binary data
           OBJECT : values will be stored in their object forms
           NATIVE : values will be stored in non-heap region of JVM
        -->
        <in-memory-format>NATIVE</in-memory-format>
        <!--
            Number of backups. If 1 is set as the backup-count for example,
            then all entries of the map will be copied to another JVM for
            fail-safety. 0 means no backup.
        -->
        <backup-count>1</backup-count>
        <!--
            Number of async backups. 0 means no backup.
        -->
        <async-backup-count>0</async-backup-count>
        <!--
            Maximum number of seconds for each entry to stay in the map. Entries that are
            older than <time-to-live-seconds> and not updated for <time-to-live-seconds>
            will get automatically evicted from the map.
            Any integer between 0 and Integer.MAX_VALUE. 0 means infinite. Default is 0.
        -->
        <time-to-live-seconds>0</time-to-live-seconds>
        <!--
            Maximum number of seconds for each entry to stay idle in the map. Entries that are
            idle(not touched) for more than <max-idle-seconds> will get
            automatically evicted from the map. Entry is touched if get, put or containsKey is called.
            Any integer between 0 and Integer.MAX_VALUE. 0 means infinite. Default is 0.
        -->
        <max-idle-seconds>0</max-idle-seconds>
        <!--
            Valid values are:
            NONE (no eviction),
            LRU (Least Recently Used),
            LFU (Least Frequently Used).
            NONE is the default.
        -->
        <eviction-policy>NONE</eviction-policy>
        <!--
            Maximum size of the map. When max size is reached,
            map is evicted based on the policy defined.
            Any integer between 0 and Integer.MAX_VALUE. 0 means
            Integer.MAX_VALUE. Default is 0.
        -->
        <max-size policy="PER_NODE">0</max-size>
        <!--
            When max. size is reached, specified percentage of
            the map will be evicted. Any integer between 0 and 100.
            If 25 is set for example, 25% of the entries will
            get evicted.
        -->
        <eviction-percentage>25</eviction-percentage>
        <!--
            Minimum time in milliseconds which should pass before checking
            if a partition of this map is evictable or not.
            Default value is 100 millis.
        -->
        <min-eviction-check-millis>100</min-eviction-check-millis>
        <!--
            While recovering from split-brain (network partitioning),
            map entries in the small cluster will merge into the bigger cluster
            based on the policy set here. When an entry merge into the
            cluster, there might an existing entry with the same key already.
            Values of these entries might be different for that same key.
            Which value should be set for the key? Conflict is resolved by
            the policy set here. Default policy is PutIfAbsentMapMergePolicy

            There are built-in merge policies such as
            com.hazelcast.map.merge.PassThroughMergePolicy; entry will be overwritten if merging entry exists for the key.
            com.hazelcast.map.merge.PutIfAbsentMapMergePolicy ; entry will be added if the merging entry doesn't exist in the cluster.
            com.hazelcast.map.merge.HigherHitsMapMergePolicy ; entry with the higher hits wins.
            com.hazelcast.map.merge.LatestUpdateMapMergePolicy ; entry with the latest update wins.
        -->
        <merge-policy>com.hazelcast.map.merge.PutIfAbsentMapMergePolicy</merge-policy>
    </map>
    <map name="offheap_map_v1">
        <in-memory-format>NATIVE</in-memory-format>
        <backup-count>1</backup-count>
        <time-to-live-seconds>0</time-to-live-seconds>
        <max-idle-seconds>0</max-idle-seconds>
        <eviction-policy>NONE</eviction-policy>
        <max-size policy="PER_NODE">0</max-size>
        <eviction-percentage>25</eviction-percentage>
        <merge-policy>com.hazelcast.map.merge.LatestUpdateMapMergePolicy</merge-policy>
    </map>
    <map name="__vertx.subs">
        <backup-count>1</backup-count>
        <time-to-live-seconds>0</time-to-live-seconds>
        <max-idle-seconds>0</max-idle-seconds>
        <eviction-policy>NONE</eviction-policy>
        <max-size policy="PER_NODE">0</max-size>
        <eviction-percentage>25</eviction-percentage>
        <merge-policy>com.hazelcast.map.merge.LatestUpdateMapMergePolicy</merge-policy>
    </map>
    <multimap name="default">
        <backup-count>1</backup-count>
        <value-collection-type>SET</value-collection-type>
    </multimap>
    <list name="default">
        <backup-count>1</backup-count>
    </list>
    <set name="default">
        <backup-count>1</backup-count>
    </set>
    <jobtracker name="default">
        <max-thread-size>0</max-thread-size>
        <!-- Queue size 0 means number of partitions * 2 -->
        <queue-size>0</queue-size>
        <retry-count>0</retry-count>
        <chunk-size>1000</chunk-size>
        <communicate-stats>true</communicate-stats>
        <topology-changed-strategy>CANCEL_RUNNING_OPERATION</topology-changed-strategy>
    </jobtracker>
    <semaphore name="default">
        <initial-permits>0</initial-permits>
        <backup-count>1</backup-count>
        <async-backup-count>0</async-backup-count>
    </semaphore>
    <semaphore name="__vertx.*">
        <initial-permits>1</initial-permits>
    </semaphore>
    <reliable-topic name="default">
        <read-batch-size>10</read-batch-size>
        <topic-overload-policy>BLOCK</topic-overload-policy>
        <statistics-enabled>true</statistics-enabled>
    </reliable-topic>
    <ringbuffer name="default">
        <capacity>10000</capacity>
        <backup-count>1</backup-count>
        <async-backup-count>0</async-backup-count>
        <time-to-live-seconds>30</time-to-live-seconds>
        <in-memory-format>BINARY</in-memory-format>
    </ringbuffer>
    <serialization>
        <portable-version>0</portable-version>
    </serialization>
    <services enable-defaults="true"/>
    <native-memory enabled="true" allocator-type="POOLED">
        <size value="2" unit="GIGABYTES"/>
    </native-memory>
    <properties>
        <property name="hazelcast.version.check.enabled">false</property>
        <property name="hazelcast.elastic.memory.unsafe.enabled">true</property>
        <property name="hazelcast.elastic.memory.enabled">true</property>
        <property name="hazelcast.elastic.memory.total.size">2G</property>
    </properties>
</hazelcast>
```

My Java source code:

```java
import com.hazelcast.client.HazelcastClient;
import com.hazelcast.client.config.ClientConfig;
import com.hazelcast.config.NativeMemoryConfig;
import com.hazelcast.core.HazelcastInstance;
import com.hazelcast.core.IMap;
import com.hazelcast.memory.MemorySize;
import com.hazelcast.memory.MemoryUnit;

public class HazelcastDemo {

    public static void main(String[] args) {
        ClientConfig clientConfig = new ClientConfig();
        clientConfig.getGroupConfig().setName("dev").setPassword("dev-pass");
        clientConfig.getNetworkConfig().addAddress("192.168.56.1:5701");

        HazelcastInstance client = HazelcastClient.newHazelcastClient(clientConfig);
        IMap<String, String> testMap = client.getMap("offheap_map_v1");

        System.out.print("Starting ....");
        for (int i = 0; i < 1000000000; i++) {
            testMap.put("key-" + i, "value is " + i);
        }
        System.out.println("Done!");
    }
}
```

It doesn't work even when I configure native memory programmatically instead:

```java
import com.hazelcast.client.HazelcastClient;
import com.hazelcast.client.config.ClientConfig;
import com.hazelcast.config.NativeMemoryConfig;
import com.hazelcast.core.HazelcastInstance;
import com.hazelcast.core.IMap;
import com.hazelcast.memory.MemorySize;
import com.hazelcast.memory.MemoryUnit;

public class HazelcastDemo {

    public static void main(String[] args) {
        MemorySize memorySize = new MemorySize(4, MemoryUnit.GIGABYTES);
        NativeMemoryConfig nativeMemoryConfig =
                new NativeMemoryConfig()
                        .setAllocatorType(NativeMemoryConfig.MemoryAllocatorType.POOLED)
                        .setSize(memorySize)
                        .setEnabled(true)
                        .setMinBlockSize(16)
                        .setPageSize(1 << 20);

        ClientConfig clientConfig = new ClientConfig();
        clientConfig.setNativeMemoryConfig(nativeMemoryConfig);
        clientConfig.getGroupConfig().setName("dev").setPassword("dev-pass");
        clientConfig.getNetworkConfig().addAddress("192.168.56.1:5701");

        HazelcastInstance client = HazelcastClient.newHazelcastClient(clientConfig);
        IMap<String, String> testMap = client.getMap("offheap_map_v1");

        System.out.print("Starting ....");
        for (int i = 0; i < 1000000000; i++) {
            testMap.put("key-" + i, "value is " + i);
        }
        System.out.println("Done!");
    }
}
```

Another problem: after about 200,000 entries had been put, the Hazelcast Enterprise server stopped with this exception:

```
com.hazelcast.memory.NativeOutOfMemoryError: Queue has 0 available chunks. Data requires 1 chunks. Storage is full!
    at com.hazelcast.elasticmemory.IntegerQueue.poll(IntegerQueue.java:50)
    at com.hazelcast.elasticmemory.UnsafeStorage.reserve(UnsafeStorage.java:176)
    at com.hazelcast.elasticmemory.UnsafeStorage.put(UnsafeStorage.java:97)
    at com.hazelcast.elasticmemory.UnsafeStorage.put(UnsafeStorage.java:33)
    at com.hazelcast.map.impl.record.NativeRecordWithStats.setValue(NativeRecordWithStats.java:59)
    at com.hazelcast.map.impl.record.NativeRecordWithStats.<init>(NativeRecordWithStats.java:34)
    at com.hazelcast.map.impl.record.NativeRecordFactory.newRecord(NativeRecordFactory.java:50)
    at com.hazelcast.map.impl.MapContainer.createRecord(MapContainer.java:194)
    at com.hazelcast.map.impl.AbstractRecordStore.createRecord(AbstractRecordStore.java:97)
    at com.hazelcast.map.impl.DefaultRecordStore.put(DefaultRecordStore.java:793)
    at com.hazelcast.map.impl.operation.PutOperation.run(PutOperation.java:34)
    at com.hazelcast.spi.impl.operationservice.impl.OperationRunnerImpl.run(OperationRunnerImpl.java:137)
    at com.hazelcast.spi.impl.operationexecutor.classic.ClassicOperationExecutor.runOnCallingThread(ClassicOperationExecutor.java:380)
    at com.hazelcast.spi.impl.operationexecutor.classic.ClassicOperationExecutor.runOnCallingThreadIfPossible(ClassicOperationExecutor.java:342)
    at com.hazelcast.spi.impl.operationservice.impl.Invocation.doInvokeLocal(Invocation.java:247)
    at com.hazelcast.spi.impl.operationservice.impl.Invocation.doInvoke(Invocation.java:231)
    at com.hazelcast.spi.impl.operationservice.impl.Invocation.invokeInternal(Invocation.java:198)
    at com.hazelcast.spi.impl.operationservice.impl.Invocation.invoke(Invocation.java:168)
    at com.hazelcast.spi.impl.operationservice.impl.InvocationBuilderImpl.invoke(InvocationBuilderImpl.java:51)
    at com.hazelcast.client.impl.client.PartitionClientRequest.process(PartitionClientRequest.java:58)
    at com.hazelcast.client.impl.ClientEngineImpl$ClientPacketProcessor.processRequest(ClientEngineImpl.java:468)
    at com.hazelcast.client.impl.ClientEngineImpl$ClientPacketProcessor.run(ClientEngineImpl.java:384)
    at com.hazelcast.spi.impl.operationservice.impl.OperationRunnerImpl.run(OperationRunnerImpl.java:100)
    at com.hazelcast.spi.impl.operationexecutor.classic.OperationThread.processPartitionSpecificRunnable(OperationThread.java:130)
    at com.hazelcast.spi.impl.operationexecutor.classic.OperationThread.process(OperationThread.java:120)
    at com.hazelcast.spi.impl.operationexecutor.classic.OperationThread.doRun(OperationThread.java:101)
    at com.hazelcast.spi.impl.operationexecutor.classic.OperationThread.run(OperationThread.java:76)
```

Has anyone run into this and can give me some advice?

Thanks and best regards,
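To put the demo loop's target in perspective, here is a rough back-of-the-envelope sketch (not part of the original question). It counts only the raw character bytes of the keys and values the loop writes, `"key-" + i` and `"value is " + i`, at one byte per ASCII character. It deliberately ignores Hazelcast's serialization headers, per-record metadata and backup copies, so real usage can only be higher. The class and method names are hypothetical, and treating `GIGABYTES` as 2^30 bytes is an assumption.

```java
public class PayloadEstimate {

    /**
     * Raw byte count of the strings the demo loop writes for 0 <= i < n:
     * "key-" + i has 4 + digits(i) ASCII chars, "value is " + i has 9 + digits(i).
     * Serialization headers, record metadata and backups are NOT included.
     */
    static long rawPayloadBytes(long n) {
        long digitTotal = 0; // sum of digits(i) over 0 <= i < n
        long lo = 0;         // smallest number with d digits (0 when d == 1)
        long hi = 9;         // largest number with d digits
        for (int d = 1; lo < n; d++) {
            long upper = Math.min(hi, n - 1);
            digitTotal += (upper - lo + 1) * d;
            lo = hi + 1;
            hi = hi * 10 + 9;
        }
        return 13 * n + 2 * digitTotal;
    }

    public static void main(String[] args) {
        // <size value="2" unit="GIGABYTES"/>, assuming binary (1024-based) units
        long nativeBytes = 2L << 30;
        for (long n : new long[] {200_000L, 1_000_000_000L}) {
            long bytes = rawPayloadBytes(n);
            System.out.printf("%,d entries -> %,d raw bytes (%.1f%% of 2 GiB)%n",
                    n, bytes, 100.0 * bytes / nativeBytes);
        }
    }
}
```

For the full 1,000,000,000-iteration loop this comes to 30,777,777,780 bytes (about 28.7 GiB) of raw characters alone, far more than the 2 GB configured for native memory and the 10 GB in the license key. For the first 200,000 entries it is only 4,777,780 bytes, so the raw payload by itself does not explain the store filling up at that point.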

1 Answer

To actually answer the question: Hazelcast 3.5 does not support the HD memory store for IMap, only for JCache. Starting with 3.6, it is available for IMap as well.
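Since the deciding factor here is the version, a startup guard can catch the mismatch early. The following is a minimal sketch that encodes only the rule stated in the answer (HD for IMap from 3.6 on). The class and method names are hypothetical, and how you obtain the version string is left open. Parsing assumes versions of the form `major.minor[.patch][-suffix]`.

```java
public class HdSupportCheck {

    /**
     * Hypothetical helper: true if a Hazelcast version string is at least 3.6,
     * the release the answer names as the first with HD memory for IMap.
     * Expects "major.minor[.patch][-suffix]", e.g. "3.5.2" or "3.6-EA".
     */
    static boolean imapHdSupported(String version) {
        String[] parts = version.split("[.\\-]");
        int major = Integer.parseInt(parts[0]);
        int minor = parts.length > 1 ? Integer.parseInt(parts[1]) : 0;
        return major > 3 || (major == 3 && minor >= 6);
    }

    public static void main(String[] args) {
        for (String v : new String[] {"3.5.2", "3.6", "3.6-EA", "3.10.1"}) {
            System.out.println(v + " -> " + imapHdSupported(v));
        }
    }
}
```

Comparing the minor number as an integer rather than as a string matters for versions such as 3.10, which would otherwise sort before 3.6.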
