Extract individual fields from a table image to Excel using OCR

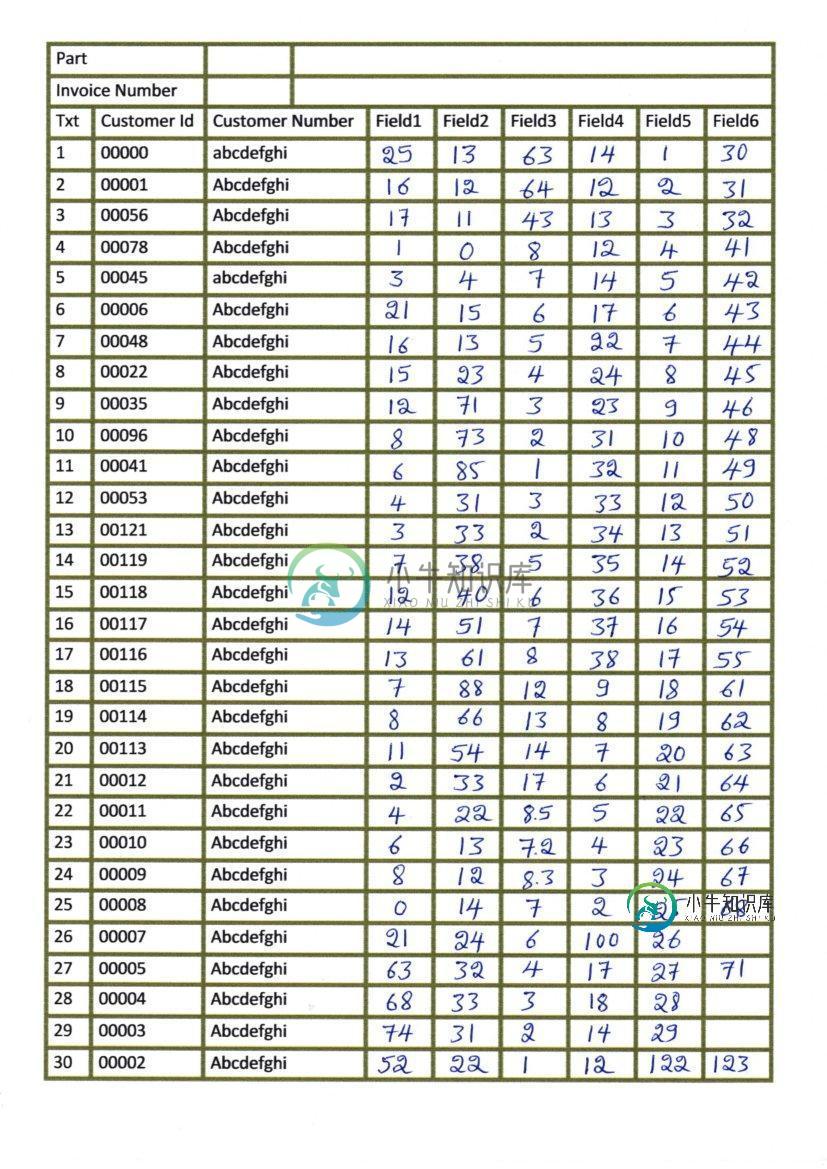

I have scanned images that contain tables like the one in this image:

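I am trying to extract each box separately and run OCR on it, but when I detect the horizontal and vertical lines and then detect the boxes, I get the following image: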

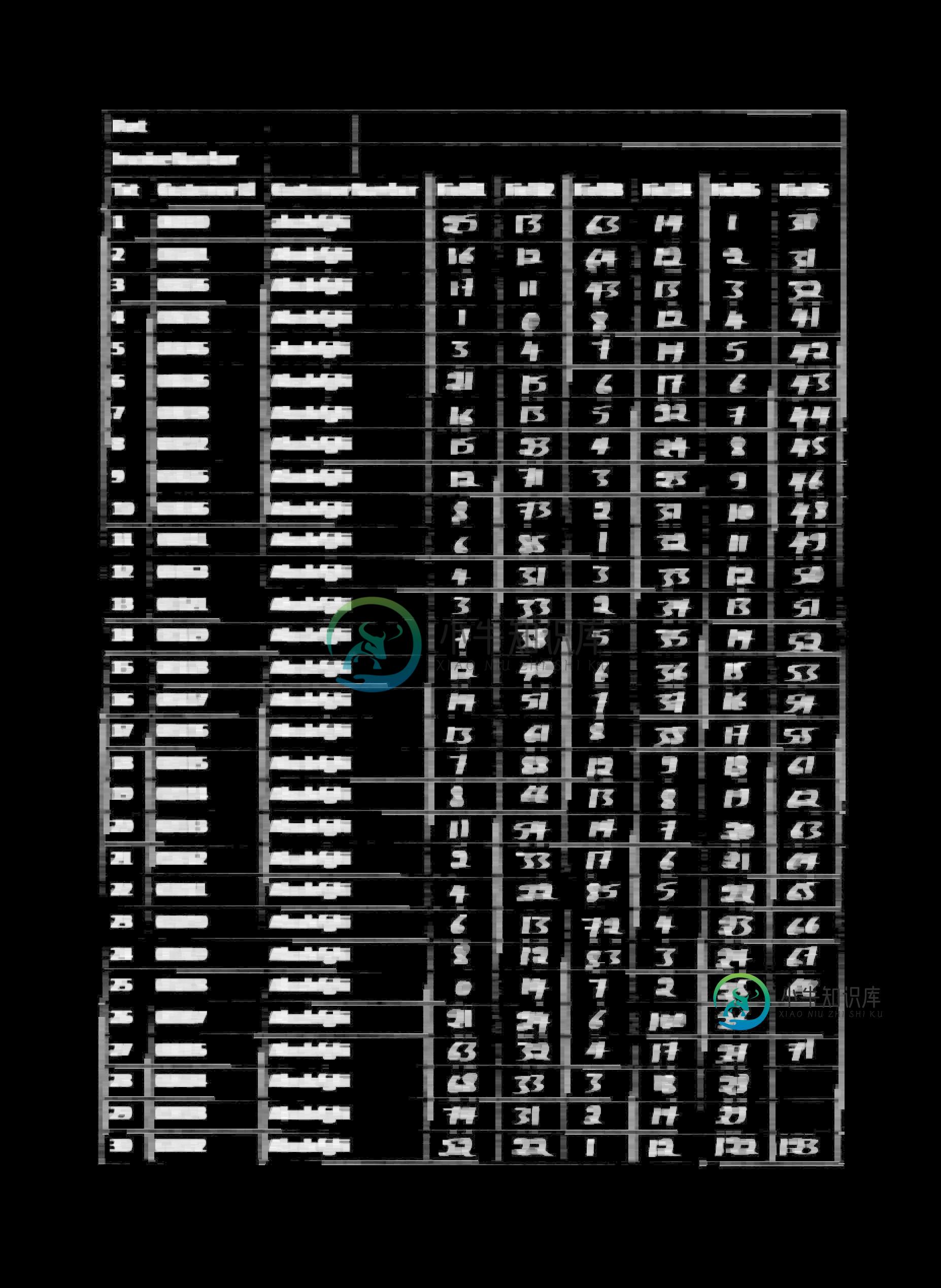

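When I apply other transformations to detect the text (erosion and dilation), some remnants of the lines still come along with the text, as shown below:

I am unable to isolate just the text for OCR, and proper bounding boxes are not generated, as shown below: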

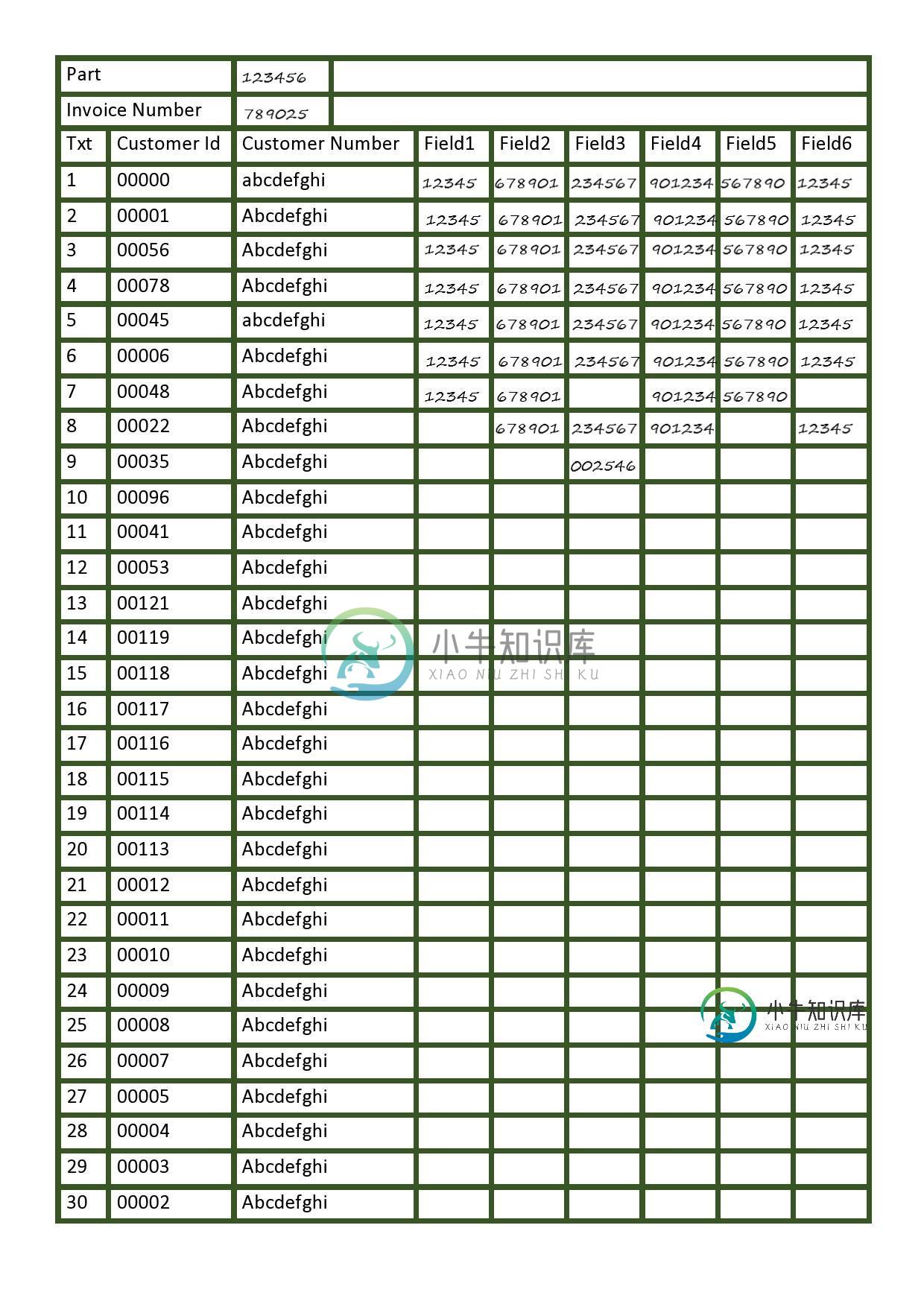

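I cannot get clearly separated boxes from the real lines. I tried the same approach on an image edited in Paint (shown below) to add digits, and there it works.

I don't know which part I am doing wrong, but if there is anything I should try, or anything I should change or add to my question, please tell me.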

#Loading all required libraries
%pylab inline
import cv2
import numpy as np
import pandas as pd
import pytesseract
import matplotlib.pyplot as plt
import statistics
from time import sleep
import random

img = cv2.imread('images/scan1.jpg',0)

# for adding border to an image
img1= cv2.copyMakeBorder(img,50,50,50,50,cv2.BORDER_CONSTANT,value=[255,255])

# Thresholding the image
(thresh, th3) = cv2.threshold(img1, 255, 255,cv2.THRESH_BINARY|cv2.THRESH_OTSU)

# to flip image pixel values
th3 = 255-th3

# initialize kernels for table boundaries detections
if(th3.shape[0]<1000):
    ver = np.array([[1]] * 7)   # 7x1 column of ones
    hor = np.array([[1,1,1,1,1,1]])
else:
    ver = np.array([[1]] * 19)  # 19x1 column of ones
    hor = np.array([[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1]])

# to detect vertical lines of table borders
img_temp1 = cv2.erode(th3, ver, iterations=3)
verticle_lines_img = cv2.dilate(img_temp1, ver, iterations=3)

# to detect horizontal lines of table borders
img_hor = cv2.erode(th3, hor, iterations=3)
hor_lines_img = cv2.dilate(img_hor, hor, iterations=4)

# adding horizontal and vertical lines
hor_ver = cv2.add(hor_lines_img,verticle_lines_img)
hor_ver = 255-hor_ver

# subtracting table borders from image
temp = cv2.subtract(th3,hor_ver)
temp = 255-temp

#Doing xor operation for erasing table boundaries
tt = cv2.bitwise_xor(img1,temp)
iii = cv2.bitwise_not(tt)
tt1=iii.copy()

#kernel initialization
ver1 = np.array([[1,1]] * 9)  # 9x2 block of ones
hor1 = np.array([[1,1,1,1,1,1,1,1,1,1],
                 [1,1,1,1,1,1,1,1,1,1]])

#morphological operation
temp1 = cv2.erode(tt1, ver1, iterations=2)
verticle_lines_img1 = cv2.dilate(temp1, ver1, iterations=1)
temp12 = cv2.erode(tt1, hor1, iterations=1)
hor_lines_img2 = cv2.dilate(temp12, hor1, iterations=1)

# doing or operation for detecting only text part and removing rest all
hor_ver = cv2.add(hor_lines_img2,verticle_lines_img1)
dim1 = (hor_ver.shape[1],hor_ver.shape[0])
dim = (hor_ver.shape[1]*2,hor_ver.shape[0]*2)

# resizing image to its double size to increase the text size
resized = cv2.resize(hor_ver, dim, interpolation = cv2.INTER_AREA)

#bitwise not operation for flipping the pixel values so as to apply morphological operations such as dilation and erosion
want = cv2.bitwise_not(resized)

if(want.shape[0]<1000):
    kernel1 = np.array([[1,1,1]])
    kernel2 = np.array([[1,1],
                        [1,1]])
    kernel3 = np.array([[1,0,1],[0,1,0],
                        [1,0,1]])
else:
    kernel1 = np.array([[1,1,1,1,1,1]])
    kernel2 = np.array([[1,1,1,1,1],
                        [1,1,1,1,1],
                        [1,1,1,1,1],
                        [1,1,1,1,1]])

tt1 = cv2.dilate(want,kernel1,iterations=2)

# getting image back to its original size
resized1 = cv2.resize(tt1, dim1, interpolation = cv2.INTER_AREA)

# Find contours for image, which will detect all the boxes
contours1, hierarchy1 = cv2.findContours(resized1, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

#function to sort contours by the x or y coordinate of their bounding boxes, depending on method
def sort_contours(cnts, method="left-to-right"):
    # initialize the reverse flag and sort index
    reverse = False
    i = 0

    # handle if we need to sort in reverse
    if method == "right-to-left" or method == "bottom-to-top":
        reverse = True

    # handle if we are sorting against the y-coordinate rather than
    # the x-coordinate of the bounding box
    if method == "top-to-bottom" or method == "bottom-to-top":
        i = 1

    # construct the list of bounding boxes and sort them by the
    # selected coordinate
    boundingBoxes = [cv2.boundingRect(c) for c in cnts]
    (cnts, boundingBoxes) = zip(*sorted(zip(cnts, boundingBoxes),
                                        key=lambda b:b[1][i], reverse=reverse))

    # return the list of sorted contours and bounding boxes
    return (cnts, boundingBoxes)

#sorting contours by calling function
(cnts, boundingBoxes) = sort_contours(contours1, method="top-to-bottom")

#storing the height of every bounding box
heightlist=[]
for i in range(len(boundingBoxes)):
    heightlist.append(boundingBoxes[i][3])

#sorting height values
heightlist.sort()
sportion = int(.5*len(heightlist))
eportion = int(0.05*len(heightlist))

#taking 50% to 95% values of heights and calculate their mean
#this will neglect small bounding boxes which are basically noise
try:
    medianheight = statistics.mean(heightlist[-sportion:-eportion])
except:
    medianheight = statistics.mean(heightlist[-sportion:-2])

#keeping bounding boxes whose height is at least 70% of the mean height and dropping all those where
# the ratio of width to height is less than 0.9
box =[]
imag = iii.copy()
for i in range(len(cnts)):
    cnt = cnts[i]
    x,y,w,h = cv2.boundingRect(cnt)
    if(h>=.7*medianheight and w/h > 0.9):
        image = cv2.rectangle(imag,(x+4,y-2),(x+w-5,y+h),(0,255,0),1)
        box.append([x,y,w,h])

# to show image
###Now we have badly detected boxes image as shown

3 answers

Here is a function for layout detection using Tesseract OCR. You can experiment with different RIL levels and PSM values. For more details, see: https://github.com/sirfz/tesserocr

import os
import platform
from typing import List, Tuple

from tesserocr import PyTessBaseAPI, iterate_level, RIL

system = platform.system()
if system == 'Linux':
    tessdata_folder_default = ''
elif system == 'Windows':
    tessdata_folder_default = r'C:\Program Files (x86)\Tesseract-OCR\tessdata'
else:
    raise NotImplementedError

# this tesseract specific env variable takes precedence for tessdata folder location selection
# especially important for windows, as we don't know if we're running 32 or 64bit tesseract
tessdata_folder = os.getenv('TESSDATA_PREFIX', tessdata_folder_default)

def get_layout_boxes(input_image,  # PIL image object
                     level: RIL,
                     include_text: bool,
                     include_boxes: bool,
                     language: str,
                     psm: int,
                     tessdata_path='') -> List[Tuple]:
    """
    Get image components coordinates. It will return also text if include_text is True.
    :param input_image: input PIL image
    :param level: page iterator level, please see "RIL" enum
    :param include_text: if True return boxes texts
    :param include_boxes: if True return boxes coordinates
    :param language: language for OCR
    :param psm: page segmentation mode, by default it is PSM.AUTO which is 3
    :param tessdata_path: the path to the tessdata folder
    :return: list of tuples: [((x1, y1, x2, y2), text)), ...]
    """
    assert any((include_text, include_boxes)), (
        'Both include_text and include_boxes can not be False.')

    if not tessdata_path:
        tessdata_path = tessdata_folder

    try:
        with PyTessBaseAPI(path=tessdata_path, lang=language) as api:
            api.SetImage(input_image)
            api.SetPageSegMode(psm)
            api.Recognize()
            page_iterator = api.GetIterator()
            data = []
            for pi in iterate_level(page_iterator, level):
                bounding_box = pi.BoundingBox(level)
                if bounding_box is not None:
                    text = pi.GetUTF8Text(level) if include_text else None
                    box = bounding_box if include_boxes else None
                    data.append((box, text))
            return data
    except RuntimeError:
        print('Please specify correct path to tessdata.')

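nanthancy's answer is also accurate. I used the script below to get each box and sort the boxes by column and row.

Note: most of the code comes from a Medium blog post by Kanan Vyas: https://medium.com/coinmonks/a-box-detection-algorithm-for-any-image-containing-boxes-756c15d7ed26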

#most of this code is taken from the blog post by Kanan Vyas here:
#https://medium.com/coinmonks/a-box-detection-algorithm-for-any-image-containing-boxes-756c15d7ed26
import cv2
import numpy as np

img = cv2.imread('images/scan2.jpg',0)

#fn to show np images with cv2 and close on any key press
def imshow(img, label='default'):
    cv2.imshow(label, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

# Thresholding the image
(thresh, img_bin) = cv2.threshold(img, 250, 255,cv2.THRESH_BINARY|cv2.THRESH_OTSU)

#inverting the image
img_bin = 255-img_bin

# Defining a kernel length
kernel_length = np.array(img).shape[1]//80

# A vertical kernel of (1 x kernel_length), which will detect all the vertical lines in the image.
verticle_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1, kernel_length))
# A horizontal kernel of (kernel_length x 1), which will help to detect all the horizontal lines in the image.
hori_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (kernel_length, 1))
# A kernel of (3 x 3) ones.
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))

# Morphological operation to detect vertical lines from an image
img_temp1 = cv2.erode(img_bin, verticle_kernel, iterations=3)
verticle_lines_img = cv2.dilate(img_temp1, verticle_kernel, iterations=3)
#cv2.imwrite("verticle_lines.jpg",verticle_lines_img)

# Morphological operation to detect horizontal lines from an image
img_temp2 = cv2.erode(img_bin, hori_kernel, iterations=3)
horizontal_lines_img = cv2.dilate(img_temp2, hori_kernel, iterations=3)
#cv2.imwrite("horizontal_lines.jpg",horizontal_lines_img)

# Weighting parameters, this will decide the quantity of an image to be added to make a new image.
alpha = 0.5
beta = 1.0 - alpha
# This function helps to add two images with specific weight parameters to get a third image as the summation of the two.
img_final_bin = cv2.addWeighted(verticle_lines_img, alpha, horizontal_lines_img, beta, 0.0)
img_final_bin = cv2.erode(~img_final_bin, kernel, iterations=2)
(thresh, img_final_bin) = cv2.threshold(img_final_bin, 128,255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
cv2.imwrite("img_final_bin.jpg",img_final_bin)

# Find contours for image, which will detect all the boxes
contours, hierarchy = cv2.findContours(img_final_bin, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

""" this section saves each extracted box as a separate image.
idx = 0
for c in contours:
    # Returns the location and width,height for every contour
    x, y, w, h = cv2.boundingRect(c)
    #only selecting boxes within certain width height range
    if (w > 10 and h > 15 and h < 50):
        idx += 1
        new_img = img[y:y+h, x:x+w]
        #cv2.imwrite("kanan/1/"+ "{}-{}-{}-{}".format(x, y, w, h) + '.jpg', new_img)
"""

#get set of all y-coordinates to sort boxes row wise
def getsety(boxes):
    ally = []
    for b in boxes:
        ally.append(b[1])
    ally = set(ally)
    ally = sorted(ally)
    return ally

#sort boxes by y in certain range, because if image is tilted then same row boxes
#could have different Ys but within certain range
def sort_boxes(boxes, y, row_column):
    l = []
    for b in boxes:
        if (b[2] > 10 and b[3] > 15 and b[3] < 50):
            if b[1] >= y - 7 and b[1] <= y + 7:
                l.append(b)
    if l in row_column:
        return row_column
    else:
        row_column.append(l)
        return row_column

#sort each row using X of each box to sort it column wise
def sortrows(rc):
    new_rc = []
    for row in rc:
        r_new = sorted(row, key = lambda cell: cell[0])
        new_rc.append(r_new)
    return new_rc

#bounding boxes (x, y, w, h) of the contours found above; this script never
#defined boundingBoxes before using it, so it is computed here
boundingBoxes = [cv2.boundingRect(c) for c in contours]

row_column = []
for i in getsety(boundingBoxes):
    row_column = sort_boxes(boundingBoxes, i, row_column)
row_column = [i for i in row_column if i != []]

#final list with sorted boxes from top left to bottom right
row_column = sortrows(row_column)

I wrote this in a Jupyter notebook and copy-pasted it here, so let me know if any errors come up.

Thanks, everyone, for the answers.
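The row and column sorting described in this answer can also be sketched in a few lines of pure Python. This is only an illustration under assumptions: `group_into_rows` and `y_tol` are hypothetical names, boxes are `(x, y, w, h)` tuples as `cv2.boundingRect` returns them, and the sample boxes are made up. Unlike the script above, it compares each box with the top-most box of the current row, so it does not need the duplicate-row check.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), the layout cv2.boundingRect returns


def group_into_rows(boxes: List[Box], y_tol: int = 7) -> List[List[Box]]:
    """Group boxes into rows by y, then sort each row left to right by x.

    y_tol is a hypothetical tolerance in pixels (7 mirrors the script above).
    """
    rows: List[List[Box]] = []
    for box in sorted(boxes, key=lambda b: b[1]):
        # Compare with the top-most box of the current row.
        if rows and abs(box[1] - rows[-1][0][1]) <= y_tol:
            rows[-1].append(box)
        else:
            rows.append([box])
    return [sorted(row, key=lambda b: b[0]) for row in rows]


# Made-up boxes from a slightly tilted two-row table.
sample = [(120, 52, 40, 20), (10, 50, 40, 20), (65, 48, 40, 20),
          (10, 90, 40, 20), (65, 93, 40, 20)]
grid = group_into_rows(sample)
```

Here `grid[0]` holds the three boxes of the first row ordered by x, and `grid[1]` the two boxes of the second row. The size filter from the script (`w > 10`, `15 < h < 50`) would still have to be applied before grouping.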

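You're on the right track. Here is a continuation of your approach with slight modifications. The idea is:

Obtain a binary image. Load the image, convert it to grayscale, and apply Otsu's threshold.

Remove all character text contours. We create a rectangular kernel and perform a morphological opening to keep only the horizontal/vertical lines. This effectively turns the text into tiny noise, so we find contours and filter by contour area to remove them.

Repair the horizontal/vertical lines and extract each ROI. We apply a morphological close to fix any broken lines and smooth the table. From here, we sort the box field contours using imutils.contours.sort_contours() with the top-to-bottom parameter. Next, we find contours, filter by contour area, and extract each ROI.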
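To see why an opening separates lines from text, here is a toy sketch in pure Python rather than OpenCV. It is an illustration under assumptions: the helper names are hypothetical, the image is a made-up 3 x 8 binary grid, and it uses a 1 x k horizontal kernel (the line-shaped kernel idea from the question) instead of the 3 x 3 rectangle used in this answer's code. Runs of foreground shorter than the kernel vanish, while a long line survives unchanged.

```python
from typing import List

Grid = List[List[int]]  # binary image: 1 = foreground (ink), 0 = background


def erode_h(img: Grid, k: int) -> Grid:
    """Erode with a 1 x k horizontal kernel anchored at its left end."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w - k + 1):
            # Keep the pixel only if the whole kernel fits inside foreground.
            if all(img[y][x + d] for d in range(k)):
                out[y][x] = 1
    return out


def dilate_h(img: Grid, k: int) -> Grid:
    """Dilate with the same kernel, restoring runs that survived erosion."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if img[y][x]:
                for d in range(k):
                    if x + d < w:
                        out[y][x + d] = 1
    return out


def open_h(img: Grid, k: int) -> Grid:
    """Morphological opening: erosion followed by dilation."""
    return dilate_h(erode_h(img, k), k)


toy = [
    [1, 0, 1, 1, 0, 1, 0, 0],  # short, text-like strokes
    [1, 1, 1, 1, 1, 1, 1, 1],  # a table border
    [0, 1, 1, 0, 0, 1, 1, 1],  # more short strokes
]
lines_only = open_h(toy, 4)
```

With `k = 4`, only the middle row remains in `lines_only`. On real scans the kernel length has to be longer than the widest character stroke but shorter than the shortest table line, which is why the scripts on this page derive it from the image size.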

Here is a visualization of each box field and the extracted ROI.

Code

import cv2
import numpy as np
from imutils import contours

# Load image, grayscale, Otsu's threshold
image = cv2.imread('1.jpg')
original = image.copy()
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

# Remove text characters with morph open and contour filtering
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3,3))
opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel, iterations=1)
cnts = cv2.findContours(opening, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
for c in cnts:
    area = cv2.contourArea(c)
    if area < 500:
        cv2.drawContours(opening, [c], -1, (0,0,0), -1)

# Repair table lines, sort contours, and extract ROI
close = 255 - cv2.morphologyEx(opening, cv2.MORPH_CLOSE, kernel, iterations=1)
cnts = cv2.findContours(close, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
(cnts, _) = contours.sort_contours(cnts, method="top-to-bottom")
for c in cnts:
    area = cv2.contourArea(c)
    if area < 25000:
        x,y,w,h = cv2.boundingRect(c)
        cv2.rectangle(image, (x, y), (x + w, y + h), (36,255,12), -1)
        ROI = original[y:y+h, x:x+w]

        # Visualization
        cv2.imshow('image', image)
        cv2.imshow('ROI', ROI)
        cv2.waitKey(20)

cv2.imshow('opening', opening)
cv2.imshow('close', close)
cv2.imshow('image', image)
cv2.waitKey()
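The code above stops once each ROI has been extracted. The remaining step from the question title, getting the recognized text into a spreadsheet, could look roughly like the sketch below. Everything in it is an assumption for illustration: `cells_to_csv` and `read_cell` are hypothetical names, the boxes are expected to be already grouped into rows, and the OCR call is replaced by a stand-in so the sketch runs without Tesseract. In practice `read_cell` might crop the ROI and pass it to `pytesseract.image_to_string`. The output is CSV written with the standard library, which Excel can open.

```python
import csv
import io
from typing import Callable, List, Sequence, TextIO, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h)


def cells_to_csv(rows: Sequence[Sequence[Box]],
                 read_cell: Callable[[Box], str],
                 out: TextIO) -> List[List[str]]:
    """Run read_cell on every box (row by row) and write the table as CSV."""
    table = [[read_cell(box).strip() for box in row] for row in rows]
    csv.writer(out).writerows(table)
    return table


# Stand-in for real OCR so the sketch runs without Tesseract installed.
def fake_ocr(box: Box) -> str:
    x, y, _, _ = box
    return f" r{y}c{x}\n"


rows = [[(0, 0, 10, 10), (10, 0, 10, 10)],
        [(0, 10, 10, 10), (10, 10, 10, 10)]]
buffer = io.StringIO()
table = cells_to_csv(rows, fake_ocr, buffer)
```

To write an actual file instead of an in-memory buffer, pass a file opened with `newline=''`, as the `csv` module documentation recommends; the file name is up to you.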
