Data.Array.Accelerate defines an embedded language of array computations for high-performance computing in Haskell. Computations on multi-dimensional, regular arrays are expressed in the form of parameterised collective operations (such as maps, reductions, and permutations). These computations are online-compiled and executed on a range of architectures.

For more details, see our papers:

- Accelerating Haskell Array Codes with Multicore GPUs

- Optimising Purely Functional GPU Programs (slides)

- Embedding Foreign Code

- Type-safe Runtime Code Generation: Accelerate to LLVM (slides) (video)

- Streaming Irregular Arrays (video)

There are also slides from some fairly recent presentations:

- Embedded Languages for High-Performance Computing in Haskell

- GPGPU Programming in Haskell with Accelerate (video) (workshop)

Chapter 6 of Simon Marlow's book Parallel and Concurrent Programming in Haskell contains a tutorial introduction to Accelerate.

Trevor's PhD thesis details the design and implementation of the frontend optimisations and the CUDA backend.


A simple example

As a simple example, consider the computation of a dot product of two vectors of single-precision floating-point numbers:

dotp :: Acc (Vector Float) -> Acc (Vector Float) -> Acc (Scalar Float)

dotp xs ys = fold (+) 0 (zipWith (*) xs ys)

Apart from the type signature, this code is almost identical to the corresponding Haskell code on lists of floats. The Acc types indicate that the computation may be online-compiled for performance; for example, running it with Data.Array.Accelerate.LLVM.PTX.run offloads it to a GPU on the fly.
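For comparison, the list-based counterpart mentioned above might look like the following minimal sketch. It uses only the standard Prelude; the name dotpList is hypothetical and is not part of Accelerate.

```haskell
-- Plain-Haskell dot product on lists, for comparison with dotp.
-- NOTE: dotpList is an illustrative name, not an Accelerate function.
dotpList :: [Float] -> [Float] -> Float
dotpList xs ys = foldr (+) 0 (zipWith (*) xs ys)
```

For instance, dotpList [1, 2, 3] [4, 5, 6] evaluates to 32.0. The body has the same shape as dotp; only the types (and therefore the execution strategy) differ.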

Availability

Package accelerate is available from

- Hackage: accelerate - install with cabal install accelerate

- GitHub: AccelerateHS/accelerate - get the source with git clone https://github.com/AccelerateHS/accelerate.git

The easiest way to compile the source distributions is via the Haskell stack tool.

Additional components

The following supported add-ons are available as separate packages:

- accelerate-llvm-native: Backend targeting multicore CPUs

- accelerate-llvm-ptx: Backend targeting CUDA-enabled NVIDIA GPUs. Requires a GPU with compute capability 2.0 or greater (see the table on Wikipedia)

- accelerate-examples: Computational kernels and applications showcasing the use of Accelerate as well as a regression test suite (supporting function and performance testing)

- Conversion between various formats:

  - accelerate-io: For copying data directly between raw pointers

  - accelerate-io-array: Immutable arrays

  - accelerate-io-bmp: Uncompressed BMP image files

  - accelerate-io-bytestring: Compact, immutable binary data

  - accelerate-io-cereal: Binary serialisation of arrays using cereal

  - accelerate-io-JuicyPixels: Images in various pixel formats

  - accelerate-io-repa: Another Haskell library for high-performance parallel arrays

  - accelerate-io-serialise: Binary serialisation of arrays using serialise

  - accelerate-io-vector: Efficient boxed and unboxed one-dimensional arrays

- accelerate-fft: Fast Fourier transform implementation, with FFI bindings to optimised implementations

- accelerate-blas: BLAS and LAPACK operations, with FFI bindings to optimised implementations

- accelerate-bignum: Fixed-width large integer arithmetic

- colour-accelerate: Colour representations in Accelerate (RGB, sRGB, HSV, and HSL)

- containers-accelerate: Hashing-based container types

- gloss-accelerate: Generate gloss pictures from Accelerate

- gloss-raster-accelerate: Parallel rendering of raster images and animations

- hashable-accelerate: A class for types which can be converted into a hash value

- lens-accelerate: Lens operators for Accelerate types

- linear-accelerate: Linear vector spaces in Accelerate

- mwc-random-accelerate: Generate Accelerate arrays filled with high quality pseudorandom numbers

- numeric-prelude-accelerate: Lifting the numeric-prelude to Accelerate

- wigner-ville-accelerate: Wigner-Ville time-frequency distribution

Install them from Hackage with cabal install PACKAGENAME.

Documentation

- Haddock documentation is included and linked with the individual package releases on Hackage.

- Haddock documentation for in-development components can be found here.

- The idea behind the HOAS (higher-order abstract syntax) to de-Bruijn conversion used in the library is described separately.

Examples

accelerate-examples

The accelerate-examples package provides a range of computational kernels and a few complete applications. To install these from Hackage, issue cabal install accelerate-examples. The examples include:

- An implementation of Canny edge detection

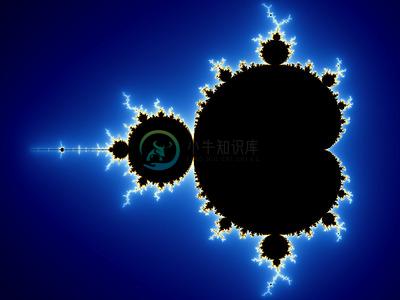

- An interactive Mandelbrot set generator

- An N-body simulation of gravitational attraction between solid particles

- An implementation of the PageRank algorithm

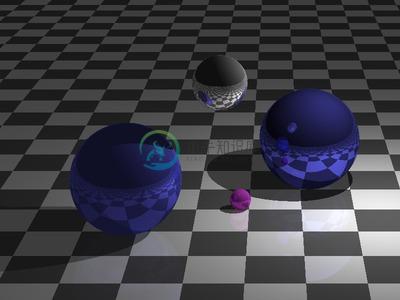

- A simple ray-tracer

- A particle-based simulation of stable fluid flows

- A cellular automata simulation

- A "password recovery" tool, for dictionary lookup of MD5 hashes

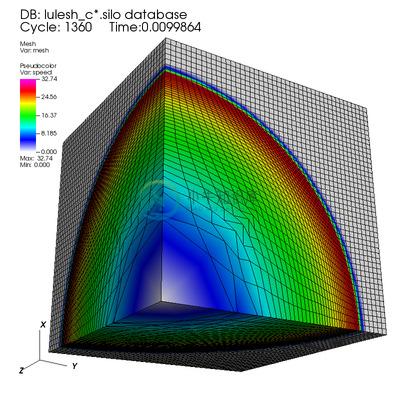

LULESH

LULESH-accelerate is an implementation of the Livermore Unstructured Lagrangian Explicit Shock Hydrodynamics (LULESH) mini-app. LULESH represents a typical hydrodynamics code such as ALE3D, but is a highly simplified application, hard-coded to solve the Sedov blast problem on an unstructured hexahedron mesh.

Additional examples

Accelerate users have also built some substantial applications of their own. Please feel free to add your own examples!

- Jonathan Fraser, GPUVAC: An explicit advection magnetohydrodynamics simulation

- David van Balen, Sudokus: A sudoku solver

- Trevor L. McDonell, lol-accelerate: A backend to the Λ ○ λ (Lol) library for ring-based lattice cryptography

- Henning Thielemann, patch-image: Combine a collage of overlapping images

- apunktbau, bildpunkt: A ray-marching distance field renderer

- klarh, hasdy: Molecular dynamics in Haskell using Accelerate

- Alexandros Gremm used Accelerate as part of the 2014 CSCS summer school (code)

Who are we?

The Accelerate team (past and present) consists of:

- Manuel M T Chakravarty (@mchakravarty)

- Gabriele Keller (@gckeller)

- Trevor L. McDonell (@tmcdonell)

- Robert Clifton-Everest (@robeverest)

- Frederik M. Madsen (@fmma)

- Ryan R. Newton (@rrnewton)

- Joshua Meredith (@JoshMeredith)

- Ben Lever (@blever)

- Sean Seefried (@sseefried)

- Ivo Gabe de Wolff (@ivogabe)

The maintainer and principal developer of Accelerate is Trevor L. McDonell (trevor.mcdonell@gmail.com).

Mailing list and contacts

- Mailing list: accelerate-haskell@googlegroups.com (discussions on both use and development are welcome)

- Sign up for the mailing list at the Accelerate Google Groups page

- Bug reports and issues tracking: GitHub project page

- Chat with us on gitter

Citing Accelerate

If you use Accelerate for academic research, you are encouraged (though not required) to cite the following papers:

Manuel M. T. Chakravarty, Gabriele Keller, Sean Lee, Trevor L. McDonell, and Vinod Grover. Accelerating Haskell Array Codes with Multicore GPUs. In DAMP '11: Declarative Aspects of Multicore Programming, ACM, 2011.

Trevor L. McDonell, Manuel M. T. Chakravarty, Gabriele Keller, and Ben Lippmeier. Optimising Purely Functional GPU Programs. In ICFP '13: The 18th ACM SIGPLAN International Conference on Functional Programming, ACM, 2013.

Robert Clifton-Everest, Trevor L. McDonell, Manuel M. T. Chakravarty, and Gabriele Keller. Embedding Foreign Code. In PADL '14: The 16th International Symposium on Practical Aspects of Declarative Languages, Springer-Verlag, LNCS, 2014.

Trevor L. McDonell, Manuel M. T. Chakravarty, Vinod Grover, and Ryan R. Newton. Type-safe Runtime Code Generation: Accelerate to LLVM. In Haskell '15: The 8th ACM SIGPLAN Symposium on Haskell, ACM, 2015.

Robert Clifton-Everest, Trevor L. McDonell, Manuel M. T. Chakravarty, and Gabriele Keller. Streaming Irregular Arrays. In Haskell '17: The 10th ACM SIGPLAN Symposium on Haskell, ACM, 2017.

Accelerate is primarily developed by academics, so citations matter a lot to us. As an added benefit, you increase Accelerate's exposure and potential user (and developer!) base, which is a benefit to all users of Accelerate. Thanks in advance!

What's missing?

Here is a list of features that are currently missing:

- Preliminary API (parts of the API may still change in subsequent releases)

- Many more features... contact us!
