SDN Load Balancing

Note: This project is no longer maintained. A recent fix for the parsing issues has been pushed. The script works as long as the environment is set up correctly. It is not optimized and was written as a proof of concept.

Goal: To perform load balancing on any fat-tree topology using an SDN controller, i.e. Floodlight or OpenDaylight.

Note: The goal is to perform load balancing while keeping latency to a minimum. We use Dijkstra's algorithm to find multiple paths of the same (shortest) length, which narrows the search to a small region of the fat-tree topology. Note also that OpenDaylight by default forwards traffic to all ports, so specific rules may need to be pushed to get proper load-balancing behavior. Currently, the program simply finds the path with the least load and forwards traffic on that path.
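The note above depends on finding every path that ties for the shortest length, not just one. Below is a minimal Python sketch of that variant of Dijkstra's algorithm. It assumes the topology is a plain dict of node -> {neighbor: link weight}, which is a hypothetical representation (not the data structure used by the project's scripts or by the controllers), and the node names are illustrative only.

```python
import heapq
from collections import defaultdict


def all_shortest_paths(graph, src, dst):
    """Return every minimum-cost path from src to dst.

    graph: dict mapping node -> {neighbor: positive link weight}.
    Dijkstra runs as usual, but each node remembers *all* predecessors
    that reach it at the minimum distance, so equal-cost paths survive.
    """
    dist = {src: 0}
    preds = defaultdict(list)
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry, node already settled
        for nbr, w in graph.get(node, {}).items():
            nd = d + w
            if nbr not in dist or nd < dist[nbr]:
                dist[nbr] = nd          # strictly shorter: reset predecessors
                preds[nbr] = [node]
                heapq.heappush(heap, (nd, nbr))
            elif nd == dist[nbr]:
                preds[nbr].append(node)  # another equally short way in
    if dst not in dist:
        return []

    paths = []

    def backtrack(node, suffix):
        # Walk predecessor lists from dst back to src, one path per branch.
        if node == src:
            paths.append([src] + suffix)
            return
        for p in preds[node]:
            backtrack(p, [node] + suffix)

    backtrack(dst, [])
    return paths


# Hypothetical fragment: edge switch e1 reaches e2 via two aggregation switches.
topo = {
    "e1": {"a1": 1, "a2": 1},
    "a1": {"e1": 1, "e2": 1},
    "a2": {"e1": 1, "e2": 1},
    "e2": {"a1": 1, "a2": 1},
}
print(all_shortest_paths(topo, "e1", "e2"))
# [['e1', 'a1', 'e2'], ['e1', 'a2', 'e2']]
```

With unit weights this yields the set of minimum-hop paths, which is the small candidate set that the load comparison then works on.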

The code is somewhat unoptimized, especially the flow rules pushed in ODL.

System Details

- SDN Controller - Floodlight v1.2 or OpenDaylight Beryllium SR1

- Virtual Network Topology - Mininet

- Evaluation & Analysis - Wireshark, iPerf

- OS - Ubuntu 14.04 LTS

Implementation Approach

Base Idea: Make use of REST APIs to collect operational information of the topology and its devices.

- Enable statistics collection in Floodlight (TX, i.e. transmission rate; RX, i.e. receive rate; etc.). This step is not applicable to OpenDaylight.

- Find information about the connected hosts, such as their IP addresses, the switch each one is connected to, MAC addresses, port mapping, etc.

- Obtain path/route information from Host 1 to Host 2, i.e. the hosts between which load balancing is to be performed. Using Dijkstra limits the search to shortest paths and to only one segment of the fat-tree topology.

- Find the total link cost of each of these paths between Host 1 and Host 2. In Floodlight the cost is based on TX and RX, but OpenDaylight provides only transmitted data, so additional REST requests are made to compute it. This adds latency to the application when using OpenDaylight.

- Flows are created based on the minimum transmission cost of the links at the given time.

- Based on the cost, the best path is chosen and static flows are pushed to each switch on the current best path. Information such as in-port, out-port, source IP, destination IP, source MAC and destination MAC is fed into the flows.

- The program repeats this update every minute, which makes it dynamic.
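The cost comparison in the last steps can be sketched as follows. This is not the project's loadbalancer.py; it assumes each candidate path is a list of node names and that per-link costs (for Floodlight, TX plus RX on the link) have already been collected into a dict keyed by (node, next_node). The function names, node names and numbers are all hypothetical.

```python
def path_cost(path, link_cost):
    """Total load on a path: the sum of the cost of each hop (a, b)."""
    return sum(link_cost[(a, b)] for a, b in zip(path, path[1:]))


def best_path(paths, link_cost):
    """Return the candidate with the lowest total cost (the first one wins ties)."""
    return min(paths, key=lambda p: path_cost(p, link_cost))


# Hypothetical equal-length candidates and per-link TX + RX figures.
candidates = [["e1", "a1", "e2"], ["e1", "a2", "e2"]]
load = {
    ("e1", "a1"): 900 + 850,
    ("a1", "e2"): 700 + 650,
    ("e1", "a2"): 40 + 30,
    ("a2", "e2"): 20 + 25,
}
print(best_path(candidates, load))  # ['e1', 'a2', 'e2']
```

Summing the link costs favors the path whose links are least loaded overall. Because all candidates have the same hop count, this choice does not trade away path length.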

Results We Achieved

| Transfer (GBytes) - BLB | Bandwidth (Gbits/sec) - BLB | Transfer (GBytes) - ALB | Bandwidth (Gbits/sec) - ALB |
|---|---|---|---|
| 15.7 | 13.5 | 38.2 | 32.8 |
| 21.9 | 18.8 | 27.6 | 32.3 |
| 24.6 | 21.1 | 40.5 | 34.8 |
| 22.3 | 19.1 | 40.8 | 35.1 |
| 39.8 | 34.2 | 16.5 | 14.2 |
| Average = 24.86 | Average = 21.34 | Average = 32.72 | Average = 29.84 |

iPerf H1 to H3 Before Load Balancing (BLB) and After Load Balancing (ALB)

| Transfer (GBytes) - BLB | Bandwidth (Gbits/sec) - BLB | Transfer (GBytes) - ALB | Bandwidth (Gbits/sec) - ALB |
|---|---|---|---|
| 18.5 | 15.9 | 37.2 | 31.9 |
| 18.1 | 15.5 | 39.9 | 34.3 |
| 23.8 | 20.2 | 40.2 | 34.5 |
| 17.8 | 15.3 | 40.3 | 34.6 |
| 38.4 | 32.9 | 18.4 | 15.8 |
| Average = 23.32 | Average = 19.96 | Average = 35.2 | Average = 30.22 |

iPerf H1 to H4 Before Load Balancing (BLB) and After Load Balancing (ALB)
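The averages in the two iPerf tables can be re-derived from the per-run values, along with the relative change in mean bandwidth after load balancing. Here is a small Python check that uses only the figures from the tables:

```python
from statistics import mean

# Per-run iPerf bandwidth (Gbits/sec) copied from the two tables above.
runs = {
    "H1->H3": {"BLB": [13.5, 18.8, 21.1, 19.1, 34.2],
               "ALB": [32.8, 32.3, 34.8, 35.1, 14.2]},
    "H1->H4": {"BLB": [15.9, 15.5, 20.2, 15.3, 32.9],
               "ALB": [31.9, 34.3, 34.5, 34.6, 15.8]},
}

for pair, bw in runs.items():
    before = mean(bw["BLB"])
    after = mean(bw["ALB"])
    gain = (after - before) / before * 100  # percent change in mean bandwidth
    print(f"{pair}: {before:.2f} -> {after:.2f} Gbits/sec ({gain:+.1f}%)")

# H1->H3: 21.34 -> 29.84 Gbits/sec (+39.8%)
# H1->H4: 19.96 -> 30.22 Gbits/sec (+51.4%)
```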

| Min (ms) | Avg (ms) | Max (ms) | Mdev (ms) |
|---|---|---|---|
| 0.049 | 0.245 | 4.407 | 0.807 |
| 0.050 | 0.155 | 4.523 | 0.575 |
| 0.041 | 0.068 | 0.112 | 0.019 |
| 0.041 | 0.086 | 0.416 | 0.066 |
| 0.018 | 0.231 | 4.093 | 0.759 |
| Average = 0.0398 | Average = 0.157 | Average = 2.7102 | Average = 0.4452 |

Ping from H1 to H4 Before Load Balancing

| Min (ms) | Avg (ms) | Max (ms) | Mdev (ms) |
|---|---|---|---|
| 0.039 | 0.075 | 0.407 | 0.068 |
| 0.048 | 0.078 | 0.471 | 0.091 |
| 0.04 | 0.072 | 0.064 | 0.199 |
| 0.038 | 0.074 | 0.283 | 0.039 |
| 0.048 | 0.099 | 0.509 | 0.108 |
| Average = 0.0426 | Average = 0.0796 | Average = 0.3468 | Average = 0.101 |

Ping from H1 to H4 After Load Balancing

How To Use It?

Video Demo

Requirements

- Download Floodlight (v1.2) / OpenDaylight (Beryllium SR1)

- Install Mininet (v2.2.1)

- Install Open vSwitch

Running The Program (OpenDaylight)

- Download the distribution package from OpenDaylight, then unzip it.

- In a terminal, change to the distribution folder and run `./bin/karaf`.

- Run Mininet: `sudo mn --custom topology.py --topo mytopo --controller=remote,ip=127.0.0.1,port=6633`

- Run `pingall` a couple of times until no packet loss occurs.

- Next, run the odl.py script and provide the input. Read the Floodlight instructions for more insight into what is happening and what input to provide.
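OpenDaylight exposes only transmitted-data counters (as noted in the implementation steps), so a rate has to be derived from two successive REST readings. Below is a minimal sketch that assumes each reading has already been parsed into a (tx_bytes, timestamp_in_seconds) pair. The function name and the sample format are hypothetical and are not taken from odl.py.

```python
def tx_rate_bps(sample_1, sample_2):
    """Transmit rate in bits/s from two (tx_bytes, timestamp_s) readings."""
    (bytes_1, t_1), (bytes_2, t_2) = sample_1, sample_2
    if t_2 <= t_1:
        raise ValueError("the second reading must be taken after the first")
    delta_bytes = max(bytes_2 - bytes_1, 0)  # treat a counter reset as no traffic
    return delta_bytes * 8 / (t_2 - t_1)     # bytes -> bits, per second


# Hypothetical readings of one port's transmitted-bytes counter, 2 s apart.
first = (1_000_000, 10.0)
second = (3_500_000, 12.0)
print(tx_rate_bps(first, second))  # 10000000.0
```

In practice, each timestamp would come from `time.monotonic()` taken right after the corresponding REST request. The extra request and the wait between readings are where the added latency on OpenDaylight comes from.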

Running The Program (Floodlight)

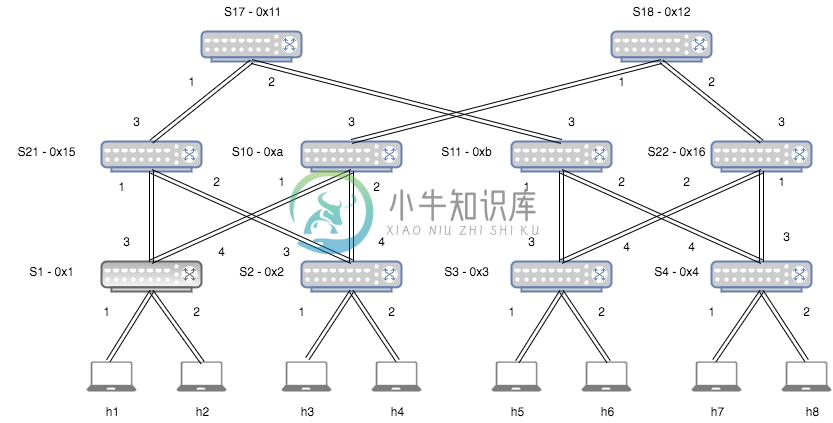

Note: We are currently performing load balancing between h1 and h3 and between h1 and h4. The best path for both is via Switch 1, Port 4. This is the best path selected by the OpenFlow protocol; see the topology to understand why it is Port 4.

Remove the official Floodlight Load Balancer

Run the floodlight.sh shell script

Run Floodlight

Run the fat-tree topology (topology.py) using Mininet:

`sudo mn --custom topology.py --topo mytopo --controller=remote,ip=127.0.0.1,port=6653`

Note: Provide the correct path to topology.py in the command.

Note: Switch IDs are shown next to the switches, and the numbers near the links are port numbers. These port numbers may differ when you run Mininet; the ones shown are what we observed. From here on, all references in the code are with respect to this topology.

Type the following command in Mininet:

`xterm h1 h1`

In the first console of h1, type:

`ping 10.0.0.3`

In the second console of h1, type:

`ping 10.0.0.4`

In the terminal, open a new tab (Ctrl + Shift + T) and type `sudo wireshark`. In Wireshark, go to Capture -> Interfaces, select `s1-eth4` and start the capture. In the filter field, type `ip.addr==10.0.0.3` and check that you are receiving packets for h1 -> h3. Do the same for h1 -> h4. Once you see packets, you can conclude that this is the best path. To confirm it, repeat the above two steps for `s1-eth3`; you will find that no packets are transmitted on this port. The only packets it receives are broadcast and multicast, which you can ignore.

Now, in the second xterm console of h1, stop pinging h4. Our goal is to create congestion on the best path of h1 -> h3 and h1 -> h4 (and vice versa), and h1 pinging h3 is enough for that.

In the terminal, open a new tab and run the loadbalancer.py script.

Provide the input arguments (host 1, host 2 and host 2's neighbor) as integers, for example 1,4,3, where 1 is host 1, 4 is host 2 and 3 is host 2's neighbor. In the topology above, these hosts are h1, h4 and h3 respectively.
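To make the input format concrete, here is a hypothetical helper (not how loadbalancer.py actually reads its input) that turns a string like "1,4,3" into host IP addresses. It assumes Mininet's default addressing, where host hN gets 10.0.0.N, which matches h3 = 10.0.0.3 and h4 = 10.0.0.4 used in the steps above.

```python
def parse_hosts(arg):
    """Split 'host1,host2,neighbor' (e.g. '1,4,3') into Mininet host IPs.

    Assumes Mininet's default addressing, where host hN is 10.0.0.N.
    """
    numbers = [int(part) for part in arg.split(",")]
    if len(numbers) != 3:
        raise ValueError("expected three comma-separated host numbers")
    roles = ("host1", "host2", "neighbor")
    return {role: f"10.0.0.{n}" for role, n in zip(roles, numbers)}


print(parse_hosts("1,4,3"))
# {'host1': '10.0.0.1', 'host2': '10.0.0.4', 'neighbor': '10.0.0.3'}
```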

The loadbalancer.py script performs REST requests, so the link costs will initially be 0. Re-run the script a few times (anywhere from 1 to 10 times). This is because statistics collection has to be enabled first, and once it is, gathering data takes some time. Once the transmission rates start updating, you will get the best path, and flows for it will be statically pushed to all switches on the new best route. Here the best route is for h1 -> h4 and vice versa.
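The step above pushes flows statically to each switch on the new best route. Below is a sketch of one such entry for Floodlight's Static Flow Pusher. It assumes the v1.x REST endpoint /wm/staticflowpusher/json and its OpenFlow 1.3-style match field names (in_port, eth_type, ipv4_src, ...), so please verify these against the documentation for your Floodlight version. The DPID, ports, entry name and MAC addresses are placeholders, and the helper names are hypothetical; they are not taken from loadbalancer.py.

```python
import json
import urllib.request

# Assumed Floodlight v1.x Static Flow Pusher endpoint.
PUSHER_URL = "http://127.0.0.1:8080/wm/staticflowpusher/json"


def static_flow(dpid, name, in_port, out_port, src_ip, dst_ip, src_mac, dst_mac):
    """One static flow entry forwarding matching IPv4 traffic out of out_port."""
    return {
        "switch": dpid,
        "name": name,               # label identifying this entry
        "priority": "32768",
        "in_port": str(in_port),
        "eth_type": "0x0800",       # IPv4; needed before matching on IP fields
        "ipv4_src": src_ip,
        "ipv4_dst": dst_ip,
        "eth_src": src_mac,
        "eth_dst": dst_mac,
        "active": "true",
        "actions": f"output={out_port}",
    }


def build_push_request(entry, url=PUSHER_URL):
    """Prepare (but do not send) the POST that would install the entry."""
    body = json.dumps(entry).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": "application/json"})


# Placeholder values for a single switch on the h1 -> h4 path.
entry = static_flow("00:00:00:00:00:00:00:01", "h1-h4-s1", 1, 3,
                    "10.0.0.1", "10.0.0.4",
                    "00:00:00:00:00:01", "00:00:00:00:00:04")
req = build_push_request(entry)
# urllib.request.urlopen(req) would send it to a running Floodlight controller.
```

A path needs one such entry per switch, plus a mirrored set for the reverse h4 -> h1 direction. The request is only built here so that the sketch runs without a controller.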

To check the flows, perform a REST GET request to http://127.0.0.1:8080/wm/core/switch/all/flow/json
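The same GET can be scripted with the Python standard library. This is a minimal sketch that assumes Floodlight is listening on 127.0.0.1:8080, as in the URL above. The function names are hypothetical, and because the layout of the returned JSON depends on the Floodlight version, the reply is simply decoded.

```python
import json
import urllib.request

FLOWS_URL = "http://127.0.0.1:8080/wm/core/switch/all/flow/json"


def parse_flows(raw):
    """Decode the raw JSON body returned by the flow endpoint."""
    return json.loads(raw.decode("utf-8"))


def get_flows(url=FLOWS_URL, timeout=5):
    """GET every switch's flow table from Floodlight and return it as Python data."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_flows(resp.read())
```

Against a running controller, `print(json.dumps(get_flows(), indent=2))` dumps the flow tables so you can confirm that the pushed entries are present.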

Now, in the second console of h1, type:

`ping 10.0.0.4`

Go to Wireshark and monitor interface `s1-eth4` with the filter `ip.addr==10.0.0.x`, where x is 3 and 4. You will see 10.0.0.3 packets but no 10.0.0.4 packets. Stop this capture and now capture on `s1-eth3`, `s21-eth1`, `s21-eth2` and `s2-eth3` with the filter `ip.addr==10.0.0.x`, where x is 3 and 4. You will see 10.0.0.4 packets but no 10.0.0.3 packets.

Load Balancing Works!
