Getting the Sequelize.js library to work on Amazon Lambda

So I'm trying to run a Lambda on Amazon, and I finally narrowed down the error by testing the Lambda in the Amazon test console.

The error I get is this:

{
  "errorMessage": "Please install mysql2 package manually",
  "errorType": "Error",
  "stackTrace": [
    "new MysqlDialect (/var/task/node_modules/sequelize/lib/dialects/mysql/index.js:14:30)",
    "new Sequelize (/var/task/node_modules/sequelize/lib/sequelize.js:234:20)",
    "Object.exports.getSequelizeConnection (/var/task/src/twilio/twilio.js:858:20)",
    "Object.<anonymous> (/var/task/src/twilio/twilio.js:679:25)",
    "__webpack_require__ (/var/task/src/twilio/twilio.js:20:30)",
    "/var/task/src/twilio/twilio.js:63:18",
    "Object.<anonymous> (/var/task/src/twilio/twilio.js:66:10)",
    "Module._compile (module.js:570:32)",
    "Object.Module._extensions..js (module.js:579:10)",
    "Module.load (module.js:487:32)",
    "tryModuleLoad (module.js:446:12)",
    "Function.Module._load (module.js:438:3)",
    "Module.require (module.js:497:17)",
    "require (internal/module.js:20:19)"
  ]
}

Simple enough: I have to install mysql2. So I added it to my package.json file.

{
  "name": "test-api",
  "version": "1.0.0",
  "description": "",
  "main": "handler.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 0"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "devDependencies": {
    "aws-sdk": "^2.153.0",
    "babel-core": "^6.26.0",
    "babel-loader": "^7.1.2",
    "babel-plugin-transform-runtime": "^6.23.0",
    "babel-preset-es2015": "^6.24.1",
    "babel-preset-stage-3": "^6.24.1",
    "serverless-domain-manager": "^1.1.20",
    "serverless-dynamodb-autoscaling": "^0.6.2",
    "serverless-webpack": "^4.0.0",
    "webpack": "^3.8.1",
    "webpack-node-externals": "^1.6.0"
  },
  "dependencies": {
    "babel-runtime": "^6.26.0",
    "mailgun-js": "^0.13.1",
    "minimist": "^1.2.0",
    "mysql": "^2.15.0",
    "mysql2": "^1.5.1",
    "qs": "^6.5.1",
    "sequelize": "^4.31.2",
    "serverless": "^1.26.0",
    "serverless-plugin-scripts": "^1.0.2",
    "twilio": "^3.10.0",
    "uuid": "^3.1.0"
  }
}

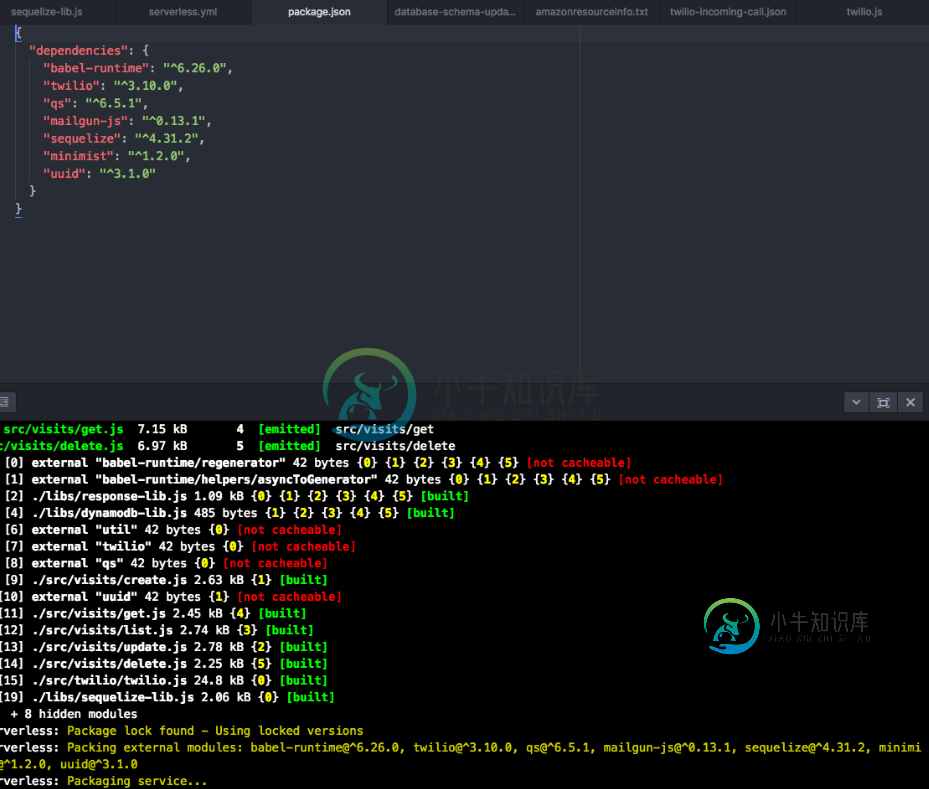

I noticed that when I deploy with sls, it seems to package only some of the modules:

Serverless: Package lock found - Using locked versions
Serverless: Packing external modules: babel-runtime@^6.26.0, twilio@^3.10.0, qs@^6.5.1, mailgun-js@^0.13.1, sequelize@^4.31.2, minimist@^1.2.0, uuid@^3.1.0
Serverless: Packaging service...
Serverless: Uploading CloudFormation file to S3...
Serverless: Uploading artifacts...
Serverless: Validating template...
Serverless: Updating Stack...
Serverless: Checking Stack update progress...
................................
Serverless: Stack update finished...
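Putting the packed list next to the dependencies in package.json makes the gap explicit. The sketch below is plain Node.js; the log line and dependency versions are copied from above, and the helper names packedModuleNames and missingFromPackage are made up for illustration.

```javascript
// Log line and production dependencies copied from the question above.
const packedLine =
  "Serverless: Packing external modules: babel-runtime@^6.26.0, twilio@^3.10.0, " +
  "qs@^6.5.1, mailgun-js@^0.13.1, sequelize@^4.31.2, minimist@^1.2.0, uuid@^3.1.0";

const dependencies = {
  "babel-runtime": "^6.26.0",
  "mailgun-js": "^0.13.1",
  minimist: "^1.2.0",
  mysql: "^2.15.0",
  mysql2: "^1.5.1",
  qs: "^6.5.1",
  sequelize: "^4.31.2",
  serverless: "^1.26.0",
  "serverless-plugin-scripts": "^1.0.2",
  twilio: "^3.10.0",
  uuid: "^3.1.0",
};

// Hypothetical helper: turn "name@version, name@version" into module names.
// lastIndexOf("@") keeps scoped names such as "@scope/pkg" intact.
function packedModuleNames(line) {
  const list = line.slice(line.indexOf("modules:") + "modules:".length);
  return list
    .split(",")
    .map((entry) => entry.trim())
    .filter((entry) => entry.length > 0)
    .map((entry) => entry.slice(0, entry.lastIndexOf("@")));
}

// Hypothetical helper: production dependencies absent from the packed list.
function missingFromPackage(line, deps) {
  const packed = new Set(packedModuleNames(line));
  return Object.keys(deps).filter((name) => !packed.has(name)).sort();
}

console.log(missingFromPackage(packedLine, dependencies));
// [ 'mysql', 'mysql2', 'serverless', 'serverless-plugin-scripts' ]
```

serverless and serverless-plugin-scripts are deployment tooling that the handlers never require, so leaving them out is expected; mysql2 is the one Sequelize's MySQL dialect loads at runtime.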

I think this is why it isn't working. In short: how do I get the mysql2 library packaged correctly by Serverless so that my Lambda function works with the Sequelize library?

Note that my code runs fine when I test it locally.

Below is my serverless.yml file:

service: testapi

# Use serverless-webpack plugin to transpile ES6/ES7
plugins:
  - serverless-webpack
  - serverless-plugin-scripts
#  - serverless-domain-manager

custom:
  #Define the Stage or default to Staging.
  stage: ${opt:stage, self:provider.stage}
  webpackIncludeModules: true
  #Define Databases Here
  databaseName: "${self:service}-${self:custom.stage}"
  #Define Bucket Names Here
  uploadBucket: "${self:service}-uploads-${self:custom.stage}"
  #Custom Script setup
  scripts:
    hooks:
      #Script below will run schema changes to the database as necessary and update according to stage.
      'deploy:finalize': node database-schema-update.js --stage ${self:custom.stage}
  #Domain Setup
  # customDomain:
  #   basePath: "/"
  #   domainName: "api-${self:custom.stage}.test.com"
  #   stage: "${self:custom.stage}"
  #   certificateName: "*.test.com"
  #   createRoute53Record: true

provider:
  name: aws
  runtime: nodejs6.10
  stage: staging
  region: us-east-1
  environment:
    DOMAIN_NAME: "api-${self:custom.stage}.test.com"
    DATABASE_NAME: ${self:custom.databaseName}
    DATABASE_USERNAME: ${env:RDS_USERNAME}
    DATABASE_PASSWORD: ${env:RDS_PASSWORD}
    UPLOAD_BUCKET: ${self:custom.uploadBucket}
    TWILIO_ACCOUNT_SID: ""
    TWILIO_AUTH_TOKEN: ""
    USER_POOL_ID: ""
    APP_CLIENT_ID: ""
    REGION: "us-east-1"
    IDENTITY_POOL_ID: ""
    RACKSPACE_API_KEY: ""
  #Below controls permissions for lambda functions.
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:DescribeTable
        - dynamodb:UpdateTable
        - dynamodb:Query
        - dynamodb:Scan
        - dynamodb:GetItem
        - dynamodb:PutItem
        - dynamodb:UpdateItem
        - dynamodb:DeleteItem
      Resource: "arn:aws:dynamodb:us-east-1:*:*"

functions:
  create_visit:
    handler: src/visits/create.main
    events:
      - http:
          path: visits
          method: post
          cors: true
          authorizer: aws_iam
  get_visit:
    handler: src/visits/get.main
    events:
      - http:
          path: visits/{id}
          method: get
          cors: true
          authorizer: aws_iam
  list_visit:
    handler: src/visits/list.main
    events:
      - http:
          path: visits
          method: get
          cors: true
          authorizer: aws_iam
  update_visit:
    handler: src/visits/update.main
    events:
      - http:
          path: visits/{id}
          method: put
          cors: true
          authorizer: aws_iam
  delete_visit:
    handler: src/visits/delete.main
    events:
      - http:
          path: visits/{id}
          method: delete
          cors: true
          authorizer: aws_iam
  twilio_send_text_message:
    handler: src/twilio/twilio.send_text_message
    events:
      - http:
          path: twilio/sendtextmessage
          method: post
          cors: true
          authorizer: aws_iam
  #This function handles incoming calls and where to route it to.
  twilio_incoming_call:
    handler: src/twilio/twilio.incoming_calls
    events:
      - http:
          path: twilio/calls
          method: post
  twilio_failure:
    handler: src/twilio/twilio.twilio_failure
    events:
      - http:
          path: twilio/failure
          method: post
  twilio_statuschange:
    handler: src/twilio/twilio.statuschange
    events:
      - http:
          path: twilio/statuschange
          method: post
  twilio_incoming_message:
    handler: src/twilio/twilio.incoming_message
    events:
      - http:
          path: twilio/messages
          method: post
  twilio_whisper:
    handler: src/twilio/twilio.whisper
    events:
      - http:
          path: twilio/whisper
          method: post
      - http:
          path: twilio/whisper
          method: get
  twilio_start_call:
    handler: src/twilio/twilio.start_call
    events:
      - http:
          path: twilio/startcall
          method: post
      - http:
          path: twilio/startcall
          method: get

resources:
  Resources:
    uploadBucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${self:custom.uploadBucket}
    RDSDatabase:
      Type: AWS::RDS::DBInstance
      Properties:
        Engine : mysql
        MasterUsername: ${env:RDS_USERNAME}
        MasterUserPassword: ${env:RDS_PASSWORD}
        DBInstanceClass : db.t2.micro
        AllocatedStorage: '5'
        PubliclyAccessible: true
        #TODO: The Value of Stage is also available as a TAG automatically which I may use to replace this manually being put here..
        Tags:
          -
            Key: "Name"
            Value: ${self:custom.databaseName}
      DeletionPolicy: Snapshot
    DNSRecordSet:
      Type: AWS::Route53::RecordSet
      Properties:
        HostedZoneName: test.com.
        Name: database-${self:custom.stage}.test.com
        Type: CNAME
        TTL: '300'
        ResourceRecords:
          - {"Fn::GetAtt": ["RDSDatabase","Endpoint.Address"]}
      DependsOn: RDSDatabase

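UPDATE: I confirmed that running sls package --stage dev seems to create this in the zip folder that is eventually uploaded to AWS. Does this confirm that, for some reason, Serverless is not creating the package correctly with the mysql2 reference? Why is that?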

Webpack config file, as requested:

const slsw = require("serverless-webpack");
const nodeExternals = require("webpack-node-externals");

module.exports = {
  entry: slsw.lib.entries,
  target: "node",
  // Since 'aws-sdk' is not compatible with webpack,
  // we exclude all node dependencies
  externals: [nodeExternals()],
  // Run babel on all .js files and skip those in node_modules
  module: {
    rules: [
      {
        test: /\.js$/,
        loader: "babel-loader",
        include: __dirname,
        exclude: /node_modules/
      }
    ]
  }
};
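With nodeExternals(), webpack leaves everything in node_modules unbundled, and serverless-webpack then packages the external modules that the bundled handler code references (as I understand it, by running npm install for them, which also pulls in their own declared dependencies). The handler requires sequelize by name, so sequelize gets packed; mysql2 is not a declared dependency of sequelize and is only loaded by Sequelize at runtime, so nothing points the plugin at it. The toy scanner below is a regex, far cruder than webpack's real parser, and every name in it is hypothetical; it only shows why string-literal requires can be discovered statically while computed ones cannot.

```javascript
// Toy illustration, NOT webpack's parser: collect require() calls whose
// argument is a string literal. A require() whose argument is computed at
// runtime is invisible to this kind of static scan.
function staticRequires(source) {
  const names = [];
  // Matches require("x") or require('x') with a literal module name.
  const pattern = /\brequire\(\s*(["'])([^"']+)\1\s*\)/g;
  let match;
  while ((match = pattern.exec(source)) !== null) {
    names.push(match[2]);
  }
  return names;
}

// Hypothetical handler code: it names its dependencies literally.
const handlerSource = `
  const Sequelize = require("sequelize");
  const twilio = require('twilio');
`;

// Hypothetical library code (simplified): the driver name is computed.
const librarySource = `
  const driverName = dialect === "mysql" ? "mysql2" : dialect;
  const lib = require(driverName);
`;

console.log(staticRequires(handlerSource)); // [ 'sequelize', 'twilio' ]
console.log(staticRequires(librarySource)); // []
```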

2 Answers

None of the previous methods helped me, so I used the following solution: https://github.com/sequelize/sequelize/issues/9489#issuecomment-493304014

The trick is to use the dialectModule property to override the driver module Sequelize uses.

import Sequelize from 'sequelize';
import mysql2 from 'mysql2'; // Needed to fix sequelize issues with WebPack

const sequelize = new Sequelize(
  process.env.DB_NAME,
  process.env.DB_USER,
  process.env.DB_PASSWORD,
  {
    dialect: 'mysql',
    dialectModule: mysql2, // Needed to fix sequelize issues with WebPack
    host: process.env.DB_HOST,
    port: process.env.DB_PORT
  }
)

export async function connectToDatabase() {
  console.log('Trying to connect via sequelize')
  await sequelize.sync()
  await sequelize.authenticate()
  console.log('=> Created a new connection.')
  // Do something
}
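Why does passing dialectModule help? The answer's own import of mysql2 is a static, literal import, so webpack and serverless-webpack see mysql2 as an external and pack it; Sequelize then uses the module object it is handed instead of requiring a driver by name. Below is a simplified sketch of that lookup order. The function name loadDialectModule and the exact precedence (dialectModule, then dialectModulePath, then the default driver name) are assumptions modeled loosely on Sequelize's connection managers, not its actual source; it does reproduce the same "Please install ... package manually" message seen in the question.

```javascript
// Hypothetical, simplified driver lookup (not Sequelize's real code).
function loadDialectModule(config, defaultName, requireFn = require) {
  // 1. A module object passed in directly wins; no runtime require() happens.
  if (config.dialectModule) {
    return config.dialectModule;
  }
  // 2. Otherwise the driver is required by a name chosen at runtime,
  //    which a bundler cannot see ahead of time.
  const name = config.dialectModulePath || defaultName;
  try {
    return requireFn(name);
  } catch (err) {
    if (err.code === "MODULE_NOT_FOUND") {
      throw new Error(`Please install ${name} package manually`);
    }
    throw err;
  }
}

// Usage: an injected object is returned as-is...
const fakeDriver = { createConnection() {} };
console.log(loadDialectModule({ dialectModule: fakeDriver }, "mysql2") === fakeDriver); // true

// ...while a missing package yields the error from the question.
try {
  loadDialectModule({}, "no-such-driver-xyz");
} catch (err) {
  console.log(err.message); // Please install no-such-driver-xyz package manually
}
```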

The previous approach has worked with MySQL so far, but not with Postgres.

Thanks to dashmugs' comment, I investigated this page (https://github.com/serverless-heaven/serverless-webpack), which has a section about forced inclusion. I'll paraphrase it here.

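Forced inclusion: Sometimes you might use dynamic requires in your code, i.e. you require modules that are only known at runtime. Webpack is not able to detect such externals, and the compiled package will be missing the needed dependencies. In such cases you can force the plugin to include certain modules by listing them in the forceInclude array property. However, the module must be present in your service's production dependencies in package.json.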

# serverless.yml
custom:
  webpackIncludeModules:
    forceInclude:
      - module1
      - module2
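A toy model of the rule just quoted, assuming the packed set is simply the externals webpack detected plus the forceInclude list. The function name modulesToPackage is hypothetical, and throwing an error for a forced module that is not a production dependency is an assumption made for illustration; the real plugin may report this differently.

```javascript
// Hypothetical model of forceInclude (not serverless-webpack's code).
function modulesToPackage(detectedExternals, forceInclude, pkg) {
  const prodDeps = pkg.dependencies || {};
  for (const name of forceInclude) {
    // Forced modules must be production dependencies, per the docs quoted above.
    if (!Object.prototype.hasOwnProperty.call(prodDeps, name)) {
      throw new Error(`${name} must be listed in package.json "dependencies"`);
    }
  }
  // Union of detected and forced modules, preserving first-seen order.
  return [...new Set([...detectedExternals, ...forceInclude])];
}

const pkg = {
  dependencies: { sequelize: "^4.31.2", mysql: "^2.15.0", mysql2: "^1.5.1" },
  devDependencies: { webpack: "^3.8.1" },
};

console.log(modulesToPackage(["sequelize"], ["mysql", "mysql2"], pkg));
// [ 'sequelize', 'mysql', 'mysql2' ]
```

Because mysql and mysql2 are already under dependencies in the question's package.json, forcing them in simply adds both to what gets packed alongside sequelize.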

So I did exactly that:

webpackIncludeModules:
  forceInclude:
    - mysql
    - mysql2

Now it works! Hopefully this helps anyone else with the same problem.
