Re-encoding an H.264 video to another video using OpenGL surfaces is very slow on my Android device

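I am developing a feature that converts one video into another, applying additional effects to every frame. I decided to use OpenGL ES to apply the effects to each frame. My input and output videos are MP4 files using the H.264 codec. I use the MediaCodec API (Android API 18) to decode H.264 into an OpenGL texture, and then draw to a surface using that texture with my shader. I assumed that using MediaCodec with H.264 would give me hardware decoding on Android and would be fast, but that does not seem to be the case: re-encoding a small 432x240, 15-second video took 28 seconds in total!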

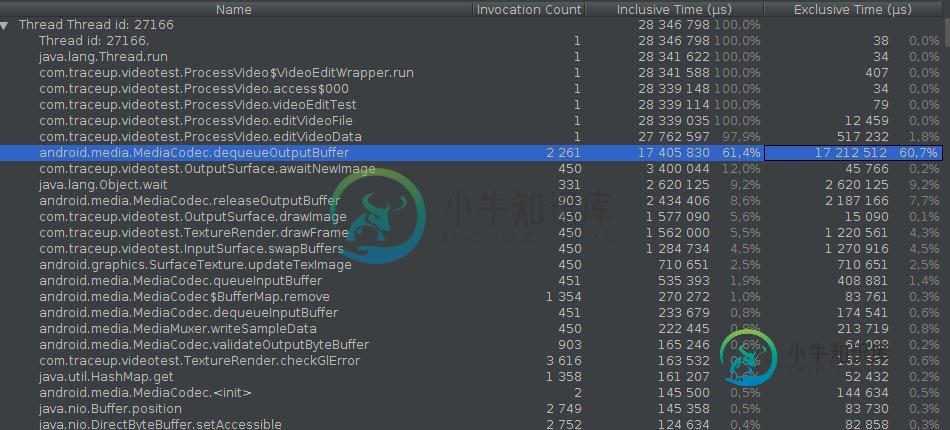

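Please take a look at my code and the profiling information above and share any advice. If I am doing something wrong, please point it out.

My code: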

    private void editVideoFile()
    {
        if (VERBOSE)
        {
            Log.d(TAG, "editVideoFile " + mWidth + "x" + mHeight);
        }
        MediaCodec decoder = null;
        MediaCodec encoder = null;
        InputSurface inputSurface = null;
        OutputSurface outputSurface = null;
        try
        {
            File inputFile = new File(FILES_DIR, INPUT_FILE); // must be an absolute path
            // The MediaExtractor error messages aren't very useful. Check to see if the input
            // file exists so we can throw a better one if it's not there.
            if (!inputFile.canRead())
            {
                throw new FileNotFoundException("Unable to read " + inputFile);
            }
            extractor = new MediaExtractor();
            extractor.setDataSource(inputFile.toString());
            int trackIndex = inVideoTrackIndex = selectTrack(extractor);
            if (trackIndex < 0)
            {
                throw new RuntimeException("No video track found in " + inputFile);
            }
            extractor.selectTrack(trackIndex);
            MediaFormat inputFormat = extractor.getTrackFormat(trackIndex);
            mWidth = inputFormat.getInteger(MediaFormat.KEY_WIDTH);
            mHeight = inputFormat.getInteger(MediaFormat.KEY_HEIGHT);
            if (VERBOSE)
            {
                Log.d(TAG, "Video size is " + mWidth + "x" + mHeight);
            }
            // Create an encoder format that matches the input format. (Might be able to just
            // re-use the format used to generate the video, since we want it to be the same.)
            MediaFormat outputFormat = MediaFormat.createVideoFormat(MIME_TYPE, mWidth, mHeight);
            outputFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT,
                    MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);
            outputFormat.setInteger(MediaFormat.KEY_BIT_RATE,
                    getFormatValue(inputFormat, MediaFormat.KEY_BIT_RATE, BIT_RATE));
            outputFormat.setInteger(MediaFormat.KEY_FRAME_RATE,
                    getFormatValue(inputFormat, MediaFormat.KEY_FRAME_RATE, FRAME_RATE));
            outputFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL,
                    getFormatValue(inputFormat, MediaFormat.KEY_I_FRAME_INTERVAL, IFRAME_INTERVAL));
            try
            {
                encoder = MediaCodec.createEncoderByType(MIME_TYPE);
            }
            catch (IOException iex)
            {
                throw new RuntimeException(iex);
            }
            encoder.configure(outputFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            inputSurface = new InputSurface(encoder.createInputSurface());
            inputSurface.makeCurrent();
            encoder.start();
            // Output filename. Ideally this would use Context.getFilesDir() rather than a
            // hard-coded output directory.
            String outputPath = new File(OUTPUT_DIR,
                    "transformed-" + mWidth + "x" + mHeight + ".mp4").toString();
            Log.d(TAG, "output file is " + outputPath);
            // Create a MediaMuxer. We can't add the video track and start() the muxer here,
            // because our MediaFormat doesn't have the Magic Goodies. These can only be
            // obtained from the encoder after it has started processing data.
            //
            // We're not actually interested in multiplexing audio. We just want to convert
            // the raw H.264 elementary stream we get from MediaCodec into a .mp4 file.
            try
            {
                mMuxer = new MediaMuxer(outputPath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
            }
            catch (IOException ioe)
            {
                throw new RuntimeException("MediaMuxer creation failed", ioe);
            }
            mTrackIndex = -1;
            mMuxerStarted = false;
            // OutputSurface uses the EGL context created by InputSurface.
            try
            {
                decoder = MediaCodec.createDecoderByType(MIME_TYPE);
            }
            catch (IOException iex)
            {
                throw new RuntimeException(iex);
            }
            outputSurface = new OutputSurface();
            outputSurface.changeFragmentShader(FRAGMENT_SHADER);
            decoder.configure(inputFormat, outputSurface.getSurface(), null, 0);
            decoder.start();
            editVideoData(decoder, outputSurface, inputSurface, encoder);
        }
        catch (Exception ex)
        {
            Log.e(TAG, "Error processing", ex);
            throw new RuntimeException(ex);
        }
        finally
        {
            if (VERBOSE)
            {
                Log.d(TAG, "shutting down encoder, decoder");
            }
            if (outputSurface != null)
            {
                outputSurface.release();
            }
            if (inputSurface != null)
            {
                inputSurface.release();
            }
            if (encoder != null)
            {
                encoder.stop();
                encoder.release();
            }
            if (decoder != null)
            {
                decoder.stop();
                decoder.release();
            }
            if (mMuxer != null)
            {
                mMuxer.stop();
                mMuxer.release();
                mMuxer = null;
            }
        }
    }

    /**
     * Selects the video track, if any.
     *
     * @return the track index, or -1 if no video track is found.
     */
    private int selectTrack(MediaExtractor extractor)
    {
        // Select the first video track we find, ignore the rest.
        int numTracks = extractor.getTrackCount();
        for (int i = 0; i < numTracks; i++)
        {
            MediaFormat format = extractor.getTrackFormat(i);
            String mime = format.getString(MediaFormat.KEY_MIME);
            if (mime.startsWith("video/"))
            {
                if (VERBOSE)
                {
                    Log.d(TAG, "Extractor selected track " + i + " (" + mime + "): " + format);
                }
                return i;
            }
        }
        return -1;
    }

    /**
     * Edits a stream of video data.
     */
    private void editVideoData(MediaCodec decoder,
            OutputSurface outputSurface, InputSurface inputSurface, MediaCodec encoder)
    {
        final int TIMEOUT_USEC = 10000;
        ByteBuffer[] decoderInputBuffers = decoder.getInputBuffers();
        ByteBuffer[] encoderOutputBuffers = encoder.getOutputBuffers();
        MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
        int inputChunk = 0;
        boolean outputDone = false;
        boolean inputDone = false;
        boolean decoderDone = false;
        while (!outputDone)
        {
            if (VERBOSE)
            {
                Log.d(TAG, "edit loop");
            }
            // Feed more data to the decoder.
            if (!inputDone)
            {
                int inputBufIndex = decoder.dequeueInputBuffer(TIMEOUT_USEC);
                if (inputBufIndex >= 0)
                {
                    ByteBuffer inputBuf = decoderInputBuffers[inputBufIndex];
                    // Read the sample data into the ByteBuffer. This neither respects nor
                    // updates inputBuf's position, limit, etc.
                    int chunkSize = extractor.readSampleData(inputBuf, 0);
                    if (chunkSize < 0)
                    {
                        // End of stream -- send empty frame with EOS flag set.
                        decoder.queueInputBuffer(inputBufIndex, 0, 0, 0L,
                                MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                        inputDone = true;
                        if (VERBOSE)
                        {
                            Log.d(TAG, "sent input EOS");
                        }
                    }
                    else
                    {
                        if (extractor.getSampleTrackIndex() != inVideoTrackIndex)
                        {
                            Log.w(TAG, "WEIRD: got sample from track " +
                                    extractor.getSampleTrackIndex() + ", expected " + inVideoTrackIndex);
                        }
                        long presentationTimeUs = extractor.getSampleTime();
                        decoder.queueInputBuffer(inputBufIndex, 0, chunkSize,
                                presentationTimeUs, 0 /*flags*/);
                        if (VERBOSE)
                        {
                            Log.d(TAG, "submitted frame " + inputChunk + " to dec, size=" +
                                    chunkSize);
                        }
                        inputChunk++;
                        extractor.advance();
                    }
                }
                else
                {
                    if (VERBOSE)
                    {
                        Log.d(TAG, "input buffer not available");
                    }
                }
            }
            // Assume output is available. Loop until both assumptions are false.
            boolean decoderOutputAvailable = !decoderDone;
            boolean encoderOutputAvailable = true;
            while (decoderOutputAvailable || encoderOutputAvailable)
            {
                // Start by draining any pending output from the encoder. It's important to
                // do this before we try to stuff any more data in.
                int encoderStatus = encoder.dequeueOutputBuffer(info, TIMEOUT_USEC);
                if (encoderStatus == MediaCodec.INFO_TRY_AGAIN_LATER)
                {
                    // no output available yet
                    if (VERBOSE)
                    {
                        Log.d(TAG, "no output from encoder available");
                    }
                    encoderOutputAvailable = false;
                }
                else if (encoderStatus == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED)
                {
                    encoderOutputBuffers = encoder.getOutputBuffers();
                    if (VERBOSE)
                    {
                        Log.d(TAG, "encoder output buffers changed");
                    }
                }
                else if (encoderStatus == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED)
                {
                    if (mMuxerStarted)
                    {
                        throw new RuntimeException("format changed twice");
                    }
                    MediaFormat newFormat = encoder.getOutputFormat();
                    Log.d(TAG, "encoder output format changed: " + newFormat);
                    // now that we have the Magic Goodies, start the muxer
                    mTrackIndex = mMuxer.addTrack(newFormat);
                    mMuxer.start();
                    mMuxerStarted = true;
                }
                else if (encoderStatus < 0)
                {
                    throw new RuntimeException("unexpected result from encoder.dequeueOutputBuffer: " + encoderStatus);
                }
                else
                { // encoderStatus >= 0
                    ByteBuffer encodedData = encoderOutputBuffers[encoderStatus];
                    if (encodedData == null)
                    {
                        throw new RuntimeException("encoderOutputBuffer " + encoderStatus + " was null");
                    }
                    if ((info.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0)
                    {
                        // The codec config data was pulled out and fed to the muxer when we got
                        // the INFO_OUTPUT_FORMAT_CHANGED status. Ignore it.
                        if (VERBOSE)
                        {
                            Log.d(TAG, "ignoring BUFFER_FLAG_CODEC_CONFIG");
                        }
                        info.size = 0;
                    }
                    // Write the data to the output "file".
                    if (info.size != 0)
                    {
                        if (!mMuxerStarted)
                        {
                            throw new RuntimeException("muxer hasn't started");
                        }
                        // adjust the ByteBuffer values to match BufferInfo (not needed?)
                        encodedData.position(info.offset);
                        encodedData.limit(info.offset + info.size);
                        mMuxer.writeSampleData(mTrackIndex, encodedData, info);
                        if (VERBOSE)
                        {
                            Log.d(TAG, "sent " + info.size + " bytes to muxer");
                        }
                    }
                    outputDone = (info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0;
                    encoder.releaseOutputBuffer(encoderStatus, false);
                }
                if (encoderStatus != MediaCodec.INFO_TRY_AGAIN_LATER)
                {
                    // Continue attempts to drain output.
                    continue;
                }
                // Encoder is drained, check to see if we've got a new frame of output from
                // the decoder. (The output is going to a Surface, rather than a ByteBuffer,
                // but we still get information through BufferInfo.)
                if (!decoderDone)
                {
                    int decoderStatus = decoder.dequeueOutputBuffer(info, TIMEOUT_USEC);
                    if (decoderStatus == MediaCodec.INFO_TRY_AGAIN_LATER)
                    {
                        // no output available yet
                        if (VERBOSE)
                        {
                            Log.d(TAG, "no output from decoder available");
                        }
                        decoderOutputAvailable = false;
                    }
                    else if (decoderStatus == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED)
                    {
                        //decoderOutputBuffers = decoder.getOutputBuffers();
                        if (VERBOSE)
                        {
                            Log.d(TAG, "decoder output buffers changed (we don't care)");
                        }
                    }
                    else if (decoderStatus == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED)
                    {
                        // expected before first buffer of data
                        MediaFormat newFormat = decoder.getOutputFormat();
                        if (VERBOSE)
                        {
                            Log.d(TAG, "decoder output format changed: " + newFormat);
                        }
                    }
                    else if (decoderStatus < 0)
                    {
                        throw new RuntimeException("unexpected result from decoder.dequeueOutputBuffer: " + decoderStatus);
                    }
                    else
                    { // decoderStatus >= 0
                        if (VERBOSE)
                        {
                            Log.d(TAG, "surface decoder given buffer "
                                    + decoderStatus + " (size=" + info.size + ")");
                        }
                        // The ByteBuffers are null references, but we still get a nonzero
                        // size for the decoded data.
                        boolean doRender = (info.size != 0);
                        // As soon as we call releaseOutputBuffer, the buffer will be forwarded
                        // to SurfaceTexture to convert to a texture. The API doesn't
                        // guarantee that the texture will be available before the call
                        // returns, so we need to wait for the onFrameAvailable callback to
                        // fire. If we don't wait, we risk rendering from the previous frame.
                        decoder.releaseOutputBuffer(decoderStatus, doRender);
                        if (doRender)
                        {
                            // This waits for the image and renders it after it arrives.
                            if (VERBOSE)
                            {
                                Log.d(TAG, "awaiting frame");
                            }
                            outputSurface.awaitNewImage();
                            outputSurface.drawImage();
                            // Send it to the encoder.
                            inputSurface.setPresentationTime(info.presentationTimeUs * 1000);
                            if (VERBOSE)
                            {
                                Log.d(TAG, "swapBuffers");
                            }
                            inputSurface.swapBuffers();
                        }
                        if ((info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0)
                        {
                            // forward decoder EOS to encoder
                            if (VERBOSE)
                            {
                                Log.d(TAG, "signaling input EOS");
                            }
                            if (WORK_AROUND_BUGS)
                            {
                                // Bail early, possibly dropping a frame.
                                return;
                            }
                            else
                            {
                                encoder.signalEndOfInputStream();
                            }
                        }
                    }
                }
            }
        }
    }

Tested on a Samsung Galaxy Note 3 Intl (Qualcomm).

1 answer

Your problem probably lies in how you wait synchronously for events, on a single thread, with a non-zero timeout.

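If you lower the timeout, you may get better throughput. Most hardware codecs have a fair amount of latency; you can get good total throughput, but don't expect to get the result (an encoded or decoded frame) immediately.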

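Ideally, you would check all inputs and outputs of both the encoder and the decoder with a zero timeout, and only if no buffer is free at any of those points would you wait with a non-zero timeout on either the encoder output or the decoder output.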
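The polling strategy described above can be sketched in plain Java, independent of Android. This is only an illustration under stated assumptions: the class `NonBlockingPump`, the method `pumpOnce`, and the idea of wrapping each dequeue call in a `LongPredicate` are hypothetical names introduced here, not part of the MediaCodec API or of the question's code. Each step receives a timeout in microseconds and reports whether it did any work.

```java
import java.util.List;
import java.util.function.LongPredicate;

/**
 * Sketch of the "poll everything with timeout 0, block only when idle" idea.
 * All names here are hypothetical; the steps stand in for the dequeue calls.
 */
final class NonBlockingPump {

    private NonBlockingPump() {
    }

    /**
     * Runs one pass over the pipeline.
     *
     * @param steps        each step takes a timeout in microseconds and
     *                     returns true if it made progress
     * @param blockingStep the step to wait on when no step was ready
     * @param waitUs       timeout used only for that fallback wait
     * @return true if anything made progress during this pass
     */
    static boolean pumpOnce(List<LongPredicate> steps,
                            LongPredicate blockingStep,
                            long waitUs) {
        boolean progressed = false;
        for (LongPredicate step : steps) {
            // Timeout 0: the step must return immediately if nothing is ready.
            if (step.test(0L)) {
                progressed = true;
            }
        }
        if (!progressed) {
            // Nothing was ready anywhere, so blocking on one output is cheap.
            progressed = blockingStep.test(waitUs);
        }
        return progressed;
    }
}
```

In the loop from the question, the steps would presumably wrap `decoder.dequeueInputBuffer(timeoutUs)`, `encoder.dequeueOutputBuffer(info, timeoutUs)` and `decoder.dequeueOutputBuffer(info, timeoutUs)`, returning true whenever the call yields something other than `INFO_TRY_AGAIN_LATER`. The fallback wait would be one of the two output dequeues, so the thread only sleeps when the whole pipeline is idle.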

All of this is easier if you can target Android 5.0 and use MediaCodec in asynchronous mode. See e.g. https://github.com/mstorsjo/android-decodeencodetest for an example of how to do this. Also see https://stackoverflow.com/a/35885471/3115956 for a longer discussion of this issue.
