Spark 2.2查询配置单元表时dataframe NumberFormatException上的Thrift服务器错误

我有运行Spark2(V2.2)的Hortonworks HDP 2.6.3。我的测试用例非常简单:

>

使用一些随机值创建配置单元表。端口10000上的配置单元

在10016打开Spark Thrift服务器

-

null

beeline> !connect jdbc:hive2://localhost:10000/default hive hive

create table test1 (id int, desc varchar(40));

insert into table test1 values (1,"aa"),(2,"bb");

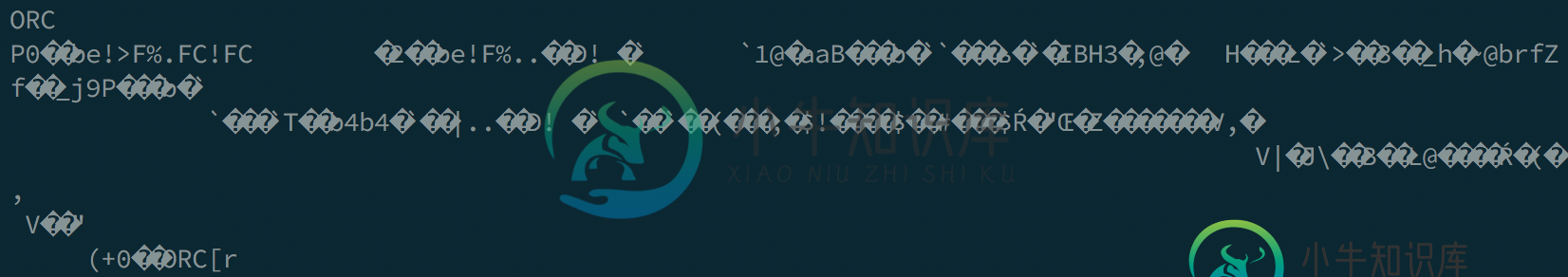

我有一个错误:

我怀疑它与id列有关,所以我更改为:df.select(“desc”).show()

然后我得到了这个奇怪的结果:

+----+

|desc|

+----+

|desc|

|desc|

+----+

beeline> !connect jdbc:hive2://localhost:10016/default hive hive

select * from test1;

+-----+-------+--+

| id | desc |

+-----+-------+--+

| 1 | aa |

| 2 | bb |

+-----+-------+--+

create table test4 (id String, desc String);

insert into table test4 values ("1","aa"),("2","bb");

select * from test4;

>>> df = sqlContext.read.format("jdbc").options(driver="org.apache.hive.jdbc.HiveDriver", url="jdbc:hive2://localhost:10016/default", dbtable="test4",user="hive", password="hive").option("fetchsize", "10").load()

>>> df.select("*").show()

+---+----+

| id|desc|

+---+----+

| id|desc|

| id|desc|

+---+----+

由于某种原因,它返回了列名?!

案例2

直线:

create table test5 (id int, desc varchar(40)) STORED AS ORC;

insert into table test5 values (1,"aa"),(2,"bb");

select * from test5;

beeline> !connect jdbc:hive2://localhost:10000/default hive hive

Connecting to jdbc:hive2://localhost:10000/default

Connected to: Apache Hive (version 1.2.1000.2.5.3.0-37)

Driver: Hive JDBC (version 1.2.1000.2.5.3.0-37)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://localhost:10000/default> create table test2 (id String, desc String) STORED AS ORC;

No rows affected (0.3 seconds)

0: jdbc:hive2://localhost:10000/default> insert into table test2 values ("1","aa"),("2","bb");

INFO : Session is already open

INFO : Dag name: insert into table tes..."1","aa"),("2","bb")(Stage-1)

INFO : Tez session was closed. Reopening...

INFO : Session re-established.

INFO :

INFO : Status: Running (Executing on YARN cluster with App id application_1514019042819_0006)

INFO : Map 1: -/-

INFO : Map 1: 0/1

INFO : Map 1: 0(+1)/1

INFO : Map 1: 1/1

INFO : Loading data to table default.test2 from webhdfs://demo.myapp.local:40070/apps/hive/warehouse/test2/.hive-staging_hive_2017-12-23_04-29-54_569_601147868480753216-3/-ext-10000

INFO : Table default.test2 stats: [numFiles=1, numRows=2, totalSize=317, rawDataSize=342]

No rows affected (15.414 seconds)

0: jdbc:hive2://localhost:10000/default> select * from table2;

Error: Error while compiling statement: FAILED: SemanticException [Error 10001]: Line 1:14 Table not found 'table2' (state=42S02,code=10001)

0: jdbc:hive2://localhost:10000/default> select * from test2;

+-----------+-------------+--+

| test2.id | test2.desc |

+-----------+-------------+--+

| 1 | aa |

| 2 | bb |

+-----------+-------------+--+

2 rows selected (0.364 seconds)

beeline> !connect jdbc:hive2://localhost:10016/default hive hive

Connecting to jdbc:hive2://localhost:10016/default

1: jdbc:hive2://localhost:10016/default> create table test3 (id String, desc String) STORED AS ORC;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.234 seconds)

1: jdbc:hive2://localhost:10016/default> insert into table test3 values ("1","aa"),("2","bb");

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (9.111 seconds)

1: jdbc:hive2://localhost:10016/default> select * from test3;

+-----+-------+--+

| id | desc |

+-----+-------+--+

| 1 | aa |

| 2 | bb |

+-----+-------+--+

2 rows selected (3.387 seconds)

>>> df = sqlContext.read.format("jdbc").options(driver="org.apache.hive.jdbc.HiveDriver", url="jdbc:hive2://localhost:10016/default", dbtable="test3",user="hive", password="hive").load()

>>> df.select("*").show()

+---+----+

| id|desc|

+---+----+

+---+----+

# Detailed Table Information | CatalogTable(

Table: `default`.`test3`

Owner: hive

Created: Sat Dec 23 04:37:14 PST 2017

Last Access: Wed Dec 31 16:00:00 PST 1969

Type: MANAGED

Schema: [`id` string, `desc` string]

Properties: [totalSize=620, numFiles=2, transient_lastDdlTime=1514032656, STATS_GENERATED_VIA_STATS_TASK=true]

Storage(Location: webhdfs://demo.myapp.local:40070/apps/hive/warehouse/test3, InputFormat: org.apache.hadoop.hive.ql.io.orc.OrcInputFormat, OutputFormat: org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat, Serde: org.apache.hadoop.hive.ql.io.orc.OrcSerde, Properties: [serialization.format=1]))

显示CREATE TABLE TEST3;

CREATE TABLE `test3`(`id` string, `desc` string)

ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.orc.OrcSerde'

WITH SERDEPROPERTIES (

'serialization.format' = '1'

)

STORED AS

INPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'

TBLPROPERTIES (

'totalSize' = '620',

'numFiles' = '2',

'transient_lastDdlTime' = '1514032656',

'STATS_GENERATED_VIA_STATS_TASK' = 'true'

)

su-spark-c“hdfs dfs-cat webhdfs://demo.myapp.local:40070/apps/hive/warehouse/test3/part-00000”

共有1个答案

即使您使用特定的数据类型创建配置单元表,插入时表中的基础数据还是以字符串格式存储。

所以当spark试图读取数据时,它会使用转移子来查找数据类型。它在hive metaxore中以int形式出现,在文件中以string形式出现,并抛出cast异常。

解决方案

create table test1 (id String, desc String);

create table test1 (id int, desc varchar(40) STORED AS ORC);

-

我正在使用twitter cloudera示例创建一个表,虽然我已经成功地创建了表并获得了数据,但我遇到了一个问题。 我可以执行并返回数据,但当我进行更深入的操作(如)时,我会收到一个错误。 以下是错误和堆栈跟踪: hive>从tweets中选择计数(*);MapReduce作业总数=1启动作业1编译时确定的1个reduce任务中的1个:1为了更改还原器的平均负载(以字节为单位):set hive

-

当我运行以下配置单元命令时 hive-e‘选择msg,将(*)从表中计数为cnt,其中像“%abcd%”这样的msg按msg排序按cnt desc;’sed的/[\t]/,/g'>table.csv 失败:ParseException第1:89行无法识别表达式规范中“like”“%”“password”附近的输入 我知道在指定字符串“%abcd%”时有问题。该命令在配置单元环境中工作正常,但这里我

-

我正试图将数据从Oracle加载到Hive作为parquet。每次我将带有日期/时间戳列的表加载到hive时,它都会自动将这些列转换为BIGINT。可以使用sqoop将时间戳/日期格式加载到hive中,并作为一个parquet文件吗? 已经尝试过首先在hive中创建表,然后使用impala将数据加载到parquet文件中。< br >仍然失败,出现错误 "文件XX的第XX列有不兼容的拼花模式:时间

-

我在执行配置单元查询时遇到异常。我关注以下链接:http://www.thecloudavenue.com/2013/03/analysis-tweets-using-flume-hadoop-and.html 终端数据在这里:

-

我是Spark和Scala的新手;) 代码摘要: 从CSV文件读取数据-- 你能帮我找出哪里出了问题吗。代码并不复杂。该作业在集群上执行良好。所以,当我试图可视化写在配置单元表上的数据时,我面临着一个问题。 蜂箱 失败与异常java.io.IOException:java.io.IOException:hdfs://m01.itversity.com:9000/user/itv000666/war