Hadoop + Spark: There are 1 datanode(s) running and 1 node(s) are excluded in this operation

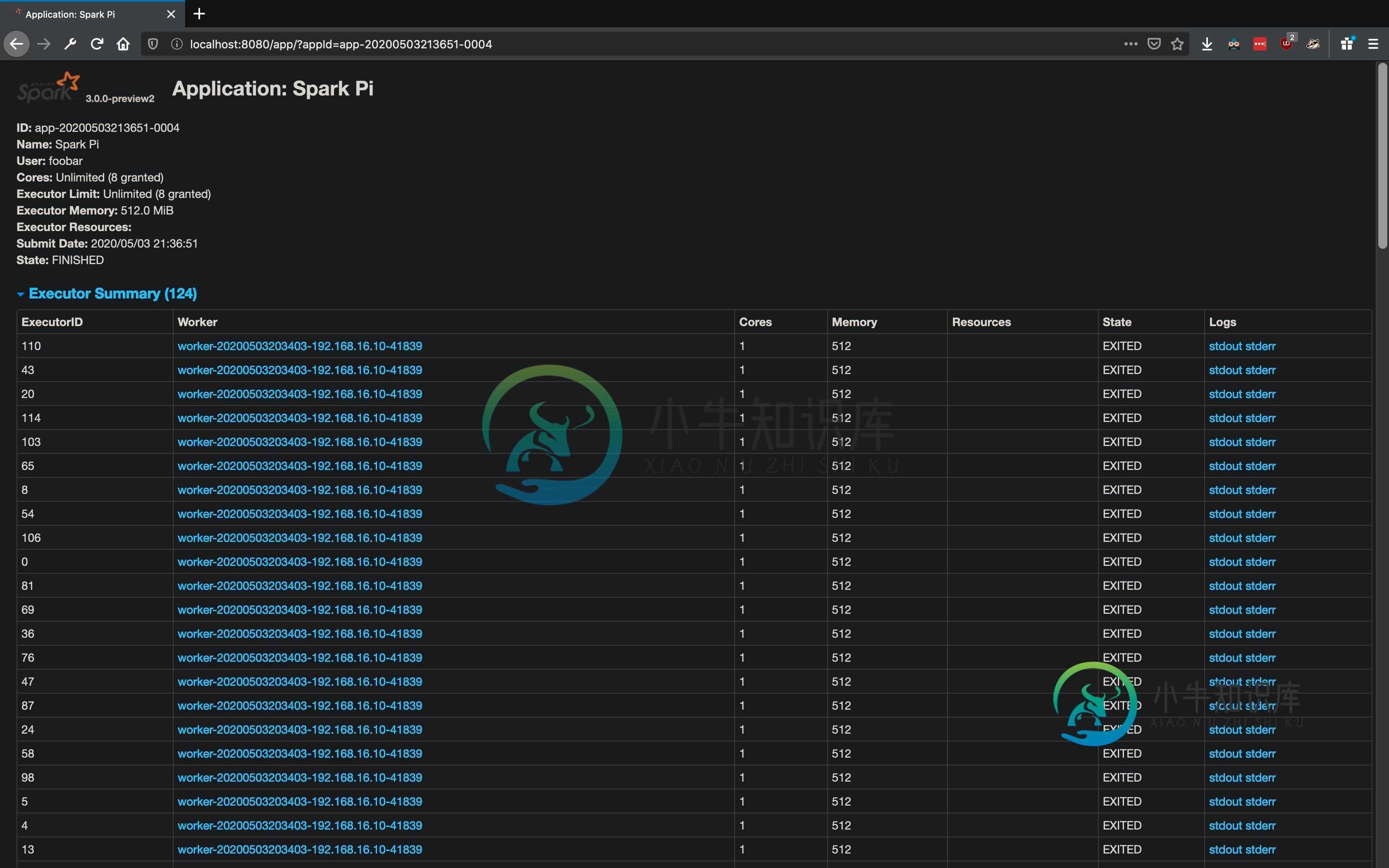

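I am currently experimenting with Apache Spark. All the components (HDFS, Spark, YARN, etc.) are running fine, and none of them appears to report any errors during startup. I am running everything in a Vagrant VM, and Spark, HDFS, and YARN are dockerized.

tl;dr: Submitting a job via YARN fails with "There are 1 datanode(s) running and 1 node(s) are excluded in this operation".

I submit my application with: $ spark-submit --master yarn --class org.apache.spark.examples.SparkPi --driver-memory 512M --executor-memory 512M --executor-cores 1 /Users/foobar/Downloads/spark-3.0.0-preview2-bin-hadoop3.2/examples/jars/spark-examples_2.12-3.0.0-preview2.jar 10

This produces the following output: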

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

20/05/03 17:45:26 INFO SparkContext: Running Spark version 2.4.5

20/05/03 17:45:26 INFO SparkContext: Submitted application: Spark Pi

20/05/03 17:45:26 INFO SecurityManager: Changing view acls to: foobar

20/05/03 17:45:26 INFO SecurityManager: Changing modify acls to: foobar

20/05/03 17:45:26 INFO SecurityManager: Changing view acls groups to:

20/05/03 17:45:26 INFO SecurityManager: Changing modify acls groups to:

20/05/03 17:45:26 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(foobar); groups with view permissions: Set(); users with modify permissions: Set(foobar); groups with modify permissions: Set()

20/05/03 17:45:26 INFO Utils: Successfully started service 'sparkDriver' on port 52142.

20/05/03 17:45:26 INFO SparkEnv: Registering MapOutputTracker

20/05/03 17:45:27 INFO SparkEnv: Registering BlockManagerMaster

20/05/03 17:45:27 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

20/05/03 17:45:27 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

20/05/03 17:45:27 INFO DiskBlockManager: Created local directory at /private/var/folders/1x/h0q3vtw17ddbys9bjcf41mtr0000gn/T/blockmgr-1a34b35e-d5c2-4c11-a637-364f86818b1a

20/05/03 17:45:27 INFO MemoryStore: MemoryStore started with capacity 93.3 MB

20/05/03 17:45:27 INFO SparkEnv: Registering OutputCommitCoordinator

20/05/03 17:45:27 INFO Utils: Successfully started service 'SparkUI' on port 4040.

20/05/03 17:45:27 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://foobars-mbp.box:4040

20/05/03 17:45:27 INFO SparkContext: Added JAR file:/Users/foobar/Downloads/spark-3.0.0-preview2-bin-hadoop3.2/examples/jars/spark-examples_2.12-3.0.0-preview2.jar at spark://foobars-mbp.box:52142/jars/spark-examples_2.12-3.0.0-preview2.jar with timestamp 1588545927208

20/05/03 17:45:27 INFO RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

20/05/03 17:45:27 INFO Client: Requesting a new application from cluster with 1 NodeManagers

20/05/03 17:45:27 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

20/05/03 17:45:27 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

20/05/03 17:45:27 INFO Client: Setting up container launch context for our AM

20/05/03 17:45:27 INFO Client: Setting up the launch environment for our AM container

20/05/03 17:45:27 INFO Client: Preparing resources for our AM container

20/05/03 17:45:27 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

20/05/03 17:45:29 INFO Client: Uploading resource file:/private/var/folders/1x/h0q3vtw17ddbys9bjcf41mtr0000gn/T/spark-5467a437-f3e2-4c23-9a15-9051aa89e222/__spark_libs__8443981124167043301.zip -> hdfs://0.0.0.0:9000/user/foobar/.sparkStaging/application_1588537985407_0007/__spark_libs__8443981124167043301.zip

20/05/03 17:46:29 INFO DFSClient: Exception in createBlockOutputStream

org.apache.hadoop.net.ConnectTimeoutException: 60000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=/192.168.16.6:9866]

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:534)

at org.apache.hadoop.hdfs.DFSOutputStream.createSocketForPipeline(DFSOutputStream.java:1533)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1309)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1262)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:448)

20/05/03 17:46:29 INFO DFSClient: Abandoning BP-1700972659-172.30.0.2-1588486994156:blk_1073741833_1009

20/05/03 17:46:29 INFO DFSClient: Excluding datanode DatanodeInfoWithStorage[192.168.16.6:9866,DS-6d0dcfb4-265a-4a8f-a86c-35fcc6e8ca70,DISK]

20/05/03 17:46:29 WARN DFSClient: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/foobar/.sparkStaging/application_1588537985407_0007/__spark_libs__8443981124167043301.zip could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678)

at org.apache.hadoop.ipc.Client.call(Client.java:1475)

at org.apache.hadoop.ipc.Client.call(Client.java:1412)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy13.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:418)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy14.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1455)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1251)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:448)

20/05/03 17:46:29 INFO Client: Deleted staging directory hdfs://0.0.0.0:9000/user/foobar/.sparkStaging/application_1588537985407_0007

20/05/03 17:46:29 ERROR SparkContext: Error initializing SparkContext.

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/foobar/.sparkStaging/application_1588537985407_0007/__spark_libs__8443981124167043301.zip could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678)

at org.apache.hadoop.ipc.Client.call(Client.java:1475)

at org.apache.hadoop.ipc.Client.call(Client.java:1412)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy13.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:418)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy14.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1455)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1251)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:448)

20/05/03 17:46:29 INFO SparkUI: Stopped Spark web UI at http://foobars-mbp.box:4040

20/05/03 17:46:29 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

20/05/03 17:46:29 INFO YarnClientSchedulerBackend: Stopped

20/05/03 17:46:29 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

20/05/03 17:46:29 INFO MemoryStore: MemoryStore cleared

20/05/03 17:46:29 INFO BlockManager: BlockManager stopped

20/05/03 17:46:29 INFO BlockManagerMaster: BlockManagerMaster stopped

20/05/03 17:46:29 WARN MetricsSystem: Stopping a MetricsSystem that is not running

20/05/03 17:46:29 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

20/05/03 17:46:29 INFO SparkContext: Successfully stopped SparkContext

Exception in thread "main" org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/foobar/.sparkStaging/application_1588537985407_0007/__spark_libs__8443981124167043301.zip could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678)

at org.apache.hadoop.ipc.Client.call(Client.java:1475)

at org.apache.hadoop.ipc.Client.call(Client.java:1412)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy13.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:418)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy14.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1455)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1251)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:448)

20/05/03 17:46:29 INFO ShutdownHookManager: Shutdown hook called

20/05/03 17:46:29 INFO ShutdownHookManager: Deleting directory /private/var/folders/1x/h0q3vtw17ddbys9bjcf41mtr0000gn/T/spark-e77adcce-715f-43d1-a01e-d4141349ed13

20/05/03 17:46:29 INFO ShutdownHookManager: Deleting directory /private/var/folders/1x/h0q3vtw17ddbys9bjcf41mtr0000gn/T/spark-5467a437-f3e2-4c23-9a15-9051aa89e222

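Note the error: Exception in thread "main" org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/foobar/.sparkStaging/application_1588537985407_0007/__spark_libs__8443981124167043301.zip could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

If I check HDFS while this is happening, I can see that the Spark staging directories have been created: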
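The counts in that message carry most of the diagnosis: when the number of excluded nodes equals the number of running datanodes, the client has given up on every datanode it was offered (the log shows the matching "Excluding datanode" line just before). The following is a minimal Python sketch for pulling those counts out of a log line. The function name `summarize` and the `all_excluded` flag are illustrative only and are not part of Hadoop or Spark.

```python
import re

# Matches the NameNode's "could only be written to ..." message seen in the log.
PATTERN = re.compile(
    r"could only be written to (\d+) of the (\d+) minReplication nodes\. "
    r"There are (\d+) datanode\(s\) running and (\d+) node\(s\) are excluded"
)


def summarize(message):
    """Return the counts found in the message, or None if it does not match."""
    match = PATTERN.search(message)
    if match is None:
        return None
    written, min_replication, running, excluded = map(int, match.groups())
    return {
        "written": written,
        "min_replication": min_replication,
        "running": running,
        "excluded": excluded,
        # Illustrative flag: True when no running datanode is left to try.
        "all_excluded": running > 0 and excluded >= running,
    }


if __name__ == "__main__":
    # Shortened example message; the file path here is a placeholder.
    line = (
        "File /tmp/example.zip could only be written to 0 of the 1 "
        "minReplication nodes. There are 1 datanode(s) running and "
        "1 node(s) are excluded in this operation."
    )
    print(summarize(line))
```

If `all_excluded` is true while the datanode itself reports as healthy, the next thing to check is usually whether the client can reach the datanode address at all.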

hdfs@243579e354c0:/app$ hadoop fs -ls /user/foobar/.sparkStaging

WARNING: log4j.properties is not found. HADOOP_CONF_DIR may be incomplete.

Found 2 items

drwx------ - foobar hadoop 0 2020-05-03 22:43 /user/foobar/.sparkStaging/application_1588537985407_0006

drwx------ - foobar hadoop 0 2020-05-03 22:45 /user/foobar/.sparkStaging/application_1588537985407_0007

Spark Executor Command: "/usr/local/openjdk-8/bin/java" "-cp" "/app/config/:/app/spark/jars/*" "-Xmx1024M" "-Dspark.driver.port=51462" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@foobars-mbp.box:51462" "--executor-id" "0" "--hostname" "192.168.16.10" "--cores" "8" "--app-id" "app-20200503204833-0000" "--worker-url" "spark://Worker@192.168.16.10:41839"

========================================

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

20/05/03 20:48:34 INFO CoarseGrainedExecutorBackend: Started daemon with process name: 84@ad4c05fe6b8a

20/05/03 20:48:34 INFO SignalUtils: Registered signal handler for TERM

20/05/03 20:48:34 INFO SignalUtils: Registered signal handler for HUP

20/05/03 20:48:34 INFO SignalUtils: Registered signal handler for INT

20/05/03 20:48:35 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

20/05/03 20:48:35 INFO SecurityManager: Changing view acls to: hdfs,foobar

20/05/03 20:48:35 INFO SecurityManager: Changing modify acls to: hdfs,foobar

20/05/03 20:48:35 INFO SecurityManager: Changing view acls groups to:

20/05/03 20:48:35 INFO SecurityManager: Changing modify acls groups to:

20/05/03 20:48:35 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hdfs, foobar); groups with view permissions: Set(); users with modify permissions: Set(hdfs, foobar); groups with modify permissions: Set()

Exception in thread "main" java.lang.reflect.UndeclaredThrowableException

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1748)

at org.apache.spark.deploy.SparkHadoopUtil.runAsSparkUser(SparkHadoopUtil.scala:61)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.run(CoarseGrainedExecutorBackend.scala:257)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.main(CoarseGrainedExecutorBackend.scala:247)

at org.apache.spark.executor.CoarseGrainedExecutorBackend.main(CoarseGrainedExecutorBackend.scala)

Caused by: org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:227)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:101)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.$anonfun$run$3(CoarseGrainedExecutorBackend.scala:277)

at scala.runtime.java8.JFunction1$mcVI$sp.apply(JFunction1$mcVI$sp.java:23)

at scala.collection.TraversableLike$WithFilter.$anonfun$foreach$1(TraversableLike.scala:877)

at scala.collection.immutable.Range.foreach(Range.scala:158)

at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:876)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.$anonfun$run$1(CoarseGrainedExecutorBackend.scala:275)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$1.run(SparkHadoopUtil.scala:62)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$1.run(SparkHadoopUtil.scala:61)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

... 4 more

Caused by: java.io.IOException: Failed to connect to foobars-mbp.box:51462

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:253)

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:195)

at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:204)

at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:202)

at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:198)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.UnknownHostException: foobars-mbp.box

at java.net.InetAddress.getAllByName0(InetAddress.java:1281)

at java.net.InetAddress.getAllByName(InetAddress.java:1193)

at java.net.InetAddress.getAllByName(InetAddress.java:1127)

at java.net.InetAddress.getByName(InetAddress.java:1077)

at io.netty.util.internal.SocketUtils$8.run(SocketUtils.java:146)

at io.netty.util.internal.SocketUtils$8.run(SocketUtils.java:143)

at java.security.AccessController.doPrivileged(Native Method)

at io.netty.util.internal.SocketUtils.addressByName(SocketUtils.java:143)

at io.netty.resolver.DefaultNameResolver.doResolve(DefaultNameResolver.java:43)

at io.netty.resolver.SimpleNameResolver.resolve(SimpleNameResolver.java:63)

at io.netty.resolver.SimpleNameResolver.resolve(SimpleNameResolver.java:55)

at io.netty.resolver.InetSocketAddressResolver.doResolve(InetSocketAddressResolver.java:57)

at io.netty.resolver.InetSocketAddressResolver.doResolve(InetSocketAddressResolver.java:32)

at io.netty.resolver.AbstractAddressResolver.resolve(AbstractAddressResolver.java:108)

at io.netty.bootstrap.Bootstrap.doResolveAndConnect0(Bootstrap.java:202)

at io.netty.bootstrap.Bootstrap.access$000(Bootstrap.java:48)

at io.netty.bootstrap.Bootstrap$1.operationComplete(Bootstrap.java:182)

at io.netty.bootstrap.Bootstrap$1.operationComplete(Bootstrap.java:168)

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:577)

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:551)

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:490)

at io.netty.util.concurrent.DefaultPromise.setValue0(DefaultPromise.java:615)

at io.netty.util.concurrent.DefaultPromise.setSuccess0(DefaultPromise.java:604)

at io.netty.util.concurrent.DefaultPromise.trySuccess(DefaultPromise.java:104)

at io.netty.channel.DefaultChannelPromise.trySuccess(DefaultChannelPromise.java:84)

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetSuccess(AbstractChannel.java:985)

at io.netty.channel.AbstractChannel$AbstractUnsafe.register0(AbstractChannel.java:505)

at io.netty.channel.AbstractChannel$AbstractUnsafe.access$200(AbstractChannel.java:416)

at io.netty.channel.AbstractChannel$AbstractUnsafe$1.run(AbstractChannel.java:475)

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:510)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:518)

at io.netty.util.concurrent.SingleThreadEventExecutor$6.run(SingleThreadEventExecutor.java:1044)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

... 1 more

Other configuration files that may be relevant:

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://namenode:9000</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/app/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/app/data/datanode</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

</configuration>

spark-defaults.conf

spark.master yarn

spark.driver.memory 512m

spark.executor.memory 1g

spark.yarn.archive hdfs:///user/foo/spark-libs.jar

yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.address</name>

<value>resource-manager:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>resource-manager:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>resource-manager:8031</value>

</property>

<property>

<name>yarn.acl.enable</name>

<value>0</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>resource-manager</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

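docker exec -it spark-master /bin/bash

Then, inside the container:

spark-submit --master yarn --class org.apache.spark.examples.SparkPi /app/spark/examples/jars/spark-examples_2.12-3.0.0-preview2.jar 10

This looks like a networking problem that occurs when submitting from outside the container network. Is there a way to debug this?

1 Answer

It turned out to be a networking issue. If you look closely at what was originally posted in the question, you will see the following error in the log, one that I missed at first: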

org.apache.hadoop.net.ConnectTimeoutException: 60000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=/192.168.16.6:9866]

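The IP address 192.168.16.6 is the IP address of the Docker container, as seen from inside the Docker network. Since I am submitting from outside the Docker container, this IP will never be routable. Here is what happens:

- I submit the job to the cluster.

- I am eventually handed the IP address of my (only) datanode.

- I try to talk to the datanode at that address, which is the container's IP inside the Docker network.

- That IP is not routable, so the request eventually times out.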
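The steps above can be checked from the submitting machine with a plain TCP connection attempt. This is a minimal Python sketch, not something from the original answer; `can_connect` is a hypothetical helper name, and the address and port are the ones shown in the ConnectTimeoutException.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Datanode address and port as reported in the log.
    print(can_connect("192.168.16.6", 9866))
```

Run from outside the Docker network, this would be expected to print False for the container IP, which matches the 60000 ms timeout in the log.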

hostname: hadoop

10.0.2.2 hadoop

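In this case, 10.0.2.2 is localhost outside the Docker network, that is, my actual host machine.

I then updated my Hadoop configuration so that the datanode returns a hostname instead of an IP, like this: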
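Whether a name such as `hadoop` actually resolves on a given machine can be checked with a short Python sketch like the one below. The helper `resolve` and its `lookup` parameter are illustrative assumptions; the hostnames are the ones that appear in this post.

```python
import socket


def resolve(hostname, lookup=socket.gethostbyname):
    """Return the IPv4 address for hostname, or None if it cannot be resolved.

    The lookup parameter exists only so the function can be exercised
    without real DNS; by default the system resolver is used.
    """
    try:
        return lookup(hostname)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None


if __name__ == "__main__":
    # "hadoop" is the name mapped above; "foobars-mbp.box" is the driver host
    # that the executor log reports as an UnknownHostException.
    for name in ("hadoop", "foobars-mbp.box"):
        print(name, "->", resolve(name))
```

A result of None for a given name on a given machine means that machine has no mapping for it, which is the same condition the executor reported for `foobars-mbp.box`.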

<configuration>

<property>

<name>dfs.client.use.datanode.hostname</name>

<value>true</value>

</property>

<property>

<name>dfs.datanode.use.datanode.hostname</name>

<value>true</value>

</property>

</configuration>
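To confirm that the configuration the client actually loads contains both settings, the file can be parsed with a few lines of Python. This is only a sketch: `read_properties` and `uses_datanode_hostname` are hypothetical helper names, and the XML is passed in as a string so that no file location is assumed.

```python
import xml.etree.ElementTree as ET

# The two properties set in the configuration above.
HOSTNAME_KEYS = (
    "dfs.client.use.datanode.hostname",
    "dfs.datanode.use.datanode.hostname",
)


def read_properties(xml_text):
    """Parse Hadoop-style <configuration> XML into a dict of name -> value."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def uses_datanode_hostname(props):
    """Return True only if both hostname settings are present and set to true."""
    return all(props.get(key, "false").lower() == "true" for key in HOSTNAME_KEYS)


if __name__ == "__main__":
    sample = """
    <configuration>
      <property>
        <name>dfs.client.use.datanode.hostname</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.datanode.use.datanode.hostname</name>
        <value>true</value>
      </property>
    </configuration>
    """
    print(uses_datanode_hostname(read_properties(sample)))
```

A missing property is treated as false here, which is an assumption of this sketch rather than a statement about Hadoop's defaults.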

The subsequent spark-submit worked.
