HA configuration problem with ActiveMQ Artemis

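I am trying to configure HA on ActiveMQ Artemis 2.13, starting with a simple primary and backup pair. I have read the clustering and HA documentation several times, but I am still not sure what I am doing. I have also studied the replicated-failback Java example.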

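From the client, do I have to specify connection information for both the primary and the backup node? The example confuses me because the URL/connection appears to be passed to the Java program as input parameters, and I am not sure where those come from.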

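In the primary's console everything looks normal, except that I now have "Broadcast groups" and "Cluster connections". The secondary (backup) has only those two.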

On the primary, the attribute "failover on server shutdown" is false...

Here is the HA configuration I have so far:

Primary (192.168.56.105) broker.xml:

<configuration xmlns="urn:activemq"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:xi="http://www.w3.org/2001/XInclude"

xsi:schemaLocation="urn:activemq /schema/artemis-configuration.xsd">

<core xmlns="urn:activemq:core" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:activemq:core ">

<name>0.0.0.0</name>

<persistence-enabled>true</persistence-enabled>

<journal-type>NIO</journal-type>

<paging-directory>data/paging</paging-directory>

<bindings-directory>data/bindings</bindings-directory>

<journal-directory>data/journal</journal-directory>

<large-messages-directory>data/large-messages</large-messages-directory>

<journal-datasync>true</journal-datasync>

<journal-min-files>2</journal-min-files>

<journal-pool-files>10</journal-pool-files>

<journal-device-block-size>4096</journal-device-block-size>

<journal-file-size>10M</journal-file-size>

<journal-buffer-timeout>2884000</journal-buffer-timeout>

<journal-max-io>1</journal-max-io>

<disk-scan-period>5000</disk-scan-period>

<max-disk-usage>90</max-disk-usage>

<critical-analyzer>true</critical-analyzer>

<critical-analyzer-timeout>120000</critical-analyzer-timeout>

<critical-analyzer-check-period>60000</critical-analyzer-check-period>

<critical-analyzer-policy>HALT</critical-analyzer-policy>

<page-sync-timeout>2884000</page-sync-timeout>

<acceptors>

<!-- Acceptor for every supported protocol -->

<acceptor name="artemis">tcp://0.0.0.0:61616?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;amqpMinLargeMessageSize=102400;protocols=CORE,AMQP,STOMP,HORNETQ,MQTT,OPENWIRE;useEpoll=true;amqpCredits=1000;amqpLowCredits=300;amqpDuplicateDetection=true</acceptor>

<!-- AMQP Acceptor. Listens on default AMQP port for AMQP traffic.-->

<acceptor name="amqp">tcp://0.0.0.0:5672?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=AMQP;useEpoll=true;amqpCredits=1000;amqpLowCredits=300;amqpMinLargeMessageSize=102400;amqpDuplicateDetection=true</acceptor>

<!-- STOMP Acceptor. -->

<acceptor name="stomp">tcp://0.0.0.0:61613?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=STOMP;useEpoll=true</acceptor>

<!-- HornetQ Compatibility Acceptor. Enables HornetQ Core and STOMP for legacy HornetQ clients. -->

<acceptor name="hornetq">tcp://0.0.0.0:5445?anycastPrefix=jms.queue.;multicastPrefix=jms.topic.;protocols=HORNETQ,STOMP;useEpoll=true</acceptor>

<!-- MQTT Acceptor -->

<acceptor name="mqtt">tcp://0.0.0.0:1883?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=MQTT;useEpoll=true</acceptor>

</acceptors>

<connectors>

<connector name="artemis">tcp://192.168.56.105:61616</connector>

</connectors>

<broadcast-groups>

<broadcast-group name="broadcast-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

<connector-ref>artemis</connector-ref>

</broadcast-group>

</broadcast-groups>

<discovery-groups>

<discovery-group name="discovery-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

</discovery-group>

</discovery-groups>

<cluster-user>cluster.user</cluster-user>

<cluster-password>password</cluster-password>

<ha-policy>

<replication>

<master>

<check-for-live-server>true</check-for-live-server>

</master>

</replication>

</ha-policy>

<cluster-connections>

<cluster-connection name="cluster-1">

<connector-ref>artemis</connector-ref>

<discovery-group-ref discovery-group-name="discovery-group-1"/>

</cluster-connection>

</cluster-connections>

<security-settings>

<security-setting match="#">

<permission type="createNonDurableQueue" roles="amq"/>

<permission type="deleteNonDurableQueue" roles="amq"/>

<permission type="createDurableQueue" roles="amq"/>

<permission type="deleteDurableQueue" roles="amq"/>

<permission type="createAddress" roles="amq"/>

<permission type="deleteAddress" roles="amq"/>

<permission type="consume" roles="amq"/>

<permission type="browse" roles="amq"/>

<permission type="send" roles="amq"/>

<!-- we need this otherwise ./artemis data imp wouldn't work -->

<permission type="manage" roles="amq"/>

</security-setting>

</security-settings>

<address-settings>

<!-- if you define auto-create on certain queues, management has to be auto-create -->

<address-setting match="activemq.management#">

<dead-letter-address>DLQ</dead-letter-address>

<expiry-address>ExpiryQueue</expiry-address>

<redelivery-delay>0</redelivery-delay>

<!-- with -1 only the global-max-size is in use for limiting -->

<max-size-bytes>-1</max-size-bytes>

<message-counter-history-day-limit>10</message-counter-history-day-limit>

<address-full-policy>PAGE</address-full-policy>

<auto-create-queues>true</auto-create-queues>

<auto-create-addresses>true</auto-create-addresses>

<auto-create-jms-queues>true</auto-create-jms-queues>

<auto-create-jms-topics>true</auto-create-jms-topics>

</address-setting>

<!--default for catch all-->

<address-setting match="#">

<dead-letter-address>DLQ</dead-letter-address>

<expiry-address>ExpiryQueue</expiry-address>

<redelivery-delay>0</redelivery-delay>

<!-- with -1 only the global-max-size is in use for limiting -->

<max-size-bytes>-1</max-size-bytes>

<message-counter-history-day-limit>10</message-counter-history-day-limit>

<address-full-policy>PAGE</address-full-policy>

<auto-create-queues>true</auto-create-queues>

<auto-create-addresses>true</auto-create-addresses>

<auto-create-jms-queues>true</auto-create-jms-queues>

<auto-create-jms-topics>true</auto-create-jms-topics>

</address-setting>

</address-settings>

<addresses>

<address name="DLQ">

<anycast>

<queue name="DLQ" />

</anycast>

</address>

<address name="ExpiryQueue">

<anycast>

<queue name="ExpiryQueue" />

</anycast>

</address>

</addresses>

</core>

</configuration>

Backup (192.168.56.106) broker.xml:

<configuration xmlns="urn:activemq"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:xi="http://www.w3.org/2001/XInclude"

xsi:schemaLocation="urn:activemq /schema/artemis-configuration.xsd">

<core xmlns="urn:activemq:core" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:activemq:core ">

<name>0.0.0.0</name>

<persistence-enabled>true</persistence-enabled>

<journal-type>NIO</journal-type>

<paging-directory>data/paging</paging-directory>

<bindings-directory>data/bindings</bindings-directory>

<journal-directory>data/journal</journal-directory>

<large-messages-directory>data/large-messages</large-messages-directory>

<journal-datasync>true</journal-datasync>

<journal-min-files>2</journal-min-files>

<journal-pool-files>10</journal-pool-files>

<journal-device-block-size>4096</journal-device-block-size>

<journal-file-size>10M</journal-file-size>

<journal-buffer-timeout>2868000</journal-buffer-timeout>

<journal-max-io>1</journal-max-io>

<disk-scan-period>5000</disk-scan-period>

<max-disk-usage>90</max-disk-usage>

<critical-analyzer>true</critical-analyzer>

<critical-analyzer-timeout>120000</critical-analyzer-timeout>

<critical-analyzer-check-period>60000</critical-analyzer-check-period>

<critical-analyzer-policy>HALT</critical-analyzer-policy>

<page-sync-timeout>2868000</page-sync-timeout>

<acceptors>

<!-- Acceptor for every supported protocol -->

<acceptor name="artemis">tcp://0.0.0.0:61616?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;amqpMinLargeMessageSize=102400;protocols=CORE,AMQP,STOMP,HORNETQ,MQTT,OPENWIRE;useEpoll=true;amqpCredits=1000;amqpLowCredits=300;amqpDuplicateDetection=true</acceptor>

<!-- AMQP Acceptor. Listens on default AMQP port for AMQP traffic.-->

<acceptor name="amqp">tcp://0.0.0.0:5672?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=AMQP;useEpoll=true;amqpCredits=1000;amqpLowCredits=300;amqpMinLargeMessageSize=102400;amqpDuplicateDetection=true</acceptor>

<!-- STOMP Acceptor. -->

<acceptor name="stomp">tcp://0.0.0.0:61613?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=STOMP;useEpoll=true</acceptor>

<!-- HornetQ Compatibility Acceptor. Enables HornetQ Core and STOMP for legacy HornetQ clients. -->

<acceptor name="hornetq">tcp://0.0.0.0:5445?anycastPrefix=jms.queue.;multicastPrefix=jms.topic.;protocols=HORNETQ,STOMP;useEpoll=true</acceptor>

<!-- MQTT Acceptor -->

<acceptor name="mqtt">tcp://0.0.0.0:1883?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=MQTT;useEpoll=true</acceptor>

</acceptors>

<connectors>

<connector name="artemis">tcp://192.168.56.106:61616</connector>

</connectors>

<security-settings>

<security-setting match="#">

<permission type="createNonDurableQueue" roles="amq"/>

<permission type="deleteNonDurableQueue" roles="amq"/>

<permission type="createDurableQueue" roles="amq"/>

<permission type="deleteDurableQueue" roles="amq"/>

<permission type="createAddress" roles="amq"/>

<permission type="deleteAddress" roles="amq"/>

<permission type="consume" roles="amq"/>

<permission type="browse" roles="amq"/>

<permission type="send" roles="amq"/>

<!-- we need this otherwise ./artemis data imp wouldn't work -->

<permission type="manage" roles="amq"/>

</security-setting>

</security-settings>

<address-settings>

<!-- if you define auto-create on certain queues, management has to be auto-create -->

<address-setting match="activemq.management#">

<dead-letter-address>DLQ</dead-letter-address>

<expiry-address>ExpiryQueue</expiry-address>

<redelivery-delay>0</redelivery-delay>

<!-- with -1 only the global-max-size is in use for limiting -->

<max-size-bytes>-1</max-size-bytes>

<message-counter-history-day-limit>10</message-counter-history-day-limit>

<address-full-policy>PAGE</address-full-policy>

<auto-create-queues>true</auto-create-queues>

<auto-create-addresses>true</auto-create-addresses>

<auto-create-jms-queues>true</auto-create-jms-queues>

<auto-create-jms-topics>true</auto-create-jms-topics>

</address-setting>

<!--default for catch all-->

<address-setting match="#">

<dead-letter-address>DLQ</dead-letter-address>

<expiry-address>ExpiryQueue</expiry-address>

<redelivery-delay>0</redelivery-delay>

<!-- with -1 only the global-max-size is in use for limiting -->

<max-size-bytes>-1</max-size-bytes>

<message-counter-history-day-limit>10</message-counter-history-day-limit>

<address-full-policy>PAGE</address-full-policy>

<auto-create-queues>true</auto-create-queues>

<auto-create-addresses>true</auto-create-addresses>

<auto-create-jms-queues>true</auto-create-jms-queues>

<auto-create-jms-topics>true</auto-create-jms-topics>

</address-setting>

</address-settings>

<addresses>

<address name="DLQ">

<anycast>

<queue name="DLQ" />

</anycast>

</address>

<address name="ExpiryQueue">

<anycast>

<queue name="ExpiryQueue" />

</anycast>

</address>

</addresses>

<broadcast-groups>

<broadcast-group name="broadcast-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

<connector-ref>artemis</connector-ref>

</broadcast-group>

</broadcast-groups>

<discovery-groups>

<discovery-group name="discovery-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

</discovery-group>

</discovery-groups>

<cluster-user>cluster.user</cluster-user>

<cluster-password>password</cluster-password>

<ha-policy>

<replication>

<slave>

<allow-failback>true</allow-failback>

</slave>

</replication>

</ha-policy>

<cluster-connections>

<cluster-connection name="cluster-1">

<connector-ref>artemis</connector-ref>

<discovery-group-ref discovery-group-name="discovery-group-1"/>

</cluster-connection>

</cluster-connections>

</core>

</configuration>

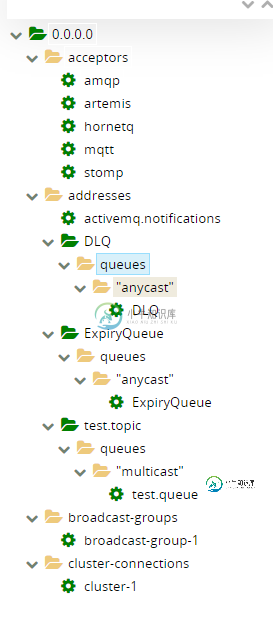

Also, here are screenshots of what the console shows. A lot is missing on the backup server.

Primary:

Backup:

1 answer

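The main thing you need in order to configure a cluster is the cluster-connection. The cluster-connection references a connector, and that connector tells other nodes how to connect to this one. In other words, the connector-ref configured on the cluster-connection should match an acceptor configured on the same broker. One problem you have is that both nodes have connectors that use 0.0.0.0. That is a meta-address meant only for acceptors; to a remote client it is meaningless. The connector should use the real IP address or hostname of the machine the server runs on.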

Here is the configuration for your two brokers:

Primary (192.168.56.105) broker.xml:

<acceptors>

<acceptor name="netty-acceptor">tcp://0.0.0.0:61616</acceptor>

</acceptors>

<connectors>

<connector name="netty-connector">tcp://192.168.56.105:61616</connector>

</connectors>

<broadcast-groups>

<broadcast-group name="broadcast-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

<connector-ref>netty-connector</connector-ref>

</broadcast-group>

</broadcast-groups>

<discovery-groups>

<discovery-group name="discovery-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

</discovery-group>

</discovery-groups>

<cluster-user>cluster.user</cluster-user>

<cluster-password>password</cluster-password>

<ha-policy>

<replication>

<master>

<check-for-live-server>true</check-for-live-server>

</master>

</replication>

</ha-policy>

<cluster-connections>

<cluster-connection name="cluster-1">

<connector-ref>netty-connector</connector-ref>

<discovery-group-ref discovery-group-name="discovery-group-1"/>

</cluster-connection>

</cluster-connections>

Backup (192.168.56.106) broker.xml:

<acceptors>

<acceptor name="netty-acceptor">tcp://0.0.0.0:61616</acceptor>

</acceptors>

<connectors>

<connector name="netty-connector">tcp://192.168.56.106:61616</connector>

</connectors>

<broadcast-groups>

<broadcast-group name="broadcast-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

<connector-ref>netty-connector</connector-ref>

</broadcast-group>

</broadcast-groups>

<discovery-groups>

<discovery-group name="discovery-group-1">

<group-address>231.7.7.7</group-address>

<group-port>9876</group-port>

</discovery-group>

</discovery-groups>

<cluster-user>cluster.user</cluster-user>

<cluster-password>password</cluster-password>

<ha-policy>

<replication>

<slave>

<allow-failback>true</allow-failback>

</slave>

</replication>

</ha-policy>

<cluster-connections>

<cluster-connection name="cluster-1">

<connector-ref>netty-connector</connector-ref>

<discovery-group-ref discovery-group-name="discovery-group-1"/>

</cluster-connection>

</cluster-connections>
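
On the client-side part of the question, the following is a minimal sketch rather than a definitive answer. It assumes a core JMS client and uses the Artemis URL form that lists several brokers in parentheses together with the `ha` and `reconnectAttempts` parameters. The class name `HaClientUrl` and its `build` method are hypothetical helpers made up for this illustration; only the resulting URL string is meant for the Artemis client.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper: composes an Artemis client URL for an HA pair. */
public final class HaClientUrl {

    private HaClientUrl() {
    }

    /**
     * Builds a URL such as "(tcp://h1:61616,tcp://h2:61616)?ha=true&reconnectAttempts=-1".
     * A single host is emitted without the surrounding parentheses.
     */
    public static String build(List<String> hosts, int port) {
        if (hosts.isEmpty()) {
            throw new IllegalArgumentException("at least one broker host is required");
        }
        String servers = hosts.stream()
                .map(host -> "tcp://" + host + ":" + port)
                .collect(Collectors.joining(","));
        String target = hosts.size() == 1 ? servers : "(" + servers + ")";
        // ha=true asks the client to follow the cluster topology on failover;
        // reconnectAttempts=-1 means "keep retrying without limit".
        return target + "?ha=true&reconnectAttempts=-1";
    }

    public static void main(String[] args) {
        // Addresses taken from the two broker.xml files above.
        String url = build(List.of("192.168.56.105", "192.168.56.106"), 61616);
        System.out.println(url);
        // With the Artemis JMS client on the classpath, this string would be passed to
        // org.apache.activemq.artemis.jms.client.ActiveMQConnectionFactory's URL constructor.
    }
}
```

With `ha=true` the client is normally told about the backup by the primary once it has connected, so listing the backup as well mainly matters when the primary is already down at the first connection attempt.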
