Kafka API: java.io.IOException: Can't resolve address: xxx.x.x.xx:9091

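I am trying to send messages to Kafka, which is installed in an Ubuntu VM.

Three Kafka brokers have been started in the VM, and a consumer in the VM is listening on the topic. All of this works fine.

On Windows 7, in IntelliJ, I wrote a small demo application for KafkaProducer: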

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    import java.util.Properties;

    public class KafkaProducerApp {

        public static void main(String[] args) {

            // Create a properties dictionary for the required/optional Producer config settings:
            Properties props = new Properties();
            props.put("bootstrap.servers", "localhost:9091,localhost:9092");
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            //--> props.put("config.setting", "value");
            //:: http://kafka.apache.org/documentation.html#producerconfigs

            System.out.println("program start");

            KafkaProducer<String, String> myProducer = new KafkaProducer<String, String>(props);

            try {
                for (int i = 0; i < 150; i++) {
                    myProducer.send(new ProducerRecord<String, String>("my-topic", Integer.toString(i), "MyMessage: " + Integer.toString(i)));
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                System.out.println("program close");
                myProducer.close();
            }

            System.out.println("program end");
        }
    }

The connection is refused:

"C:\Program Files\Java\jdk1.8.0_131\bin\java" -Dorg.slf4j.simpleLogger.defaultLogLevel=DEBUG "-javaagent:C:\Program Files\JetBrains\IntelliJ IDEA Community Edition 2017.1.3\lib\idea_rt.jar=62649:C:\Program Files\JetBrains\IntelliJ IDEA Community Edition 2017.1.3\bin" -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.8.0_131\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\cldrdata.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\jfxrt.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\nashorn.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\jce.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\jfxswt.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\resources.jar;C:\Program Files\Java\jdk1.8.0_131\jre\lib\rt.jar;C:\Dev\kafka-poc\target\classes;C:\Users\rstannard\.m2\repository\org\apache\kafka\kafka-clients\0.10.2.1\kafka-clients-0.10.2.1.jar;C:\Users\rstannard\.m2\repository\net\jpountz\lz4\lz4\1.3.0\lz4-1.3.0.jar;C:\Users\rstannard\.m2\repository\org\xerial\snappy\snappy-java\1.1.2.6\snappy-java-1.1.2.6.jar;C:\Users\rstannard\.m2\repository\org\slf4j\slf4j-api\1.7.21\slf4j-api-1.7.21.jar;C:\Users\rstannard\.m2\repository\org\slf4j\slf4j-simple\1.7.21\slf4j-simple-1.7.21.jar" com.riskcare.kafkapoc.KafkaProducerApp

program start

[main] INFO org.apache.kafka.clients.producer.ProducerConfig - ProducerConfig values:

acks = 1

batch.size = 16384

block.on.buffer.full = false

bootstrap.servers = [localhost:9091, localhost:9092]

buffer.memory = 33554432

client.id =

compression.type = none

connections.max.idle.ms = 540000

interceptor.classes = null

key.serializer = class org.apache.kafka.common.serialization.StringSerializer

linger.ms = 0

max.block.ms = 60000

max.in.flight.requests.per.connection = 5

max.request.size = 1048576

metadata.fetch.timeout.ms = 60000

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.sample.window.ms = 30000

partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes = 32768

reconnect.backoff.ms = 50

request.timeout.ms = 30000

retries = 0

retry.backoff.ms = 100

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

timeout.ms = 30000

value.serializer = class org.apache.kafka.common.serialization.StringSerializer

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name bufferpool-wait-time

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name buffer-exhausted-records

[main] DEBUG org.apache.kafka.clients.Metadata - Updated cluster metadata version 1 to Cluster(id = null, nodes = [localhost:9092 (id: -2 rack: null), localhost:9091 (id: -1 rack: null)], partitions = [])

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name connections-closed:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name connections-created:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name bytes-sent-received:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name bytes-sent:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name bytes-received:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name select-time:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name io-time:

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name batch-size

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name compression-rate

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name queue-time

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name request-time

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name produce-throttle-time

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name records-per-request

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name record-retries

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name errors

[main] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name record-size-max

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.producer.internals.Sender - Starting Kafka producer I/O thread.

[main] INFO org.apache.kafka.common.utils.AppInfoParser - Kafka version : 0.10.2.1

[main] INFO org.apache.kafka.common.utils.AppInfoParser - Kafka commitId : e89bffd6b2eff799

[main] DEBUG org.apache.kafka.clients.producer.KafkaProducer - Kafka producer started

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initialize connection to node -1 for sending metadata request

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating connection to node -1 at localhost:9091.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--1.bytes-sent

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--1.bytes-received

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--1.latency

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.network.Selector - Connection with localhost/127.0.0.1 disconnected

java.net.ConnectException: Connection refused: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.kafka.common.network.PlaintextTransportLayer.finishConnect(PlaintextTransportLayer.java:51)

at org.apache.kafka.common.network.KafkaChannel.finishConnect(KafkaChannel.java:81)

at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:335)

at org.apache.kafka.common.network.Selector.poll(Selector.java:303)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:349)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:225)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:126)

at java.lang.Thread.run(Thread.java:748)

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Node -1 disconnected.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initialize connection to node -2 for sending metadata request

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating connection to node -2 at localhost:9092.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--2.bytes-sent

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--2.bytes-received

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--2.latency

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.network.Selector - Connection with localhost/127.0.0.1 disconnected

java.net.ConnectException: Connection refused: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.kafka.common.network.PlaintextTransportLayer.finishConnect(PlaintextTransportLayer.java:51)

at org.apache.kafka.common.network.KafkaChannel.finishConnect(KafkaChannel.java:81)

at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:335)

at org.apache.kafka.common.network.Selector.poll(Selector.java:303)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:349)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:225)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:126)

at java.lang.Thread.run(Thread.java:748)

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Node -2 disconnected.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initialize connection to node -1 for sending metadata request

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating connection to node -1 at localhost:9091.

Process finished with exit code 1

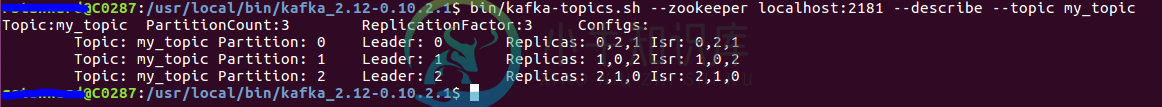

Here is some output from Ubuntu:

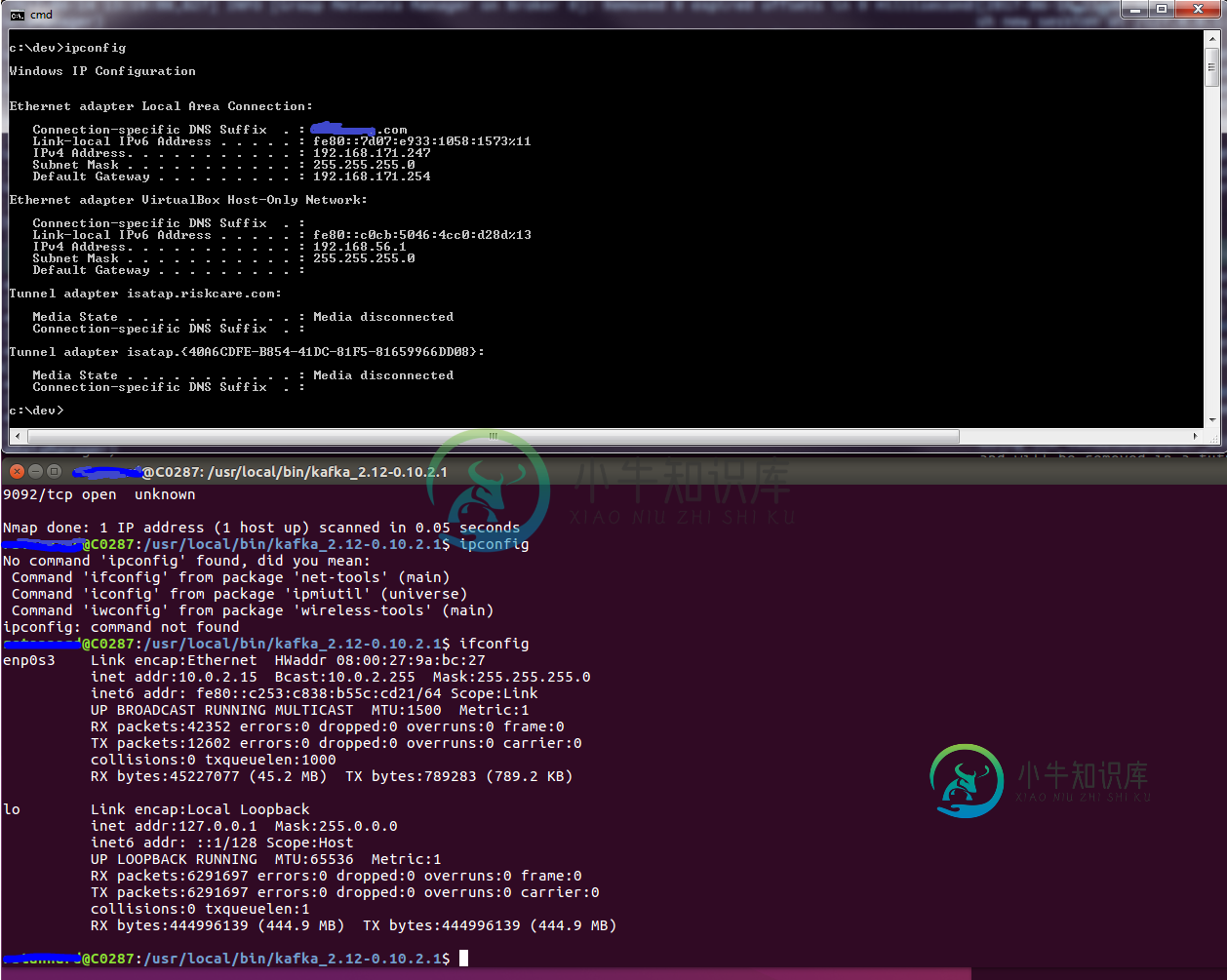

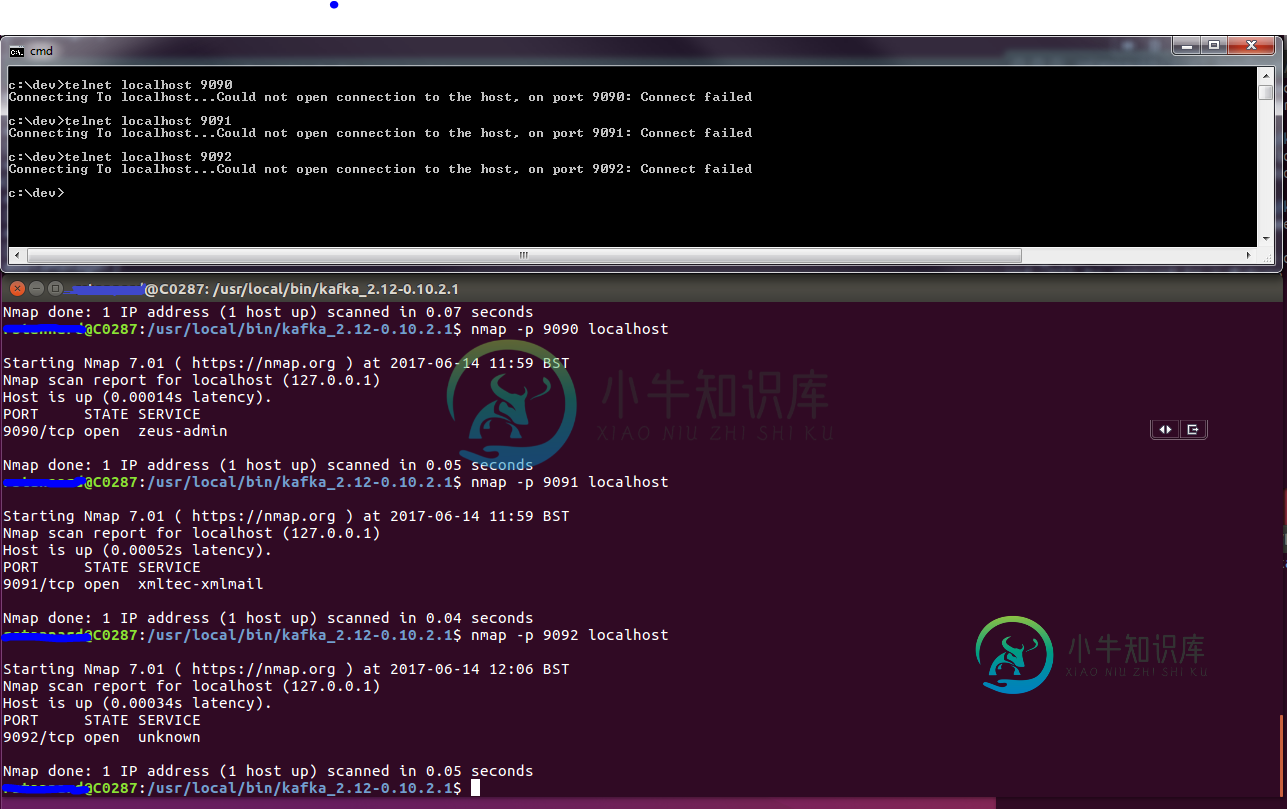

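Update following ppatierno's answer (I can't add images to a comment, so I am adding the update here)

Thanks for your comment. Below are screenshots of:

1/ my Windows terminal, using Telnet to try to connect to my VM session

2/ my Ubuntu VM session, showing the ports open on localhost 127.0.0.1

3/ ipconfig, showing the IP address of the host

4/ ifconfig, showing the IP address of the Ubuntu VM (guest).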

https://www.howtogeek.com/122641/how-to-forward-ports-to-a-virtual-machine-and-use-it-as-a-server/
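The Telnet check listed above can also be run from Java, which tests reachability from the same JVM that runs the producer. This is only a sketch under assumptions: the class name `PortProbe`, the helper `isReachable`, the 2-second timeout, and the default host and ports (taken from the addresses that appear in the logs of this question) are illustrative and should be replaced with your own values.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortProbe {

    // Tries to open a TCP connection, much like `telnet host port` would.
    // Returns false on refusal, timeout, or an unresolvable host name.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed values: the VM address and broker ports seen in the logs of this question.
        String host = args.length > 0 ? args[0] : "192.168.56.101";
        int[] ports = {9090, 9091, 9092};
        for (int port : ports) {
            String status = isReachable(host, port, 2000) ? "open" : "not reachable";
            System.out.println(host + ":" + port + " -> " + status);
        }
    }
}
```

A port reported as open only shows that a TCP connection can be made; the producer must still be able to resolve and reach whatever addresses the brokers hand back in their metadata.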

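But now, when I try to run the Java producer application, I hit a different problem. The log seems to show that it discovers the 3 brokers, but for some reason the program cannot connect to them.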

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initialize connection to node -1 for sending metadata request

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating connection to node -1 at 192.168.56.101:9091.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--1.bytes-sent

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--1.bytes-received

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name node--1[...] SO_SNDBUF = 131072, SO_TIMEOUT = 0 to node -1

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Completed connection to node -1. Fetching API versions.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating API versions fetch from node -1.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Recorded API versions for node -1: (Produce(0): 0 to 2 [usable: 2], Fetch(1): 0 to 3 [usable: 3], Offsets(2): 0 to 1 [usable: 1], Metadata(3): 0 to 2 [usable: 2], LeaderAndIsr(4): 0 [usable: 0], StopReplica(5): 0 [usable: 0], UpdateMetadata(6): 0 to 3 [usable: 3], ControlledShutdown(7): 1 [usable: 1], OffsetCommit(8): 0 to 2 [usable: 2], OffsetFetch(9): 0 to 2 [usable: 2], GroupCoordinator(10): 0 [usable: 0], JoinGroup(11): 0 to 1 [usable: 1], Heartbeat(12): 0 [usable: 0], LeaveGroup(13): 0 [usable: 0], SyncGroup(14): 0 [usable: 0], DescribeGroups(15): 0 [usable: 0], ListGroups(16): 0 [usable: 0], SaslHandshake(17): 0 [usable: 0], ApiVersions(18): 0 [usable: 0], CreateTopics(19): 0 to 1 [usable: 1], DeleteTopics(20): 0 [usable: 0])

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Sending metadata request (type=MetadataRequest, topics=my-topic) to node -1

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.Metadata - Updated cluster metadata version 2 to Cluster(id = QZEI79XCTmC8qJ48K1JwLw, nodes = [C0287.vm.xxxxxx.com:9092 (id: 2 rack: null), C0287.vm.xxxxxx.com:9090 (id: 0 rack: null), C0287.vm.xxxxxx.com:9091 (id: 1 rack: null)], partitions = [Partition(topic = my-topic, partition = 0, leader = 1, replicas = [0,1,2], isr = [1,2,0]), Partition(topic = my-topic, partition = 2, leader = 0, replicas = [0,1,2], isr = [0,1,2])])

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating connection to node 0 at c0287.vm.xxxxxxx.com:9090.

program close

[main] INFO org.apache.kafka.clients.producer.KafkaProducer - Closing the Kafka producer with timeoutMillis = 9223372036854775807 ms.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Error connecting to node 0 at c0287.vm.xxxxxx.com:9090:

java.io.IOException: Can't resolve address: c0287.vm.xxxxx.com:9090

at org.apache.kafka.common.network.Selector.connect(Selector.java:182)

at org.apache.kafka.clients.NetworkClient.initiateConnect([...])

[...]

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:184)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:126)

at java.lang.Thread.run(Thread.java:748)

Caused by: [...]

at sun.nio.ch.Net.checkAddress(Net.java:101)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:622)

[...]

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.producer.internals.Sender - Beginning shutdown of Kafka producer I/O thread, sending remaining records.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Initiating connection to node 1 at c0287.vm.xxxxxxx.com:9091.

[kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - Error connecting to node 1 at c0287.vm.xxxxxx.com:9091:

java.io.IOException: Can't resolve address: c0287.vm.xxxxxx.com:9091

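1 Answer

Make sure all three Kafka brokers are configured with an advertised listener on an IP address and port that can be reached by applications running outside the VM.

See the advertised.listeners broker parameter in the documentation here:

https://kafka.apache.org/documentation/#brokerconfigs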
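The "Can't resolve address" error in the question is raised on the client when a host name returned in the broker metadata cannot be resolved from the machine running the producer. As a rough diagnostic, the sketch below performs that same resolution step using only `java.net.InetSocketAddress`. The class name `AdvertisedAddressCheck`, the helper `canResolve`, and the default host:port list are illustrative assumptions, not part of the question or of the Kafka API; pass in whatever host:port pairs your brokers actually advertise.

```java
import java.net.InetSocketAddress;

final class AdvertisedAddressCheck {

    // Returns true if this JVM can turn host:port into a resolved socket address.
    // new InetSocketAddress(String, int) attempts a lookup and flags the address
    // as unresolved on failure instead of throwing.
    static boolean canResolve(String host, int port) {
        return !new InetSocketAddress(host, port).isUnresolved();
    }

    public static void main(String[] args) {
        // Hypothetical defaults; replace with the addresses your brokers advertise.
        String[] advertised = args.length > 0
                ? args
                : new String[] {"localhost:9091", "192.168.56.101:9091"};
        for (String hostPort : advertised) {
            int colon = hostPort.lastIndexOf(':');
            if (colon < 0) {
                System.out.println(hostPort + " -> skipped (expected host:port)");
                continue;
            }
            String host = hostPort.substring(0, colon);
            int port = Integer.parseInt(hostPort.substring(colon + 1));
            String status = canResolve(host, port) ? "resolvable" : "NOT resolvable";
            System.out.println(hostPort + " -> " + status);
        }
    }
}
```

If a name is reported as NOT resolvable on the client, the options are either to advertise an address the client can reach, as the answer suggests, or to make that name resolvable on the client, for example through its hosts file.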
