Android AutoFocusCallback is not called or does not return

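I am developing a barcode scanning application with the following functionality:

• Accesses the device camera, previews the camera on a SurfaceView, and refocuses automatically

• Attempts to decode the barcode using two methods

a) On a touch of the SurfaceView, using onTouchEvent(MotionEvent event), it tries to take a picture of the barcode, but gets

java.lang.RuntimeException: takePicture failed

b) Or, preferably, it takes a picture when onAutoFocus(boolean success, Camera camera) reports success, but onAutoFocus() is never called

I am implementing an AutoFocusCallback and setting it on the camera object in my scanning activity, named ScanVinFromBarcodeActivity.

Below is my ScanVinFromBarcodeActivity, which handles the camera functionality: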

package com.t.t.barcode;

/* I removed import statements because of character limit*/

import com.t.t.R;

public class ScanVinFromBarcodeActivity extends Activity {

private Camera globalCamera;

private int cameraId = 0;

private TextView VINtext = null;

private Bitmap bmpOfTheImageFromCamera = null;

private boolean isThereACamera = false;

private RelativeLayout RelativeLayoutBarcodeScanner = null;

private CameraPreview newCameraPreview = null;

private SurfaceView surfaceViewBarcodeScanner = null;

private String VIN = null;

private boolean globalFocusedBefore = false;

//This method , finds FEATURE_CAMERA, opens the camera, set parameters ,

@SuppressLint("InlinedApi")

private void initializeGlobalCamera() {

try {

if (!getPackageManager().hasSystemFeature(

PackageManager.FEATURE_CAMERA)) {

Toast.makeText(this, "No camera on this device",

Toast.LENGTH_LONG).show();

} else { // check for front camera ,and get the ID

cameraId = findFrontFacingCamera();

if (cameraId < 0) {

Toast.makeText(this, "No front facing camera found.",

Toast.LENGTH_LONG).show();

} else {

Log.d("ClassScanViewBarcodeActivity",

"camera was found , ID: " + cameraId);

// camera was found , set global camera flag to true

isThereACamera = true;

// OPEN

globalCamera = getGlobalCamera(cameraId);

//PARAMETERS FOR AUTOFOCUS

globalCamera.getParameters().setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);

//set auto focus callback , called when autofocus has completed

globalCamera.autoFocus(autoFocusCallback);

// pass the SurfaceView to CameraPreview

newCameraPreview = new CameraPreview(this,globalCamera){

@Override

public boolean onTouchEvent(MotionEvent event) {

Log.d("ClassScanViewBarcodeActivity"," onTouchEvent(MotionEvent event) ");

takePicture();

return super.onTouchEvent(event);

}

};

// pass CameraPreview to Layout

RelativeLayoutBarcodeScanner.addView(newCameraPreview);

//give reference SurfaceView to camera object

globalCamera

.setPreviewDisplay(surfaceViewBarcodeScanner

.getHolder());

// PREVIEW

globalCamera.startPreview();

Log.d("ClassScanViewBarcodeActivity",

"camera opened & previewing");

}

}// end else

}// end try

catch (Exception exc) {

// resources & cleanup

if (globalCamera != null) {

globalCamera.stopPreview();

globalCamera.setPreviewCallback(null);

globalCamera.release();

globalCamera = null;

}

Log.d("ClassScanViewBarcodeActivity initializeGlobalCamera() exception:",

exc.getMessage());

exc.printStackTrace();

}// end catch

}

// onCreate, instantiates layouts & surfaceView used for video preview

@Override

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_barcode_vin_scanner);

Log.d("ClassScanViewBarcodeActivity", "onCreate ");

// create surfaceView for previewing

RelativeLayoutBarcodeScanner = (RelativeLayout) findViewById(R.id.LayoutForPreview);

surfaceViewBarcodeScanner = (SurfaceView) findViewById(R.id.surfaceViewBarcodeScanner);

initializeGlobalCamera();

}// end onCreate

@Override

protected void onResume() {

Log.d("ClassScanViewBarcodeActivity, onResume() globalCamera:",

String.valueOf(globalCamera));

initializeGlobalCamera();

super.onResume();

}

@Override

protected void onStop() {

if (globalCamera != null) {

globalCamera.stopPreview();

globalCamera.setPreviewCallback(null);

globalCamera.release();

globalCamera = null;

}

super.onStop();

}

@Override

protected void onPause() {

if (globalCamera != null) {

globalCamera.stopPreview();

globalCamera.setPreviewCallback(null);

globalCamera.release();

globalCamera = null;

}

super.onPause();

}// end onPause()

// callback used by takePicture()

PictureCallback jpegCallback = new PictureCallback() {

@SuppressLint("NewApi")

public void onPictureTaken(byte[] imgData, Camera camera) {

BinaryBitmap bitmap = null ;

try {

Log.d("ClassScanViewBarcodeActivity", "onPictureTaken()");

// 1) down-sample the image to prevent an OutOfMemoryError

BitmapFactory.Options options = new BitmapFactory.Options();

options.inSampleSize = 4;

options.inPreferQualityOverSpeed =true;

bmpOfTheImageFromCamera = BitmapFactory.decodeByteArray(

imgData, 0, imgData.length, options);

if (bmpOfTheImageFromCamera != null) {

// 2) Samsung galaxy S only , rotate to correct orientation

Matrix rotationMatrix90CounterClockWise = new Matrix();

rotationMatrix90CounterClockWise.postRotate(90);

bmpOfTheImageFromCamera = Bitmap.createBitmap(

bmpOfTheImageFromCamera, 0, 0,

bmpOfTheImageFromCamera.getWidth(),

bmpOfTheImageFromCamera.getHeight(),

rotationMatrix90CounterClockWise, true);

// 3) calculate relative x,y & width & height , because SurfaceView coordinates are different than the coordinates of the image

guidanceRectangleCoordinatesCalculations newguidanceRectangleCoordinatesCalculations = new guidanceRectangleCoordinatesCalculations(

bmpOfTheImageFromCamera.getWidth(),

bmpOfTheImageFromCamera.getHeight());

// 4) crop & only take image/data that is within the bounding rectangle

Bitmap croppedBitmap = Bitmap

.createBitmap(

bmpOfTheImageFromCamera,

newguidanceRectangleCoordinatesCalculations

.getGuidanceRectangleStartX(),

newguidanceRectangleCoordinatesCalculations

.getGuidanceRectangleStartY(),

newguidanceRectangleCoordinatesCalculations

.getGuidanceRectangleEndX()

- newguidanceRectangleCoordinatesCalculations

.getGuidanceRectangleStartX(),

newguidanceRectangleCoordinatesCalculations

.getGuidanceRectangleEndY()

- newguidanceRectangleCoordinatesCalculations

.getGuidanceRectangleStartY());

//Bitmap to BinaryBitmap

bitmap = cameraBytesToBinaryBitmap(croppedBitmap);

//early cleanup

croppedBitmap.recycle();

croppedBitmap = null;

if (bitmap != null) {

// 7) decode the VIN

VIN = decodeBitmapToString(bitmap);

Log.d("***ClassScanViewBarcodeActivity ,onPictureTaken(): VIN ",

VIN);

if (VIN!=null)

{

//original before sharpen

byte [] bytesImage = bitmapToByteArray ( croppedBitmap );

savePicture(bytesImage, 0 , "original");

//after sharpen

croppedBitmap = sharpeningKernel (croppedBitmap);

bytesImage = bitmapToByteArray ( croppedBitmap );

savePicture(bytesImage, 0 , "sharpened");

Toast toast= Toast.makeText(getApplicationContext(),

"VIN:"+VIN, Toast.LENGTH_SHORT);

toast.setGravity(Gravity.TOP|Gravity.CENTER_HORIZONTAL, 0, 0);

toast.show();

}

} else {

Log.d("ClassScanViewBarcodeActivity ,onPictureTaken(): bitmap=",

String.valueOf(bitmap));

}

} else {

Log.d("ClassScanViewBarcodeActivity , onPictureTaken(): bmpOfTheImageFromCamera = ",

String.valueOf(bmpOfTheImageFromCamera));

}

}// end try

catch (Exception exc) {

exc.getMessage();

Log.d("ClassScanViewBarcodeActivity , scanButton.setOnClickListener(): exception = ",

exc.getMessage());

}

finally {

// start previewing again on the SurfaceView in case the user wants to

// take another pic/scan

globalCamera.startPreview();

bitmap = null;

}

}// end onPictureTaken()

};//end

//autoFocuscallback

AutoFocusCallback autoFocusCallback = new AutoFocusCallback() {

@Override

public void onAutoFocus(boolean success, Camera camera) {

Log.d("***onAutoFocus(boolean success, Camera camera)","success: "+success);

if (success)

{

globalFocusedBefore = true;

takePicture();

}

}

};//end

public Camera getGlobalCamera(int CameraId)

{

if (globalCamera==null)

{

// OPEN

globalCamera = Camera.open( CameraId);

}

return globalCamera;

}

/* find camera on device */

public static int findFrontFacingCamera() {

int cameraId = -1;

// Search for the front facing camera

int numberOfCameras = Camera.getNumberOfCameras();

for (int i = 0; i < numberOfCameras; i++) {

CameraInfo info = new CameraInfo();

Camera.getCameraInfo(i, info);

if (info.facing == CameraInfo.CAMERA_FACING_BACK) {

Log.d("ClassScanViewBarcodeActivity , findFrontFacingCamera(): ",

"Camera found");

cameraId = i;

break;

}

}

return cameraId;

}// end findFrontFacingCamera()

/*

* created savePicture(byte [] data) for testing

*/

public void savePicture(byte[] data, double sizeInPercent , String title) {

Log.d("ScanVinFromBarcodeActivity ", "savePicture(byte [] data)");

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyyMMddHHmmss");

String date = dateFormat.format(new Date());

String photoFile = "Picture_sample_sizePercent_" + sizeInPercent + "_" + title + "_"

+ date + ".jpg";

File sdDir = Environment

.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES);

String filename = sdDir + File.separator + photoFile;

File pictureFile = new File(filename);

try {

FileOutputStream fos = new FileOutputStream(pictureFile);

fos.write(data);

fos.close();

Toast.makeText(this, "New Image saved:" + photoFile,

Toast.LENGTH_LONG).show();

} catch (Exception error) {

Log.d("File not saved: ", error.getMessage());

Toast.makeText(this, "Image could not be saved.", Toast.LENGTH_LONG)

.show();

}

}

/*

* Attempts to decode barcode data inside the input bitmap. Uses zxing API */

@SuppressWarnings("finally")

public String decodeBitmapToString(BinaryBitmap bitmap) {

Log.d("ClassScanViewBarcodeActivity",

"decodeBitmapToString(BinaryBitmap bitmap");

Reader reader = null;

Result result = null;

String textResult = null;

Hashtable<DecodeHintType, Object> decodeHints = null;

try {

decodeHints = new Hashtable<DecodeHintType, Object>();

decodeHints.put(DecodeHintType.TRY_HARDER, Boolean.TRUE);

decodeHints.put(DecodeHintType.PURE_BARCODE, Boolean.TRUE);

reader = new MultiFormatReader();

if (bitmap != null) {

result = reader.decode(bitmap, decodeHints);

if (result != null) {

Log.d("decodeBitmapToString (BinaryBitmap bitmap): result = ",

String.valueOf(result));

Log.d("decodeBitmapToString (BinaryBitmap bitmap): result = ",

String.valueOf(result.getBarcodeFormat().toString()));

textResult = result.getText();

} else {

Log.d("decodeBitmapToString (BinaryBitmap bitmap): result = ",

String.valueOf(result));

}

} else {

Log.d("decodeBitmapToString (BinaryBitmap bitmap): bitmap = ",

String.valueOf(bitmap));

}

} catch (NotFoundException e) {

// TODO Auto-generated catch block

textResult = "Unable to decode. Please try again.";

e.printStackTrace();

Log.d("ClassScanViewBarcodeActivity, NotFoundException:",

e.getMessage());

} catch (ChecksumException e) {

textResult = "Unable to decode. Please try again.";

e.printStackTrace();

Log.d("ClassScanViewBarcodeActivity, ChecksumException:",

e.getMessage());

} catch (FormatException e) {

textResult = "Unable to decode. Please try again.";

e.printStackTrace();

Log.d("ClassScanViewBarcodeActivity, FormatException:",

e.getMessage());

}

finally {

//cleanup

reader = null;

result = null;

decodeHints = null;

return textResult;

}

}// end decodeBitmapToString ()

@SuppressWarnings("finally")

public BinaryBitmap cameraBytesToBinaryBitmap(Bitmap bitmap) {

Log.d("ClassScanViewBarcodeActivity , cameraBytesToBinaryBitmap (Bitmap bitmap):",

"");

BinaryBitmap binaryBitmap = null;

RGBLuminanceSource source = null;

HybridBinarizer bh = null;

try {

if (bitmap != null) {

//bitmap.getPixels(pixels, 0, bitmap.getWidth(), 0, 0,bitmap.getWidth(), bitmap.getHeight());

//RGBLuminanceSource source = new RGBLuminanceSource(bitmap.getWidth(), bitmap.getHeight(), pixels);

source = new RGBLuminanceSource(bitmap);

bh = new HybridBinarizer(source);

binaryBitmap = new BinaryBitmap(bh);

} else {

Log.d("ClassScanViewBarcodeActivity , cameraBytesToBinaryBitmap (Bitmap bitmap): bitmap = ",

String.valueOf(bitmap));

}

} catch (Exception exc) {

exc.printStackTrace();

} finally {

source = null;

bh = null;

return binaryBitmap;

}

}

@SuppressLint("NewApi")

@SuppressWarnings("deprecation")

/*

* The method getScreenOrientation() return screen orientation either

* landscape or portrait. IF width < height , than orientation = portrait,

* ELSE landscape For backwards compatibility we use to methods to detect

* the orientation. The first method is for API versions prior to 13 or

* HONEYCOMB.

*/

public int getScreenOrientation() {

int currentapiVersion = android.os.Build.VERSION.SDK_INT;

// if API version less than 13

Display getOrient = getWindowManager().getDefaultDisplay();

int orientation = Configuration.ORIENTATION_UNDEFINED;

if (currentapiVersion < android.os.Build.VERSION_CODES.HONEYCOMB) {

// Do something for API version less than HONEYCOMB

if (getOrient.getWidth() == getOrient.getHeight()) {

orientation = Configuration.ORIENTATION_SQUARE;

} else {

if (getOrient.getWidth() < getOrient.getHeight()) {

orientation = Configuration.ORIENTATION_PORTRAIT;

} else {

orientation = Configuration.ORIENTATION_LANDSCAPE;

}

}

} else {

// Do something for API version greater or equal to HONEYCOMB

Point size = new Point();

this.getWindowManager().getDefaultDisplay().getSize(size);

int width = size.x;

int height = size.y;

if (width < height) {

orientation = Configuration.ORIENTATION_PORTRAIT;

} else {

orientation = Configuration.ORIENTATION_LANDSCAPE;

}

}

return orientation;

}// end getScreenOrientation()

public Bitmap sharpeningKernel (Bitmap bitmap)

{

if (bitmap!=null)

{

int result = 0 ;

int pixelAtXY = 0;

for (int i=0 ; i<bitmap.getWidth(); i++)

{

for (int j=0 ; j<bitmap.getHeight(); j++)

{

//piecewise multiply with kernel

//add multiplication result to total / sum of kernel values

/* Conceptual kernel

* int kernel [][]=

{{0,-1,0},

{-1,5,-1},

{0,-1,0}};

*/

pixelAtXY = bitmap.getPixel(i, j);

result = ((pixelAtXY * -1) + (pixelAtXY * -1) + (pixelAtXY * 5)+ (pixelAtXY * -1) + (pixelAtXY * -1));

bitmap.setPixel(i, j,result);

}

}

}

return bitmap;

}//end

public byte [] bitmapToByteArray (Bitmap bitmap)

{

ByteArrayOutputStream baoStream = new ByteArrayOutputStream();

bitmap.compress(Bitmap.CompressFormat.JPEG, 100,

baoStream);

return baoStream.toByteArray();

}

public void takePicture()

{

Log.d("ClassScanViewBarcodeActivity","takePicture()");

try {

// if true take a picture

if (isThereACamera) {

Log.d("ClassScanViewBarcodeActivity",

"setOnClickListener() isThereACamera: "

+ isThereACamera);

// set picture format to JPEG, everytime makesure JPEg

globalCamera.getParameters().setPictureFormat(ImageFormat.JPEG);

if ( globalFocusedBefore = true)

{

// take pic , should call Callback

globalCamera.takePicture(null, null, jpegCallback);

//reset autofocus flag

globalFocusedBefore = false;

}

}

}// end try

catch (Exception exc) {

// in case of exception release resources & cleanup

if (globalCamera != null) {

globalCamera.stopPreview();

globalCamera.setPreviewCallback(null);

globalCamera.release();

globalCamera = null;

}

Log.d("ClassScanViewBarcodeActivity setOnClickListener() exceprtion:",

exc.getMessage());

exc.printStackTrace();

}// end catch

}// end takePicture()

}// end activity class

Below is my preview class, which displays the live image from the camera:

package com.t.t.barcode;

/* I removed imports due to character limit on Stackoverflow*/

/** A basic Camera preview class */

public class CameraPreview extends SurfaceView implements

SurfaceHolder.Callback {

private SurfaceHolder mHolder;

private Camera mCamera;

private Context context;

//

private int guidanceRectangleStartX;

private int guidanceRectangleStartY;

private int guidanceRectangleEndX;

private int guidanceRectangleEndY;

//

private ImageView imageView = null;

private Bitmap guidanceScannerFrame = null;

public CameraPreview(Context context, Camera camera) {

super(context);

mCamera = camera;

this.context = context;

// Install a SurfaceHolder.Callback so we get notified when the

// underlying surface is created and destroyed.

mHolder = getHolder();

mHolder.addCallback(this);

// deprecated setting, but required on Android versions prior to 3.0

mHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

setWillNotDraw(false);

setFocusable(true);

requestFocus();

guidanceScannerFrame = getBitmapFromResourcePath("res/drawable/scanner_frame.png");

}

public void drawGuidance(Canvas canvas)

{

Paint paintRed = new Paint();

paintRed.setStyle(Paint.Style.STROKE);

paintRed.setStrokeWidth(6f);

paintRed.setAntiAlias(true);

paintRed.setColor(Color.RED);

//drawMiddleline

canvas.drawLine(0,this.getHeight()/2, this.getWidth(),this.getHeight()/2, paintRed);

if (guidanceScannerFrame!=null)

{

Paint paint = new Paint();

paint.setFilterBitmap(true);

int diffInWidth =0;

int diffInHeight = 0;

try

{

diffInWidth = this.getWidth() - guidanceScannerFrame.getScaledWidth(canvas);

diffInHeight = this.getHeight() - guidanceScannerFrame.getScaledHeight(canvas);

//

canvas.drawBitmap(guidanceScannerFrame,(diffInWidth/2),(diffInHeight/2) , paint);

}

catch (NullPointerException exc)

{

exc.printStackTrace();

}

/*create rectangle that is relative to the SurfaceView coordinates and fits inside the Bitmap rectangle,

* this coordinates will later be used to crop inside of the Bitmap to take in the only the barcode's picture*/

Paint paintGray = new Paint();

paintGray.setStyle(Paint.Style.STROKE);

paintGray.setStrokeWidth(2f);

paintGray.setAntiAlias(true);

paintGray.setColor(Color.GRAY);

//drawRect

canvas.drawRect((diffInWidth/2), (diffInHeight/2), (diffInWidth/2)+guidanceScannerFrame.getScaledWidth(canvas),(diffInHeight/2)+guidanceScannerFrame.getScaledHeight(canvas), paintGray);

this.guidanceRectangleStartX = this.getWidth()/12;

this.guidanceRectangleStartY= this.getHeight()/3;

this.guidanceRectangleEndX = this.getWidth()-this.getWidth()/12;

this.guidanceRectangleEndY =(this.getHeight()/3)+( 2 *(this.getHeight()/3));

}

}

@Override

public void onDraw(Canvas canvas) {

//drawGuidance

drawGuidance(canvas);

}

public void surfaceCreated(SurfaceHolder holder) {

// The Surface has been created, now tell the camera where to draw the

// preview.

try {

Camera.Parameters p = mCamera.getParameters();

// get width & height of the SurfaceView

int SurfaceViewWidth = this.getWidth();

int SurfaceViewHeight = this.getHeight();

List<Size> sizes = p.getSupportedPreviewSizes();

Size optimalSize = getOptimalPreviewSize(sizes, SurfaceViewWidth, SurfaceViewHeight);

// set parameters

p.setPreviewSize(optimalSize.width, optimalSize.height);

p.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);

/*rotate the image by 90 degrees clockwise , in order to correctly displayed the image , images seem to be -90 degrees (counter clockwise) rotated

* I even tried setting it to p.setRotation(0); , but still no effect.

*/

mCamera.setDisplayOrientation(90);

mCamera.setParameters(p);

mCamera.setPreviewDisplay(holder);

mCamera.startPreview();

} catch (IOException e) {

e.printStackTrace();

}

}

public void surfaceDestroyed(SurfaceHolder holder) {

// empty. Take care of releasing the Camera preview in your activity.

}

@SuppressLint("InlinedApi")

public void surfaceChanged(SurfaceHolder holder, int format, int w, int h) {

// If your preview can change or rotate, take care of those events here.

// Make sure to stop the preview before resizing or reformatting it.

if (mHolder.getSurface() == null) {

// preview surface does not exist

return;

}

// stop preview before making changes

try {

mCamera.stopPreview();

} catch (Exception e) {

// ignore: tried to stop a non-existent preview

}

// start preview with new settings

try {

mCamera.setPreviewDisplay(mHolder);

mCamera.startPreview();

} catch (Exception e) {

Log.d("CameraPreview , surfaceCreated() , orientation: ",

String.valueOf(e.getMessage()));

}

}// end surfaceChanged()

static Size getOptimalPreviewSize(List <Camera.Size>sizes, int w, int h) {

final double ASPECT_TOLERANCE = 0.1;

final double MAX_DOWNSIZE = 1.5;

double targetRatio = (double) w / h;

if (sizes == null) return null;

Size optimalSize = null;

double minDiff = Double.MAX_VALUE;

int targetHeight = h;

// Try to find an size match aspect ratio and size

for (Camera.Size size : sizes) {

double ratio = (double) size.width / size.height;

double downsize = (double) size.width / w;

if (downsize > MAX_DOWNSIZE) {

//if the preview is a lot larger than our display surface ignore it

//reason - on some phones there is not enough heap available to show the larger preview sizes

continue;

}

if (Math.abs(ratio - targetRatio) > ASPECT_TOLERANCE) continue;

if (Math.abs(size.height - targetHeight) < minDiff) {

optimalSize = size;

minDiff = Math.abs(size.height - targetHeight);

}

}

// Cannot find the one match the aspect ratio, ignore the requirement

//keep the max_downsize requirement

if (optimalSize == null) {

minDiff = Double.MAX_VALUE;

for (Size size : sizes) {

double downsize = (double) size.width / w;

if (downsize > MAX_DOWNSIZE) {

continue;

}

if (Math.abs(size.height - targetHeight) < minDiff) {

optimalSize = size;

minDiff = Math.abs(size.height - targetHeight);

}

}

}

//everything else failed, just take the closest match

if (optimalSize == null) {

minDiff = Double.MAX_VALUE;

for (Size size : sizes) {

if (Math.abs(size.height - targetHeight) < minDiff) {

optimalSize = size;

minDiff = Math.abs(size.height - targetHeight);

}

}

}

return optimalSize;

}

public Bitmap getBitmapFromResourcePath (String path)

{

InputStream in =null;

Bitmap guidanceBitmap = null;

try

{

if (path!=null)

{

in = this.getClass().getClassLoader().getResourceAsStream(path);

guidanceBitmap = BitmapFactory.decodeStream(in);

}

}

catch (Exception exc)

{

exc.printStackTrace();

}

finally

{

if (in!=null)

{

try {

in.close();

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

return guidanceBitmap;

}

public int getGuidanceRectangleStartX() {

return guidanceRectangleStartX;

}

public int getGuidanceRectangleStartY() {

return guidanceRectangleStartY;

}

public int getGuidanceRectangleEndX() {

return guidanceRectangleEndX;

}

public int getGuidanceRectangleEndY() {

return guidanceRectangleEndY;

}

}//end preview class

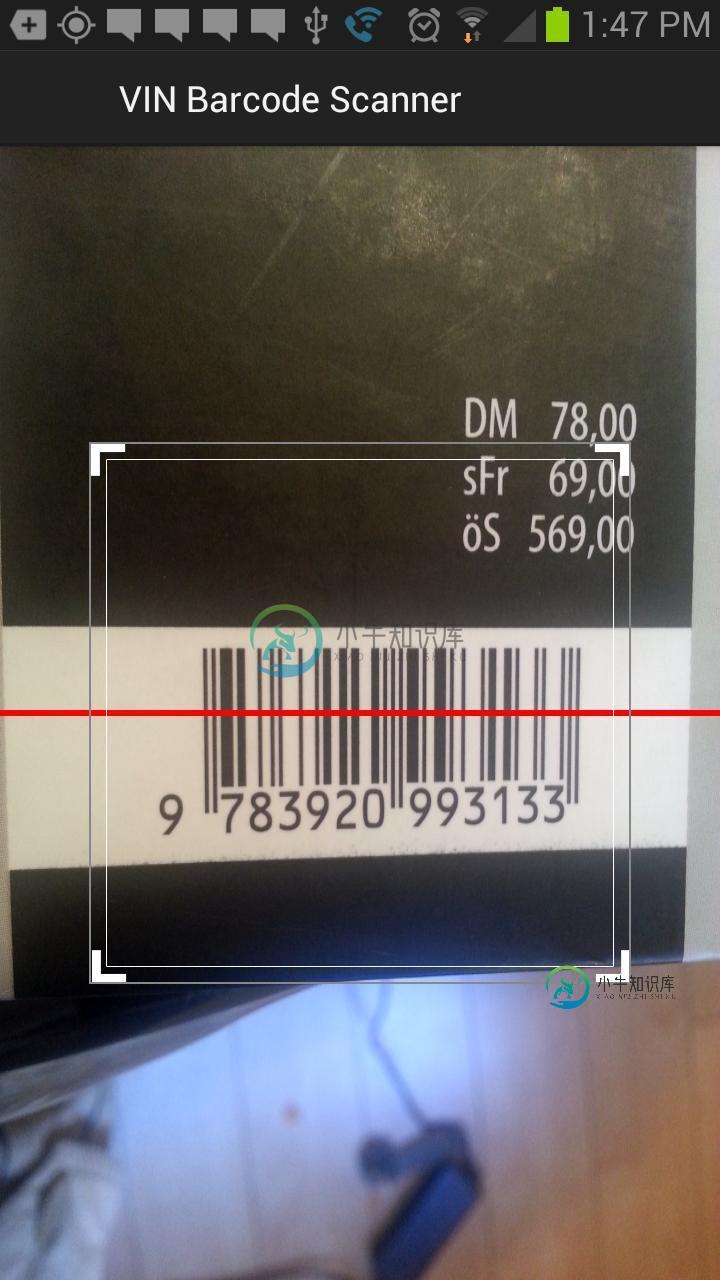

屏幕截图活动GUI:

任何帮助将不胜感激,谢谢。

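Answer:

You are calling autoFocus() from onCreate, before the preview has started. It should instead be called once the surface has been created or changed, because autofocus can only run after the camera preview has started. Take a look at the following code, which I have used and which works: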

private void tryAutoFocus() {

if( MyDebug.LOG )

Log.d(TAG, "tryAutoFocus");

if( camera == null ) {

if( MyDebug.LOG )

Log.d(TAG, "no camera");

}

else if( !this.has_surface ) {

if( MyDebug.LOG )

Log.d(TAG, "preview surface not yet available");

}

else if( !this.is_preview_started ) {

if( MyDebug.LOG )

Log.d(TAG, "preview not yet started");

}

else if( is_taking_photo ) {

if( MyDebug.LOG )

Log.d(TAG, "currently taking a photo");

}

else {

// if a focus area is set, we always call autofocus even if it isn't supported, so we get the focus box

// otherwise, it's only worth doing it when autofocus has an effect (i.e., auto or macro mode)

Camera.Parameters parameters = camera.getParameters();

String focus_mode = parameters.getFocusMode();

if( has_focus_area || focus_mode.equals(Camera.Parameters.FOCUS_MODE_AUTO) || focus_mode.equals(Camera.Parameters.FOCUS_MODE_MACRO) ) {

if( MyDebug.LOG )

Log.d(TAG, "try to start autofocus");

Camera.AutoFocusCallback autoFocusCallback = new Camera.AutoFocusCallback() {

@Override

public void onAutoFocus(boolean success, Camera camera) {

if( MyDebug.LOG )

Log.d(TAG, "autofocus complete: " + success);

focus_success = success ? FOCUS_SUCCESS : FOCUS_FAILED;

focus_complete_time = System.currentTimeMillis();

if(_automationStarted){

takeSnapPhoto();

}

}

};

this.focus_success = FOCUS_WAITING;

this.focus_complete_time = -1;

camera.autoFocus(autoFocusCallback);

}

}

}

public void surfaceCreated(SurfaceHolder holder) {

if( MyDebug.LOG )

Log.d(TAG, "surfaceCreated()");

// The Surface has been created, acquire the camera and tell it where to draw.

this.has_surface = true;

this.openCamera(false); // if setting up for the first time, we wait until the surfaceChanged call to start the preview

this.setWillNotDraw(false); // see http://stackoverflow.com/questions/2687015/extended-surfaceviews-ondraw-method-never-called

}

public void surfaceDestroyed(SurfaceHolder holder) {

if( MyDebug.LOG )

Log.d(TAG, "surfaceDestroyed()");

// Surface will be destroyed when we return, so stop the preview.

// Because the CameraDevice object is not a shared resource, it's very

// important to release it when the activity is paused.

this.has_surface = false;

this.closeCamera();

}

public void takeSnapPhoto() {

camera.setOneShotPreviewCallback(new Camera.PreviewCallback() {

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

Camera.Parameters parameters = camera.getParameters();

int format = parameters.getPreviewFormat();

//YUV formats require more conversion

if (format == ImageFormat.NV21 || format == ImageFormat.YUY2 || format == ImageFormat.NV16) {

int w = parameters.getPreviewSize().width;

int h = parameters.getPreviewSize().height;

// Get the YuV image

YuvImage yuv_image = new YuvImage(data, format, w, h, null);

// Convert YuV to Jpeg

Rect rect = new Rect(0, 0, w, h);

ByteArrayOutputStream output_stream = new ByteArrayOutputStream();

yuv_image.compressToJpeg(rect, 100, output_stream);

byte[] byt = output_stream.toByteArray();

FileOutputStream outStream = null;

try {

// Write to SD Card

File file = createFileInSDCard(FOLDER_PATH, "Image_"+System.currentTimeMillis()+".jpg");

//Uri uriSavedImage = Uri.fromFile(file);

outStream = new FileOutputStream(file);

outStream.write(byt);

outStream.close();

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} finally {

}

}

}

});

}

When initializing the camera (or, in this case, opening it):

private void openCamera(boolean start_preview) {

tryAutoFocus(); // call this after setting camera parameters

}
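The preconditions that tryAutoFocus() checks above can be isolated into a small state holder. The following is only a minimal sketch in plain Java with no Android dependencies: the class name AutoFocusGate and all of its methods are hypothetical, and it leaves out the has_focus_area case. It assumes the focus mode is passed in as the string returned by Camera.Parameters.getFocusMode() ("auto" and "macro" are the values of FOCUS_MODE_AUTO and FOCUS_MODE_MACRO). It also adds a single-capture flag, on the assumption that overlapping captures should be rejected rather than started.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Hypothetical helper (not part of the Android SDK). It mirrors the checks
 * made in tryAutoFocus(): a surface exists, the preview is running, and no
 * photo is currently being taken.
 */
class AutoFocusGate {
    // String values of Camera.Parameters.FOCUS_MODE_AUTO and FOCUS_MODE_MACRO.
    static final String FOCUS_MODE_AUTO = "auto";
    static final String FOCUS_MODE_MACRO = "macro";

    private boolean hasSurface;
    private boolean previewStarted;
    private final AtomicBoolean takingPhoto = new AtomicBoolean(false);

    // Call from surfaceCreated() (true) and surfaceDestroyed() (false).
    void setHasSurface(boolean value) {
        hasSurface = value;
        if (!value) {
            previewStarted = false; // without a surface there is no preview
        }
    }

    // Call right after startPreview() (true) or stopPreview() (false).
    void setPreviewStarted(boolean value) {
        previewStarted = value;
    }

    // True only when a call to camera.autoFocus(callback) is worth making.
    boolean canAutoFocus(String focusMode) {
        if (!hasSurface || !previewStarted || takingPhoto.get()) {
            return false;
        }
        return FOCUS_MODE_AUTO.equals(focusMode) || FOCUS_MODE_MACRO.equals(focusMode);
    }

    // Claims the single capture slot; returns false if a capture is already running.
    boolean tryBeginCapture() {
        return hasSurface && previewStarted && takingPhoto.compareAndSet(false, true);
    }

    // Call from onPictureTaken() once the preview has been restarted.
    void endCapture() {
        takingPhoto.set(false);
    }
}
```

In an activity like the one in the question, such a gate would be consulted before camera.autoFocus(...) and before camera.takePicture(...), and updated from the SurfaceHolder callbacks. Rejecting a second capture while one is in flight may also help when takePicture() is triggered from onTouchEvent(), which can fire several times for a single touch.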
