Database modeling for international and multilingual purposes

I need to create a large scale DB Model for a web application that will be

multilingual.

One doubt that I’ve every time I think on how to do it is how I can resolve

having multiple translations for a field. A case example.

The table for language levels, that administrators can edit from the backend,

can have multiple items like: basic, advance, fluent, mattern… In the near

future probably it will be one more type. The admin goes to the backend and

add a new level, it will sort it in the right position.. but how I handle all

the translations for the final users?

Another problem with internationalization of a database is that probably for

user studies can differ from USA to UK to DE… in every country they will

have their levels (that probably it will be equivalent to another but finally,

different). And what about billing?

How you model this in a big scale?

问题答案:

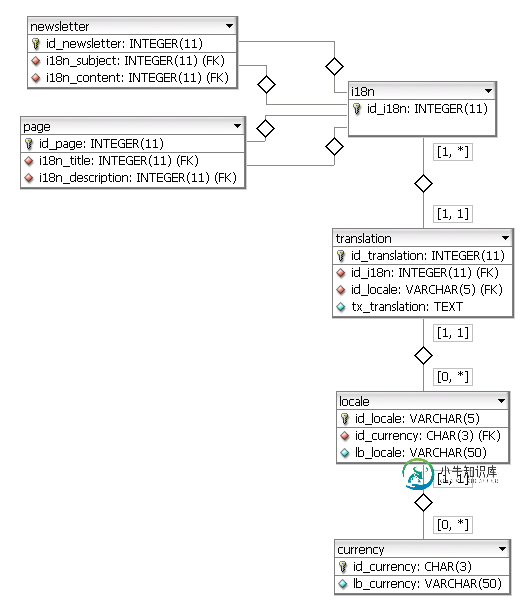

Here is the way I would design the database:

Visualization byDB Designer

Fork

The i18n table only contains a PK, so that any table just has to reference

this PK to internationalize a field. The table translation is then in charge

of linking this generic ID with the correct list of translations.

locale.id_locale is a VARCHAR(5) to manage both of en and en_US ISO

syntaxes.

currency.id_currency is a CHAR(3) to manage the ISO 4217

syntax.

You can find two examples: page and newsletter. Both of these admin-

managed entites need to internationalize their fields, respectively

title/description and subject/content.

Here is an example query:

select

t_subject.tx_translation as subject,

t_content.tx_translation as content

from newsletter n

-- join for subject

inner join translation t_subject

on t_subject.id_i18n = n.i18n_subject

-- join for content

inner join translation t_content

on t_content.id_i18n = n.i18n_content

inner join locale l

-- condition for subject

on l.id_locale = t_subject.id_locale

-- condition for content

and l.id_locale = t_content.id_locale

-- locale condition

where l.id_locale = 'en_GB'

-- other conditions

and n.id_newsletter = 1

Note that this is a normalized data model. If you have a huge dataset, maybe

you could think about denormalizing

it to optimize your queries.

You can also play with indexes to improve the queries performance (in some DB,

foreign keys are automatically indexed, e.g.

MySQL/InnoDB).